AI hardware has become synonymous with Nvidia, and one wouldn’t be wrong to think that other chipmaking heavyweights such as Intel, Qualcomm and Advanced Micro Devices (AMD) have all but ceded ground to the world’s leading GPU manufacturer, which now dominates data centres. But the fight for AI hardware dominance is not over. As much as Nvidia wants to be crowned the king of AI, it still has a lot of ground to cover, especially with rivals Intel and AMD intensifying the battle. Before we dive into the present, let’s look at how Nvidia foresaw the rise of AI and improved its GPU performance for data centres.

Did Google Help Nvidia Take a Lead in the AI Wave?

It’s widely known that Nvidia turned AI-savvy at a time when other hardware players like Intel, Qualcomm and AMD failed to see the bigger picture. But what led Nvidia to enter the AI hardware race five years ago and build its own line of GPUs for AI workloads? According to Norwegian tech expert and Østfold University lecturer Salvador Baille, Nvidia got a major push from Google when the search giant made AI its new business strategy and launched the Google Brain project in 2011.

This sparked a chain of events, starting with a question: which hardware should the AI algorithms run on? Because AI algorithms require massive computational power, Nvidia’s partnership with AI-focused Google pushed it to improve the efficiency of its GPU architecture quickly enough to run them. Since 2014, Nvidia has improved the efficiency of its GPU chips about 10x, helping it stay competitive in a market rife with new contenders who want to ride the second “AI chip wave”.

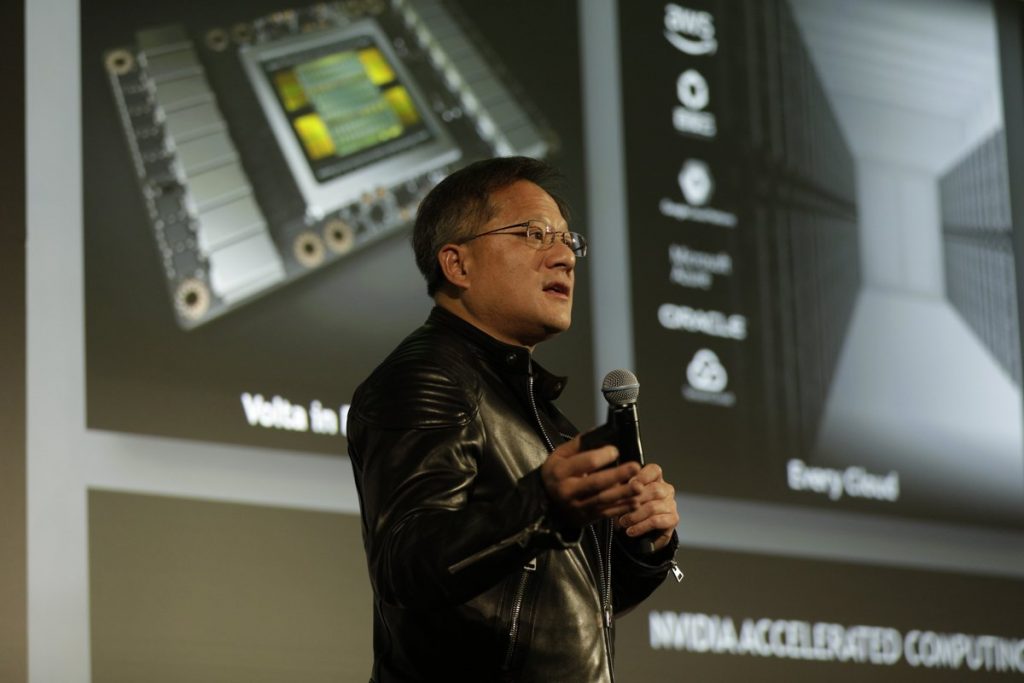

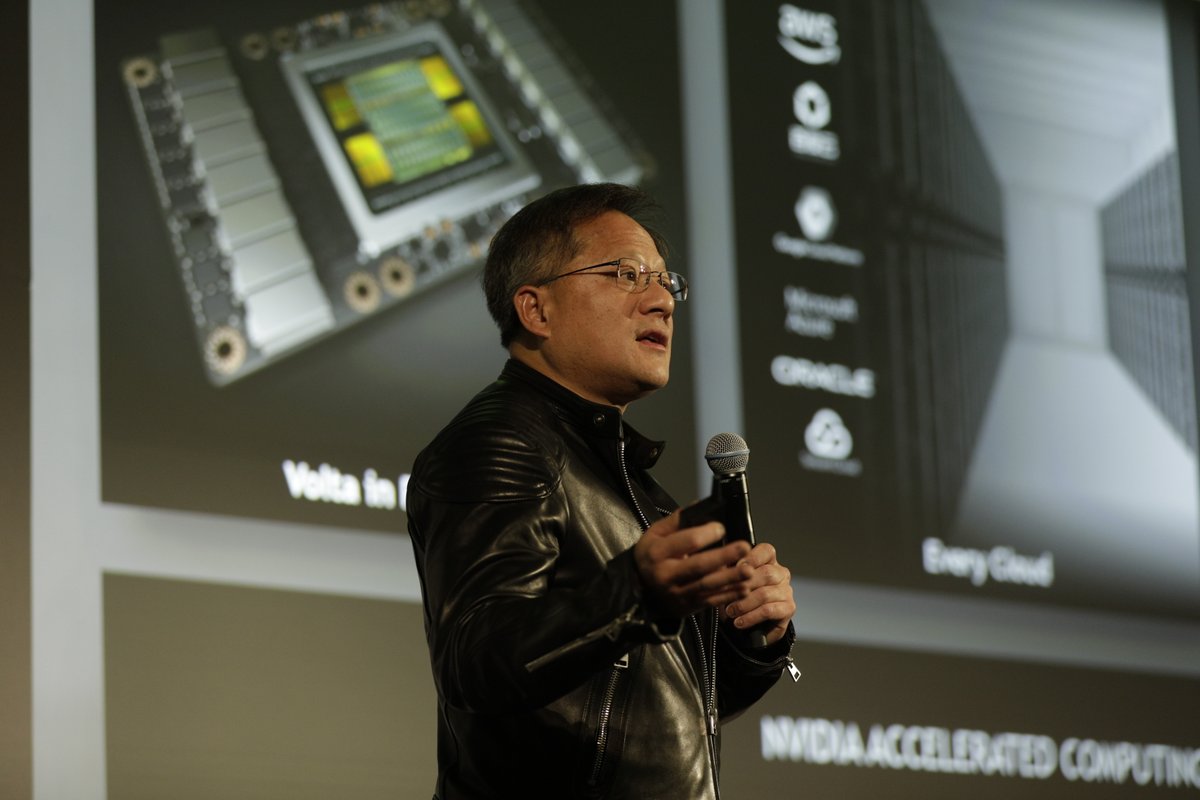

Such is its dominance that Nvidia remains unruffled by partner company Google marketing its Tensor Processing Unit (TPU) as custom-built for AI workloads. Nvidia’s CEO Jen-Hsun Huang made a flamboyant statement to investors in August, saying that “a GPU is basically a TPU that does a lot more”. Here’s the catch: Nvidia’s GPUs were originally built for image and video processing, unlike competing chips that are custom-built for AI workloads only. This means that Facebook and Google also use Nvidia’s GPUs in data centres for image and video processing workloads.

Rival Intel Steps Up In a Bid To Catch Up With Nvidia

Santa Clara-headquartered Intel, led by CEO Brian Krzanich, wants to be the driving force in the IT industry. Krzanich is also betting big on AI, which is set to become a bigger part of the company’s data centre business. More importantly, Intel announced a new line of Neural Network Processors that will ship by the end of this year. Designed from the ground up for AI, the Intel Nervana NNP is a purpose-built architecture for deep learning, said Naveen Rao, Intel’s VP & General Manager of Artificial Intelligence.

According to Rao, the goal of the new architecture is to provide the flexibility to support all deep learning primitives while making core hardware components as efficient as possible. He further emphasized that Intel has collaborated with Facebook to bring the next-generation hardware to market. Rao is also confident that the company’s product roadmap puts it on track to achieve a 100x increase in deep learning training performance by 2020.

Rao’s startup Nervana Systems was snapped up by Intel last year to propel the company’s AI-specific hardware development strategy, and it seems the big-ticket acquisition has paid off.

The chipmaker is also going from strength to strength: the company reportedly earned $8.9 billion from its data centre division (a resounding seven per cent increase).

Another big announcement came last week, when Intel wooed Raja Koduri away from AMD to lead its Core and Visual Computing Group. The former Apple and AMD exec is a known graphics chip expert and will lead the development of graphics chips for data centres and for devices that could include security cameras and sensors.

Chip Giants AMD & Qualcomm Gain Ground Against Nvidia

In a first-of-its-kind partnership, AMD and Intel have teamed up to produce a laptop chip that combines an Intel processor with an AMD graphics unit. Designed for serious gamers, the new chip aims to blunt Nvidia’s heft in the gaming market. It is made for laptops that are thin and portable, yet powerful enough for gamers on the lookout for a better way to play. Interestingly, AMD’s Ryzen chips are also designed for ultrathin laptops.

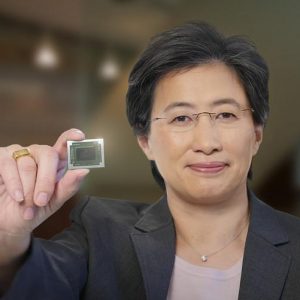

So how did AMD miss the AI hardware bus? According to Baille, AMD stuck to the stagnating PC industry rather than courting AI customers. Worse, it did so at a time when the PC industry itself was staving off competition from the tablet market. A lack of operational strategy, coupled with a diminished R&D effort, resulted in AMD capturing only 30 per cent of the GPU market. And while the AMD and Intel partnership has been hailed by the industry, Koduri’s departure has hit AMD hard, with its stock plummeting. Koduri headed AMD’s Radeon Technologies Group and was instrumental in launching the Vega GPU, which significantly challenged Nvidia’s market share. Under the leadership of CEO Lisa Su, AMD is committed to coming out of the shadows of Intel and Nvidia. Su has been hard at work weaning AMD off the stagnating PC market and focusing on providing chips to the three leading video game console makers: Microsoft, Sony, and Nintendo.

The AI chip boom has rattled traditional chipmaker Qualcomm, which is now pushing in a similar direction. For years, Qualcomm has dominated the smartphone market, and its processors account for 40 per cent of the mobile market. With the next AI battle set to be played out on smartphones, Qualcomm launched the Neural Processing Engine, an SDK intended to help developers optimize their apps to run AI applications on Qualcomm’s Snapdragon 600 and 800 series processors. Reportedly, the first company to integrate Qualcomm’s SDK is Facebook, which is using it to speed up the augmented reality filters in its mobile app. Today, Facebook’s filters load five times faster compared to a “generic CPU implementation.”

Outlook

According to OpenAI co-chairman Sam Altman, the next wave of AI will focus on building better hardware to build better AI algorithms. With fierce demand for the blazingly fast computing power that deep learning requires, from training algorithms to executing them, chip giants are stepping up their R&D efforts to serve a much larger market. The next level of growth will come from AI hardware dominance. From acquisitions to R&D efforts, chipmakers have kicked off a heated battle to blunt Nvidia’s hegemony in the AI chip market, because in the new world order of AI, hardware will play a key role in fueling growth.