Is it time to standardise big data technologies? Data that was once siloed, inaccessible and mostly underutilised has become crucial to enterprise success, and experts say there is still room to promote interoperability between the available tools. But how does one define ‘standardisation’? Northeastern University’s College of Computer and Information Science defines a “standard” as a formal agreement on the meaning of a collection of concepts among groups (in this case, tech companies and enterprises).

According to a report by ISO (the International Organization for Standardization, a standards development organisation comparable to IEEE), the big data paradigm consists of distributing data systems across horizontally coupled, independent resources to achieve the scalability needed to process extensive data sets.

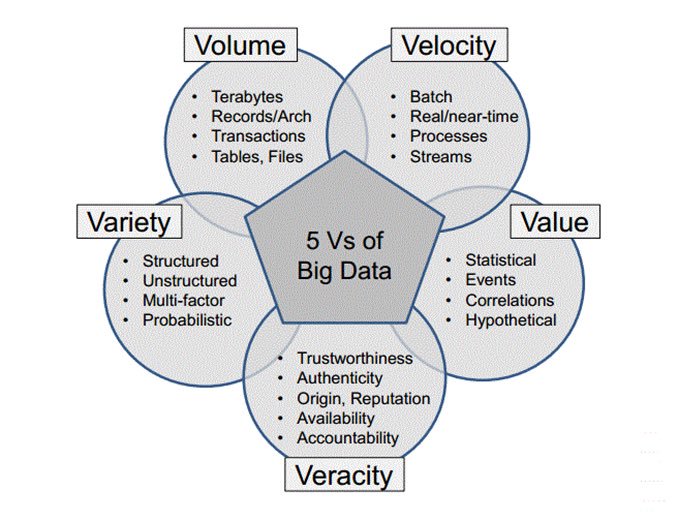

One of the first documents on big data standardisation came from the International Telecommunication Union (ITU), which defined big data as “a paradigm for collection, storage, management, analysis and visualisation of extensive datasets with heterogeneous characteristics, that include high-volume, high-velocity and high-variety.” ISO defined big data engineering as storage and data manipulation technologies that leverage a collection of horizontally coupled resources to achieve nearly linear scalability in performance.
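To make “horizontally coupled resources” a little more concrete, here is a minimal sketch, assuming a simple hash-partitioning scheme in which each “node” is simulated as an in-process list. The function names (`partition`, `local_count`, `distributed_count`) are hypothetical and not taken from any standard or product. Each node works only on its own shard, and the partial results are merged at the end; because the per-node work is independent, adding nodes can, in principle, spread the load roughly linearly.

```python
from collections import Counter
from typing import Iterable, List
import zlib


def partition(records: Iterable[str], num_nodes: int) -> List[List[str]]:
    """Assign each record to a simulated node by hashing it (hypothetical scheme)."""
    nodes: List[List[str]] = [[] for _ in range(num_nodes)]
    for record in records:
        # crc32 is stable across runs, unlike Python's salted built-in hash()
        nodes[zlib.crc32(record.encode("utf-8")) % num_nodes].append(record)
    return nodes


def local_count(records: List[str]) -> Counter:
    """Work done independently on one node: count the words in its own shard."""
    counts: Counter = Counter()
    for record in records:
        counts.update(record.split())
    return counts


def distributed_count(records: Iterable[str], num_nodes: int) -> Counter:
    """Partition the data, count on each node, then merge the partial counts."""
    total: Counter = Counter()
    for shard in partition(records, num_nodes):
        total.update(local_count(shard))
    return total


if __name__ == "__main__":
    data = ["big data standards", "data interoperability", "big data tools"]
    print(distributed_count(data, num_nodes=3))
```

The merged result is the same as counting everything on a single machine; real distributed systems add concerns this sketch ignores, such as network transfer, fault tolerance and skewed partitions.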

Standardisation Can Increase Interoperability Between Tools

According to a recent IDC study, we are fast approaching the data age, with the global datasphere forecast to grow to 163 zettabytes (ZB), or 163 trillion gigabytes, by 2025. That is ten times the 16.1 ZB of data generated in 2016. IDC also estimates that nearly 20% of the world’s data will be critical to our daily lives by 2025. As data and data-related tools proliferate, how can business leaders determine which data matters most?

In the last decade, there has been a surge in the number of big data and data analytics tools developed by different organisations, but, as Jim Melton, editor of ISO/IEC 9075 (SQL), pointed out to ISO, these tools lack interoperability. According to Dr Klaus-Peter Eckert, an expert on interoperability in distributed systems, all the technical building blocks are present; the key missing piece is interoperability. As Eckert told ISO, “There is a distinct lack of an architecture which can combine the many components of big data solutions. This is where standards will come into play in the game.” It is widely believed that developing standards will pave the way for interoperability.

According to an O’Reilly report, big data standards will play a key role in these areas: storage engine interfaces, querying, benchmarking, metadata management and integration with technologies such as AI. While data retention has improved considerably, challenges remain in processing, querying and analysing data.

Big Data Interoperability And Portability Challenges

Increased demand for real-time analytics calls for faster data integration: Over the last few years, demand has grown for faster analytics on streaming data and for self-service business intelligence applications.

Lack of interfacing in systems: When systems lack common interfaces, two or more of them cannot communicate because they use different communication protocols and data formats. Platform-dependent technologies work only with certain proprietary hardware or software components and cannot be interfaced with open source software or with technologies from other vendors.

Lack of semantic interoperability: Semantic interoperability, the ability to combine data from heterogeneous sources while preserving its meaning, is a key issue for both private and public enterprises. When processing large amounts of data, the most widely used big data technology stacks, which are based on Hadoop, focus on using metadata during processing, which is more efficient than moving the data itself around. However, this makes integration with other information systems more complex, so traditional big data implementations often end up creating new data silos.
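As a toy illustration of the semantic problem, consider two hypothetical systems that describe the same customer revenue with different field names, types and units. The sketch below, assuming a hand-written mapping to a shared vocabulary (the field names, `CRM_MAPPING`, `BILLING_MAPPING` and the helper functions are all invented for this example), shows why the data cannot simply be joined as-is: one source stores the ID as an integer and revenue in dollars, the other stores the ID as a string and revenue in cents. Real-world approaches typically rely on shared ontologies or metadata standards rather than ad hoc dictionaries.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical shared vocabulary: target field -> (source field, converter)
Mapping = Dict[str, Tuple[str, Callable[[object], object]]]

CRM_MAPPING: Mapping = {
    "customer_id": ("cust_id", str),                 # CRM stores IDs as integers
    "revenue_usd": ("revenue", float),               # CRM stores dollars
}

BILLING_MAPPING: Mapping = {
    "customer_id": ("customerId", str),              # billing stores IDs as strings
    "revenue_usd": ("revenue_cents", lambda c: int(c) / 100),  # billing stores cents
}


def to_common(record: dict, mapping: Mapping) -> dict:
    """Translate one source record into the shared field names and units."""
    return {target: convert(record[source]) for target, (source, convert) in mapping.items()}


def merge_revenue(crm: List[dict], billing: List[dict]) -> Dict[str, float]:
    """Sum revenue per customer once both sources share one meaning per field."""
    common = [to_common(r, CRM_MAPPING) for r in crm]
    common += [to_common(r, BILLING_MAPPING) for r in billing]
    totals: Dict[str, float] = {}
    for rec in common:
        key = rec["customer_id"]
        totals[key] = totals.get(key, 0.0) + rec["revenue_usd"]
    return totals


if __name__ == "__main__":
    crm = [{"cust_id": 42, "revenue": "10.50"}]
    billing = [{"customerId": "42", "revenue_cents": 250}]
    print(merge_revenue(crm, billing))
```

Without the mapping, the integer `42` and the string `"42"` would never match, and dollars would be added to cents; agreeing on such meanings across systems is the kind of problem standards aim to address.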

Understanding the NIST Big Data Interoperability Framework

NIST (the US National Institute of Standards and Technology), which coordinates standards activities with industry under the National Technology Transfer and Advancement Act, is working with industry to define standards and has released the NIST Big Data Interoperability Framework (NBDIF), a series of volumes. NIST has worked to identify the technical challenges present at many levels and is defining open standards and reference frameworks that can improve data management, indexing, querying, knowledge discovery and visualisation.

Outlook

In terms of benefits, big data standardisation will help enterprises adopt state-of-the-art practices and integrate appropriate tools and technologies. From a system developer’s perspective, standards will help end users identify the right solution, identify gaps and improve performance. The development of reference frameworks will also help promote research and development.