After seeing the growth of the computing market, hardware manufacturers are keen to jump onto the next trend: AI compute. Existing market leaders such as Intel, NVIDIA, AMD, and others are competing for a share of the AI pie in datacenter computing.

Even as existing hardware such as GPUs and CPUs is being optimised for training workloads, new solutions are emerging that promise an order-of-magnitude improvement over what is available today.

Methods To Improve Compute Effectiveness

Training and running large AI models takes a lot of time, which creates a strong need for hardware acceleration. Hardware acceleration is the use of dedicated hardware, today usually a GPU, to speed up the computations in an AI workflow.

Hardware acceleration has many benefits, the primary one being speed. Accelerators can greatly reduce the time it takes to train and run an AI model, and can also execute specialised AI tasks that would be impractical on a CPU alone. This use-case is seen in Apple’s A-series Bionic chips, which feature an integrated neural-network accelerator.

Accelerators include several optimisations that suit them to this use-case. Some include:

Parallelism: Training and executing AI models is a massively parallel workload, since most of the work is matrix arithmetic in which each output can be computed independently of the others. This makes a high degree of parallelism by far one of the biggest requirements for an accelerator, and is why GPUs are currently well-suited to accelerator tasks.
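Each output of a dense (fully connected) layer is an independent dot product, which is why this work splits so cleanly across many processing units. The minimal sketch below illustrates that decomposition; the function names are hypothetical, and the thread pool only shows the structure of the work (CPython threads will not actually speed up this pure-Python arithmetic).

```python
from concurrent.futures import ThreadPoolExecutor


def dot(row, vector):
    """One output neuron: a dot product that depends on no other output."""
    return sum(w * x for w, x in zip(row, vector))


def dense_layer_parallel(weights, inputs, workers=4):
    """Compute every output of weights @ inputs as an independent task.

    No output depends on another, so the rows can be handed to any number
    of workers in any order; an accelerator applies the same idea across
    thousands of hardware units. pool.map returns results in input order.
    """
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda row: dot(row, inputs), weights))


weights = [[1, 2, 3], [4, 5, 6], [7, 8, 9], [1, 0, -1]]
inputs = [1, 1, 2]
print(dense_layer_parallel(weights, inputs))  # [9, 21, 33, -1]
```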

Low-Precision Arithmetic: Training involves large numbers of floating-point calculations. Integer arithmetic is already used for efficiency today, particularly for inference, and reducing the precision, or ‘detail’, of floating-point operations (for example, from 32-bit to 16-bit values) provides an easy way to increase the throughput and efficiency of the hardware.

Facebook has created and open-sourced a general-purpose floating-point format that requires hardware-level implementation but offers benefits over existing formats such as bfloat16 and even int8/32.
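To make the int8 idea concrete, here is a minimal sketch of symmetric per-tensor quantization, one common scheme among several: floats are mapped to integers in [-127, 127] with a shared scale, the dot product runs entirely in integer arithmetic, and the result is rescaled once at the end. The function names and sample values are illustrative and do not come from any particular framework.

```python
def quantize_int8(values):
    """Symmetric quantization: map floats onto integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q, scale):
    """Recover approximate floats from the 8-bit integers."""
    return [x * scale for x in q]


def int8_dot(qa, scale_a, qb, scale_b):
    """Dot product in integer arithmetic, rescaled once at the end.

    Hardware can run the inner loop on cheap 8-bit multipliers with a
    wide (e.g. 32-bit) integer accumulator.
    """
    acc = sum(x * y for x, y in zip(qa, qb))  # integer-only accumulation
    return acc * scale_a * scale_b


weights = [0.5, -1.27, 0.03, 0.9]
activations = [1.0, 0.2, -0.4, 0.6]
qw, sw = quantize_int8(weights)
qx, sx = quantize_int8(activations)
exact = sum(w * x for w, x in zip(weights, activations))
approx = int8_dot(qw, sw, qx, sx)
print(qw)             # [50, -127, 3, 90]
print(exact, approx)  # close, but not identical
```

The small gap between `exact` and `approx` is the accuracy cost being traded for cheaper arithmetic.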

Advanced Low-Level Architecture: AI accelerators need to break away from the traditional (Von Neumann) low-level architecture of today's systems. Whereas existing architectures are optimised for general-purpose compute, a new architecture can provide low-level optimisations for deep-learning applications.

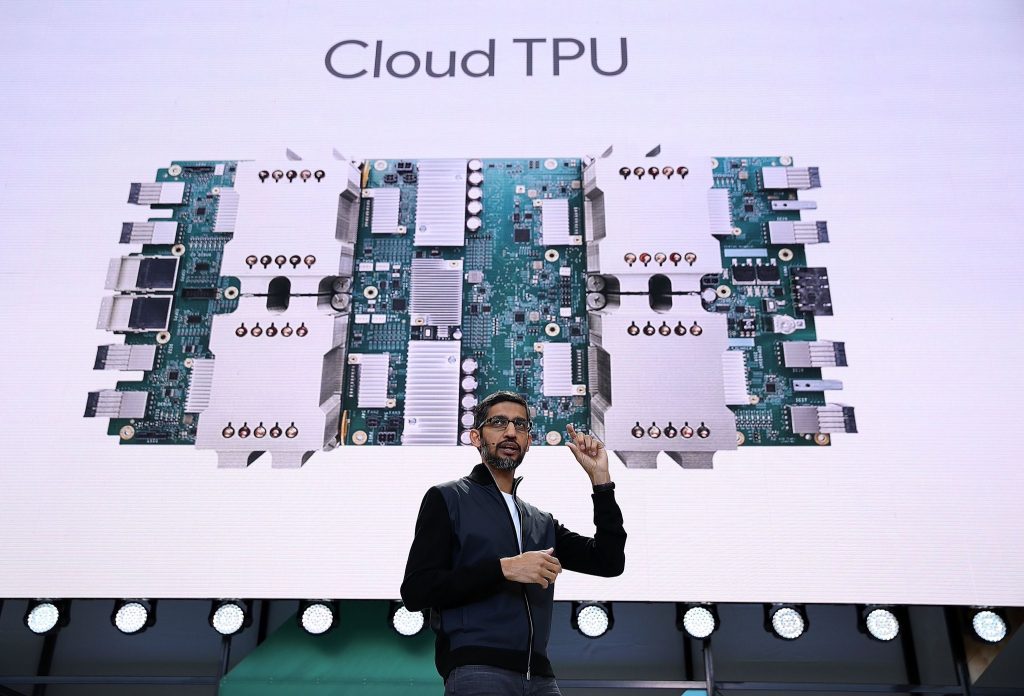

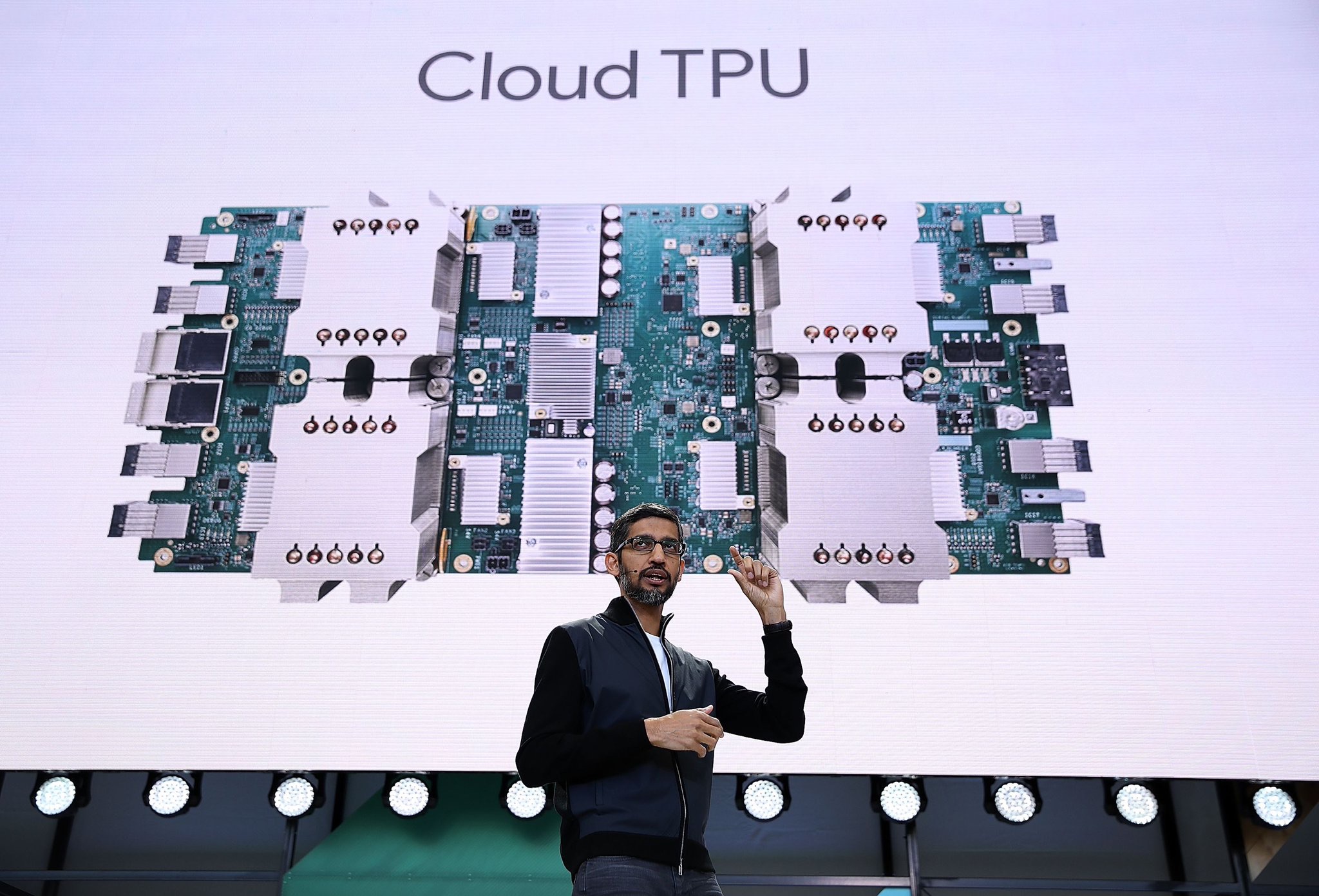

This has already been seen in Google’s Tensor Processing Unit, whose architecture was overhauled to bring memory and compute units closer together, creating an optimised package for running TensorFlow workloads.
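Google has described the TPU's matrix unit as a systolic array: a grid of multiply-accumulate cells that pass operands directly to their neighbours instead of writing them back to memory after each step. The cycle-by-cycle simulation below is a simplified, output-stationary sketch of that idea, written for illustration only; the real TPU's dataflow, dimensions, and number formats differ, and `systolic_matmul` is a hypothetical name.

```python
def systolic_matmul(A, B):
    """Multiply A (n x k) by B (k x m) on a simulated n x m systolic grid.

    Cell (i, j) keeps a running sum for C[i][j]. Values of A flow left to
    right and values of B flow top to bottom, one cell per clock cycle, so
    each operand is read from memory once, at the edge of the grid, and is
    then reused by every cell it passes through.
    """
    n, k, m = len(A), len(B), len(B[0])
    assert all(len(row) == k for row in A), "inner dimensions must match"
    acc = [[0] * m for _ in range(n)]
    a_reg = [[None] * m for _ in range(n)]  # A value each cell passes right
    b_reg = [[None] * m for _ in range(n)]  # B value each cell passes down
    cycles = n + m + k - 2  # time for the last operand pair to meet
    for t in range(cycles):
        new_a = [[None] * m for _ in range(n)]
        new_b = [[None] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                # Edge cells read skewed inputs: row i of A starts i cycles
                # late and column j of B starts j cycles late, so A[i][s]
                # and B[s][j] meet in cell (i, j) at cycle t = s + i + j.
                if j == 0:
                    s = t - i
                    a_in = A[i][s] if 0 <= s < k else None
                else:
                    a_in = a_reg[i][j - 1]
                if i == 0:
                    s = t - j
                    b_in = B[s][j] if 0 <= s < k else None
                else:
                    b_in = b_reg[i - 1][j]
                if a_in is not None and b_in is not None:
                    acc[i][j] += a_in * b_in  # one multiply-accumulate
                new_a[i][j] = a_in
                new_b[i][j] = b_in
        a_reg, b_reg = new_a, new_b  # every cell updates on the same clock edge
    return acc, cycles


A = [[1, 2, 3], [4, 5, 6]]
B = [[7, 8], [9, 10], [11, 12]]
C, cycles = systolic_matmul(A, B)
print(C, cycles)  # [[58, 64], [139, 154]] 5
```

Only the cells on the top and left edges ever touch the input matrices; everything else is neighbour-to-neighbour traffic, which is the sense in which memory and compute are brought closer together.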

Allowances For In-Memory Processing: The accelerator also needs to support in-memory processing, which speeds up training by keeping the dataset close to the compute units. This requires a high-speed interconnect between the processing unit and memory (currently HBM2), along with a larger pool of memory to fit big datasets.
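One way to see why the memory interconnect matters is the roofline model: a kernel's attainable throughput is capped either by peak compute or by memory bandwidth multiplied by its arithmetic intensity (operations performed per byte moved). The hardware figures below are hypothetical round numbers chosen for illustration, not the specifications of any real accelerator.

```python
def attainable_flops(peak_flops, bandwidth_bytes_per_s, flops_per_byte):
    """Roofline model: throughput is limited by compute or by memory traffic."""
    return min(peak_flops, bandwidth_bytes_per_s * flops_per_byte)


PEAK = 100e12     # hypothetical: 100 TFLOP/s of compute
BANDWIDTH = 1e12  # hypothetical: 1 TB/s of memory bandwidth

# Element-wise add on float32: 1 FLOP per 12 bytes (two 4-byte reads, one write).
elementwise = attainable_flops(PEAK, BANDWIDTH, 1 / 12)

# Square float32 matmul, n = 4096: 2n^3 FLOPs over 3 * n^2 * 4 bytes,
# assuming each matrix crosses the memory interface exactly once.
n = 4096
matmul_intensity = (2 * n**3) / (3 * n * n * 4)
matmul = attainable_flops(PEAK, BANDWIDTH, matmul_intensity)

print(f"element-wise: {elementwise / 1e12:.2f} TFLOP/s (memory-bound)")
print(f"matmul:       {matmul / 1e12:.2f} TFLOP/s (compute-bound)")
```

Low-intensity operations are starved by memory long before the compute units are busy, so a faster path to memory raises their ceiling directly.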

AI Compute Today

Today, the graphics processing unit (GPU) is the de facto way to train AI models. NVIDIA has also released GPUs with specialised cores known as Tensor Cores, which speed up the matrix operations at the heart of training. GPUs also feature thousands of cores, creating room for parallelism and for splitting up tasks.
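NVIDIA describes Tensor Cores as performing matrix multiply-accumulate with FP16 inputs and FP16 or FP32 accumulation. The sketch below emulates only the numerics of that mixed-precision pattern, using Python's `struct` module to round values to half and single precision. It shows why accumulating in FP16 alone is risky and says nothing about how the hardware is built; the helper names are illustrative.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


def dot_fp16_accumulate(a, b):
    """FP16 inputs and an FP16 running sum."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp16(acc + to_fp16(x) * to_fp16(y))
    return acc


def dot_mixed_precision(a, b):
    """FP16 inputs with an FP32 running sum (the mixed-precision pattern)."""
    acc = 0.0
    for x, y in zip(a, b):
        acc = to_fp32(acc + to_fp16(x) * to_fp16(y))
    return acc


ones = [1.0] * 4096
print(dot_fp16_accumulate(ones, ones))  # 2048.0: 2049 rounds back to 2048 in FP16
print(dot_mixed_precision(ones, ones))  # 4096.0
```

Above 2048, FP16 values are spaced 2 apart, so adding 1 rounds back to the same number and the sum stalls; the wider FP32 accumulator avoids this while the inputs stay cheap.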

Field Programmable Gate Arrays (FPGAs) also provide options for inference and training in embedded devices. FPGAs are mainly made by two players: Intel, after its acquisition of Altera, and Xilinx. They are unique for AI compute, as they can be reprogrammed at the hardware level to optimise for specific workloads.

FPGAs are well-suited to running inference for many different kinds of AI, as they can be reconfigured on the fly and provide dependable performance in a low-power form factor. However, they are not cost-effective, and the expertise required to program them is expensive as well. One class of chip promises to solve these problems.

The Rise Of The ASIC

Application-specific integrated circuits (ASICs) are set to be the next big step in delivering AI compute. Google, Facebook, and Amazon have all expressed interest in creating their own ASICs, and Google has already released the Tensor Processing Unit (TPU). The TPU, now in its third iteration, is an ASIC built for training and inference of machine learning models.

It retains the parallelism of GPUs while removing general-purpose features from the architecture. TPUs are designed and optimised from the ground up for AI, ML, and DL tasks, and specialise in high-speed, low-precision floating-point operations. They consume less power than GPUs while still outperforming them on these workloads.
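One low-precision format supported natively by Cloud TPUs is bfloat16, which keeps float32's sign bit and 8-bit exponent but only the top 7 mantissa bits. Converting is therefore essentially keeping the upper 16 bits of a float32 bit pattern. The sketch below does this with round-to-nearest-even and deliberately ignores NaN handling; the helper names are illustrative.

```python
import struct


def float32_bits(x):
    """Round x to float32 and return its raw 32-bit pattern."""
    return struct.unpack("<I", struct.pack("<f", x))[0]


def bits_to_float32(bits):
    """Interpret a 32-bit pattern as a float32 value."""
    return struct.unpack("<f", struct.pack("<I", bits))[0]


def to_bfloat16(x):
    """Round x to the nearest bfloat16 value (ties to even; NaN not handled).

    The result is returned as a Python float whose low 16 float32 bits are 0.
    """
    bits = float32_bits(x)
    lsb = (bits >> 16) & 1        # lowest bit that survives truncation
    bits += 0x7FFF + lsb          # round half to even on the discarded bits
    return bits_to_float32(bits & 0xFFFF0000)


for value in [1.0, 3.14159265, 1 / 3, 1e30]:
    print(value, "->", to_bfloat16(value))
```

Because the exponent field is unchanged, bfloat16 covers the same range as float32 (values such as 1e30 survive) while giving up precision, with a relative rounding error of at most 2^-8.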

In addition, v3 chips feature 16 GB of High-Bandwidth Memory (HBM), allowing data to be read from memory at a throughput of 1 TB/s. Together, these optimisations result in reported cost savings of 80%.
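At the quoted figures, reading the entire HBM pool once takes only milliseconds. A quick back-of-the-envelope check, assuming decimal units (1 GB = 10^9 bytes, 1 TB/s = 10^12 bytes/s):

```python
hbm_capacity_bytes = 16e9  # 16 GB, as quoted above
hbm_bandwidth = 1e12       # 1 TB/s, as quoted above

seconds_per_sweep = hbm_capacity_bytes / hbm_bandwidth
print(f"{seconds_per_sweep * 1e3:.0f} ms to read all of HBM once")  # 16 ms
```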

Amazon has also made its first move in the arena with AWS Inferentia, a chip built for neural-network inference. Reportedly, the chip was created to provide “high throughput, low latency inference performance at an extremely low cost”. Inferentia targets the inference calls made to deployed AI models, providing a fast and easily accessible compute source for serving them.

Facebook is also trying to get in on the game to support the large amount of AI compute it needs. The company’s chief AI scientist, Yann LeCun, stated in a recent interview:

“Facebook has been known to build its hardware when required — build its own ASIC, for instance. If there’s any stone unturned, we’re going to work on it.”

All in all, ASICs seem to be the direction in which the market for AI compute is moving. The sizeable benefits they offer, along with market players’ attention to the technology, point to a growing field over the next few years.