The 33rd annual conference on Neural Information Processing Systems (NeurIPS) will be held at the Vancouver Convention Center in Vancouver, Canada, from December 8 to 14, 2019.

The primary focus of the Neural Information Processing Systems Foundation is the presentation of a continuing series of professional meetings known as the Neural Information Processing Systems Conference, held over the years at various locations in the United States, Canada and Spain.

NeurIPS received a record-breaking 6,743 submissions this year, of which 1,428 were accepted, an acceptance rate of about 21%.

Here are a few exciting works from the accepted papers at the 33rd edition of this widely popular global conference:

Quality Aware Generative Adversarial Networks By IIT Hyderabad

Since the original GAN paper was published in 2014, applications of GANs have grown tremendously. Generative adversarial networks (GANs) have been successfully used for high-fidelity natural image synthesis, for improving learned image compression, and for data augmentation tasks.

GANs have advanced to a point where they can pick up subtle expressions that denote significant human emotions. They have become powerhouses of unsupervised machine learning.

HyperGCN By IISc Bangalore

A popular learning paradigm is hypergraph-based semi-supervised learning (SSL) where the goal is to assign labels to initially unlabeled vertices in a hypergraph. Motivated by the fact that a graph convolutional network (GCN) has been effective for graph-based SSL, the authors propose HyperGCN, a novel GCN for SSL on attributed hypergraphs. Additionally, the authors show how HyperGCN can be used as a learning-based approach for combinatorial optimisation on NP-hard hypergraph problems. The authors also demonstrate HyperGCN’s effectiveness through detailed experimentation on real-world hypergraphs.

Stand-Alone Self-Attention in Vision Models By Google Brain

Recent approaches have argued for going beyond convolutions in order to capture long-range dependencies. These efforts focus on augmenting convolutional models with content-based interactions, such as self-attention and non-local means, to achieve gains on a number of vision tasks.

This work primarily focuses on content-based interactions to establish their virtue for vision tasks. A simple procedure of replacing all instances of spatial convolutions in a ResNet model with a form of self-attention produces a fully self-attentional model that outperforms the baseline on ImageNet classification.
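To make the idea of a content-based interaction concrete, here is a minimal sketch of self-attention restricted to a local neighborhood. It is an illustration under simplifying assumptions, not the layer from the paper: it works on a 1D sequence rather than a 2D image, it uses the raw features as queries, keys and values instead of learned projections, and it ignores positional information. The function names and the `radius` parameter are hypothetical.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def local_self_attention(features, radius=1):
    """Replace each position by an attention-weighted average of its neighbors.

    features: list of equal-length float vectors, one per position.
    radius:   how many positions on each side take part (assumed parameter).
    """
    n = len(features)
    outputs = []
    for i, query in enumerate(features):
        lo, hi = max(0, i - radius), min(n, i + radius + 1)
        neighbors = features[lo:hi]
        # Content-based scores: dot product between the query and each key.
        scores = [sum(q * k for q, k in zip(query, key)) for key in neighbors]
        weights = softmax(scores)
        # Output is the weighted sum of the neighbor values.
        outputs.append([
            sum(w * value[d] for w, value in zip(weights, neighbors))
            for d in range(len(query))
        ])
    return outputs
```

Unlike a convolution, whose weights are fixed after training, the mixing weights here are recomputed from the content at every position.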

A Geometric Perspective on Optimal Representations for Reinforcement Learning By DeepMind

The authors propose a new perspective on representation learning in reinforcement learning based on geometric properties of the space of value functions. This work opens up the possibility of automatically generating auxiliary tasks in deep reinforcement learning, analogous to how deep learning itself enabled a move away from hand-crafted features.

ViLBERT By Georgia Inst. Of Technology & FAIR

ViLBERT (short for Vision-and-Language BERT) is a model for learning task-agnostic joint representations of image content and natural language. The authors have extended the popular BERT architecture to a multi-modal two-stream model, processing visual and textual inputs in separate streams.

This work represents a shift away from learning groundings between vision and language only as part of task training and towards treating visual grounding as a pretrainable and transferable capability.

Neural Attribution for Semantic Bug-Localization in Student Programs By IISc Bangalore & Google

This work proposes a deep learning-based technique, described as the first of its kind, that can localize bugs in a faulty program with respect to a failing test without even running the program.

Most open online courses on programming make use of automated grading systems to support programming assignments and give real-time feedback.

In this technique, the authors introduce a novel tree convolutional neural network that is trained to predict whether a program passes or fails a given test.

Adversarial Examples Are Not Bugs, They Are Features By MIT

In this paper, the researchers demonstrate that adversarial examples can be directly attributed to the presence of non-robust features: features derived from patterns in the data distribution that are highly predictive, yet brittle and incomprehensible to humans.

After capturing these features within a theoretical framework, they establish their widespread existence in standard datasets.

Weight Agnostic Neural Networks By Adam Gaier & David Ha

In this work, the authors question to what extent neural network architectures alone, without learning any weight parameters, can encode solutions for a given task. They propose a search method for neural network architectures that can already perform a task without any explicit weight training. The results show that, on a supervised learning domain, network architectures achieve much higher than chance accuracy on MNIST using random weights.
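One way to picture "performing a task without weight training" is to score a fixed architecture with a single weight value shared by every connection, and to average the score over several such values. The sketch below illustrates only that evaluation idea on a made-up toy task; the one-unit architecture, the list of weight values and the data are all assumptions for illustration, and no architecture search is performed.

```python
import math

# Assumed set of shared weight values to try; nothing here is trained.
SHARED_WEIGHTS = [-2.0, -1.0, -0.5, 0.5, 1.0, 2.0]


def network(x, w):
    """Hypothetical fixed architecture: one connection feeding a Gaussian unit."""
    return math.exp(-(w * x) ** 2)


def accuracy(w, inputs, labels):
    """Fraction of inputs classified correctly when every weight equals w."""
    correct = sum((network(x, w) > 0.5) == y for x, y in zip(inputs, labels))
    return correct / len(inputs)


# Toy task (assumption): decide whether a number lies close to zero.
inputs = [i / 4 for i in range(-8, 9)]          # -2.0, -1.75, ..., 2.0
labels = [abs(x) <= 0.75 for x in inputs]

per_weight = {w: accuracy(w, inputs, labels) for w in SHARED_WEIGHTS}
mean_accuracy = sum(per_weight.values()) / len(per_weight)
```

Because the Gaussian unit is symmetric in its input, the architecture gives the same accuracy for `w` and `-w`; the structure, rather than any tuned weight, encodes the "near zero" bias.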

Deep Learning Without Weight Transport By University Of Toronto

The authors describe two mechanisms – a neural circuit called a weight mirror and a modification of an algorithm proposed by Kolen and Pollack in 1994 – both of which let the feedback path learn appropriate synaptic weights quickly and accurately even in large networks, without weight transport or complex wiring.

Tested on the ImageNet visual-recognition task, these mechanisms outperform both feedback alignment and the newer sign-symmetry method, and nearly match backprop, the standard algorithm of deep learning, which uses weight transport.

Deep Equilibrium Models By Carnegie Mellon University

The researchers at CMU present a new approach to modeling sequential data: the deep equilibrium model (DEQ). Motivated by the observation that the hidden layers of many existing deep sequence models converge towards some fixed point, they propose the DEQ approach, which directly finds these equilibrium points via root-finding. Such a method is equivalent to running an infinite-depth (weight-tied) feedforward network, but has the notable advantage that one can analytically backpropagate through the equilibrium point using implicit differentiation. Using this approach, training and prediction in these networks require only constant memory, regardless of the effective “depth” of the network.
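The two ingredients, finding an equilibrium and differentiating through it implicitly, can be sketched on a scalar toy layer. This is a simplified illustration under stated assumptions: the layer `tanh(w * z + x)` is hypothetical, and plain fixed-point iteration stands in for the root-finder used in the paper. With `|w| < 1` the layer is a contraction, so the iteration converges.

```python
import math


def layer(z, x, w):
    """Hypothetical weight-tied layer applied repeatedly to the hidden state z."""
    return math.tanh(w * z + x)


def solve_equilibrium(x, w, tol=1e-12, max_iter=1000):
    """Find z* with z* = layer(z*, x, w) by naive fixed-point iteration."""
    z = 0.0
    for _ in range(max_iter):
        z_next = layer(z, x, w)
        if abs(z_next - z) < tol:
            return z_next
        z = z_next
    return z


def grad_wrt_w(x, w):
    """dz*/dw by implicit differentiation, without storing any iterates.

    Differentiating z* = f(z*, x, w) gives dz*/dw = (df/dw) / (1 - df/dz),
    with both partial derivatives evaluated at the equilibrium.
    """
    z_star = solve_equilibrium(x, w)
    slope = 1.0 - z_star ** 2      # tanh'(pre-activation), since tanh(pre) = z*
    df_dz = slope * w
    df_dw = slope * z_star
    return df_dw / (1.0 - df_dz)
```

The gradient needs only the equilibrium value itself, which is why memory use does not grow with the number of iterations taken to reach it.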

The full list of accepted papers is available on the NeurIPS website.

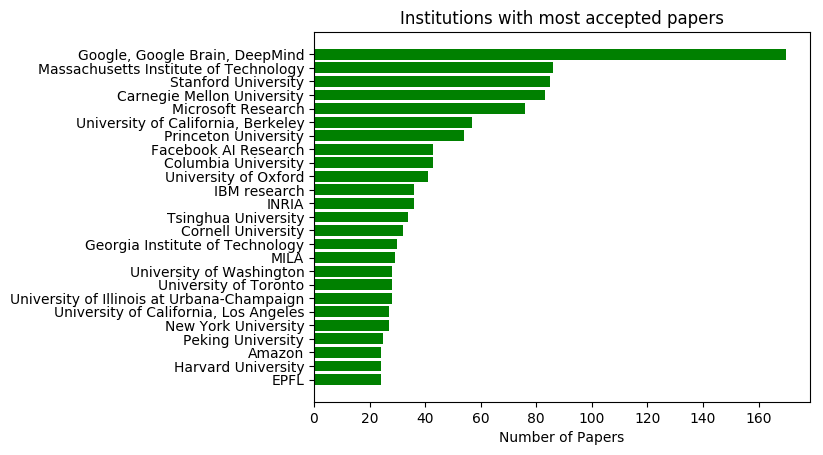

This year, Google AI had the most papers accepted at NeurIPS, while premier institutes such as MIT, Stanford and CMU took the next spots.

The Neural Information Processing Systems Foundation is a non-profit corporation whose purpose is to foster the exchange of research on neural information processing systems in their biological, technological, mathematical, and theoretical aspects. Neural information processing is a field which benefits from a combined view of biological, physical, mathematical, and computational sciences.