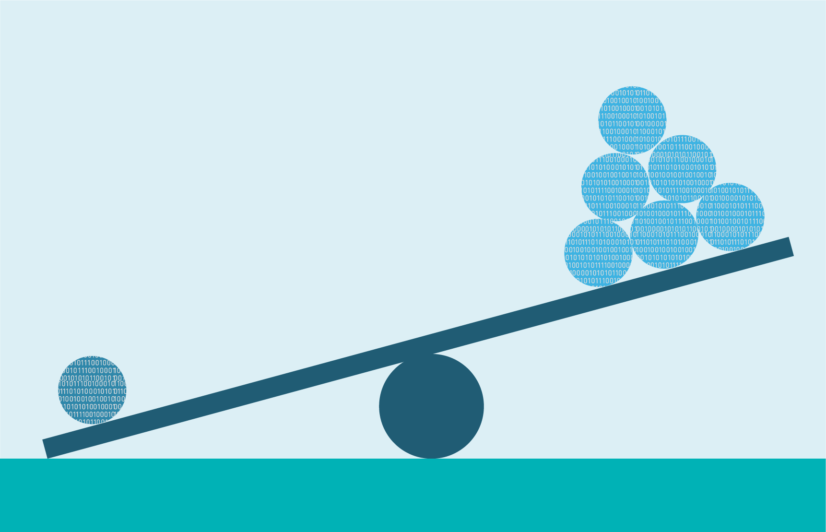

Experienced data science and machine learning practitioners know that imbalanced class distribution is one of the most frequently encountered problems in the field. It occurs when the number of observations belonging to one class is significantly lower than the number belonging to the other classes.

What Is Data Imbalance?

A dataset is imbalanced when the instances of one class (or set of classes) greatly outnumber the instances of another. By convention, the majority class is treated as the negative class and the minority class as the positive class. In other words, data imbalance takes place when the majority classes dominate the minority classes.

Most machine learning algorithms assume that the classes in a dataset are roughly equally represented. When they are not, the algorithms become biased towards the majority classes. Also, most ML algorithms are designed to improve overall accuracy by reducing the total error, so they do not take the balance of classes into account. In such cases, a predictive model developed using conventional machine learning algorithms can be biased and inaccurate, especially on the minority class.
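To make the accuracy bias concrete, here is a minimal sketch in plain Python. The 95/5 label split is a made-up toy example, not data from any real problem, and the "model" is simply a rule that always predicts the majority class.

```python
from collections import Counter

# Hypothetical toy labels: 95 negatives (majority) and 5 positives (minority).
labels = [0] * 95 + [1] * 5

counts = Counter(labels)
majority_class, majority_count = counts.most_common(1)[0]

# A "model" that ignores its input and always predicts the majority class.
predictions = [majority_class] * len(labels)

# Fraction of predictions that match the true label.
accuracy = sum(p == y for p, y in zip(predictions, labels)) / len(labels)

# Number of minority (positive) samples the model actually identified.
minority_found = sum(p == 1 and y == 1 for p, y in zip(predictions, labels))

print(counts)          # class counts
print(accuracy)        # 0.95
print(minority_found)  # 0
```

The rule scores 95% accuracy while finding none of the minority samples, which is the kind of result an accuracy-driven algorithm can drift towards.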

Here are several approaches to handling imbalanced datasets, including sampling techniques:

1. Collect more data:

More data usually adds to the insights that one can obtain from it. A larger dataset may also contain more examples of the minority class, which can reduce the imbalance and give a more balanced view of the classes.

2. Penalized Models:

Penalized learning algorithms increase the cost of classification mistakes on the minority class. Popular algorithms for this technique are penalized SVM and penalized LDA. There are different penalty schemes to suit different imbalanced-data problems.

In the scikit-learn library, many classifiers accept the argument class_weight='balanced', which penalizes mistakes on the minority class by an amount proportional to how under-represented it is. These penalties bias the model to pay more attention to the minority class. Penalization is a good option if one is locked into a specific algorithm and is unable to resample.
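The scikit-learn documentation describes the 'balanced' mode as weighting each class by n_samples / (n_classes * count of that class). The sketch below reproduces that formula in plain Python on a made-up 90/10 label list. The helper name balanced_class_weights is hypothetical and is not part of scikit-learn.

```python
from collections import Counter

def balanced_class_weights(labels):
    """Weight each class inversely to its frequency:
    n_samples / (n_classes * count_of_class)."""
    counts = Counter(labels)
    n_samples = len(labels)
    n_classes = len(counts)
    return {cls: n_samples / (n_classes * count) for cls, count in counts.items()}

# Hypothetical toy labels: 90 majority samples, 10 minority samples.
labels = [0] * 90 + [1] * 10
weights = balanced_class_weights(labels)

print(weights)  # the minority class gets the larger weight
```

Here a mistake on the minority class costs nine times as much as one on the majority class, matching the 9:1 imbalance. In scikit-learn itself the same effect comes from passing class_weight='balanced' to an estimator that supports it, such as LogisticRegression.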

3. New models and algorithms:

Choosing an appropriate model can mitigate the effect of imbalanced data. XGBoost, for example, can deal with an imbalanced dataset by giving different weights to different classes (for binary problems, through its scale_pos_weight parameter). The data is not literally resampled: the weights change how much each class contributes to the training loss, which has an effect similar to resampling. Traditional classification algorithms often do not perform well on imbalanced datasets and small sample sizes, so the current algorithm might simply be less suitable than another one, and that could be a cause of poor results. Decision tree algorithms have a hierarchical structure that lets them learn signals from both classes, and hence they often perform well on imbalanced datasets. The splitting rules used to build the trees look at the class variable, which helps in addressing both classes.
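As an illustration of a splitting rule that looks at the class variable, the sketch below computes Gini impurity, one common criterion for decision trees. The text above does not name a criterion, so Gini is an assumption here, and the label lists and the split are made up.

```python
from collections import Counter

def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())

def split_impurity(left, right):
    """Size-weighted average impurity of the two child nodes."""
    n = len(left) + len(right)
    return (len(left) * gini(left) + len(right) * gini(right)) / n

# Hypothetical parent node: 95 negatives and 5 positives.
parent = [0] * 95 + [1] * 5

# Hypothetical split that isolates the minority class in a small right branch.
left = [0] * 94
right = [0] * 1 + [1] * 5

print(gini(parent))                 # about 0.095
print(split_impurity(left, right))  # about 0.017
```

The split lowers impurity even though it moves only six samples, so a tree can be rewarded for carving out a small minority region.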

4. Resample:

Resampling the data to a different class ratio can help with imbalanced datasets. The best ratio depends on the data and the models that are used. In an ensemble, instead of training all models with the same ratio, it is worth trying models trained on different ratios. There are two main methods that can be used for resampling:

1. Over-sampling: This technique balances the dataset by increasing the number of samples in the rare class, for example by duplicating existing ones. It is typically used when the quantity of data is insufficient.

2. Under-sampling: Unlike over-sampling, this technique balances an imbalanced dataset by reducing the size of the dominant class.
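Both methods can be sketched with random sampling from the Python standard library. The function names, the ratio parameter (minority size divided by majority size) and the toy data are all hypothetical. More elaborate resampling schemes exist and are not shown.

```python
import random

def random_oversample(minority, majority, ratio=1.0, seed=0):
    """Add randomly chosen duplicates of minority samples until the
    minority size reaches int(ratio * len(majority))."""
    rng = random.Random(seed)
    target = int(ratio * len(majority))
    extra = max(0, target - len(minority))
    return minority + [rng.choice(minority) for _ in range(extra)]

def random_undersample(minority, majority, ratio=1.0, seed=0):
    """Keep a random subset of the majority, without replacement, so that
    the minority size is about ratio times the kept majority size."""
    rng = random.Random(seed)
    target = min(len(majority), int(len(minority) / ratio))
    return rng.sample(majority, target)

# Hypothetical toy data: 90 majority samples and 10 minority samples.
majority = [("neg", i) for i in range(90)]
minority = [("pos", i) for i in range(10)]

over = random_oversample(minority, majority)             # 90 minority samples
under = random_undersample(minority, majority)           # 10 majority samples
half = random_oversample(minority, majority, ratio=0.5)  # 45 minority samples

print(len(over), len(under), len(half))
```

Varying the ratio argument gives differently balanced training sets, which is one way to try different ratios across the models of an ensemble.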

5. Change the performance metric:

The evaluation metric in use can be unsuitable for imbalanced data, and applying an inappropriate one can give misleading results. Accuracy, for instance, can look high for a model that rarely predicts the minority class. Metrics such as precision, recall and F1 score give more insight into imbalanced classes. It is important to choose the evaluation metric of the model correctly, or one would end up optimizing a useless quantity. One should consider changing the performance metric when solving the problem of imbalanced data.
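The sketch below compares accuracy with precision, recall and F1 score computed for the minority (positive) class. The labels and predictions are made up for illustration, and the helper precision_recall_f1 is a hypothetical name, not a library function.

```python
def precision_recall_f1(y_true, y_pred, positive=1):
    """Precision, recall and F1 score for the class labeled `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return precision, recall, f1

# Hypothetical toy data: 95 negatives followed by 5 positives.
y_true = [0] * 95 + [1] * 5
# Hypothetical predictions: one false alarm among the negatives,
# and only 2 of the 5 positives detected.
y_pred = [0] * 94 + [1] * 1 + [1] * 2 + [0] * 3

accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
precision, recall, f1 = precision_recall_f1(y_true, y_pred)

print(accuracy)   # 0.96
print(precision)  # about 0.667
print(recall)     # 0.4
print(f1)         # about 0.5
```

Accuracy of 96% suggests a strong model, while a recall of 0.4 shows that it misses most of the minority class. scikit-learn offers equivalent functions in sklearn.metrics, such as precision_score, recall_score and f1_score.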

Concluding Note

The data imbalance problem hampers the performance of a classifier. Imbalanced datasets can cause traditional data mining algorithms to behave undesirably, because the algorithm being deployed does not take the distribution of the data into consideration. The approaches above, from collecting more data to changing the performance metric, are different ways to handle the problem.