Spark NLP, an open-source, state-of-the-art NLP library by John Snow Labs, has been gaining immense popularity lately. Built natively on Apache Spark and TensorFlow, the library provides simple, performant and accurate NLP annotations for machine learning pipelines that scale easily in a distributed environment. It reuses the Spark ML pipeline and integrates NLP functionality into it.

A recent annual survey by O’Reilly identified several trends in how enterprises are adopting artificial intelligence. According to the survey results, Spark NLP was the seventh most popular across all AI frameworks and tools. It was also by far the most widely used NLP library, twice as common as spaCy, and the most popular AI library after scikit-learn, TensorFlow, Keras and PyTorch.

Given this popularity and its wide use in enterprises, we look at five crucial reasons why Spark NLP is becoming one of the favourites.

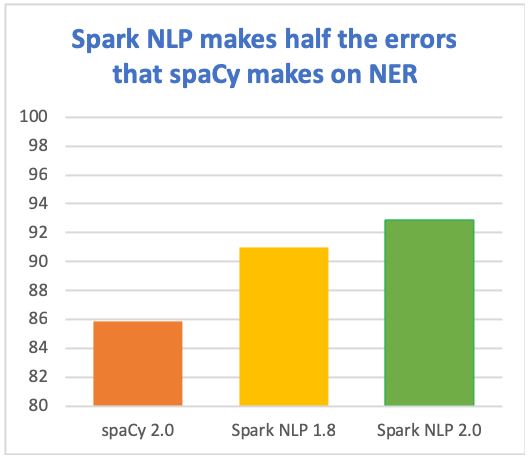

1| Accuracy

The Spark NLP 2.0 library obtained the best-performing academic, peer-reviewed results. The library claims to deliver state-of-the-art accuracy and speed by continually productionising the latest scientific advances. It also includes a production-ready implementation of BERT embeddings for named entity recognition (NER). On NER, it makes half the errors that spaCy makes.

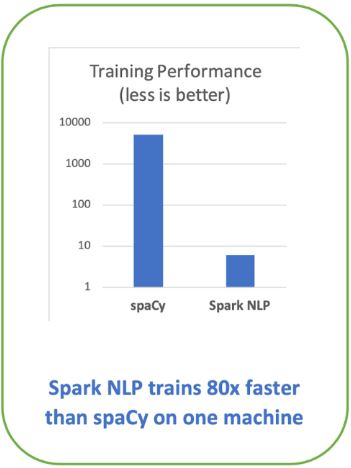

2| Speed

In Spark NLP, optimisations are done so that common NLP pipelines can run orders of magnitude faster than the inherent design limitations of legacy libraries allow. The reasons for its speed include the second-generation Tungsten engine for vectorised, in-memory columnar data; no copying of text in memory; extensive profiling, configuration and code optimisation of Spark and TensorFlow; and optimisation for both training and inference. Using TensorFlow under the hood for deep learning lets Spark NLP make the most of modern computing platforms, from NVIDIA’s DGX-1 to Intel’s Cascade Lake processors.

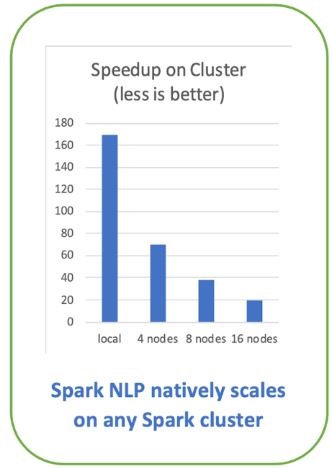

3| Scalability

The library can scale model training, inference and full AI pipelines from a local machine to a cluster with little or no code changes. Being built natively on Apache Spark ML lets Spark NLP scale on any Spark cluster, on-premise or with any cloud provider. Spark’s distributed execution planning and caching provide the speedups, which have been tested on just about every current storage and compute platform; the actual speedup depends on the workload. The reasons behind the scalability include the zero code changes needed to scale a pipeline to any Spark cluster and the fact that it is a natively distributed open-source NLP library.
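The idea of scaling with little or no code changes can be sketched with a toy analogy in plain Python. This is not Spark or Spark NLP code: `count_tokens`, `run_local` and `run_parallel` are hypothetical names made up for illustration, and a thread pool merely stands in for a cluster. The point is only that the processing logic stays the same while the place it runs changes.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, List


def count_tokens(text: str) -> int:
    """The 'pipeline' logic: count whitespace-separated tokens."""
    return len(text.split())


def run_local(fn: Callable[[str], int], texts: List[str]) -> List[int]:
    """Run the logic sequentially, as on a single local machine."""
    return [fn(t) for t in texts]


def run_parallel(fn: Callable[[str], int], texts: List[str], workers: int = 4) -> List[int]:
    """Run the same logic on a pool of workers (a stand-in for a cluster)."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # pool.map returns results in the same order as the inputs
        return list(pool.map(fn, texts))


texts = [
    "Spark NLP scales",
    "from a laptop",
    "to a cluster with little or no code changes",
]

# The logic (count_tokens) is untouched; only the runner differs.
local_counts = run_local(count_tokens, texts)
parallel_counts = run_parallel(count_tokens, texts)
print(local_counts == parallel_counts)
```

In Spark itself, the equivalent switch is typically a matter of cluster configuration rather than a different runner function.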

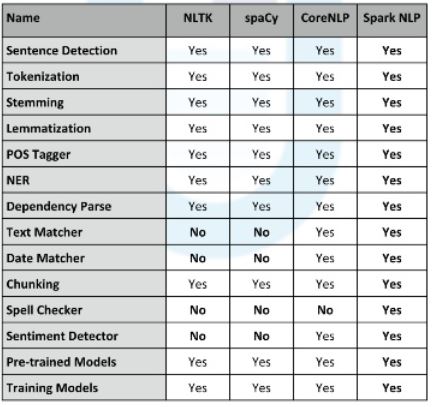

4| Performance With Out Of The Box Functionality

Spark NLP provides a full Java API, a full Scala API and a full Python API. It supports training on GPU, user-defined deep learning networks, Spark natively, and Hadoop (YARN and HDFS). It is built around the concept of annotators and includes more of them than other NLP libraries do: sentence detection, tokenisation, stemming, lemmatisation, POS tagging, NER, dependency parsing, text matching, date matching, chunking, sentiment detection, pre-trained models and model training. A comparison between four popular libraries is shown below:

5| Full Python, Java And Scala APIs

A library that supports multiple languages not only reaches a wider audience but also lets you take advantage of the implemented models without moving data back and forth between runtime environments. The Spark NLP library is under active development by a full core team, in addition to community contributions.
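To give a feel for the annotator-style pipelines mentioned under point 4, here is a minimal pure-Python sketch. It deliberately does not use the Spark NLP API: the functions `sentence_detector`, `tokenizer`, `stemmer` and `run_pipeline` are hypothetical stand-ins, and the stemming rule is a crude suffix stripper chosen only for illustration. Each stage reads the annotations produced so far and adds a new named column, which is roughly how chained annotators behave.

```python
import re
from typing import Callable, Dict, List

# A "document" is a dict of named annotation columns.
Document = Dict[str, List[str]]
Annotator = Callable[[Document], Document]


def sentence_detector(doc: Document) -> Document:
    """Split raw text into sentences after ., ! or ? followed by whitespace."""
    text = doc["text"][0]
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    return {**doc, "sentence": sentences}


def tokenizer(doc: Document) -> Document:
    """Extract word tokens from every detected sentence."""
    tokens = [tok for s in doc["sentence"] for tok in re.findall(r"\w+", s)]
    return {**doc, "token": tokens}


def stemmer(doc: Document) -> Document:
    """Lowercase each token and strip one common suffix (toy rule only)."""
    def stem(word: str) -> str:
        w = word.lower()
        for suffix in ("ing", "ed", "s"):
            # Keep at least three characters of the word after stripping
            if w.endswith(suffix) and len(w) > len(suffix) + 2:
                return w[: -len(suffix)]
        return w

    return {**doc, "stem": [stem(t) for t in doc["token"]]}


def run_pipeline(text: str, stages: List[Annotator]) -> Document:
    """Apply each annotator in order, accumulating annotation columns."""
    doc: Document = {"text": [text]}
    for stage in stages:
        doc = stage(doc)
    return doc


result = run_pipeline(
    "Spark NLP scales pipelines. It supports training models!",
    [sentence_detector, tokenizer, stemmer],
)
print(result["sentence"])
print(result["stem"])
```

Because every stage only depends on the columns produced before it, stages can be added, removed or reordered without touching the others, which is the main appeal of the annotator design.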