Physicists often work on projects that require programming as well as the handling of large amounts of data. Collecting and analysing data is part of the job in most branches of physics research. A physicist's work combines many fields, and data science is clearly one of them.

This article looks at several statistical methods that research physicists use in their everyday work.

1. Monte-Carlo Simulation:

Whenever a project involves a large amount of uncertainty, Monte Carlo simulation is often useful. It is a prime example of a probabilistic, statistical method used in physics. The core idea is to use random samples of the parameters or inputs to study complex processes. Early problems in physics, such as neutron diffusion, were too complex for an analytical solution and had to be evaluated numerically, and this method proved very successful at doing so. It is widely used for evaluating integrals and for simulating quantum field theories, including lattice QCD and electroweak theories. Other applications include simulated annealing, diffusive dynamics, the classical dynamics of particles, galaxy dynamics, the Hartree-Fock approximation, density functional methods, and finite element methods for partial differential equations.
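
To make the core idea concrete, here is a minimal Python sketch of plain Monte Carlo integration: the integral is estimated as the interval width times the average of the integrand at uniformly random points, and the sample variance gives a statistical error bar. The function name `mc_integrate` and the test integrand exp(-x²) are illustrative choices, not taken from any particular physics code.

```python
import math
import random


def mc_integrate(f, a, b, n, seed=0):
    """Estimate the integral of f over [a, b] from n uniform random samples.

    Returns (estimate, standard_error); the error shrinks like 1/sqrt(n).
    """
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    width = b - a
    values = [f(a + width * rng.random()) for _ in range(n)]
    mean = sum(values) / n
    # Unbiased sample variance of the integrand values.
    var = sum((v - mean) ** 2 for v in values) / (n - 1)
    return width * mean, width * math.sqrt(var / n)


if __name__ == "__main__":
    # Illustrative integrand: exp(-x^2) on [0, 1], which has a closed form.
    estimate, err = mc_integrate(lambda x: math.exp(-x * x), 0.0, 1.0, 100_000)
    exact = math.sqrt(math.pi) / 2 * math.erf(1.0)
    print(f"MC: {estimate:.5f} +/- {err:.5f}, exact: {exact:.5f}")
```

The 1/sqrt(n) error does not depend on the number of dimensions, which is why the same idea scales to the high-dimensional integrals met in lattice field theory, where grid-based quadrature becomes impractical.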

2. Bayesian Statistics:

Bayesian statistics is widely used in physics, in hierarchical models, posterior samplers, and models for complex data types such as images and functions. Hierarchical Bayesian models (HBMs) suit problems in which both the parameters of individual objects and the parameters of the population are unknown. They are therefore applied to detecting and characterizing galaxies in astronomical images (using variational inference to approximate the posterior), modelling supernova light curves, and fitting cosmic-ray data.
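
A posterior sampler can be illustrated with a single-level model, the building block that hierarchical models stack together. The sketch below is a random-walk Metropolis sampler for the mean of repeated measurements, assuming a flat prior and Gaussian errors with a known standard deviation; the measurement values, the step size, and the function names are hypothetical, and a real hierarchical analysis would sample many parameters at once, usually with a dedicated library.

```python
import math
import random
import statistics


def log_posterior(mu, data, sigma):
    """Log posterior (up to a constant) for a mean mu.

    Assumes a flat prior on mu and independent Gaussian errors with known sigma.
    """
    return -0.5 * sum(((x - mu) / sigma) ** 2 for x in data)


def metropolis(data, sigma, n_steps=20_000, step=0.02, seed=1):
    """Random-walk Metropolis sampler for the posterior of mu."""
    rng = random.Random(seed)
    mu = data[0]  # start the chain at the first measurement
    logp = log_posterior(mu, data, sigma)
    chain = []
    for _ in range(n_steps):
        proposal = mu + rng.gauss(0.0, step)
        logp_new = log_posterior(proposal, data, sigma)
        # Accept with probability min(1, p_new / p_old); 1 - random() lies in (0, 1].
        if math.log(1.0 - rng.random()) < logp_new - logp:
            mu, logp = proposal, logp_new
        chain.append(mu)
    return chain


if __name__ == "__main__":
    # Hypothetical repeated measurements of g (m/s^2), each with sigma = 0.03.
    data = [9.79, 9.83, 9.81, 9.78, 9.85, 9.80]
    samples = metropolis(data, sigma=0.03)[1_000:]  # drop burn-in
    print(f"posterior mean {statistics.mean(samples):.4f}, "
          f"sd {statistics.stdev(samples):.4f}")
```

For this simple model the posterior is known analytically (a Gaussian centred on the sample mean with width sigma/sqrt(n)), which makes it a convenient check that the sampler behaves before moving on to models without closed-form answers.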

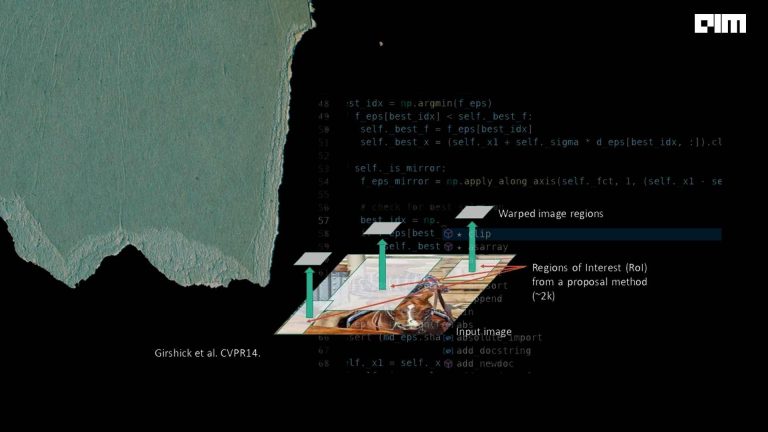

3. Statistical Image Analysis:

Detectors and satellites collect huge volumes of data. These data, usually stored in tabular form, are very difficult to visualise. They may also contain images with patterns that are hard to decode, so non-trivial correlations can go undetected. Proper tools are needed to make sense of them.

Methods developed to help explore multivariate astronomical data include phylogenetic trees, graphs, chord diagrams, and starfish diagrams.

Image processing is routine in fields from astrophysics to materials science. These methods rely heavily on statistics, and the processed data then feed into theoretical modelling, prediction, and forecasting. Feature recognition and automated image processing techniques also draw on statistics.
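
As a small illustration of how statistics enters automated feature recognition, the sketch below flags pixels that stand well above the background and groups neighbouring ones into candidate sources. It assumes a 2D image stored as a list of rows, roughly Gaussian background noise, and a threshold of k = 5 robust standard deviations; the function names and the synthetic test image are hypothetical, and real pipelines use much more careful background modelling.

```python
import random
import statistics


def detect_sources(image, k=5.0):
    """Find connected groups of pixels brighter than background + k * noise.

    Background is the median pixel value; noise is a robust sigma from the
    median absolute deviation (MAD), so a few bright sources barely affect it.
    """
    pixels = [v for row in image for v in row]
    background = statistics.median(pixels)
    mad = statistics.median([abs(v - background) for v in pixels])
    noise = 1.4826 * mad  # MAD-to-sigma factor for Gaussian noise
    threshold = background + k * noise

    rows, cols = len(image), len(image[0])
    seen = [[False] * cols for _ in range(rows)]
    sources = []
    for r in range(rows):
        for c in range(cols):
            if seen[r][c] or image[r][c] <= threshold:
                continue
            # Flood fill with 4-connectivity to collect one source's pixels.
            seen[r][c] = True
            stack, blob = [(r, c)], []
            while stack:
                y, x = stack.pop()
                blob.append((y, x))
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if (0 <= ny < rows and 0 <= nx < cols
                            and not seen[ny][nx] and image[ny][nx] > threshold):
                        seen[ny][nx] = True
                        stack.append((ny, nx))
            sources.append(blob)
    return sources, threshold


def make_test_image(size=20, seed=3):
    """Hypothetical image: Gaussian noise around 100 plus two bright 2x2 spots."""
    rng = random.Random(seed)
    img = [[rng.gauss(100.0, 5.0) for _ in range(size)] for _ in range(size)]
    for r0, c0 in ((3, 3), (12, 14)):
        for dr in range(2):
            for dc in range(2):
                img[r0 + dr][c0 + dc] += 200.0
    return img


if __name__ == "__main__":
    found, thr = detect_sources(make_test_image())
    print(f"threshold {thr:.1f}, {len(found)} sources, sizes {[len(b) for b in found]}")
```

The median and MAD are used instead of the mean and standard deviation because bright sources would otherwise inflate the noise estimate and raise the threshold.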

4. Error Models:

Standard models typically assume that all errors have the same variance, and regression models often assume that the predictor variables are measured without error. In physics, however, measurement errors in the predictors, with differing variances, are the norm. It is also common to have estimates of the measurement-error variances, obtained by modelling the uncertainties inherent in the detection procedure. When the measurement errors are large, explicit errors-in-variables models are needed to avoid biased estimates, particularly in regression. These models often have a hierarchical structure in which the true predictor values are treated as parameters. Approaches to this problem include the bivariate correlated errors and intrinsic scatter (BCES) model.
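
The bias from noisy predictors, and the simplest way to correct it when the measurement-error variances are known, can be shown in a few lines of Python. This is a method-of-moments correction with essentially the same form as the bivariate correlated errors and intrinsic scatter (BCES) slope for regressing y on x when the x and y errors are uncorrelated. It is a sketch, not a full BCES implementation (no uncertainties on the slope, no orthogonal or bisector variants), and the function names and simulated data (true slope 2, x-error standard deviation 0.7) are hypothetical.

```python
import random


def _centered_sums(x, y):
    """Return (S_xy, S_xx), the centred cross- and auto-sums of x and y."""
    mx = sum(x) / len(x)
    my = sum(y) / len(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy, sxx


def ols_slope(x_obs, y):
    """Ordinary least-squares slope, ignoring measurement error in x."""
    sxy, sxx = _centered_sums(x_obs, y)
    return sxy / sxx


def corrected_slope(x_obs, y, x_err_var):
    """Slope corrected for known per-point measurement-error variances in x.

    Subtracting the summed error variances from S_xx removes the attenuation
    (bias towards zero) that noisy predictors cause in the OLS slope.
    Assumes the x and y errors are uncorrelated.
    """
    sxy, sxx = _centered_sums(x_obs, y)
    return sxy / (sxx - sum(x_err_var))


if __name__ == "__main__":
    # Hypothetical simulation: true slope 2, intercept 1, noisy x measurements.
    rng = random.Random(42)
    n, sigma_x = 20_000, 0.7
    x_true = [rng.gauss(0.0, 1.0) for _ in range(n)]
    x_obs = [x + rng.gauss(0.0, sigma_x) for x in x_true]
    y = [2.0 * x + 1.0 + rng.gauss(0.0, 0.3) for x in x_true]
    print("OLS slope:      ", round(ols_slope(x_obs, y), 3))
    print("corrected slope:", round(corrected_slope(x_obs, y, [sigma_x ** 2] * n), 3))
```

With these settings the naive slope is pulled towards zero by roughly the factor 1 / (1 + 0.7²), while the corrected slope recovers a value close to 2.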

5. Chi-Square Test:

This is one of the most important tests in experimental physics research. It tells whether there is a significant difference between the observed and the expected values. This makes it a reliable way to check whether the observations of an experiment agree with expectations, and whether a proposed theoretical model actually fits the observed data.
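
A minimal Python sketch of Pearson's chi-square test for binned counts follows: the statistic is the sum over bins of (O − E)² / E, and it is converted to a p-value using only the standard library. The p-value routine is a simple series expansion written for this example rather than a library call, and the function names and six-bin counts are hypothetical. With fixed total counts and no fitted parameters, the degrees of freedom are the number of bins minus one; each parameter fitted to the same data removes one more.

```python
import math


def chi_square_statistic(observed, expected):
    """Pearson statistic: sum over bins of (O - E)^2 / E."""
    if len(observed) != len(expected):
        raise ValueError("observed and expected must have the same length")
    return sum((o - e) ** 2 / e for o, e in zip(observed, expected))


def chi_square_p_value(stat, dof):
    """P(chi^2 >= stat) for dof degrees of freedom.

    Uses the power series of the regularized lower incomplete gamma function
    P(dof/2, stat/2); the p-value is 1 - P. Adequate for moderate values.
    """
    if stat <= 0:
        return 1.0
    a, z = dof / 2.0, stat / 2.0
    term = 1.0 / a
    total = term
    n = 1
    while term > 1e-16 * total and n < 10_000:
        term *= z / (a + n)
        total += term
        n += 1
    lower = math.exp(a * math.log(z) - z - math.lgamma(a)) * total
    return min(1.0, max(0.0, 1.0 - lower))


if __name__ == "__main__":
    # Hypothetical detector counts in six bins vs. a model predicting 20 per bin.
    observed = [18, 22, 16, 25, 19, 20]
    expected = [20.0] * 6
    stat = chi_square_statistic(observed, expected)
    dof = len(observed) - 1  # totals fixed, no fitted parameters
    print(f"chi2 = {stat:.2f}, dof = {dof}, p = {chi_square_p_value(stat, dof):.3f}")
```

A large p-value means the data give no significant evidence against the model; a very small one suggests the model, or the assumed uncertainties, should be revisited. In practice, `scipy.stats.chisquare` performs the same test.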

Conclusion

The relationship between statistics and physics has changed dramatically in recent years. Physics, like other sciences, uses statistics to support and strengthen its research, and the combination of the two fields is proving to be a success story.