Because deep learning solutions are often opaque, there has been a lot of talk about how to make explainability part of an ML pipeline. Explainable AI refers to methods and techniques in the application of artificial intelligence (AI) such that the results of the solution can be understood by human experts. It contrasts with the concept of the “black box” in machine learning and enables transparency.

For example, consider the following two images:

The first picture consists of a chain of mathematical expressions that represent how the inner layers of an algorithm or a neural network function. The second picture also shows the working of an algorithm, but the message is more lucid.

Given an opportunity to choose, any client would prefer the second example. The reputation that ML models have gained over the years has made users skeptical. This problem is more prevalent in use cases where the results are of a critical nature. Examples include an image recognition algorithm identifying criminals, a model deployed for cancer diagnosis, or a recommendation model that pushes certain news and products forward.

The non-transparency of the algorithms can lead to the exploitation of certain groups.

To promote explainable AI, researchers have been developing tools and techniques. Here we look at a few that have shown promising results over the past couple of years:

What-if Tool

The TensorFlow team announced the What-If Tool, an interactive visual interface designed to help visualize datasets, better understand the output of TensorFlow models, and analyze deployed models. In addition to TensorFlow models, one can also use the What-If Tool for XGBoost and scikit-learn models.

Once a model has been deployed, its performance can be viewed on a dataset in the What-If Tool.

Additionally, one can slice the dataset by features and compare performance across those slices, identifying subsets of data on which the model performs best or worst, which can be very helpful for ML fairness investigations.
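The slicing idea can be illustrated without the tool itself. The following is a minimal sketch in plain Python, not the What-If Tool API; the record layout, the `age_group` feature and the helper name `accuracy_by_slice` are all hypothetical.

```python
from collections import defaultdict

def accuracy_by_slice(records, feature):
    """Group records by one feature's value and compute accuracy per group."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for rec in records:
        key = rec[feature]
        totals[key] += 1
        hits[key] += int(rec["label"] == rec["prediction"])
    return {key: hits[key] / totals[key] for key in totals}

# Hypothetical evaluation records: true label and model prediction per example.
records = [
    {"age_group": "18-30", "label": 1, "prediction": 1},
    {"age_group": "18-30", "label": 0, "prediction": 0},
    {"age_group": "18-30", "label": 1, "prediction": 1},
    {"age_group": "60+", "label": 1, "prediction": 0},
    {"age_group": "60+", "label": 0, "prediction": 0},
]

per_slice = accuracy_by_slice(records, "age_group")
# The slice with the lowest accuracy is a candidate for a fairness review.
worst_slice = min(per_slice, key=per_slice.get)
```

A large gap between slices does not prove unfairness on its own, but it points to where a closer look is needed.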

LIME

Local Interpretable Model-Agnostic Explanations (LIME) is a method developed by researchers at the University of Washington to gain greater transparency on what’s happening inside an algorithm.

When the number of dimensions is high, maintaining fidelity to a complex model across the whole input space becomes increasingly hard. In contrast, LIME solves the much more feasible task of finding a model that approximates the original model locally.

LIME incorporates interpretability both in the optimization and in the notion of interpretable representation, so that domain- and task-specific interpretability criteria can be accommodated.

LIME is a modular and extensible approach to faithfully explain the predictions of any model in an interpretable manner. The team also introduced SP-LIME, a method to select representative and non-redundant predictions, providing a global view of the model to users.
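The idea of approximating a model locally can be sketched in a few lines. This is a simplified illustration and not the `lime` package: it perturbs the instance on a small deterministic grid (the real method samples randomly), weights each perturbed point by its proximity to the instance, and fits a weighted linear model whose coefficients serve as the explanation. The black-box function, the grid and the kernel width are assumptions, and feature selection is omitted.

```python
import math
from itertools import product

def black_box(x):
    # Hypothetical opaque model: nonlinear in x[0], linear in x[1].
    return x[0] ** 2 + 3.0 * x[1]

def solve(a, b):
    """Solve a small dense linear system by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(m[i][j] * x[j] for j in range(i + 1, n))
        x[i] = (m[i][n] - tail) / m[i][i]
    return x

def local_linear_explanation(model, instance, radius=0.5, steps=5, kernel_width=0.5):
    """Fit a proximity-weighted linear surrogate around one instance."""
    offsets = [radius * (2 * k / (steps - 1) - 1) for k in range(steps)]
    rows, targets, proximity = [], [], []
    for delta in product(offsets, repeat=len(instance)):
        point = [xi + di for xi, di in zip(instance, delta)]
        rows.append([1.0] + list(delta))  # intercept plus offsets from the instance
        targets.append(model(point))
        dist2 = sum(d * d for d in delta)
        proximity.append(math.exp(-dist2 / kernel_width ** 2))  # closer points count more
    p = len(rows[0])
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, proximity)) for j in range(p)]
            for i in range(p)]
    xtwy = [sum(w * r[i] * t for r, t, w in zip(rows, targets, proximity))
            for i in range(p)]
    beta = solve(xtwx, xtwy)
    return beta[1:]  # drop the intercept, keep one weight per feature

local_weights = local_linear_explanation(black_box, [3.0, 1.0])
```

Around the point `[3.0, 1.0]` the surrogate's weights recover the local slope of each feature, which is what a reader would inspect as the explanation for that single prediction.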

DeepLIFT

DeepLIFT is a method that compares the activation of each neuron to its ‘reference activation’ and assigns contribution scores according to the difference. By giving separate consideration to positive and negative contributions, DeepLIFT can also reveal dependencies that are missed by other approaches. Scores can be computed efficiently in a single backward pass.

DeepLIFT is on PyPI, so it can be installed using pip:
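The difference-from-reference idea can be sketched for a single ReLU neuron. This is a hand-written illustration, not the `deeplift` package: it uses the simpler Rescale rule rather than the rule that separates positive and negative contributions, and the weights, inputs and all-zero reference are hypothetical.

```python
def relu(z):
    return max(0.0, z)

def deeplift_rescale(weights, bias, inputs, reference):
    """Contribution of each input to the change in a ReLU neuron's output."""
    pre = sum(w * x for w, x in zip(weights, inputs)) + bias
    pre_ref = sum(w * r for w, r in zip(weights, reference)) + bias
    delta_pre = pre - pre_ref
    delta_out = relu(pre) - relu(pre_ref)
    if abs(delta_pre) < 1e-12:
        # No change before the nonlinearity: fall back to the ReLU gradient.
        multiplier = 1.0 if pre > 0 else 0.0
    else:
        # Rescale rule: ratio of output difference to input difference.
        multiplier = delta_out / delta_pre
    contributions = [w * (x - r) * multiplier
                     for w, x, r in zip(weights, inputs, reference)]
    return contributions, delta_out

# Hypothetical neuron and input; the reference is an all-zero input.
contributions, delta_out = deeplift_rescale(
    weights=[2.0, -1.0], bias=0.5, inputs=[1.0, 3.0], reference=[0.0, 0.0]
)
```

The contributions add up to the difference between the neuron's output and its reference output. In this example the ReLU is inactive at the input, so a plain gradient would be zero for both features, while the difference-from-reference scores are not.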

pip install deeplift

Skater

Skater is a unified framework that enables model interpretation for all forms of models, helping one build the interpretable machine learning systems often needed for real-world use cases. It is an open-source Python library designed to demystify the learned structures of a black-box model both globally and locally.

Shapley

The Shapley value is the average marginal contribution of a feature value over all possible coalitions. SHAP (SHapley Additive exPlanations) builds on this value.

Coalitions are combinations of features that are used to estimate the Shapley value of a specific feature. SHAP is a unified approach to explain the output of any machine learning model.

SHAP connects game theory with local explanations, uniting several previous methods and representing the only possible consistent and locally accurate additive feature attribution method based on expectations.
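The "average marginal contribution over all coalitions" can be computed exactly for a toy model by brute force. This is a minimal sketch and not the `shap` library: features outside a coalition are replaced by a fixed baseline value, which is one simple assumption about how to "remove" a feature, and the model and inputs are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(model, instance, baseline):
    """Exact Shapley values by enumerating every coalition of the other features."""
    n = len(instance)

    def value(coalition):
        # Features in the coalition take the instance's value, the rest the baseline's.
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return model(mixed)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                coalition = set(subset)
                phi += weight * (value(coalition | {i}) - value(coalition))
        phis.append(phi)
    return phis

def model(x):
    # Hypothetical model: one additive feature and one interaction term.
    return 2.0 * x[0] + x[1] * x[2]

phis = shapley_values(model, [1.0, 2.0, 3.0], [0.0, 0.0, 0.0])
```

The interaction term is split evenly between the two features that form it, and the values sum to the difference between the prediction and the baseline prediction. Enumeration grows exponentially with the number of features, so this only works for very small examples.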

AIX360

The AI Explainability 360 toolkit is an open-source library developed by IBM in support of interpretability and explainability of datasets and machine learning models. It is released as a Python package that includes a comprehensive set of algorithms covering different dimensions of explanations, along with proxy explainability metrics.

Activation Atlases

Google, in collaboration with OpenAI, came up with Activation Atlases, a novel technique aimed at visualising how the neurons of a neural network interact with each other and how the learned representations mature with the depth of layers.

This approach was developed to look at the inner workings of convolutional vision networks and derive a human-interpretable overview of concepts within the hidden layers of a network.

Rulex Explainable AI

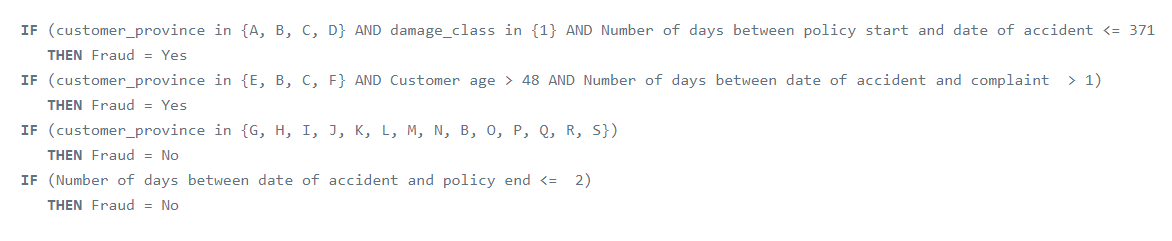

Rulex is a company that creates predictive models in the form of first-order conditional logic rules that can be immediately understood and used by everybody.

Rulex’s core machine learning algorithm, the Logic Learning Machine (LLM), works in an entirely different way from conventional AI. The product is designed so that it produces conditional logic rules that predict the best decision choice, in plain language that is immediately clear to process professionals. Rulex rules make every prediction fully self-explanatory.

And unlike decision trees and other algorithms that produce rules, Rulex rules are stateless and overlapping.

The Need For Transparency In Models

Data quality and its accessibility are two main challenges one will come across in the initial stages of building a machine learning pipeline.

But problems can also arise with what is deployed and with the data fed into the model, such as:

- an incorrect model gets pushed

- incoming data is corrupted

- incoming data changes and no longer resembles datasets used during training.
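The last item in the list above, incoming data that no longer resembles the training data, can be monitored with a simple check. The following is a minimal sketch using only the standard library; the helper names, the sample values and the threshold of two standard deviations are assumptions chosen for illustration, and production systems typically use richer tests.

```python
from statistics import mean, stdev

def mean_shift(training, incoming):
    """Shift of the incoming mean, in units of the training standard deviation."""
    return abs(mean(incoming) - mean(training)) / stdev(training)

def has_drifted(training, incoming, threshold=2.0):
    # Flag a feature whose incoming mean moved far from its training mean.
    return mean_shift(training, incoming) > threshold

# Hypothetical values of one numeric feature.
training_values = [1.0, 2.0, 3.0, 4.0, 5.0]
similar_batch = [2.0, 3.0, 4.0]
shifted_batch = [8.0, 9.0, 10.0]

similar_flag = has_drifted(training_values, similar_batch)
shifted_flag = has_drifted(training_values, shifted_batch)
```

A check like this runs per feature and per batch, and a flagged batch is a prompt to inspect the data source before trusting the model's predictions.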

Today, developers are even using deep learning to analyze another deep learning network, allowing them to understand the inner workings of a model and how influence flows to the layers above or below and ultimately back down to the underlying data source.

Operating in the dark has made AI less trustworthy as a solution. For an ML model, providing a solution to a problem is the end of the story. For practitioners, however, it is crucial to explain their results to their clients in the most intuitive way.

The European Union’s General Data Protection Regulation (GDPR), which went into effect in 2018, insists on a high level of data protection for consumers and harmonizes data security regulations within the European Union (EU).

Today, companies have to inform data subjects about any personal data collection and processing and obtain their consent before collecting such data. The GDPR also threatens the use of traditional machine learning technology for automated decisions.

According to Article 14 of the GDPR, when a company uses automated decision-making tools, it must provide meaningful information about the logic involved, as well as the significance and the envisaged consequences of such processing for the data subject.

For a safer, more reliable inclusion of AI, a seamless blend of human and artificial intelligence is needed. Human intervention should also be considered in developing techniques that allow practitioners to easily evaluate the quality of the decision rules in use and reduce false positives.