Facial Capture and animation have gained an increasing interest in recent years. Visual effects firms and video game companies want to create and deliver new experiences to their audiences, whether a hyper-realistic human character or a fantasy creature created by visual effects and driven by a human performer. With rapidly growing technology and finding more efficient ways of delivering high-quality animation, and the increasing visual realism of performances using high-performance systems, the sector has motivated a surge of innovative academic and industrial developments. As a result, research and development on facial animation have drawn huge intrigue from academia and the entertainment industry conglomerates—the central aspect to help with many of these developments being technical advances in computer vision and graphics.

Some of the particular advancements noted are facial tracking and motion capture, facial tuning, dense non-rigid registration of meshes, measurement of skin rendering attributes through various models and sensing technology. Photorealistic synthesis and advanced facial editing of portrait face images find many applications in several animation fields, including special effects, extended reality, virtual worlds, and next-generation communication. An artist’s control over the facial capture technology’s semantic parameters such as geometric identity, expressions, reflectance, or scene illumination is generally desired during the content creation process. Image synthesis, too, has made huge progress with the emergence of Generative Adversarial Networks (GANs). In essence, GANs learn about the domain from the image and produce new samples from the same distribution.

For such use cases, StyleGAN is one of the most popular choices for this task. Not only does it help achieve state-of-the-art visuals, but it also demonstrates fantastic editing capabilities from its organically formed disentangled latent space. Using this property of image synthesis, many methods can be tuned over the realistic editing abilities of StyleGAN’s latent space and help perform different types of image editing techniques such as changing facial orientations, expressions, or age, by traversing the learned features. Furthermore, using synthetic image data as additional training data has proved helpful even if they are graphically rendered images with a wide array of applications such as facial reconstruction, gaze estimation, human pose, shape estimation.

What is Pivotal Tuning for Images?

The current traditional advanced facial editing techniques being in use leverage the generative power of a pre-trained StyleGAN. To successfully edit an image using StyleGAN, one must first invert the sample image into the pre-trained generator’s domain. As it turns out, StyleGAN’s latent space induces an inherent tradeoff between the image’ distortion and editability; for instance, it fiddles between maintaining the original appearance and altering some of its attributes convincingly. Practically, this means it is still challenging to apply identity-preserving facial editing techniques to faces that are out of the generator’s domain. Pivotal Image Tuning is an approach to bridge this gap. The Pivotal Image Tuning technique only slightly alters the generator so that an out-of-domain image is successfully mapped into an in-domain latent space. The key idea of pivotal tuning is making use of a brief training process that preserves the editing quality of an image while changing its portrayed identity and appearance.

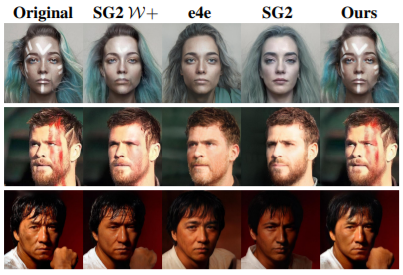

Using Pivotal Tuning Inversion, also known as PTI, an initial inverted latent model that serves as a pivot, the generator is fine-tuned. At the same time, a regularization method keeps nearby identities between images intact to maintain the effect. This training process ends up altering appearance features that represent mostly identity without affecting the editing capabilities of the model. Pivotal tuning can also adjust the generator to accommodate many faces while introducing negligible to no distortion on the rest of the image domain. It has been validated through inversion and editing metrics to show better performance compared to the current state-of-the-art methods. Using the PTI Method, advanced edits such as pose, age, or expression can be implemented to numerous images of well-known and recognizable identities. It is resilient to even harder cases, such as images including heavy make-up, elaborate hairstyles or headwear. The method can reconstruct even out-of-domain visual details, such as face paintings or hands, significantly better than state-of-the-art methods.

Here we can see an example of Real Images edited using PTI’s Multi-ID Personalized StyleGAN. All depicted images here are generated by the same model, fine-tuned on political and industrial world leaders. As seen, by applying various edit operations on these newly introduced and highly recognizable identities, The model still preserves the facial features well.

Using such a technique can be a boon to the image processing and photography industry, as every required aspect can be customised according to the needs without compromising on quality or having the bother to reshoot for images!

Getting Started With Pivotal Tuning for Images

We will try to implement a pivotal tuning model on an image using Pivotal Tuning Inversion. The Input image will be automatically edited with different edit metrics such as applying inversion, aging, changing or applying smile, and changing the pose of the image. This will show us the different capabilities of the PTI model and how effective the editing techniques are in making auto-edits without degrading the quality of the images. The creators of PTI partially inspire the following code, and their GitHub repository can be accessed from here.

Installing the Libraries

The first step would be to install our required libraries. We will use two libraries initially named, Weights & Biases, which will help us maintain our machine Learning model and provide faster and effective visualization. The other one is the lpips library, which will provide us with a perceptual similarity metric dataset to help train our model on different image perspectives. You can use the following code to install them

!pip install wandb !pip install lpips

Importing the StyleGan model,

!wget https://github.com/ninja-build/ninja/releases/download/v1.8.2/ninja-linux.zip

!sudo unzip ninja-linux.zip -d /usr/local/bin/

!sudo update-alternatives --install /usr/bin/ninja ninja /usr/local/bin/ninja 1 --force

Importing the PTI Model Weights,

import os

os.chdir('/content')

CODE_DIR = 'PTI'

!git clone https://github.com/danielroich/PTI.git $CODE_DIR

Installing Dependencies and Pretrained Models

Next up we will install all our other dependencies required to create our model, here we are also making use of Pytorch and calling Image editing functions from PTI.

import os import sys import pickle import numpy as np from PIL import Image import torch from configs import paths_config, hyperparameters, global_config from utils.align_data import pre_process_images from scripts.run_pti import run_PTI from IPython.display import display import matplotlib.pyplot as plt from scripts.latent_editor_wrapper import LatentEditorWrapper

Importing pretrained weights for our StyleGAN,

## Download pretrained StyleGAN on FFHQ 1024x1024

downloader.download_file("125OG7SMkXI-Kf2aqiwLLHyCvSW-gZk3M", os.path.join(save_path, 'ffhq.pkl'))

Downloading the Dlib tool for setting alignment and preprocessing images before feeding input to the PTI,

#Download Dlib tool for alingment

downloader.download_file("1xPmn19T6Bdd-_RfCVlgNBbfYoh1muYxR", os.path.join(save_path, 'align.dat'))

Setting up the Model Configuration

Now we will arrange and set up our model from everything that was called or imported before.

image_dir_name = 'image'

# If set to true download the desired image from the given url.If set to False, assumes you have uploaded personal image to

# 'image_original' dir

use_image_online = True

image_name = 'personal_image'

use_multi_id_training = False

global_config.device = 'cuda'

paths_config.e4e = '/content/PTI/pretrained_models/e4e_ffhq_encode.pt'

paths_config.input_data_id = image_dir_name

paths_config.input_data_path = f'/content/PTI/{image_dir_name}_processed'

paths_config.stylegan2_ada_ffhq = '/content/PTI/pretrained_models/ffhq.pkl'

paths_config.checkpoints_dir = '/content/PTI/'

paths_config.style_clip_pretrained_mappers = '/content/PTI/pretrained_models'

hyperparameters.use_locality_regularization = False

Image Preprocessing

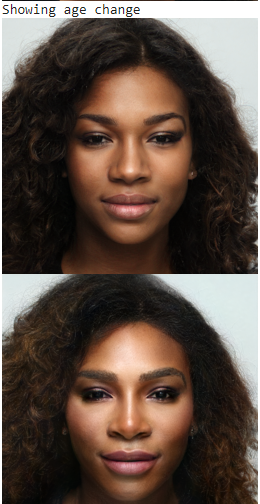

Now we will perform the first steps to pre-process our input image; we will correct the alignment of the input image before feeding it as an input to our model to achieve better results. Here I am using an image of Serena Williams to set and correct the head alignment.

## Download real face image

## If you want to use your own image skip this part and upload an image/images of your choosing to image_original dir

if use_image_online:

!wget -O personal_image.jpg https://static01.nyt.com/images/2019/09/09/opinion/09Hunter1/09Hunter1-superJumbo.jpg ## Photo of Sarena Wiliams

original_image = Image.open(f'{image_name}.jpg')#open original image

original_image

pre_process_images(f'/content/PTI/{image_dir_name}_original')

aligned_image = Image.open(f'/content/PTI/{image_dir_name}_processed/{image_name}.jpeg')

aligned_image.resize((512,512)) #setting alignment

Corrected Image :

Invert images using PTI

Training our created model

os.chdir('/content/PTI')

model_id = run_PTI(use_wandb=False, use_multi_id_training=use_multi_id_training)

Visualizing our results,

def display_alongside_source_image(images):

res = np.concatenate([np.array(image) for image in images], axis=1)

return Image.fromarray(res)

#loading image generator

def load_generators(model_id, image_name):

with open(paths_config.stylegan2_ada_ffhq, 'rb') as f:

old_G = pickle.load(f)['G_ema'].cuda()

with open(f'{paths_config.checkpoints_dir}/model_{model_id}_{image_name}.pt', 'rb') as f_new:

new_G = torch.load(f_new).cuda()

return old_G, new_G

generator_type = paths_config.multi_id_model_type if use_multi_id_training else image_name

old_G, new_G = load_generators(model_id, generator_type)

# performing image synthesis

def plot_syn_images(syn_images):

for img in syn_images:

img = (img.permute(0, 2, 3, 1) * 127.5 + 128).clamp(0, 255).to(torch.uint8).detach().cpu().numpy()[0]

plt.axis('off')

resized_image = Image.fromarray(img,mode='RGB').resize((256,256))

display(resized_image)

del img

del resized_image

torch.cuda.empty_cache()

w_path_dir = f'{paths_config.embedding_base_dir}/{paths_config.input_data_id}'

embedding_dir = f'{w_path_dir}/{paths_config.pti_results_keyword}/{image_name}'

w_pivot = torch.load(f'{embedding_dir}/0.pt'

old_image = old_G.synthesis(w_pivot, noise_mode='const', force_fp32 = True)

new_image = new_G.synthesis(w_pivot, noise_mode='const', force_fp32 = True)

print('Lower image is the inversion before Pivotal Tuning and the upper image is the product of pivotal tuning')

plot_syn_images([old_image, new_image])

As we can notice, with the help of PTI, a completely new face can be generated from an input image!

Performing different editing techniques on the Image

We will now further apply different edit metrics such as applying inversion, changing or applying smile, and changing the pose of the image.

latent_editor = LatentEditorWrapper()

latents_after_edit = latent_editor.get_single_interface_gan_edits(w_pivot, [-2, 2])

for direction, factor_and_edit in latents_after_edit.items():

print(f'Showing {direction} change')

for latent in factor_and_edit.values():

old_image = old_G.synthesis(latent, noise_mode='const', force_fp32 = True)

new_image = new_G.synthesis(latent, noise_mode='const', force_fp32 = True)

plot_syn_images([old_image, new_image])

EndNotes

In this article, we have explored what exactly PTI or Pivotal Tuning Inversion technique is. We also tried to get a better understanding and created a hands-on implementation for the model. Therefore, you can try it with more complex images and notice the difference in processing. The link to the above colab implementation can be accessed from here.

Happy Learning!