BART (Bayesian Additive Regression Tree) is an ensemble technique based on the Bayes theorem which is used to calculate the posterior probability. Fitting and inference by this model are accomplished via an iterative Bayesian backfitting Monte Claro and Markov chain algorithm that generates samples from a posterior. Based on prior and likelihood, the output/predictions are generated. Full posterior inference can be performed using this approach, including point and interval estimates of the unknown regression function as well as the marginal effects of potential predictors. In this article, we will have a detailed introduction to BARTs with their working mechanism. Following are the points and plots that this article will cover.

Table of contents

- The Posterior Probability

- What is BART?

- How sum of trees are built?

- Why priors are regularized?

- The back fitting MCMC algorithm

- How to use BART for classification?

Ensemble techniques have become popular for both regression and classification problems. To understand BART first you need to understand posterior probability in Bayesian statistics.

The Posterior probability

The probability that an event will occur before new data is collected is known as posterior probability. The distribution of this probability defines the prior probability and the likelihood of occurrence of new data. The posterior probability is the probability of event A occurring given that event B has occurred and in arithmetic terms, it is stated as:

PA|B=P(A)P(B|A)P(B)

where,

P(A) = the prior probability of A occurring

P(A|B)= the conditional probability of A given that B occurs

P(B|A) = the conditional probability of B given that A occurs

P(B) = the probability of B occurring

Are you looking for for a complete repository of Python libraries used in data science, check out here.

What is BART?

BART stands for Bayesian Additive Regression Trees. It is a Bayesian approach to nonparametric function estimation using regression trees. Regression trees rely on recursive binary partitioning of predictor space into a set of hyperrectangles to approximate some unknown function.

- Hyperreactangles are high dimensional rectangular regions. In simple language, it is a cuboid. It is better than the 2d rectangles used by regular trees as now, with the 3rd dimension, it could precisely classify data.

The summation of trees is fundamentally a multivariate additive model. These multidimensional components are more easily able to incorporate interaction effects than generalized additive models based on sums of low dimensional smoothers And compared to a single tree model, the sum-of-trees can more easily incorporate additive effects.

By keeping individual tree effects small, we can regularize the fit by imposing a prior on the sum-of-trees model. The inferences obtained from BART are based on successive iterations of the back fitting algorithm which are effectively an MCMC sample from the induced posterior probability over the sum-of-trees model space. Building a sum of trees and regularization of prior are the two major things that define the BART model.

How sum of trees are built?

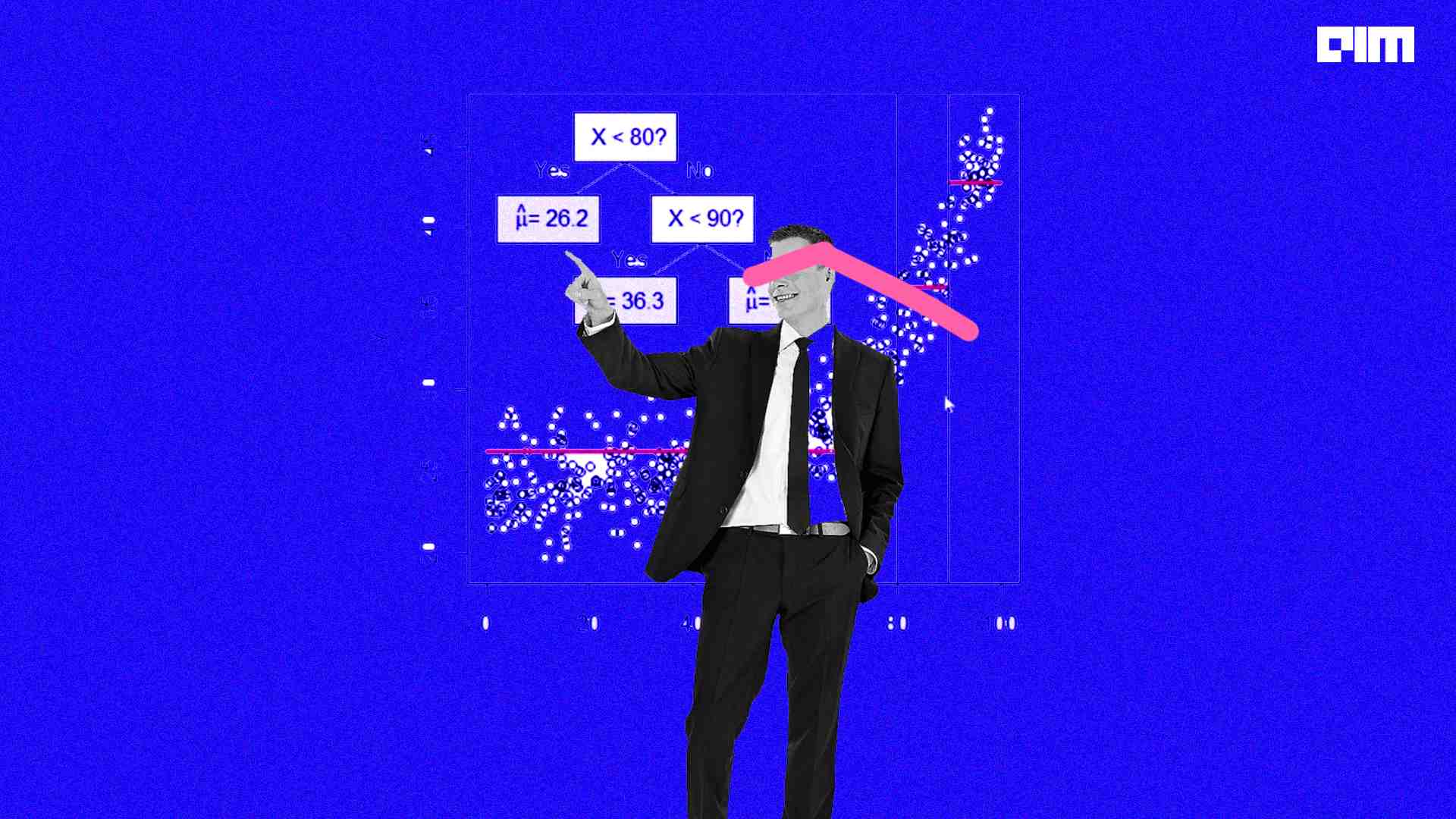

Let’s see what is the math behind the creation of a single in BART which is then added up to multiple trees. Let us assume that a set of binary trees consisting of a set of root nodes with parent nodes and a set of terminal nodes denoted as T, the terminal nodes are denoted by b and M denote a set of parameter values associated with each of the terminal nodes of the binary tree.

The parent node is binary splits of the predictor space where A is a subset of the range of continuous components associated with each terminal node (x) denoted as of the form {x ∈ A} vs {x /∈ A}. After the association is done each association is assigned a value represented by μ.

μ=g(x;T,M)

Y = μ + ε

where,

μ= the value assigned to all the association,

ε ~ N(0,σ2) the distribution of the data with mean 0 and variance as calculated (approximately normal distribution).

Hence a single tree is formed now by adding all the trees a sum of trees is formed.

Y = j=1mμj + ε

μj=g(xj;Tj,Mj)

where,

μj= the value assigned to all the associations for jth tree

ε = the distribution of the data for jth tree (approximately normal distribution)

The above diagram shows a binary tree with the root node, a two-parent node split from the root node, the parent node is further split into two terminal nodes, one leaf node and one terminal.

Why priors are regularized?

Large tree components would overwhelm the rich structure of trees, thereby limiting the advantages of the additive representation both in terms of function approximation and computation. To overcome this effectively regularize the fit by keeping the individual tree effects from being unduly influential. There are five major objectives of regularization of priors:

- The terminal node parameters of every tree should be independent.

- There should not be any correlation between trees

- Variance should differ

- Approximate normal distribution

- To decide the number of trees

All these are regularized so that the backfitting MCMC algorithm could work at its maximum capabilities. Let’s understand backfitting MCMC algorithm.

The backfitting MCMC algorithm?

MCMC algorithm consists of two probability techniques: Monte Carlo simulation technique and the Markov Chain technique. Let’s understand these two fancy techniques of sampling probabilities.

- Monte Carlo works on the principle of randomness to solve any problem having a probabilistic interpretation that is deterministic in the property. Mathematically this technique could be explained as the approximation of the average value of the random variable X, which is equal to the sum (the Σ sign) of the data randomly chosen from that population (the samples), divided by the sample size

Average(X)=1Nn=1Nxn

where,

N = sample size

xn = nth data

When the probability distribution is parametric then this technique of randomness can’t be used so the Markov Chain of sampling comes into play.

- Markov Chain states that the probability of transitioning to any given state is solely determined by the current state and the amount of time elapsed. It uses Markov property to derive this conclusion which is stated as it is sufficient to know the probability distribution of the previous state to determine the probability distribution of the current state. Markov property can be mathematically expressed as:

where,

P(Xn+1|Xn) = Probability of future occurrence of data based in previous data

When the algorithm initializes the chain with a certain number of simple single node trees, and then iterations are repeated until satisfactory convergence is obtained. At each iteration, each tree may increase or decrease the number of terminal nodes by one, or change one or two decision rules. Each µ (refer to the above equation of tree building) will change (or cease to exist or be born), and σ (variance) will change.

It is not uncommon for a tree to grow large and then subsequently collapse back down to a single node as the algorithm iterates. The sum-of-trees model, with its abundance of unidentified parameters, allows for “fit” to be freely reallocated from one tree to another. Because each move makes only small incremental changes to the fit. Simply imagine sculpting a sculpture by adding and subtracting small dabs of clay, that is the same thing happening here.

Ultimately these two techniques are used by the MCMC algorithm to derive the posterior probabilities and use those probabilities to predict the outcome.

How to use BART for classification?

BART is ready to be used for a regression problem where the output is in a continuous format. However, for a binary problem where output is a categorical variable (= 0 or 1), it needs to be changed to achieve classification. For this extension of BART, we need to impose a regularization before μ (refer above equations) to implement a Bayesian backfitting algorithm for posterior computation. By shrinking the μ we can regularize the value and the backfitting MCMC algorithm can further be used to classify the binary data.

Final Verdict

The essential components of BART are the sum-of-trees model, the regularization prior and the backfitting MCMC algorithm. This is accomplished with a regularization prior that shrinks the tree effects towards a simpler fit.

References