With the rise of deep learning on large datasets, it has become possible to extract far more value from data, largely by fitting models with millions of parameters. This comes at the cost of heavy compute requirements such as GPUs, which are not available on edge devices such as mobile phones. To address this, researchers have introduced model compression techniques, one of which is knowledge distillation: transferring the behaviour of a complex model to a smaller one with fewer parameters. In this article, we will take a look at knowledge distillation and briefly discuss its context. The major points to be discussed are listed below.

Table of contents

- What is knowledge distillation?

- Need for knowledge distillation

- Major parts of the technique

- Types of knowledge distillation

- Modes of distillation

Let’s start the discussion by understanding Knowledge Distillation.

What is knowledge distillation?

In machine learning, knowledge distillation refers to the process of transferring knowledge from a large model to a smaller one. While huge models (such as very deep neural networks or ensembles of multiple models) have larger knowledge capacity than small models, this capacity may not be utilized to its full potential.

Even if a model only employs a small percentage of its knowledge capacity, evaluating it can be computationally expensive. Knowledge distillation is the process of moving knowledge from a large model to a smaller one while maintaining validity.

Because smaller models are less expensive to evaluate, they can be deployed on less powerful hardware (such as a mobile device). Knowledge distillation has been utilized successfully in a range of machine learning applications, including object detection.

As illustrated in the figure below, knowledge distillation involves a small “student” model learning to mimic a large “teacher” model and using the teacher’s knowledge to achieve similar or superior accuracy.

Need for knowledge distillation

In general, neural networks are enormous (millions or billions of parameters), so training and deploying them requires machines with significant memory and compute capability. In many applications, however, models must run on systems with little computing power, such as mobile and edge devices.

However, ultra-light models (a few thousand parameters) may not give good accuracy when trained on their own. This is where knowledge distillation comes into play, with assistance from the teacher network. It essentially makes the model lighter while aiming to preserve accuracy.

Major parts of the technique

Knowledge distillation involves two neural networks: the teacher model and the student model.

Teacher model

An ensemble of separately trained models, or a single very large model trained with a very strong regularizer such as dropout, can serve as the larger, cumbersome model. The cumbersome model is trained first.

Student model

A smaller model that relies on the teacher network's distilled knowledge. It is trained with a different kind of training, called "distillation", which transfers knowledge from the large model to the smaller student model. The student model is more suitable for deployment because it is computationally less expensive than the teacher model, while ideally reaching similar accuracy.

Types of knowledge distillation

According to the research paper Knowledge Distillation: A Survey, there are three major types of knowledge distillation, i.e. response-based, feature-based, and relation-based distillation. Let's discuss them briefly.

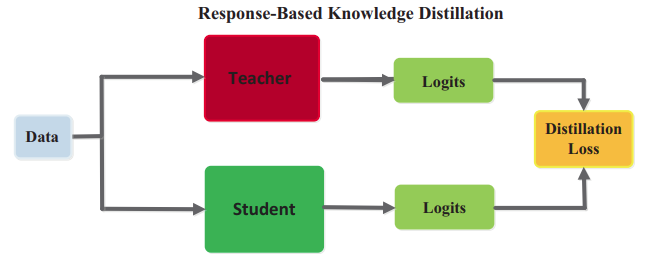

Response-based distillation

Response-based knowledge focuses on the final output layer of the teacher model. The hypothesis is that the student model will learn to mimic the teacher model's predictions. This can be done using a loss function known as the distillation loss, which captures the difference between the logits of the student and teacher models, as shown in the diagram below. As this loss is minimized during training, the student model's predictions become closer to those of the teacher.
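To make the distillation loss concrete, here is a minimal NumPy sketch. It assumes the common formulation in which both sets of logits are softened with a temperature and compared with a KL divergence; the temperature value, the function names, and the toy logits are illustrative assumptions, not something prescribed by this article.

```python
import numpy as np

def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax over the last axis."""
    scaled = logits / temperature
    scaled = scaled - scaled.max(axis=-1, keepdims=True)  # numerical stability
    exp = np.exp(scaled)
    return exp / exp.sum(axis=-1, keepdims=True)

def distillation_loss(student_logits, teacher_logits, temperature=2.0):
    """Mean KL(teacher || student) between temperature-softened distributions.

    The temperature**2 factor is a commonly used scaling (an assumption here)
    that keeps gradient magnitudes comparable across temperatures.
    """
    p_teacher = softmax(teacher_logits, temperature)
    p_student = softmax(student_logits, temperature)
    eps = 1e-12  # avoids log(0)
    kl = np.sum(p_teacher * (np.log(p_teacher + eps) - np.log(p_student + eps)), axis=-1)
    return float(kl.mean() * temperature ** 2)

# Toy logits for a batch of two samples and three classes (made-up numbers).
teacher_logits = np.array([[4.0, 1.0, 0.5], [0.2, 3.0, 0.1]])
far_student = np.array([[0.5, 1.0, 4.0], [3.0, 0.2, 0.1]])
close_student = np.array([[3.8, 1.1, 0.4], [0.3, 2.9, 0.2]])

loss_far = distillation_loss(far_student, teacher_logits)
loss_close = distillation_loss(close_student, teacher_logits)
print(loss_far, loss_close)
```

A student whose logits are close to the teacher's gets a smaller loss than one whose logits disagree, which is the signal the student is trained on.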

Feature-based distillation

Deep neural networks excel at learning multiple levels of feature representation with increasing abstraction. A trained teacher model also captures knowledge of the data in its intermediate layers, which is particularly important for deep neural networks. The intermediate layers learn to discriminate between specific features, and this knowledge can then be used to train a student model.

The goal, as illustrated in the figure below, is to train the student model to learn the same feature activations as the teacher model. This is accomplished by minimizing the difference between the feature activations of the teacher and student models.
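A minimal sketch of such a feature-matching loss is shown here, using NumPy. It assumes a mean squared error between activations and a linear projection that maps the student's narrower feature vector to the teacher's width; the projection, the shapes, and the random stand-in activations are all assumptions made for illustration.

```python
import numpy as np

def feature_distillation_loss(student_features, teacher_features, projection):
    """Mean squared error between teacher features and projected student features.

    student_features: (batch, d_student)
    teacher_features: (batch, d_teacher)
    projection:       (d_student, d_teacher), a hypothetical learnable adapter
    """
    projected = student_features @ projection
    return float(np.mean((projected - teacher_features) ** 2))

rng = np.random.default_rng(0)
teacher_features = rng.normal(size=(8, 16))   # stand-in for teacher activations
student_features = rng.normal(size=(8, 4))    # stand-in for student activations
projection = rng.normal(size=(4, 16)) * 0.1

loss = feature_distillation_loss(student_features, teacher_features, projection)

# If the projected student features reproduce the teacher's exactly, the loss is zero.
matched = feature_distillation_loss(
    student_features, student_features @ projection, projection
)
print(loss, matched)
```

In practice the projection would be trained together with the student, and the features would come from chosen intermediate layers of the two networks.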

Relation-based distillation

Response-based and feature-based knowledge both use the outputs of specific layers in the teacher model. Relation-based knowledge goes further and explores the relationships between different layers or data samples. For example, a flow of solution process (FSP), defined by the Gram matrix between two layers, has been used to capture the relationships between different feature maps.

The FSP matrix summarizes the relationships between pairs of feature maps. It is calculated by taking the inner products of the features of two layers. In a related approach, the correlations between feature maps serve as the distilled knowledge, and singular value decomposition is used to extract it. More generally, relation-based knowledge can be modelled in terms of relationships between feature maps, graphs, similarity matrices, feature embeddings, or probabilistic distributions based on feature representations. The paradigm is depicted in the figure below.
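The FSP computation can be sketched in a few lines of NumPy. The sketch assumes the two layers share the same spatial size and that the inner products are averaged over spatial positions; the function names, shapes, and random feature maps are illustrative, and the teacher and student are assumed to have matching channel counts so that their FSP matrices can be compared directly.

```python
import numpy as np

def fsp_matrix(features_a, features_b):
    """FSP (Gram) matrix between the feature maps of two layers.

    features_a: (height, width, channels_a)
    features_b: (height, width, channels_b)
    Returns a (channels_a, channels_b) matrix of channel-wise inner products,
    averaged over the height * width spatial positions.
    """
    height, width, _ = features_a.shape
    return np.einsum("hwi,hwj->ij", features_a, features_b) / (height * width)

def fsp_loss(teacher_pair, student_pair):
    """Mean squared difference between teacher and student FSP matrices."""
    g_teacher = fsp_matrix(*teacher_pair)
    g_student = fsp_matrix(*student_pair)
    return float(np.mean((g_teacher - g_student) ** 2))

rng = np.random.default_rng(0)
# Random stand-ins for the feature maps of two layers in each network.
teacher_pair = (rng.normal(size=(6, 6, 3)), rng.normal(size=(6, 6, 5)))
student_pair = (rng.normal(size=(6, 6, 3)), rng.normal(size=(6, 6, 5)))

g = fsp_matrix(*teacher_pair)
loss = fsp_loss(teacher_pair, student_pair)
print(g.shape, loss)
```

The student is then trained so that its FSP matrices approach the teacher's, rather than matching any single layer's output.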

Modes of distillation

The distillation modes (i.e. training schemes) for both teacher and student models are discussed in this section. Depending on whether the teacher model is updated simultaneously with the student model or not, the learning schemes of knowledge distillation can be divided into three main categories: offline, online, and self-distillation.

Offline distillation

The majority of previous knowledge distillation methods operate offline, with a pre-trained teacher model guiding the student model. The teacher model is first pre-trained on a training dataset in this mode, and then knowledge from the teacher model is distilled to train the student model.

Given recent advances in deep learning, a wide range of pre-trained neural network models that can serve as the teacher, depending on the use case, are freely available. Offline distillation is a well-established technique in deep learning that is also simple to implement.
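The two-stage structure of offline distillation can be sketched with a toy NumPy example. Everything in it is an assumption made for illustration: the "pre-trained" teacher is just a fixed two-layer network with random weights, the student is a single linear layer, and the data is random. The point is only that the teacher's outputs are computed once and never updated while the student is trained on them.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
x = rng.normal(size=(64, 5))  # toy inputs: 64 samples, 5 features

# Stage 1 (assumed already done): a frozen teacher. Random weights stand in
# for a pre-trained network here.
w1 = rng.normal(size=(5, 32))
w2 = rng.normal(size=(32, 3))
teacher_probs = softmax(np.tanh(x @ w1) @ w2)  # computed once, never updated

# Stage 2: train a much smaller student (one linear layer) on the soft targets.
w_student = np.zeros((5, 3))

def soft_cross_entropy(w):
    """Cross-entropy between the teacher's soft targets and the student's output."""
    student_probs = softmax(x @ w)
    return float(-np.mean(np.sum(teacher_probs * np.log(student_probs + 1e-12), axis=1)))

initial_loss = soft_cross_entropy(w_student)
for _ in range(200):
    student_probs = softmax(x @ w_student)
    # Gradient of the soft cross-entropy with respect to the student weights.
    grad = x.T @ (student_probs - teacher_probs) / len(x)
    w_student -= 0.5 * grad
final_loss = soft_cross_entropy(w_student)
print(initial_loss, final_loss)
```

Only the student's weights change inside the loop, which is what makes this scheme "offline".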

Online distillation

Although offline distillation methods are simple and effective, they depend on having a large-capacity, high-performance teacher model available in advance. Online distillation has been proposed to overcome this limitation and to improve the performance of the student model further, especially when such a teacher is not available. In online distillation, the teacher model and the student model are updated at the same time, and the entire knowledge distillation framework is trainable end to end.
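A toy NumPy sketch of this simultaneous update follows. It is one possible arrangement, not a specific published method: the teacher learns from the hard labels, while the student learns from both the labels and the teacher's current predictions, and both are updated in the same loop. For brevity both models are linear and the same size, and the data, labels, learning rate, and step count are made up; a real setup would typically use networks of different capacity.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
x = rng.normal(size=(128, 4))
labels = np.argmax(x @ rng.normal(size=(4, 3)), axis=1)  # synthetic labels
y = np.eye(3)[labels]                                    # one-hot targets

w_teacher = np.zeros((4, 3))
w_student = np.zeros((4, 3))
lr = 0.2

def cross_entropy(w):
    """Mean cross-entropy against the hard labels."""
    return float(-np.mean(np.sum(y * np.log(softmax(x @ w) + 1e-12), axis=1)))

teacher_loss_start = cross_entropy(w_teacher)
for _ in range(300):
    p_teacher = softmax(x @ w_teacher)
    p_student = softmax(x @ w_student)
    # Teacher: cross-entropy on the hard labels only.
    grad_teacher = x.T @ (p_teacher - y) / len(x)
    # Student: cross-entropy on the labels plus cross-entropy on the
    # teacher's current (still changing) soft predictions.
    grad_student = x.T @ ((p_student - y) + (p_student - p_teacher)) / len(x)
    # Both models are updated in the same step.
    w_teacher -= lr * grad_teacher
    w_student -= lr * grad_student
teacher_loss_end = cross_entropy(w_teacher)

student_accuracy = float(np.mean(np.argmax(x @ w_student, axis=1) == labels))
print(teacher_loss_end, student_accuracy)
```

Unlike the offline scheme, the teacher's predictions here are a moving target, since they are recomputed at every step.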

Self-distillation

In self-distillation, the same network is used for both the teacher and the student model. It can be regarded as a special case of online distillation. In one form, knowledge from the deeper layers of the network is distilled into its shallower layers. In another, knowledge from the earlier epochs of the network (acting as teacher) is transferred to its later epochs (acting as student).
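The deep-to-shallow form can be sketched as a single network with two classifier heads, one attached to an early block and one at the end. The NumPy sketch below only computes the self-distillation loss for one batch; the architecture, the random weights, and the choice of KL divergence are assumptions for illustration.

```python
import numpy as np

def softmax(z):
    """Row-wise softmax."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

rng = np.random.default_rng(0)
x = rng.normal(size=(16, 8))

# One network with two blocks and a classifier head after each block
# (random weights stand in for a network that is being trained).
w_block1 = rng.normal(size=(8, 12))
w_block2 = rng.normal(size=(12, 12))
w_shallow_head = rng.normal(size=(12, 3))
w_deep_head = rng.normal(size=(12, 3))

h1 = np.tanh(x @ w_block1)                     # shallow features
h2 = np.tanh(h1 @ w_block2)                    # deeper features
shallow_probs = softmax(h1 @ w_shallow_head)   # "student" exit
deep_probs = softmax(h2 @ w_deep_head)         # "teacher" exit, same network

# KL(deep || shallow): pushes the shallow exit toward the deep exit's predictions.
eps = 1e-12
self_distillation_loss = float(np.mean(np.sum(
    deep_probs * (np.log(deep_probs + eps) - np.log(shallow_probs + eps)), axis=1
)))
print(self_distillation_loss)
```

During training this term would be added to the usual supervised loss, typically treating the deep exit's predictions as fixed targets for the shallow exit.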

Final words

In this post, we have discussed what knowledge distillation is and briefly looked at why it is needed, the major parts of the technique, the types of knowledge distillation, and finally the modes of distillation. The official Keras website has a practical implementation of knowledge distillation, in which the code follows the same teacher-student setup we discussed in this post. For more hands-on details on this process, I recommend you go through that implementation.