Machine learning uses large amounts of historical data to let electronic systems learn autonomously and apply that knowledge to analysis, prediction, and decision making. Running machine learning models on embedded devices is generally known as embedded machine learning. It lets the data a device collects feed automated business processes and support better-informed predictions. Embedded devices of this kind can handle many industrial tasks, and they bring machine learning algorithms to low-power hardware such as microcontrollers.

Machine learning in embedded devices has many benefits. Because the model runs on the device, data does not have to be transferred to and stored on cloud servers, which reduces the risk of data breaches and privacy leaks in transit. It also reduces the risk of theft of intellectual property, personal data, and business secrets. Running ML models locally also saves bandwidth and network resources. Embedded devices that run ML models are more sustainable as well: the microcontrollers they use are power efficient, so their carbon footprint is far lower. Finally, embedded systems can respond faster than cloud-based systems, because they avoid the network latency that comes with transferring large amounts of data to the cloud.
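To make the bandwidth argument concrete, here is a rough back-of-the-envelope sketch. Every number in it (an audio sensor sampling at 16 kHz with 16-bit samples, one 4-byte result per second) is a hypothetical value chosen for illustration, not a measurement from any particular device.

```python
# Back-of-the-envelope comparison: stream raw sensor data to the cloud
# versus upload only on-device inference results.
# All constants are hypothetical and exist only for illustration.
SECONDS_PER_DAY = 86_400
SAMPLE_RATE_HZ = 16_000       # assumed audio samples per second
BYTES_PER_SAMPLE = 2          # assumed 16-bit samples
RESULT_BYTES = 4              # assumed size of one inference result message
INFERENCES_PER_SECOND = 1     # assumed inference rate


def raw_stream_bytes_per_day(sample_rate_hz: int, bytes_per_sample: int) -> int:
    """Bytes uploaded per day if every raw sample is sent to the cloud."""
    return sample_rate_hz * bytes_per_sample * SECONDS_PER_DAY


def on_device_bytes_per_day(result_bytes: int, inferences_per_second: int) -> int:
    """Bytes uploaded per day if only inference results leave the device."""
    return result_bytes * inferences_per_second * SECONDS_PER_DAY


raw = raw_stream_bytes_per_day(SAMPLE_RATE_HZ, BYTES_PER_SAMPLE)
local = on_device_bytes_per_day(RESULT_BYTES, INFERENCES_PER_SECOND)

print(f"raw streaming: {raw / 1e9:.2f} GB/day")
print(f"on-device:     {local / 1e6:.2f} MB/day")
print(f"reduction:     {raw // local}x")
```

Under these assumed numbers the upload volume drops by a factor of 8000. Real savings depend entirely on the sensor, the model, and how often results need to be reported.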

Brief overview of ML on the edge

Edge ML is a way to relieve congestion in cloud networks. It allows data to be processed locally, on local servers or on the device itself, using machine learning and deep learning algorithms. Devices that rely on edge machine learning send data to the cloud only when needed, rather than sending every piece of information. The algorithm can execute some tasks on the device, which reduces the strain on the cloud network and enables real-time processing that was not possible before. Many platforms, such as Amazon Echo and Google Home, currently use this technology.
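One common way to decide what is "needed" in the cloud is a confidence threshold: the device acts on predictions it is sure about and forwards only the uncertain ones. The sketch below illustrates that idea. The `Prediction` type, the `route` function, and the 0.8 threshold are hypothetical names and values invented for this example, not part of any specific platform.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Prediction:
    """Output of a (hypothetical) on-device model."""
    label: str
    confidence: float  # model confidence between 0.0 and 1.0


def route(
    predictions: List[Prediction], threshold: float = 0.8
) -> Tuple[List[Prediction], List[Prediction]]:
    """Split predictions into those handled on the device and those
    forwarded to the cloud because the local model is not confident."""
    handled_locally: List[Prediction] = []
    sent_to_cloud: List[Prediction] = []
    for p in predictions:
        if p.confidence >= threshold:
            handled_locally.append(p)
        else:
            sent_to_cloud.append(p)
    return handled_locally, sent_to_cloud


# Example inputs; the labels and scores are made up.
preds = [
    Prediction("wake_word", 0.95),
    Prediction("unknown", 0.40),
    Prediction("music", 0.80),
]
local_preds, cloud_preds = route(preds, threshold=0.8)
print("handled on device:", [p.label for p in local_preds])
print("sent to cloud:    ", [p.label for p in cloud_preds])
```

Raising the threshold sends more traffic to the cloud in exchange for fewer local mistakes; lowering it does the opposite.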

TinyML, by contrast, has excited enthusiasts since the concept was introduced. It is one of the newest technologies in this space, and it brings machine learning to microcontrollers almost everywhere. The privacy it offers makes it an attractive alternative to cloud and edge-based platforms. It is known for its low-power models, which are designed to work within tight on-chip memory constraints. Its ability to run complex deep learning models embedded in an electronic device opens up numerous avenues for innovation. TinyML doesn’t need an edge server or the cloud; it can run locally on a microcontroller that manages actuators and sensors.
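A widely used way to fit a model into tight on-chip memory is to store its weights as 8-bit integers instead of 32-bit floats. The sketch below shows simple symmetric per-tensor int8 quantization in plain Python. It is an illustration of the general idea only, not the scheme of any particular TinyML framework, and the function names and weight values are made up for the example.

```python
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: each weight w is stored as an
    integer q in [-127, 127] such that w is approximately q * scale."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized: List[int], scale: float) -> List[float]:
    """Recover approximate float weights from the int8 representation."""
    return [q * scale for q in quantized]


# Made-up weights standing in for one layer of a model.
weights = [0.5, -1.0, 0.25, 0.0, 0.9]
q_weights, scale = quantize_int8(weights)
restored = dequantize(q_weights, scale)

# Storage cost: 4 bytes per float32 weight versus 1 byte per int8 weight.
float32_bytes = len(weights) * 4
int8_bytes = len(weights) * 1
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 weights:", q_weights)
print(f"storage: {float32_bytes} bytes -> {int8_bytes} bytes")
print(f"largest round-trip error: {max_error:.5f}")
```

The weights take a quarter of the space, at the cost of a small rounding error bounded by half of `scale` per weight. Whether that error is acceptable has to be checked against the model's accuracy on real data.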

The market for embedded ML has been active since the technology emerged, and many products have captured the attention of enthusiasts. Here are a few devices that indicate a bright future for ML on embedded systems:

1) NVIDIA Jetson Xavier NX-based Industrial AI smart camera

ADLINK Technology has introduced the industry’s first industrial smart camera built on NVIDIA’s Jetson Xavier NX. It combines high performance with a small form factor, and it opens the door to AI innovation in manufacturing, logistics, healthcare, agriculture, and many other business sectors. The product is about ten times more efficient than its predecessor. It is a compact, reliable, and powerful product for edge AI applications and a good match for AI software providers.

2) NVIDIA Jetson TX2 NX

NVIDIA has unveiled another embedded computing module that brings AI performance to entry-level embedded and edge products. It is faster than the Jetson Nano and shares its form factor and pin compatibility. Fast development, together with a unique combination of form factor, performance, and power advantages, makes it a strong choice as an AI product platform.

3) Google’s Edge TPU

Google purpose-built the Edge TPU to run AI at the edge. It complements Cloud TPU and Google Cloud services to provide end-to-end, cloud-to-edge hardware and software infrastructure for deploying customers’ AI-based solutions. It has a low carbon footprint and consumes very little power. Users can use the Edge TPU to deploy high-quality machine learning inference at the edge through the Coral platform, which is built for ML at the edge.

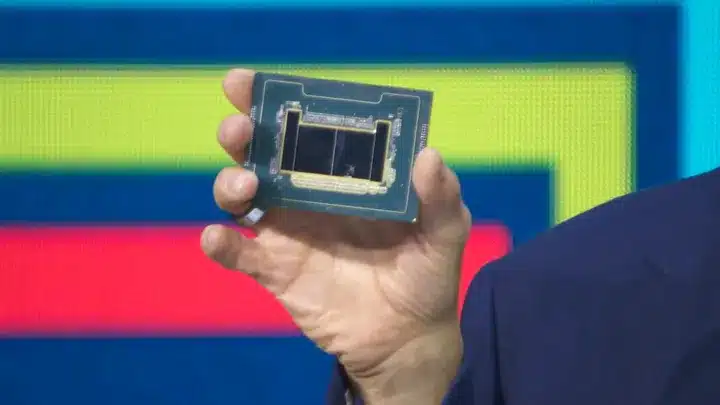

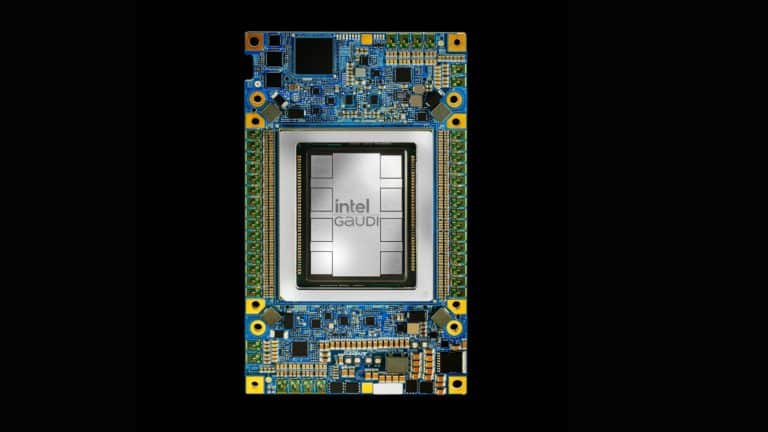

4) Intel Movidius

Intel Movidius VPUs enable demanding computer vision and edge AI workloads with extreme efficiency. They minimize data movement by coupling parallel programmable compute with workload-specific hardware acceleration in a novel architecture. They power intelligent cameras, edge servers, and AI appliances that run deep neural networks and computer vision applications, which can be of great advantage in industrial automation.

5) Apple’s Xnor

Apple has long been synonymous with innovation and has introduced custom AI chips that run AI workloads on its flagship devices. To further these efforts, Apple acquired Xnor.ai for a reported $200 million. Xnor.ai has introduced a tiny device that works like a solar-powered calculator and runs state-of-the-art object recognition. Its technology can operate independently of the cloud for tasks such as facial recognition and natural language processing while safeguarding users’ privacy.

The tech giants have increased their spending on edge and TinyML systems. There is also strong demand for talent, from embedded software engineers to embedded hardware engineers.