When deep learning is combined with NLP, a variety of interesting applications emerge. Language translation, sentiment analysis and name generation are some of them, and masked language modelling is another. Masked language modelling is a way to predict a word that was intentionally hidden in a sentence. In this article, we will discuss masked language modelling in detail, along with an example of its implementation using BERT. The major points to be discussed in the article are listed below.

Table of contents

- What is masked language modelling?

- Applications of masked language models

- Masked language modelling using BERT

Let’s start with understanding masked language modelling.

What is masked language modelling?

Masked language modelling, like masked image modelling, can be considered similar to autoencoding, which works by reconstructing the original input from a corrupted or shuffled version of it. As the name suggests, the corruption here is masking: we mask words in an input sequence or sentence, and the model has to predict the masked words to complete the sentence. We can compare this type of modelling to filling in the blanks in an exam paper. The example below shows how masked language modelling works.

Question: what is ______ name?

Answer: what is my/your/his/her/its name.

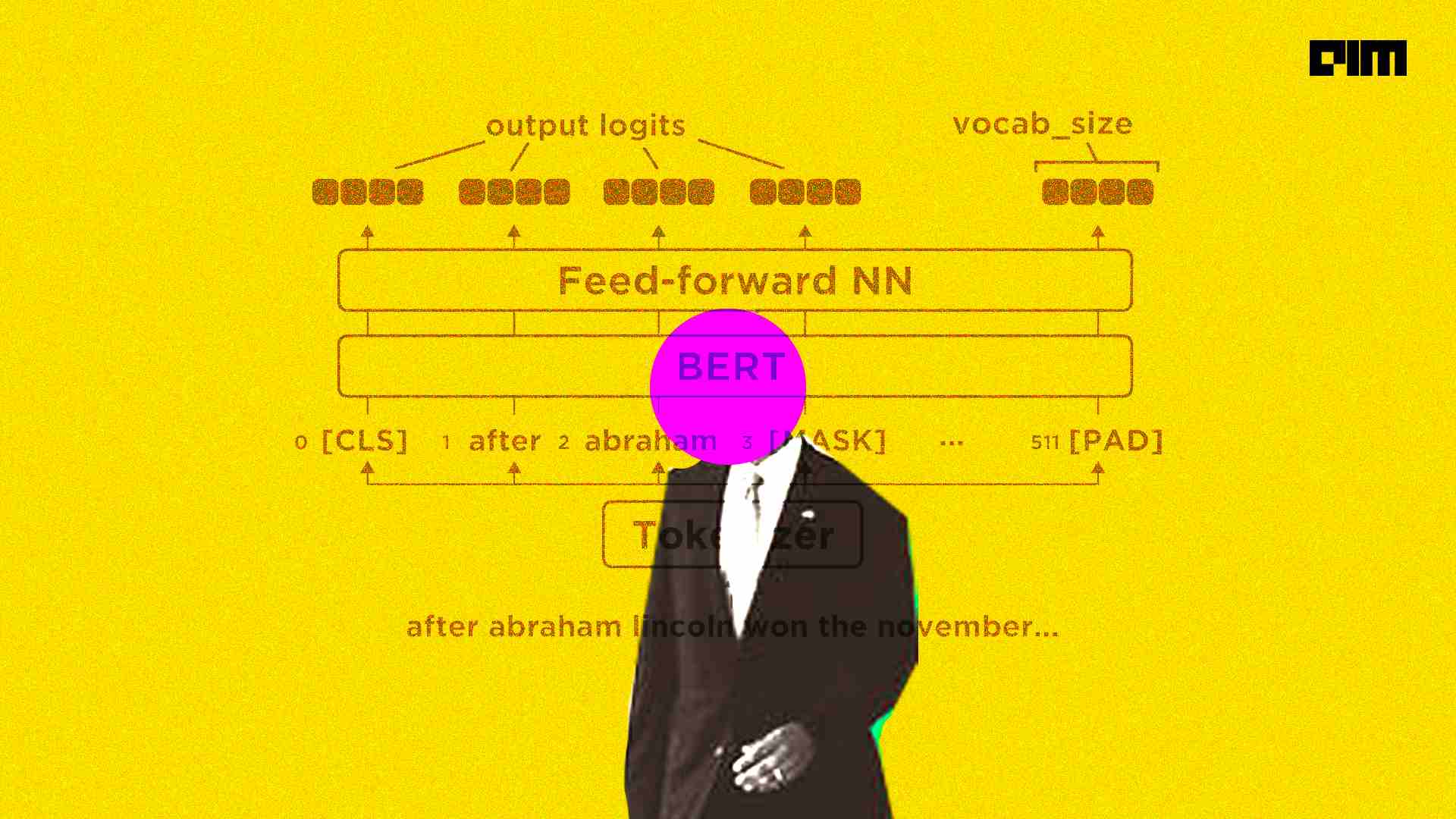
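The fill-in-the-blank idea above can be sketched in a few lines of plain Python. The `mask_word` helper and its whitespace tokenisation are assumptions made for illustration only; real models such as BERT work on subword tokens rather than whole words.

```python
def mask_word(sentence, position, mask_token="[MASK]"):
    """Hide the word at `position` and return (masked sentence, hidden word)."""
    words = sentence.split()
    target = words[position]       # the word the model would have to predict
    words[position] = mask_token   # replace it with the mask placeholder
    return " ".join(words), target


masked, target = mask_word("what is your name?", 2)
print(masked)  # what is [MASK] name?
print(target)  # your
```

The masked sentence is what the model sees as input, and the hidden word is the label it is trained to recover.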

To do this, the model needs to learn the statistical properties of word sequences. Because it has to predict one or a few words rather than a whole sentence or paragraph, it predicts each masked word from the other words present in the sentence. The figure accompanying this section illustrates how a masked language model works.

We have now seen what a masked language model is. Let’s see where we can use it.

Applications of masked language models

Masked language models are useful wherever we need to predict a word from its context. Since words can have different meanings in different places, the model needs to learn deep, multiple representations of words. These models have shown improved performance on downstream tasks such as syntactic tasks, which rely on the lower-layer representations of a model rather than the higher-layer ones. We may also use them to learn deep bidirectional representations of words: the model should be able to learn the context of a word from the start of the sentence as well as from its end.

We have now seen where masked language modelling is needed. Let’s look at its implementation.

Masked language modelling using BERT

In this article, we are going to use the BERT base uncased model for masked language modelling. This model is pre-trained on English text with a masked language modelling objective, using the BookCorpus dataset, which consists of 11,038 books, and English Wikipedia with lists, tables and headers excluded.

For masked language modelling, BERT takes a sentence as input, masks 15% of its words and runs the masked sentence through the model, which predicts the masked words from the context around them. One benefit of this approach is that the model learns a bidirectional representation of the sentence, which makes the predictions more precise.
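As a rough sketch of this masking step, the snippet below picks about 15% of the tokens in a sentence and corrupts them. The 80/10/10 split between [MASK], a random token and the unchanged token follows the procedure described for BERT pre-training; the `mask_tokens` helper, the toy vocabulary and the whitespace tokens are illustrative assumptions, not the library's implementation.

```python
import random


def mask_tokens(tokens, vocab, mask_prob=0.15, seed=0):
    """Return (corrupted tokens, labels); labels are None where nothing is predicted."""
    rng = random.Random(seed)
    n_to_mask = max(1, round(len(tokens) * mask_prob))
    chosen = rng.sample(range(len(tokens)), n_to_mask)

    corrupted = list(tokens)
    labels = [None] * len(tokens)
    for i in chosen:
        labels[i] = tokens[i]                 # the model must recover the original token
        roll = rng.random()
        if roll < 0.8:
            corrupted[i] = "[MASK]"           # 80%: replace with the mask token
        elif roll < 0.9:
            corrupted[i] = rng.choice(vocab)  # 10%: replace with a random token
        # remaining 10%: keep the original token unchanged
    return corrupted, labels


tokens = "the quick brown fox jumps over the lazy dog near the river bank".split()
corrupted, labels = mask_tokens(tokens, vocab=sorted(set(tokens)))
print(corrupted)
print(labels)
```

Only the positions with a label contribute to the training loss, so the model cannot simply copy its input.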

During pre-training, the model is also given pairs of sentences. It concatenates two masked sentences into a single input and tries to predict whether the second sentence followed the first in the original text, so it also learns how sentences relate to each other.
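The layout of such a two-sentence input can be pictured as follows. This is a simplified sketch: `build_pair_input` is a hypothetical helper, it splits on whitespace instead of using BERT's WordPiece tokenizer, and it only shows where the [CLS] and [SEP] special tokens go and how segment ids tell the two sentences apart.

```python
def build_pair_input(sentence_a, sentence_b):
    """Join two sentences BERT-style and mark which segment each token belongs to."""
    tokens_a = sentence_a.split()
    tokens_b = sentence_b.split()
    tokens = ["[CLS]"] + tokens_a + ["[SEP]"] + tokens_b + ["[SEP]"]
    # Segment 0 covers [CLS], sentence A and the first [SEP];
    # segment 1 covers sentence B and the final [SEP].
    segment_ids = [0] * (len(tokens_a) + 2) + [1] * (len(tokens_b) + 1)
    return tokens, segment_ids


pair_tokens, segment_ids = build_pair_input("what is [MASK] name", "my name is [MASK]")
for token, segment in zip(pair_tokens, segment_ids):
    print(segment, token)
```

With the transformers tokenizer, passing two strings to the tokenizer call produces the corresponding `token_type_ids` automatically.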

We can get this model through the transformers library, which can be installed using the following line of code:

!pip install transformers

After installation, we are ready to use the pre-trained models through the pipeline function of the transformers library.

Let’s import the library.

from transformers import pipeline

Instantiating the model:

model = pipeline('fill-mask', model='bert-base-uncased')

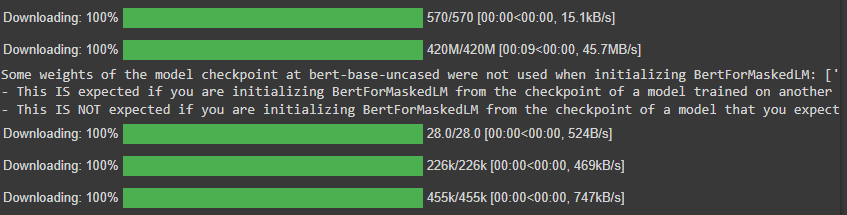

Output:

After instantiation, we are ready to predict masked words. This model requires us to put [MASK] in the sentence in place of a word that we desire to predict. For example:

pred = model("What is [MASK] name?")

pred

Output:

In the above output, we can see that the predictions are as precise as we expected. Along with each predicted word, we also get its score and its token id.
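To make the structure of this output concrete, the sketch below ranks the entries of a fill-mask result. The pipeline returns a list of dictionaries with the keys `score`, `token`, `token_str` and `sequence`; the `sample_pred` values here are made-up placeholders for illustration, not real model output, and `top_predictions` is a hypothetical helper.

```python
def top_predictions(pred, k=3):
    """Return the k highest-scoring (word, score) pairs from a fill-mask result."""
    ranked = sorted(pred, key=lambda p: p["score"], reverse=True)
    return [(p["token_str"], round(p["score"], 3)) for p in ranked[:k]]


# Placeholder values for illustration only; NOT real model output.
sample_pred = [
    {"score": 0.25, "token": 1, "token_str": "her", "sequence": "what is her name?"},
    {"score": 0.5, "token": 2, "token_str": "your", "sequence": "what is your name?"},
    {"score": 0.125, "token": 3, "token_str": "his", "sequence": "what is his name?"},
]

print(top_predictions(sample_pred, k=2))  # [('your', 0.5), ('her', 0.25)]
```

With the real pipeline, the same helper can be called on the `pred` variable from the example above.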

We can also use this model to extract the features of any text in the following ways.

Using PyTorch

#importing library

from transformers import BertTokenizer, BertModel

#defining tokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

#instantiating the model

model = BertModel.from_pretrained("bert-base-uncased")

#defining text

text = "What is your name?"

#extracting features

encoded_input = tokenizer(text, return_tensors='pt')

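model(**encoded_input)

Output: a model output object whose last_hidden_state field holds one 768-dimensional vector for each input token.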

Using TensorFlow

#importing library

from transformers import BertTokenizer, TFBertModel

#defining tokenizer

tokenizer = BertTokenizer.from_pretrained('bert-base-uncased')

#instantiating the model

model = TFBertModel.from_pretrained("bert-base-uncased")

text = "What is your name?"

#extracting features

encoded_input = tokenizer(text, return_tensors='tf')

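model(encoded_input)

Output: a model output object whose last_hidden_state field holds one 768-dimensional vector for each input token, this time as a TensorFlow tensor.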
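One common way to turn these per-token features into a single sentence vector is to average them. The sketch below shows the idea on plain nested lists standing in for the model's `last_hidden_state`; `mean_pool` is a hypothetical helper, and the tiny 3-dimensional vectors are toy values (BERT base uses 768 dimensions).

```python
def mean_pool(token_vectors, attention_mask):
    """Average the vectors of real tokens, skipping padding positions (mask == 0)."""
    kept = [vec for vec, keep in zip(token_vectors, attention_mask) if keep]
    if not kept:
        raise ValueError("attention_mask selects no tokens")
    dim = len(kept[0])
    return [sum(vec[d] for vec in kept) / len(kept) for d in range(dim)]


# Toy values: two real tokens followed by one padding position.
token_vectors = [[1.0, 2.0, 3.0], [3.0, 4.0, 5.0], [9.0, 9.0, 9.0]]
attention_mask = [1, 1, 0]

print(mean_pool(token_vectors, attention_mask))  # [2.0, 3.0, 4.0]
```

Averaging is only one possible choice; the vector of the [CLS] token is another frequently used sentence representation.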

One limitation of the model is that it can give biased predictions even though it was trained on fairly neutral data. For example:

model = pipeline('fill-mask', model='bert-base-uncased')

pred = model("he can work as a [MASK].")

pred

Output:

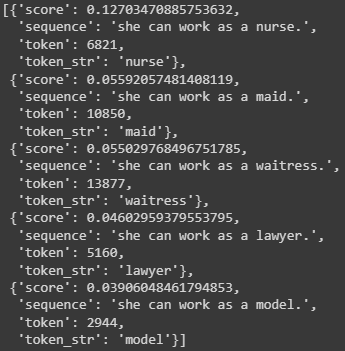

pred = model("She can work as a [MASK].")

pred

Output:

Here we can see the biased results of the model. This is how we can use a masked language model with the BERT transformer.

Final word

In this article, we have gone through a general introduction to masked language modelling and looked at where it can be used. Along with this, we have gone through the implementation of the BERT base uncased model for masked language modelling.
