Explainability and interpretability are important in machine learning. They help non-technical users understand the modelling procedure and its results, and they help developers understand how black-box algorithms work. There will always be a need for explainability and interpretability in modelling. Auto-ViML is a library that supports explainability and interpretability while building machine learning models. In this article, we introduce Auto-ViML through a practical application. The major points to be discussed in this article are listed below.

Table of contents

- What is Auto-ViML?

- Why is Auto-ViML useful?

- Implementation with Auto-ViML

Let’s start by understanding what Auto-ViML is.

What is Auto-ViML?

Auto-ViML is a Python library for building high-performance, interpretable machine learning models. The name Auto-ViML stands for Automatic Variable Interpretable Machine Learning. The word variable is in the name because the library automatically tries multiple models with multiple combinations of the dataset's variables. Interpretable refers to making the modelling procedure, and the resulting model, easy to interpret.

To sum up its main features: the library builds interpretable models using the smallest set of features that the task actually needs. Based on many trials, Auto-ViML can build models with 20 to 99% fewer features than a traditional model that uses all features, while achieving similar performance; the exact reduction varies by dataset.

Why is Auto-ViML useful?

Auto-ViML helps with the following parts of the modelling procedure:

- Data cleaning: the library suggests changes to the dataset, such as removing missing values, formatting variables, and adding variables.

- Variable classification: Auto-ViML classifies variables automatically. It recognizes what kind of values a variable contains, such as numeric, categorical, NLP (free text), and date-time.

- Feature engineering: Auto-ViML performs automatic feature selection. Even with a large dataset and limited domain knowledge, it can help us find the features that are relevant to the task.

- Graph representation of results: Auto-ViML produces graphs and visualizations of the results that help us understand the performance of the models.
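To make the variable-classification idea more concrete, here is a minimal sketch of how a column's values might be sorted into the four types listed above. The rules (an assumed `YYYY-MM-DD` date format and a word-count cutoff for free text) and the helper names are our own hypothetical choices for illustration, not Auto-ViML's actual logic.

```python
from datetime import datetime


def _is_number(value):
    """True if the value can be parsed as a float."""
    try:
        float(value)
        return True
    except (TypeError, ValueError):
        return False


def _is_date(value, fmt="%Y-%m-%d"):
    """True if the value parses as a date in the given (assumed) format."""
    try:
        datetime.strptime(str(value), fmt)
        return True
    except ValueError:
        return False


def classify_column(values, nlp_min_words=4):
    """Hypothetical heuristic: label a column 'numeric', 'datetime', 'nlp' or 'categorical'."""
    present = [v for v in values if v is not None and v != ""]
    if not present:
        return "categorical"  # nothing to inspect; fall back to categorical
    if all(_is_number(v) for v in present):
        return "numeric"
    if all(_is_date(v) for v in present):
        return "datetime"
    # Free text: values average at least nlp_min_words words
    avg_words = sum(len(str(v).split()) for v in present) / len(present)
    if avg_words >= nlp_min_words:
        return "nlp"
    return "categorical"


print(classify_column([22, 38.0, "26"]))                  # numeric
print(classify_column(["2021-06-30", "2022-01-15"]))      # datetime
print(classify_column(["a long night in cold water", "great ship, terrible ending for many"]))  # nlp
print(classify_column(["male", "female", "male"]))        # categorical
```

Real libraries use many more signals (number of unique values, data types reported by pandas, ID-like columns), so treat this only as an illustration of the idea.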

Taken together, these points show that the library can be helpful in a variety of modelling procedures. It is built using scikit-learn, NumPy, pandas, and Matplotlib, and it uses the SHAP library to generate model interpretations. We can install the library in our environment using either of the following commands.

```shell
pip install autoviml
```

or

```shell
pip install git+https://github.com/AutoViML/Auto_ViML.git
```

After installation, we are ready to use this library for implementation.
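Since Auto-ViML relies on SHAP for interpretability, it may help to see the idea SHAP is built on: Shapley values, which split one prediction among the features by averaging each feature's marginal contribution over all coalitions of the other features. The following brute-force sketch is our own illustration, assuming that features outside a coalition are replaced by fixed baseline values; it is not part of the SHAP or Auto-ViML API, and SHAP itself uses much faster algorithms (such as TreeSHAP for tree models).

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values of predict at x, relative to a baseline input.

    Features not in a coalition take their baseline value. Every coalition is
    enumerated, so this only suits a handful of features.
    """
    n = len(x)
    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for a coalition S of this size: |S|! (n-|S|-1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                without_i = [x[j] if j in members else baseline[j] for j in range(n)]
                with_i = list(without_i)
                with_i[i] = x[i]  # add feature i to the coalition
                phi[i] += weight * (predict(with_i) - predict(without_i))
    return phi


# Hypothetical toy model with an interaction term: f(a, b, c) = 2a + 3b + a*c
def toy_model(v):
    a, b, c = v
    return 2 * a + 3 * b + a * c


x, base = [1.0, 2.0, 3.0], [0.0, 0.0, 0.0]
phi = shapley_values(toy_model, x, base)
print(phi)
# Efficiency property: the values sum to f(x) - f(baseline), up to float rounding
print(sum(phi), toy_model(x) - toy_model(base))
```

Here the additive terms go entirely to their own features, while the `a*c` interaction is split evenly between `a` and `c`.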

Implementation with Auto-ViML

This implementation is an example that shows how Auto-ViML works in practice. We will perform data analysis and modelling on the Titanic dataset, one of the most famous datasets in data science. The dataset is publicly available, and we will read it directly from a URL.

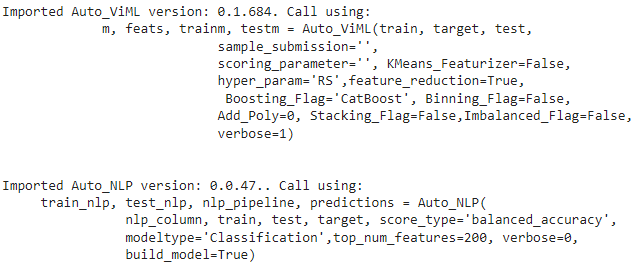

Before starting the implementation, we can check the installed version of the library. Importing the main module is enough, since the import prints the version.

```python
from autoviml.Auto_ViML import Auto_ViML
```

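If we prefer to check the version without relying on the import message, Python's standard library can report the version of any installed package. This sketch assumes the distribution name is `autoviml`, as in the pip command used earlier; `installed_version` is a hypothetical helper name.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(dist_name="autoviml"):
    """Return the installed version string of a distribution, or None if it is absent."""
    try:
        return version(dist_name)
    except PackageNotFoundError:
        return None


print(installed_version())  # a version string, or None if autoviml is not installed
```

This needs Python 3.8 or later, where `importlib.metadata` is available.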

Now let’s load the dataset.

```python
import pandas as pd

# Load the Titanic dataset from a public URL
df = pd.read_csv('https://web.stanford.edu/class/archive/cs/cs109/cs109.1166/stuff/titanic.csv')

df
```


This dataset is most often used to predict which passengers survived. In this article, we do the same, with the difference that we use the Auto-ViML library for it.

Let’s split the dataset into training and test data.

```python
# Use the first 90% of rows for training and the remaining 10% for testing
num = int(0.9 * df.shape[0])

train = df[:num]
print(train.shape)

test = df[num:]
print(test.shape)
```
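The split above keeps the rows in file order. If the file happens to be sorted in any way, a shuffled split is usually safer. A minimal sketch using pandas' `DataFrame.sample`; the helper name `shuffled_split`, the seed, and the toy frame standing in for the Titanic data are our own assumptions.

```python
import pandas as pd


def shuffled_split(df, train_frac=0.9, seed=42):
    """Shuffle all rows reproducibly, then split them into train/test by fraction."""
    shuffled = df.sample(frac=1.0, random_state=seed).reset_index(drop=True)
    num = int(train_frac * len(shuffled))
    return shuffled.iloc[:num], shuffled.iloc[num:]


# Toy frame standing in for the real data
toy = pd.DataFrame({"Survived": [0, 1] * 10, "Fare": range(20)})
train_part, test_part = shuffled_split(toy)
print(train_part.shape, test_part.shape)  # (18, 2) (2, 2)
```

scikit-learn's `train_test_split` offers the same idea, with a `stratify` option to keep the class balance equal in both parts.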

Modelling

So far, we have only needed the pandas library to load the dataset and a single function from the Auto-ViML library. That one function handles the various steps of modelling. Let’s start the procedure.

Defining some parameter variables

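```python
target = 'Survived'                      # column to predict
sample_submission = ''                   # empty string: no sample-submission file
scoring_parameter = 'balanced-accuracy'  # metric used to compare models
```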
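The scoring parameter here asks for balanced accuracy, which averages the recall of each class, so a model cannot score well merely by favouring the majority class. A minimal from-scratch sketch of the metric (our own helper, not Auto-ViML code); scikit-learn provides the same metric as `balanced_accuracy_score`.

```python
def balanced_accuracy(y_true, y_pred):
    """Mean of per-class recall: for each true class, the share predicted correctly."""
    recalls = []
    for cls in sorted(set(y_true)):
        idx = [i for i, y in enumerate(y_true) if y == cls]
        correct = sum(1 for i in idx if y_pred[i] == cls)
        recalls.append(correct / len(idx))
    return sum(recalls) / len(recalls)


y_true = [0, 0, 0, 0, 1, 1]
y_pred = [0, 0, 0, 0, 1, 0]
print(balanced_accuracy(y_true, y_pred))  # 0.75, while plain accuracy is 5/6
```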

Modelling using XGBoost

```python
m, feats, trainm, testm = Auto_ViML(train, target, test, sample_submission,
                                    scoring_parameter=scoring_parameter,
                                    hyper_param='GS', feature_reduction=True,
                                    Boosting_Flag=True, Binning_Flag=False,
                                    Add_Poly=0, Stacking_Flag=False,
                                    Imbalanced_Flag=False,
                                    verbose=1)
```

Running this call prints a series of analyses of the data and the results. Let’s go through them one by one.

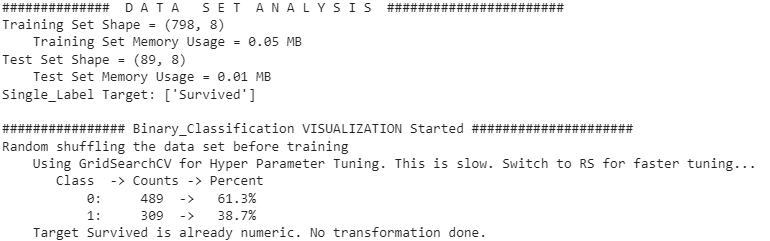

The above output is the first one. It reports the shapes of the training and test sets, then shows that the problem was recognized as binary classification, along with the status of hyperparameter tuning and of data shuffling. In short, it summarizes the data and the changes that need to be made to it. Let’s move on to the second output.
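The Auto_ViML call sets `hyper_param='GS'`, which, as we understand the library's documentation, requests grid search for hyperparameter tuning (as opposed to `'RS'` for randomized search). Grid search simply tries every combination of candidate values and keeps the best-scoring one. A minimal sketch; the parameter grid and the `toy_score` function, which stands in for a cross-validated model score, are hypothetical.

```python
from itertools import product


def grid_search(score_fn, param_grid):
    """Try every combination in param_grid; return (best_params, best_score)."""
    names = list(param_grid)
    best_params, best_score = None, float("-inf")
    for combo in product(*(param_grid[name] for name in names)):
        params = dict(zip(names, combo))
        score = score_fn(**params)
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Hypothetical score that peaks at max_depth=4, learning_rate=0.1
def toy_score(max_depth, learning_rate):
    return -(max_depth - 4) ** 2 - (learning_rate - 0.1) ** 2 * 100


grid = {"max_depth": [2, 4, 6], "learning_rate": [0.01, 0.1, 0.3]}
print(grid_search(toy_score, grid))
```

The cost grows with the product of the grid sizes (9 evaluations here), which is why randomized search is often preferred for large grids.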

The next output covers variable classification: it tells us which types of features the dataset contains and how many of each. A basic feature selection step also removes variables that have low relevance or carry little information, and the output notes which working devices (CPU or GPU) are available. Let’s proceed to the next output.

The next output shows the data preparation step: the library checks the training and test datasets for missing values, removes highly correlated features, and separates float and categorical variables. Let’s move on to the next output.
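Removing highly correlated features can be sketched as a greedy pass: keep columns in order and skip any column whose absolute Pearson correlation with an already-kept column exceeds a threshold. The 0.7 threshold, the helper names, and the toy columns are our own assumptions; Auto-ViML's own procedure may differ in its details.

```python
from math import sqrt


def pearson(a, b):
    """Pearson correlation of two equal-length numeric lists (0.0 if either is constant)."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0
    return cov / sqrt(var_a * var_b)


def drop_correlated(columns, threshold=0.7):
    """Keep columns in order, skipping any whose |r| with an already-kept column exceeds threshold."""
    kept = []
    for name, values in columns.items():
        if all(abs(pearson(values, columns[k])) <= threshold for k in kept):
            kept.append(name)
    return kept


data = {
    "fare": [1, 2, 3, 4, 5],
    "fare_doubled": [2, 4, 6, 8, 10],  # perfectly correlated with fare
    "age": [5, 1, 4, 2, 3],            # weakly (negatively) correlated with fare
}
print(drop_correlated(data))  # ['fare', 'age']
```

Because dictionaries preserve insertion order, earlier columns win ties; a more careful approach would keep whichever column of a correlated pair is more informative about the target.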

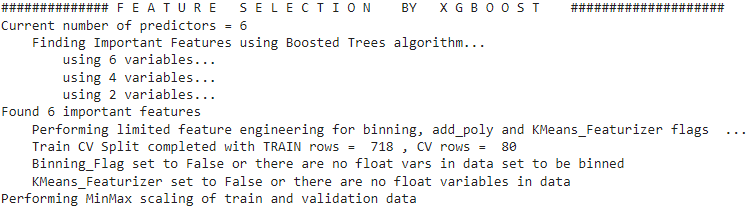

Here in the above output, we can see the details of the feature selection process, which uses XGBoost and ends up with 6 features. Let’s move on to the next output.
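A common way to turn a model's feature importances into a reduced feature set is to rank the features and keep the strongest ones. This sketch assumes we already have importance scores (for example, from a fitted XGBoost model) and a chosen number of features `k`; the scores below are made up, and this is not necessarily how Auto-ViML selects its features.

```python
def top_k_features(importances, k):
    """Return the k feature names with the highest importance (ties keep input order)."""
    ranked = sorted(importances.items(), key=lambda item: item[1], reverse=True)
    return [name for name, _ in ranked[:k]]


# Made-up importance scores for illustration only
scores = {"feat_a": 0.05, "feat_b": 0.40, "feat_c": 0.25, "feat_d": 0.30}
print(top_k_features(scores, 2))  # ['feat_b', 'feat_d']
```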

The next output shows the model-building procedure, which uses the XGBoost model. Let’s move on to the visualization of the modelling results.

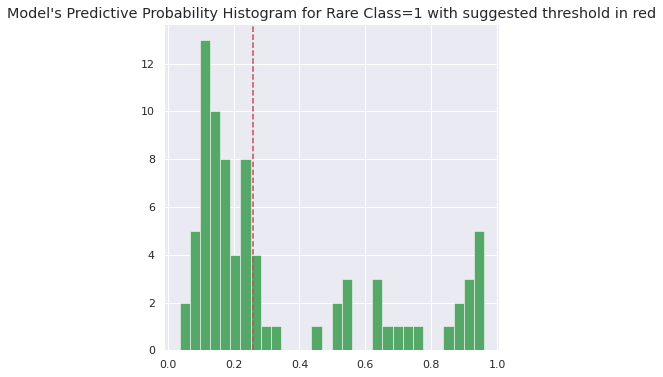

Here we can see the model’s predicted probabilities: the probability threshold that gives the best F1 score lies at around 0.26 to 0.28. As a cross-check, the library also reports accuracy scores, shown in the next output.

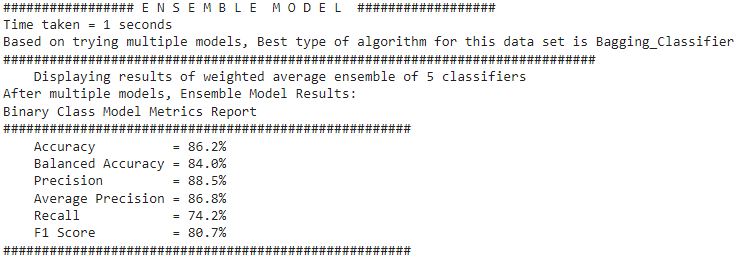
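Choosing a probability threshold by F1 score can be sketched as a scan: compute F1 at evenly spaced thresholds and keep the best. The helper names, the step count, and the toy labels and probabilities are our own assumptions, not Auto-ViML code.

```python
def f1_at_threshold(y_true, probs, threshold):
    """F1 score when predicting 1 for every probability >= threshold."""
    preds = [1 if p >= threshold else 0 for p in probs]
    tp = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, preds) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, preds) if t == 1 and p == 0)
    if tp == 0:
        return 0.0
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def best_f1_threshold(y_true, probs, steps=100):
    """Scan thresholds 0, 1/steps, ..., 1 and return (threshold, f1) with the highest F1."""
    candidates = [i / steps for i in range(steps + 1)]
    return max(((t, f1_at_threshold(y_true, probs, t)) for t in candidates),
               key=lambda pair: pair[1])


y_true = [0, 0, 1, 1]
probs = [0.1, 0.4, 0.35, 0.8]
print(best_f1_threshold(y_true, probs))
```

A threshold below the default 0.5, like the 0.26 to 0.28 reported above, simply means the model catches more positives at an acceptable cost in false positives.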

The library also runs ensemble techniques to try to improve the results.
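One common ensembling technique is soft voting: average the predicted probabilities of several models, optionally with weights. The article does not say which ensembling methods Auto-ViML uses, so this is only a generic sketch; the helper name and the probabilities are hypothetical.

```python
def soft_vote(prob_lists, weights=None):
    """Weighted average of several models' positive-class probabilities, row by row."""
    if weights is None:
        weights = [1.0] * len(prob_lists)
    total = sum(weights)
    n_rows = len(prob_lists[0])
    return [sum(w * probs[i] for w, probs in zip(weights, prob_lists)) / total
            for i in range(n_rows)]


# Hypothetical probabilities from three models for the same four rows
model_probs = [
    [0.9, 0.2, 0.6, 0.4],
    [0.8, 0.3, 0.4, 0.5],
    [0.7, 0.1, 0.5, 0.3],
]
print(soft_vote(model_probs))
print(soft_vote(model_probs, weights=[2, 1, 1]))
```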

The next output shows the best model the library found, along with its scores, which can help in optimizing the model.

The library then reports the final optimal result, together with visualizations of the results. Let’s look at the next output:

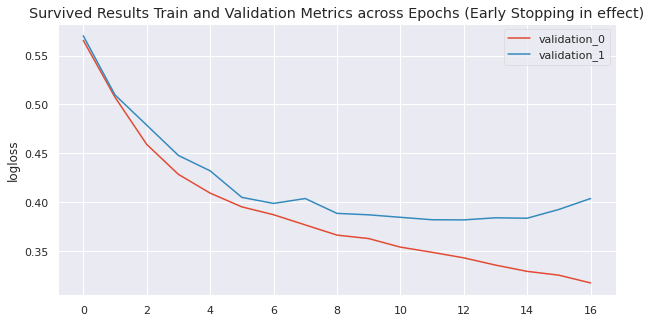

Here in the above output, we can see the models that were applied to the data and their scores; the model with the highest accuracy is highlighted in a lighter colour. Along with these, we also get visualizations of the confusion matrix, the ROC AUC curve, the PR AUC curve, and the classification report.
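The ROC AUC summarized by the ROC curve has a simple interpretation: it is the probability that a randomly chosen positive example gets a higher score than a randomly chosen negative one (ties count as half). A minimal pairwise sketch of that definition; the helper name and toy data are our own, and scikit-learn's `roc_auc_score` computes the same quantity more efficiently.

```python
def roc_auc(y_true, scores):
    """ROC AUC via pairwise comparison of positive and negative scores (O(P*N))."""
    pos = [s for y, s in zip(y_true, scores) if y == 1]
    neg = [s for y, s in zip(y_true, scores) if y == 0]
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(pos) * len(neg))


print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))  # 0.75
```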

Next is the log loss graph, which shows how the loss changes across epochs.
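The quantity on such a plot is the binary log loss (cross-entropy), averaged over examples; for boosted trees, the epochs typically correspond to boosting rounds. A minimal sketch of the formula, with probabilities clipped to avoid `log(0)`; the predictions for an "early" and a "late" round are made up to show the loss falling as predictions become more confident and correct.

```python
from math import log


def log_loss(y_true, probs, eps=1e-15):
    """Mean binary cross-entropy; probabilities are clipped to [eps, 1 - eps]."""
    total = 0.0
    for y, p in zip(y_true, probs):
        p = min(max(p, eps), 1 - eps)
        total += -(y * log(p) + (1 - y) * log(1 - p))
    return total / len(y_true)


y_true = [1, 0, 1, 1]
early = [0.6, 0.4, 0.55, 0.5]   # hypothetical predictions from an early round
late = [0.9, 0.1, 0.8, 0.85]    # hypothetical predictions from a later round
print(log_loss(y_true, early), log_loss(y_true, late))  # the later round has lower loss
```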

The final output shows how long the procedure took, the directory where the results were saved, and a graph of feature importance.

Final words

In this article, we have given a detailed introduction to the Auto-ViML library, which makes the end-to-end modelling procedure easy and interpretable. With very few lines of code, we covered most of the steps that data modelling requires, and we interpreted the output of each step to understand the key concepts.