Many machine learning packages require string features to be converted to numerical representations for models to function properly. Categorical string features can represent a wide range of data (e.g., gender, names, marital status) and are notoriously difficult to preprocess automatically. This post goes over a Python-based framework designed to automate the preparation tasks required when dealing with such textual data. The main points discussed in this article are listed below.

Table of Contents

- The Problem with Automated Preprocessing

- Challenges Addressed by the Framework

- The Methodology

- Evaluation Results

- Python Implementation

Let’s start by discussing the general problems we face when we try to automate this process.

The Problem with Automated Preprocessing

Real-world datasets frequently contain categorical string data, such as zip codes, names, or occupations. Many machine learning techniques require such string features to be converted to a numerical representation in order to function properly. The processing required depends on the type of data. For example, latitude and longitude values may be the ideal way to represent geographical string data (e.g., addresses).

Data scientists must manually preprocess such raw data, which takes a large amount of time, up to 60% of their day. Automated data cleaning tools are available; however, they frequently fail to cover the enormous variety of categorical string data.

John W. van Lith and Joaquin Vanschoren propose a framework, titled “From string to Data Science”, for systematically identifying and encoding various kinds of categorical string features in tabular datasets. We will also examine an open-source Python implementation and test it on a dataset.

Challenges Addressed by the Framework

The framework handles a variety of issues. The first is string type identification, which seeks to detect predefined ‘types’ of string data (for example, dates) that require additional preparation. Probabilistic Finite-State Machines (PFSMs) are a viable approach here: they are based on regular expressions and can generate type probabilities. They can also detect missing or out-of-place entries, such as numeric values in a string column.

The framework also addresses statistical type inference, which uses the intrinsic data distribution to predict a feature’s statistical type (e.g., ordinal or categorical). Finally, it applies state-of-the-art encoding techniques to convert categorical string data to numeric values, a task made difficult by small errors (e.g., typos) and intrinsic meaning (e.g., a time or location).

Now let’s look at the methodology of the framework in detail.

The Methodology

The system is designed to detect and appropriately encode various types of string data in tabular datasets, as demonstrated in Fig. To begin, PFSMs are used to determine whether a column is numeric, contains a known type of string feature (such as a date), or contains any other kind of standard string data. Based on this first categorization, suitable missing value and outlier treatment methods are applied to the complete dataset to correct inconsistencies.

Columns with recognized string types are then processed in an intermediate type-specific manner, while the other columns are categorized according to their statistical type (e.g., nominal or ordinal). Finally, the data is encoded using the encoding that best fits each feature.

String Feature Inference

The first step builds on PFSMs and the ptype probabilistic type inference library by developing regular-expression-based PFSMs for nine different types of string features: coordinates, days, e-mail addresses, file paths, months, numerical strings, phrases, URLs, and zip codes.
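To make the idea of regular-expression-based type detection more concrete, here is a minimal sketch. It is a deliberate simplification and not the framework's PFSM implementation: it uses plain regular expressions and a majority vote instead of type probabilities, and the patterns, type names, and function names are all illustrative assumptions.

```python
import re
from collections import Counter

MONTHS = ("january|february|march|april|may|june|july|august|"
          "september|october|november|december|"
          "jan|feb|mar|apr|jun|jul|aug|sep|oct|nov|dec")

# Illustrative patterns only (assumptions); real PFSMs are probabilistic and richer.
# Order matters: more specific patterns come before more general ones.
PATTERNS = {
    "email": re.compile(r"[^@\s]+@[^@\s]+\.[A-Za-z]{2,}"),
    "url": re.compile(r"(https?://|www\.)\S+"),
    "zip_code": re.compile(r"\d{5}(-\d{4})?"),
    "numerical": re.compile(r"[-+]?\d+(\.\d+)?"),
    "month": re.compile(MONTHS, re.IGNORECASE),
}


def match_type(value):
    """Return the first type whose pattern matches the whole value, else 'string'."""
    text = value.strip()
    for name, pattern in PATTERNS.items():
        if pattern.fullmatch(text):
            return name
    return "string"


def infer_column_type(values):
    """Return the most common type and the share of values agreeing with it.

    Assumes a non-empty list of strings.
    """
    counts = Counter(match_type(v) for v in values)
    best, n = counts.most_common(1)[0]
    return best, n / len(values)


def out_of_place(values, column_type):
    """List the entries whose detected type differs from the column's type."""
    return [v for v in values if match_type(v) != column_type]


emails = ["a@b.com", "c@d.org", "42", "e@f.net"]
column_type, share = infer_column_type(emails)
anomalies = out_of_place(emails, column_type)
```

In this toy column, three of four entries look like e-mail addresses, so the numeric entry is flagged as out of place. A real PFSM would instead assign a probability to each candidate type.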

Handling Missing Values and Outliers

Following that, missing values are imputed using either mean/mode imputation or a multivariate imputation technique. The choice depends on the data’s missingness mechanism (missing completely at random, missing at random, or missing not at random), as assessed with Little’s test. To ensure robustness in the subsequent steps, minor mistakes are corrected using string metrics, and data type outliers are corrected where appropriate.
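The simpler of the two imputation options can be sketched with pandas as follows. This is only an illustration of mean/mode imputation on a toy DataFrame; the function name and example columns are assumptions, and the step that chooses between this and multivariate imputation via Little’s test is not shown.

```python
import pandas as pd


def impute_mean_mode(df):
    """Fill numeric columns with their mean and all other columns with their mode."""
    out = df.copy()
    for col in out.columns:
        if out[col].isna().sum() == 0:
            continue  # nothing to impute in this column
        if pd.api.types.is_numeric_dtype(out[col]):
            out[col] = out[col].fillna(out[col].mean())
        else:
            modes = out[col].mode(dropna=True)
            if not modes.empty:  # an all-missing column has no mode
                out[col] = out[col].fillna(modes.iloc[0])
    return out


# Toy data (hypothetical column names)
toy = pd.DataFrame({
    "age": [30.0, None, 50.0],
    "division": ["General", "General", None],
})
filled = impute_mean_mode(toy)
```

The missing age becomes the column mean and the missing division becomes the most frequent value.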

Processing String Features

Next, intermediate processing is applied to every string type detected by the PFSMs. First, the strings are simplified, for example by removing all nouns from sentences. Second, type-specific encoding steps are assigned or carried out, such as replacing a date with separate values for the year, month, and day. Third, extra information is added, such as latitude and longitude values for zip codes.
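As an illustration of the second step, the sketch below replaces a date column with separate year, month, and day columns using pandas. The function name, the column name, and the assumed `%Y-%m-%d` date format are hypothetical and not taken from the framework.

```python
import pandas as pd


def expand_date_column(df, column, date_format="%Y-%m-%d"):
    """Replace a date string column with numeric year, month, and day columns."""
    out = df.copy()
    # Unparseable entries become NaT rather than raising an error
    parsed = pd.to_datetime(out[column], format=date_format, errors="coerce")
    out[f"{column}_year"] = parsed.dt.year
    out[f"{column}_month"] = parsed.dt.month
    out[f"{column}_day"] = parsed.dt.day
    return out.drop(columns=[column])


# Toy data (hypothetical column name)
orders = pd.DataFrame({"order_date": ["2021-03-15", "2020-12-01"]})
expanded = expand_date_column(orders, "order_date")
```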

Statistical Type Prediction

String features that the PFSMs don’t recognize are labelled as standard strings. The framework then determines their statistical type, that is, whether they contain ordered (ordinal) or unordered (nominal) data.

The prediction is based on eight features extracted from the column, as shown in Fig above, including the uniqueness of the string values, whether the column name or values suggest ordinality, and whether a GloVe word embedding of the string values reveals clear links between the values. To predict the statistical type, these features are fed into a gradient boosting classifier, which was trained on manually annotated real-world data.
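To give a feel for the kind of signals involved, the sketch below computes three simple column-level features in plain Python. They are illustrative stand-ins and not the framework's actual eight features: the keyword lists and function names are assumptions, and the GloVe-based feature and the classifier itself are not reproduced.

```python
# Hypothetical keyword lists; the framework's own heuristics may differ.
VALUE_HINTS = {"low", "medium", "high", "poor", "fair", "good",
               "excellent", "small", "large"}
NAME_HINTS = {"rating", "grade", "level", "rank", "size"}


def uniqueness_ratio(values):
    """Share of distinct values among all values in the column."""
    return len(set(values)) / len(values)


def value_hint_share(values):
    """Share of distinct values containing a word that suggests an ordering."""
    distinct = {v.lower() for v in values}
    hits = sum(
        1 for v in distinct
        if any(word in VALUE_HINTS for word in v.split())
    )
    return hits / len(distinct)


def name_suggests_ordinality(column_name):
    """True if the column name contains a keyword that hints at ordered data."""
    lowered = column_name.lower()
    return any(hint in lowered for hint in NAME_HINTS)


ratings = ["very poor", "good", "good", "excellent"]
cities = ["Paris", "Oslo", "Paris", "Lima"]

rating_features = (uniqueness_ratio(ratings), value_hint_share(ratings),
                   name_suggests_ordinality("Rating"))
city_features = (uniqueness_ratio(cities), value_hint_share(cities),
                 name_suggests_ordinality("City"))
```

Feature vectors like these, computed per column, are what a classifier would use to separate ordinal from nominal columns.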

Encoding

Finally, an appropriate encoding is applied to each categorical string feature. String types identified by the PFSMs receive a preset encoding. For nominal data, the framework uses category encoders and target encoding because of their tolerance to morphological variants and high-cardinality features.

The choice of encoder depends on cardinality: the similarity encoder is used when the cardinality is below 30, the Gamma-Poisson encoder when it is at least 30 but below 100, and the min-hash encoder when it is at least 100. For ordinal data, the ordering is determined by a text sentiment intensity analyzer (FlairNLP), which maps string values (e.g., very poor, very excellent) to an ordered encoding.
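The cardinality rule for nominal features can be written down as a small helper. This is a sketch of the selection logic only: the function names and returned labels are assumptions, and the encoders themselves are not implemented here.

```python
def choose_nominal_encoder(n_unique):
    """Pick an encoder label from the number of distinct values in a column."""
    if n_unique < 30:
        return "similarity"
    if n_unique < 100:
        return "gamma-poisson"
    return "min-hash"


def encoder_for_column(values):
    """Apply the cardinality rule to a list of string values."""
    return choose_nominal_encoder(len(set(values)))


colours = ["red", "blue", "red", "green"]
chosen = encoder_for_column(colours)  # 3 distinct values, so "similarity"
```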

Evaluation Results

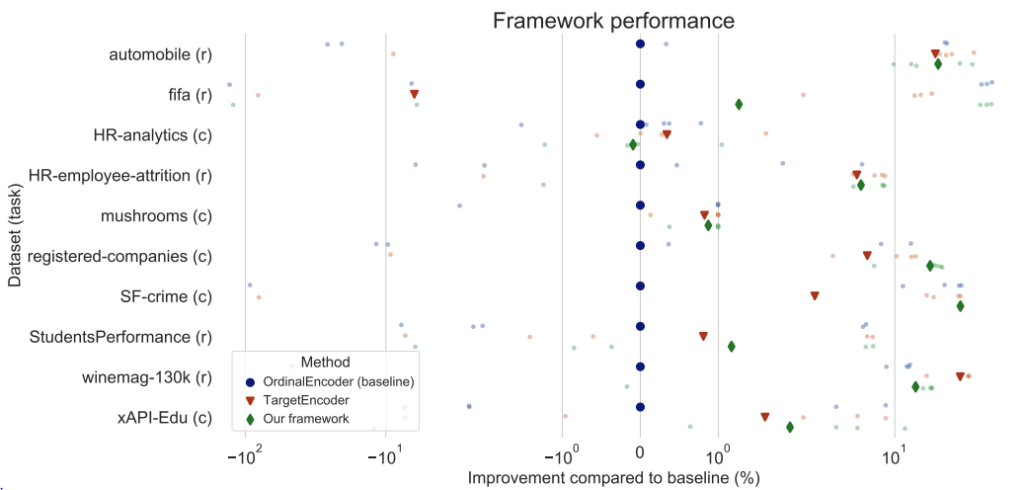

The framework and its separate components were tested on a variety of real-world datasets containing categorical string features. The authors measured the downstream performance of gradient boosting models trained on the encoded data. Boosting models are inherently robust to high-dimensional data, which allows for a fairer comparison. Classification tasks were evaluated with stratified 5-fold cross-validation, and regression tasks were scored with mean absolute error (MAE).

First, the complete framework was assessed as a whole and compared to a baseline in which the data is manually preprocessed with mean/mode imputation and ordinal or target encoding for categorical string attributes.

The relative performance differences for each of the five folds, as well as their mean, are shown in the graph above. These findings show that the framework works effectively with real-world data. One exception is the winemag-130k dataset, where the automated encoding performs poorly, which is likely due to the heuristics applied.

Python Implementation

In this section, we will try out the framework using its official open-source repository, from which you can test it yourself. We are using the Women’s Clothing E-Commerce dataset, which revolves around reviews written by customers. Its nine supporting features provide a good environment for analyzing the text along multiple dimensions.

We will use the framework to clean and encode the dataset. Follow the installation instructions given in the repository.

First, we will look at a few rows of the dataset and check for missing values.

import pandas as pd

data = pd.read_csv('/content/drive/MyDrive/data/Womens Clothing E-Commerce Reviews.csv')

print(data.head())

data.info()

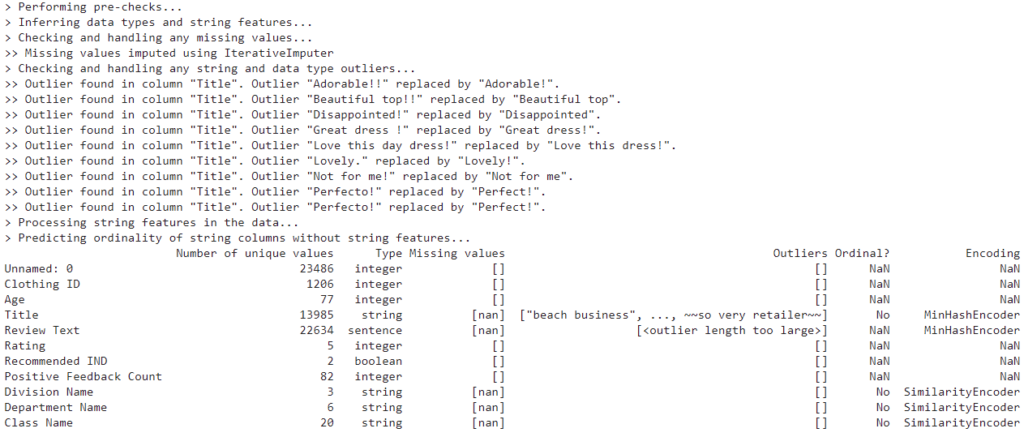

As we can see, there are a total of 23486 instances and 11 attributes, of which 5 have missing values. Now let’s try the framework. We will directly use the complete execution script, which cleans and encodes the dataset. First, we will only clean the dataset by setting encode=False, and we will encode it later.

from auto_string_cleaner import main

x = main.run(data, encode=False)

x.head()

As we can see, the framework has carried out the necessary preprocessing steps, such as missing value imputation and outlier detection and removal. Below you can see the encoded categorical features.

Final Words

In this post, we have seen a framework that combines state-of-the-art techniques with new components to enable automated string data cleaning. The system yields encouraging results, and some of its new components (e.g., string feature type inference, ordinality detection, and encoding with FlairNLP) perform well, particularly when it comes to recognizing and processing categorical string data.