Named entity recognition (NER) is a crucial part of many NLP projects. Examples of NER implementations are easy to find, but it is much harder to see how the process works in the background or how a model behaves with the data. In this article, we will try to make named entity recognition explainable and interpretable, which will help in understanding how it works. The major points covered in this article are listed below.

Table of contents

- What is named entity recognition (NER)?

- Extracting data from Kaggle

- Loading data

- Data preprocessing

- NER modelling

- Explaining prediction

Let’s begin by understanding named entity recognition.

What is named entity recognition (NER)?

In one of our articles, we discussed that named entities are the words in text data that refer to objects which exist in the real world. Examples of these real-world objects are the names of people, places, or things, each of which has its own identity in the text: for example, Narendra Modi, Mumbai, or Plasto water tank. Each named entity also has a class; Narendra Modi, for instance, is the name of a person. Named entity recognition (NER) can be considered the process of making a machine recognize these objects along with their class and other specifications. In that article we also discussed how to implement NER using the spaCy and NLTK libraries.
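To make the idea of "objects with their class" concrete, here is a minimal sketch that groups token-level tags into entity spans. It assumes the BIO tagging scheme (B- begins an entity, I- continues it, O is outside any entity), which is an assumption about the annotation format and is not stated above. The function name extract_entities and the example tags are illustrative only.

```python
def extract_entities(tokens, tags):
    """Group BIO-tagged tokens into (entity_text, entity_class) pairs."""
    entities = []
    current_tokens, current_class = [], None

    def flush():
        # Store the entity collected so far, if there is one.
        if current_tokens:
            entities.append((" ".join(current_tokens), current_class))

    for token, tag in zip(tokens, tags):
        if tag.startswith("B-"):
            # A new entity begins; close the previous one first.
            flush()
            current_tokens, current_class = [token], tag[2:]
        elif tag.startswith("I-") and current_class == tag[2:]:
            # Continuation of the entity that is currently open.
            current_tokens.append(token)
        else:
            # "O" (or an I- tag that does not match) closes any open entity.
            flush()
            current_tokens, current_class = [], None
    flush()
    return entities


# Hypothetical example sentence and tags, for illustration only.
tokens = ["Narendra", "Modi", "visited", "Mumbai"]
tags = ["B-per", "I-per", "O", "B-geo"]
print(extract_entities(tokens, tags))
# → [('Narendra Modi', 'per'), ('Mumbai', 'geo')]
```

An NER model predicts the tags; a helper of this kind only turns those per-word tags back into readable entities.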

As for applications, NER can be used for summarizing information from documents, optimizing search engine algorithms, identifying different biomedical subparts, and recommending content.

In this article, we aim to make the implementation process of NER more explainable. For this purpose, we will be using the Keras and LIME libraries. In addition, we will discuss how to extract data from Kaggle in the Google Colab environment. Let’s start by extracting the data.

Extracting data from Kaggle

To extract data from Kaggle we need an account on the Kaggle website. To make an account we use this link. After making an account, we go to the account page. For example, my account page address is

https://www.kaggle.com/yugeshvermaaim/account

where yugeshvermaaim is my account name. On the account page, we scroll down to the API section.

In this section, we click on the Create New API Token button, which downloads a JSON file named kaggle.json. We then need to upload this file to the Google Colab environment. A shortcut for uploading files can be found in the left panel of the Google Colab notebook.

The first button on this panel uploads a file; we use it to upload kaggle.json. After uploading the JSON file, we can use the following command to install the Kaggle package in the environment.

! pip install kaggle

After the installation, we are required to make a directory. Using the following command we can make it:

! mkdir ~/.kaggle

Using the following command we can copy kaggle.json into the directory made above:

! cp kaggle.json ~/.kaggle/

The command below restricts the permissions of the token file so that only its owner can read and write it; the Kaggle client warns if the file is readable by other users.

! chmod 600 ~/.kaggle/kaggle.json
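The three shell steps above (make the directory, copy the token, restrict its permissions) can also be done from Python. The following is a minimal sketch using only the standard library; the helper name install_kaggle_token is hypothetical and is not part of the Kaggle package.

```python
import os
import shutil
from pathlib import Path


def install_kaggle_token(token_path="kaggle.json", config_dir="~/.kaggle"):
    """Copy the API token into the config directory and restrict its permissions."""
    config_dir = Path(config_dir).expanduser()
    config_dir.mkdir(parents=True, exist_ok=True)  # like: mkdir ~/.kaggle
    target = config_dir / "kaggle.json"
    shutil.copyfile(token_path, target)            # like: cp kaggle.json ~/.kaggle/
    os.chmod(target, 0o600)                        # like: chmod 600 ~/.kaggle/kaggle.json
    return target
```

Calling install_kaggle_token() in a notebook cell after uploading kaggle.json should leave the token in the same place as the shell commands do.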

Now, for the implementation of NER, we use the ner_dataset data from Kaggle, which can be found at this link. The data is an annotated corpus for named entity recognition and can be used for entity classification.

To extract this data we need to copy the API command, which is available from the three-dot menu to the right of the New Notebook button.

For our data, it has the following API command:

kaggle datasets download -d abhinavwalia95/entity-annotated-corpus

This command can be run in Google Colab by prefixing it with an exclamation mark.

!kaggle datasets download -d abhinavwalia95/entity-annotated-corpus

Output:

This gathered file is a zip file that can be unzipped using the following command.

! unzip entity-annotated-corpus

Output:

In the above commands, we can change the dataset name to extract any other files we are interested in from Kaggle. After this data extraction, we are ready to implement NER. Let’s start by loading the data.

Loading data

import pandas as pd

# Forward-fill missing values; fillna(method="ffill") is deprecated in recent pandas versions.
data = pd.read_csv("ner_dataset.csv", encoding="latin1").ffill()

Checking the data

data.head()

Output:

data.describe()

Output:

From the above description, we can see that there are 47959 sentences and 35178 unique words, with 42 part-of-speech tags and 17 entity tags.

The above image is an example of sentence 4 from the dataset. To produce it, I have defined a function that joins all the words belonging to a sentence number into a single sentence. Interested readers can find the function here.
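For readers who want a starting point, a grouping function of this kind could look like the sketch below. It is not the author's function. It assumes the columns are named "Sentence #", "Word" and "Tag" and that the sentence column has already been forward-filled; the name group_sentences and the tiny demo frame are illustrative.

```python
import pandas as pd


def group_sentences(data):
    """Return (sentences, labels): one space-joined string and one tag list per sentence."""
    # sort=False keeps sentences in file order instead of sorting the ids as strings.
    grouped = data.groupby("Sentence #", sort=False)
    sentences = grouped["Word"].apply(lambda words: " ".join(map(str, words))).tolist()
    labels = grouped["Tag"].apply(list).tolist()
    return sentences, labels


# Tiny hand-made frame, only to show the expected input shape.
demo = pd.DataFrame({
    "Sentence #": ["Sentence: 1", "Sentence: 1", "Sentence: 2"],
    "Word": ["Hello", "London", "Bye"],
    "Tag": ["O", "B-geo", "O"],
})
sentences, labels = group_sentences(demo)
print(sentences[0])
print(labels[0])
```

With the real data, sentences[3] and labels[3] would then hold the words and tags of sentence 4.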

Let’s check the tags of these words:

print(labels[3])

Output:

Data preprocessing

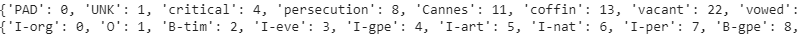

In this section, we start by building a vocabulary of the 10000 most common words. The image below shows the vocabulary.
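A vocabulary of the most frequent words can be built by counting word occurrences. The sketch below assumes that each sentence is a space-separated string; the name build_vocabulary is illustrative and the author's own code may differ.

```python
from collections import Counter


def build_vocabulary(sentences, max_words=10000):
    """Return the max_words most frequent words, most frequent first."""
    counts = Counter(word for sentence in sentences for word in sentence.split())
    return [word for word, _ in counts.most_common(max_words)]


# Small illustrative corpus.
demo_vocabulary = build_vocabulary(["a b a", "a c b"], max_words=2)
print(demo_vocabulary)
# → ['a', 'b']
```

Words outside the vocabulary are usually mapped to a single placeholder token later on, which keeps the embedding layer small.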

print(vocabulary)

len(vocabulary)

Output:

The second step of this section is padding the sequences to a common length.
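As a rough sketch of this step, the code below maps words and tags to integer ids and pads each encoded sentence to a fixed length. The special tokens "PAD" and "UNK", their ids, and the function names are assumptions made for illustration.

```python
def build_index(vocabulary, tags):
    """Build word-to-id and tag-to-id lookups."""
    # Reserve id 0 for padding and id 1 for out-of-vocabulary words.
    word2idx = {"PAD": 0, "UNK": 1}
    for word in vocabulary:
        word2idx.setdefault(word, len(word2idx))
    tag2idx = {tag: i for i, tag in enumerate(sorted(set(tags)))}
    return word2idx, tag2idx


def encode_and_pad(sentence, word2idx, max_len):
    """Convert a sentence to ids, truncate to max_len, and right-pad with PAD."""
    ids = [word2idx.get(word, word2idx["UNK"]) for word in sentence.split()][:max_len]
    return ids + [word2idx["PAD"]] * (max_len - len(ids))


demo_word2idx, demo_tag2idx = build_index(["the", "cat"], ["O", "B-geo", "O"])
print(encode_and_pad("the dog", demo_word2idx, max_len=4))
# → [2, 1, 0, 0]
```

The tag sequences would need the same padding so that X and y keep matching shapes.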

print(word2idx)

print(tag2idx)

Output:

After padding the sequences to a common length we are ready to split our data.

from sklearn.model_selection import train_test_split

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.1, shuffle=False)

After these data preprocessing steps we are ready for NER modelling.

NER modelling

For NER modelling, we have used a bidirectional LSTM model with a recurrent dropout of 0.1, compiled with the RMSprop (root mean square propagation) optimizer. The model is fitted for 5 epochs. The whole code for the procedure can be found here.
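A model matching this description could be sketched as follows. Only the bidirectional LSTM, the 0.1 recurrent dropout and the RMSprop optimizer come from the text; the embedding size, the number of LSTM units, the loss and the function name build_bilstm_tagger are assumptions, so treat this as one possible layout and not the author's exact network.

```python
import keras
from keras import layers


def build_bilstm_tagger(n_words, n_tags, max_len, embedding_dim=50, lstm_units=100):
    """Bidirectional LSTM that outputs a tag distribution for every word position."""
    inputs = keras.Input(shape=(max_len,), dtype="int32")
    x = layers.Embedding(input_dim=n_words, output_dim=embedding_dim)(inputs)
    x = layers.Bidirectional(
        layers.LSTM(lstm_units, return_sequences=True, recurrent_dropout=0.1)
    )(x)
    # One softmax over the tags at each time step.
    outputs = layers.TimeDistributed(layers.Dense(n_tags, activation="softmax"))(x)
    model = keras.Model(inputs, outputs)
    model.compile(
        optimizer="rmsprop",
        loss="sparse_categorical_crossentropy",  # integer tag ids as targets
        metrics=["accuracy"],
    )
    return model
```

The sparse loss is chosen here because the targets are integer tag ids, which fits the reshape of y_train to a trailing dimension of 1 in the training call.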

Using the following code we can train our network.

history = model.fit(X_train, y_train.reshape(*y_train.shape, 1),
                    batch_size=32, epochs=5,
                    validation_split=0.1, verbose=1)

After training the model we are ready to explain the predictions.

Explaining prediction

As discussed above, we are going to make the NER process more explainable using LIME (Local Interpretable Model-agnostic Explanations). The lime library is an open-source library for making machine learning predictions more explainable. In this article we access LIME through the eli5 library, which ships its own LIME implementation for text under eli5.lime. Before explaining the NER model we need to install these libraries, which can be done using the following commands.

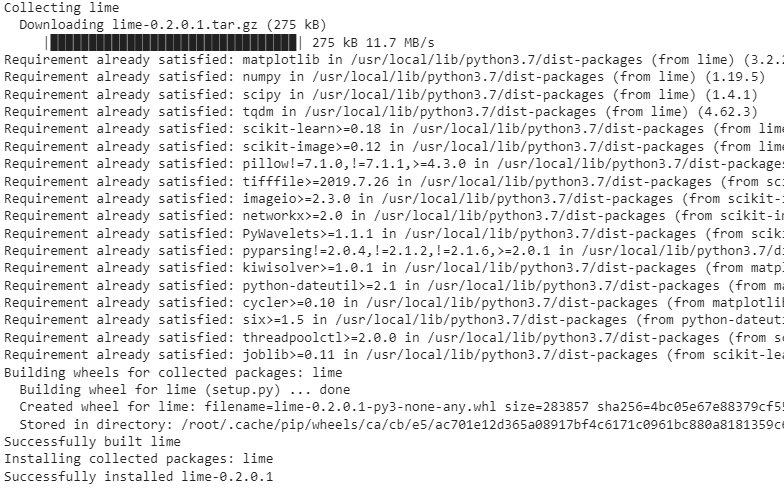

!pip install lime

Output:

!pip install eli5

Output:

After the installation, we are ready to explain the predictions of the NER model. To explain NER using LIME we need to frame our problem as a multiclass classification problem: for one chosen word position, the model outputs a probability for each tag.

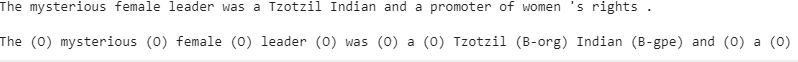

Let’s take a look at the 100th sample of our text data.

index = 99

label = labels[index]

text = sentences[index]

print(text)

print()

print(" ".join([f"{t} ({l})" for t, l in zip(text.split(), label)]))

Output:

Let’s start explaining the predictions of the NER model. For this, we have defined a class named NERExplainerGenerator with a method named get_predict_function, which provides the prediction function required by the TextExplainer class.

From the above example, we will use the word at index 6 and generate the respective prediction function. Using the MaskingTextSampler class from eli5, we can initialize a sampler for the LIME algorithm.
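One possible shape for such a class is sketched below; the author's actual implementation is not shown here. The sketch assumes a trained model whose predict method returns an array of shape (n_texts, max_len, n_tags), a word2idx lookup containing "PAD" and "UNK" entries, and the constructor arguments shown, all of which are assumptions. The returned function takes a list of strings and returns one row of tag probabilities per string, which is the kind of input TextExplainer expects.

```python
import numpy as np


class NERExplainerGenerator:
    """Turn a sequence tagger into a per-word multiclass classifier for LIME."""

    def __init__(self, model, word2idx, tag2idx, max_len):
        self.model = model
        self.word2idx = word2idx
        # Keep tags ordered by their index so values() lines up with the model output.
        self.idx2tag = {idx: tag for tag, idx in sorted(tag2idx.items(), key=lambda kv: kv[1])}
        self.max_len = max_len

    def _encode(self, texts):
        """Map each text to a padded row of word ids."""
        unk, pad = self.word2idx["UNK"], self.word2idx["PAD"]
        batch = np.full((len(texts), self.max_len), pad, dtype="int32")
        for row, text in enumerate(texts):
            ids = [self.word2idx.get(word, unk) for word in text.split()][: self.max_len]
            batch[row, : len(ids)] = ids
        return batch

    def get_predict_function(self, word_index):
        """Return a function giving tag probabilities for the word at word_index."""
        def predict_func(texts):
            probabilities = np.asarray(self.model.predict(self._encode(texts)))
            # Keep only the time step of interest: shape (n_texts, n_tags).
            return probabilities[:, word_index, :]
        return predict_func
```

Fixing the word position is what turns sequence tagging into the multiclass problem that LIME can explain: every perturbed sentence is scored only on the tag of that one word.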

from eli5.lime.samplers import MaskingTextSampler

word_index = 6

predict_func = explainer_generator.get_predict_function(word_index=word_index)

sampler = MaskingTextSampler(
    replacement="UNK",
    max_replace=0.7,
    token_pattern=None,
    bow=False
)
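To build some intuition for what a masking sampler does, here is a deliberately simplified sketch that replaces a random subset of words with a placeholder. It is not eli5's implementation; the function mask_tokens and its sampling strategy are assumptions made purely for illustration.

```python
import random


def mask_tokens(text, n_samples=6, max_replace=0.7, replacement="UNK", seed=0):
    """Create perturbed copies of text with some words replaced by a placeholder."""
    rng = random.Random(seed)
    tokens = text.split()
    if not tokens:
        return [text] * n_samples
    # Replace at least one word and at most a max_replace share of the words.
    max_masked = min(len(tokens), max(1, int(len(tokens) * max_replace)))
    samples = []
    for _ in range(n_samples):
        n_masked = rng.randint(1, max_masked)
        masked_positions = set(rng.sample(range(len(tokens)), n_masked))
        samples.append(" ".join(
            replacement if position in masked_positions else token
            for position, token in enumerate(tokens)
        ))
    return samples


for sample in mask_tokens("the cat sat on the mat"):
    print(sample)
```

Because words are replaced instead of deleted, every sample keeps the same number of tokens, so the word index being explained still points at the same position.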

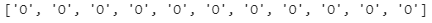

Printing a few samples generated by the sampler.

samples, similarity = sampler.sample_near(text, n_samples=6)

print(samples)

Output:

Initializing the TextExplainer class from eli5 to explain the prediction.

from eli5.lime import TextExplainer

te = TextExplainer(
    sampler=sampler,
    position_dependent=True,
    random_state=42
)

te.fit(text, predict_func)

After fitting, we are ready to use the TextExplainer.

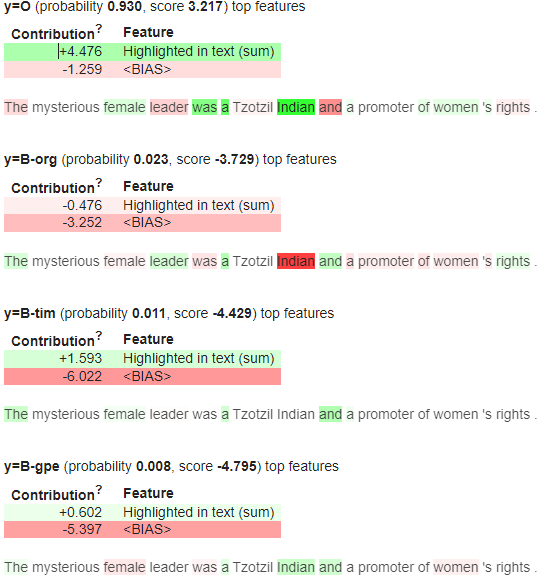

te.explain_prediction(
    target_names=list(explainer_generator.idx2tag.values()),
    top_targets=4
)

Output:

In the above output, we can see that the prediction of the NER model has become more explainable. The word Indian is strongly highlighted, which means it contributes most to the predicted tag. This suggests a high probability of India being treated as the name of an entity, which is consistent with the dataset often containing India as the name of a country.

Final words

In this article, we have discussed how we can perform named entity recognition using the Keras library and how we can use the LIME library to make it more explainable. We got to know how named entity recognition works and how it behaves with the data.
