Machine learning has seen success across a wide range of application areas. One task that almost every model depends on is choosing good values for its hyperparameters. Searching for the best combination of hyperparameters is called hyperparameter optimization, and it is a crucial step in the machine learning workflow. In this article, we will discuss a hyperparameter optimization technique named Hyperband. We will also look at HpBandSter, a Python package for hyperparameter optimization, and use it to run a Hyperband search. The major points to be discussed in this article are listed below.

Table of Contents

- Hyperparameter Optimization

- Multi-Fidelity Optimization

- Successive Halving

- Hyperband

- What is HpBandSter?

- Implementation of Hyperband

Let’s begin the discussion by understanding what hyperparameter optimization is.

Hyperparameter Optimization

Machine learning models expose many different hyperparameters, and the set of all possible combinations forms an enormous search space. As we move from traditional machine learning models to deep learning models, this search space grows even larger. Tuning hyperparameters in such a massive search space is a challenging task.

Hyperparameter optimization algorithms help us tune hyperparameters within this massive search space. The below image is a representation of approaches for hyperparameter optimization.

In one of our previous articles, we discussed Bayesian optimization and saw some of its advantages: it works well with black-box functions, it is data-efficient, and it is robust to noise. An implementation of Bayesian optimization using the HyperOpt package can be found here. We also saw that Bayesian optimization is a sequential process, so it does not parallelize well, and that every evaluation of the objective function (typically a full training run) is expensive. We therefore need a cheaper way to estimate the objective function, which is what multi-fidelity optimization provides. Let's now understand this approach.

Multi-Fidelity Optimization

Multi-fidelity optimization reduces the cost of estimating the objective function by combining cheap, low-fidelity evaluations (for example, training on a subset of the data or for only a few iterations) with expensive, high-fidelity ones. Successive halving and Hyperband are two multi-fidelity optimization methods. Let's first look at successive halving.

Successive Halving

Successive halving is a hyperparameter optimization technique in which candidate parameter combinations compete against each other. It is an iterative procedure: in the first iteration, all parameter combinations are evaluated with a small amount of resources. Only the best-performing combinations move on to the second iteration, where each is evaluated with more resources, and so on. The below image is a representation of successive halving.

In the above image, we can see that as the iterations progress, the number of candidates decreases and the amount of resources per candidate increases. The main idea behind successive halving is to keep the best-performing half of the candidates and discard the rest. This is a good approach to hyperparameter tuning, but for a fixed budget it forces a trade-off between the number of configurations tried and the resources each one receives before being cut. Hyperband is designed to address this problem. Let's move on to the Hyperband algorithm, which is also a multi-fidelity optimization method.

Successive halving itself is available in scikit-learn through the HalvingGridSearchCV and HalvingRandomSearchCV classes.
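To make the successive halving procedure concrete, here is a minimal pure-Python sketch that does not depend on scikit-learn. The function `successive_halving`, the `toy_score` objective, and the candidate values are all hypothetical and chosen only for illustration; a real objective would train a model with the given resource and return a validation score.

```python
def successive_halving(configs, evaluate, min_resource=1, eta=2):
    """Illustrative successive halving: each round keeps the best 1/eta of
    the candidates and multiplies the per-candidate resource by eta."""
    survivors = list(configs)
    resource = min_resource
    history = []  # (number of candidates, resource per candidate) for each round
    while len(survivors) > 1:
        history.append((len(survivors), resource))
        # Score every surviving candidate with the current resource (higher is better).
        ranked = sorted(survivors, key=lambda c: evaluate(c, resource), reverse=True)
        survivors = ranked[: max(1, len(ranked) // eta)]
        resource *= eta
    return survivors[0], history


def toy_score(x, resource):
    # Hypothetical objective: best at x = 0.7, and scores improve as the
    # resource grows. A real objective would also be noisy at low resources.
    return -(x - 0.7) ** 2 - 1.0 / resource


candidates = [i / 8 for i in range(8)]  # eight made-up configurations
best, history = successive_halving(candidates, toy_score)
print("best candidate:", best)
for n_candidates, resource in history:
    print(f"{n_candidates} candidates evaluated with resource {resource}")
```

Each round halves the number of candidates and doubles the resource, which is the pattern described above: many cheap evaluations first, then a few expensive ones.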

Hyperband

Hyperband can be considered an extension of successive halving. The idea behind Hyperband is to run successive halving several times with different settings, so that the trade-off between the number of configurations and the resources allocated to each does not have to be fixed in advance. Because it is built on successive halving, it can still identify a good combination in less time.

In other words, Hyperband evaluates many combinations on the smallest budget and also performs a few very conservative runs on the full budget. In practice, Hyperband performs well across the whole range of budgets. The below image is a representation of the advantages of Hyperband over random search.
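The way Hyperband spreads its budget can be sketched by computing the schedule of its successive halving runs (often called brackets). The sketch below follows the commonly cited formulation from the Hyperband paper; the function name `hyperband_brackets` and the values `max_resource=81` and `eta=3` are assumptions made for illustration, and actual implementations such as HpBandSter may round the numbers differently.

```python
def hyperband_brackets(max_resource, eta=3):
    """Return one list of (n_configs, resource_per_config) rungs per bracket."""
    # s_max = floor(log_eta(max_resource)), computed with integers to avoid float error.
    s_max = 0
    while eta ** (s_max + 1) <= max_resource:
        s_max += 1

    brackets = []
    for s in range(s_max, -1, -1):
        # Initial number of configurations: ceil((s_max + 1) / (s + 1) * eta**s).
        n = -(-(s_max + 1) * eta ** s // (s + 1))
        # Initial resource given to each configuration in this bracket.
        r = max_resource / eta ** s
        # Successive halving inside the bracket: fewer configs, more resource.
        rungs = [(n // eta ** i, r * eta ** i) for i in range(s + 1)]
        brackets.append(rungs)
    return brackets


brackets = hyperband_brackets(81, eta=3)
for index, rungs in enumerate(brackets):
    print(f"bracket {index}: {rungs}")
```

The first bracket starts with many configurations on the smallest resource, while the last bracket runs only a handful of configurations directly on the full resource.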

In the next section of the article, we will introduce HpBandSter, a tool that can be used to run Hyperband optimization.

What is HpBandSter?

HpBandSter is a Python framework for distributed hyperparameter optimization. In the above sections, we saw the importance of hyperparameter optimization; this framework implements a variety of cutting-edge hyperparameter optimization algorithms, including Hyperband. With this tool, we can start from randomly sampled configurations and find a well-performing parameter combination in less time.

Using the below code we can install this framework.

!pip install hpbandster

Output:

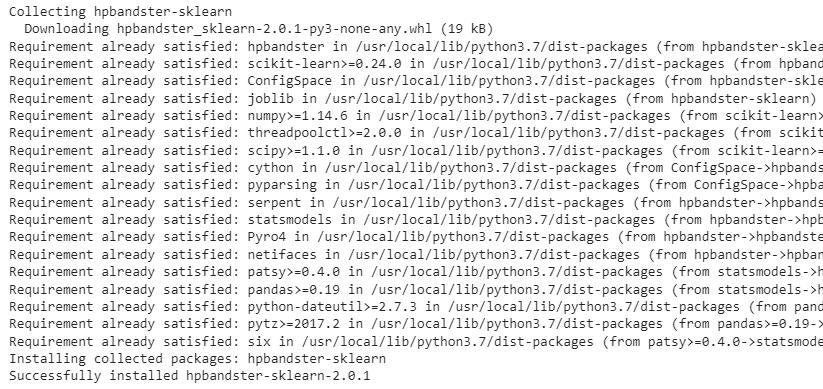

We can also use hpbandster-sklearn, a scikit-learn wrapper for HpBandSter. Using the below code we can install it.

!pip install hpbandster-sklearn


Implementation of Hyperband

In this section, we will see how to run Hyperband using the scikit-learn wrapper for HpBandSter. Let's start by importing the libraries:

import numpy as np

from sklearn.datasets import load_iris

from sklearn import tree

from sklearn.utils.validation import check_is_fitted

from hpbandster_sklearn import HpBandSterSearchCV

Making data and model instances:

X, y = load_iris(return_X_y=True)

clf = tree.DecisionTreeClassifier(random_state=0)

np.random.seed(0)

Defining a search space:

search_space = {"max_depth": [2, 3, 4], "min_samples_split": list(range(2, 12))}

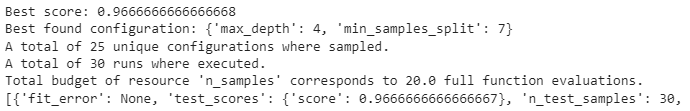

Fitting the model, data, and search space in the optimization module:

search = HpBandSterSearchCV(
    clf,
    search_space,
    random_state=0, n_jobs=1, n_iter=10, verbose=1,
    optimizer='hyperband',
).fit(X, y)

Output:

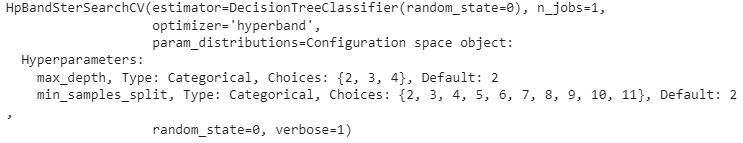

Here we can see the detailed results of the hyperparameter optimization. Let's check the search instance we created.

search

Output:

Let's check the best parameter combination found by the search:

search.best_params_

Output:

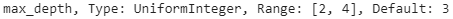

We can also define the search space as a ConfigSpace configuration space instead of a dictionary.

import ConfigSpace as CS

import ConfigSpace.hyperparameters as CSH

search_space = CS.ConfigurationSpace(seed=42)

search_space.add_hyperparameter(CSH.UniformIntegerHyperparameter("min_samples_split", 2, 11))

search_space.add_hyperparameter(CSH.UniformIntegerHyperparameter("max_depth", 2, 4))


Fitting the model, data, and search space in the optimization module:

search = HpBandSterSearchCV(
    clf,
    search_space,
    random_state=0, n_jobs=1, n_iter=10, verbose=1,
    optimizer='hyperband',
).fit(X, y)


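Since Hyperband ultimately performs successive halving, it relies on early stopping, so we need to tell the search which resource to vary and what budget range to use. Here the resource is n_samples with a float type, which we read as the fraction of training samples used, with budgets ranging from 0.2 to 1. This can be configured in the following way.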

search = HpBandSterSearchCV(
    clf,
    search_space,
    resource_name='n_samples',
    resource_type=float,
    min_budget=0.2,
    max_budget=1,
    optimizer='hyperband',
)

Let's check the parameters of the final instance:

search.get_params()

Output:

In the above output, we can see all the settings of the search object we are using for hyperparameter optimization.
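To get a feel for what the budget settings min_budget=0.2 and max_budget=1 used above could mean in practice, the following sketch builds a geometric ladder of budgets and converts each one into a number of iris samples. This is a simplified assumption rather than a description of HpBandSter's internals: the helper `budget_ladder` is hypothetical, `eta=3` is an assumed halving factor, and the budgets are assumed to be fractions of the 150 iris samples.

```python
def budget_ladder(min_budget, max_budget, eta=3):
    """Hypothetical helper: divide max_budget by eta repeatedly for as long
    as the result stays at or above min_budget, smallest budget first."""
    budgets = [max_budget]
    while budgets[-1] / eta >= min_budget:
        budgets.append(budgets[-1] / eta)
    return budgets[::-1]


n_total = 150  # number of samples in the iris dataset
fractions = budget_ladder(0.2, 1.0, eta=3)
sample_counts = [round(fraction * n_total) for fraction in fractions]
for fraction, count in zip(fractions, sample_counts):
    print(f"budget {fraction:.3f} -> about {count} samples")
```

Under these assumptions, weak configurations are discarded after being trained on only a part of the data, and only the survivors are trained on the full dataset.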

Final Words

In this article, we went through an introduction to hyperparameter optimization, Bayesian optimization, multi-fidelity optimization, successive halving, and Hyperband. We then discussed HpBandSter, a framework for hyperparameter optimization that includes Hyperband, and used its scikit-learn wrapper to run a Hyperband search.

References