In the last few years, self-supervised learning methods have been emerging rapidly. Models trained with these methods can learn useful representations from unlabeled data, which reduces the need for large labeled datasets, and their use in fields like computer vision and natural language processing has produced strong results. In this article, we are going to discuss self-supervised learning methods used in computer vision. We will also discuss contrastive learning, a self-supervised approach that addresses the data labeling problem in computer vision. The major points to be discussed in this article are listed below.

Table of Contents

- Why Self-supervised Learning is Needed?

- The Contrastive Learning

- Contrastive Predictive Coding (CPC)

- Instance Discrimination Methods

Let us begin the discussion by understanding why self-supervised learning is needed.

Why Self-supervised Learning is Needed?

Supervised learning techniques require a lot of labeled data. Labeling data is often costly and time-consuming, especially in computer vision, where tasks such as object detection require a bounding box to be drawn around every object in an image. Image segmentation is even more demanding, since every small detail must be carefully annotated, often down to the pixel. Unlabeled data, on the other hand, is available in abundance.

The basic idea behind self-supervised learning is to make a model learn the important representations of the data from a pool of unlabeled data. In this approach, the model generates its own supervisory signal from the structure of the data, so no human labels are needed during this training stage. The learned representations can then be fine-tuned with a small amount of labeled data for a downstream supervised task. In computer vision, that downstream task can be as simple as image classification or as complex as semantic segmentation.

In natural language processing, transformer models such as BERT and T5 have produced strong results. These models are also built on the idea of self-supervised learning: they are first pre-trained on a large amount of unlabeled text and then fine-tuned for a supervised task using a small amount of labeled data. Similarly, in computer vision, several models follow the idea of self-supervised learning, and in this article we are going to introduce some of them.

In computer vision, the basic idea behind self-supervised learning is to train a model on a task whose labels can be derived from the images themselves. While solving this task, the model learns about the structure of the objects in the images. There are many self-supervised learning methods, but in computer vision, contrastive methods have been among the most successful. Hence, in the next section of this article, we introduce contrastive learning.

The Contrastive Learning

To understand contrastive learning, refer to the image below, in which a model trained with contrastive learning has a function f() that takes an input a and produces the output f(a).

Suppose there are two types of inputs, positive and negative. If two similar (positive) inputs are passed to f(), as a1 and a2 are in the image below, their outputs should be similar, and these outputs should be dissimilar to the output for a negative input. This can be taken as the general statement of any contrastive learning approach.

A positive (similar) pair can be two patches of the same image or two frames from the same video, while a negative (dissimilar) input can be a patch from a different image or a frame from different visual data.
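A minimal sketch of this principle, assuming cosine similarity as the score and hand-made toy vectors in place of a trained f(): the score between the two positive outputs should be higher than the score between a positive output and the negative one. The vectors are hypothetical values chosen for illustration, not outputs of a real model.

```python
import math

def cosine_similarity(u, v):
    """Cosine of the angle between two vectors: 1.0 means same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

# Hypothetical encoder outputs: f(a1) and f(a2) for a positive pair
# (e.g. two patches of the same image), f(b) for a negative input.
f_a1 = [0.9, 0.1, 0.3]
f_a2 = [0.8, 0.2, 0.4]
f_b = [-0.2, 0.9, -0.5]

positive_score = cosine_similarity(f_a1, f_a2)
negative_score = cosine_similarity(f_a1, f_b)

# The contrastive objective asks for score(positive) > score(negative).
print(f"positive: {positive_score:.3f}, negative: {negative_score:.3f}")
print("contrastive condition holds:", positive_score > negative_score)
```

Training would adjust f() so that this condition holds for real positive and negative inputs, not just for hand-picked vectors.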

Contrastive Predictive Coding (CPC)

The general idea behind contrastive predictive coding (CPC) is to split an image into a coarse grid of cells, take the upper rows of the grid as context, and predict the lower rows. To generate these predictions, the model needs to learn the structure of the image and the objects in it. For example, after seeing a cat's face, the model can predict that the cat's legs will appear in the lower part of the image.

For images, the CPC procedure can be divided into three steps:

- Divide the image into a grid of overlapping cells. For example, a 256×256 image can be divided into a 7×7 grid of 64×64-pixel cells, where each cell overlaps its neighbors by 32 pixels.

- Encode each grid cell into a vector. For the 256×256 image above, each cell can be encoded into a 1024-dimensional vector, so the whole image is converted into a 7×7×1024 tensor.

- Use an autoregressive model to predict the encoded vectors of the lower rows from the encoded vectors of the upper rows. For example, the vectors in the top 3 rows of the 7×7×1024 tensor can be used to predict the vectors in the bottom 3 rows. A PixelCNN-style model can be used for this step.
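The grid arithmetic in the first step can be checked with a short sketch. With 64-pixel cells and a 32-pixel stride (so neighboring cells overlap by 32 pixels), a 256-pixel side yields (256 − 64) / 32 + 1 = 7 cell positions. The sketch also lists which rows act as context and which as prediction targets in the 3-row example. The helper names are hypothetical, and no encoder or PixelCNN is implemented here.

```python
def grid_positions(image_size, cell_size, stride):
    """Top-left offsets of overlapping cells along one image side."""
    return list(range(0, image_size - cell_size + 1, stride))

def grid_cells(image_size=256, cell_size=64, stride=32):
    """(row, col, y, x) for every cell of the square grid."""
    offsets = grid_positions(image_size, cell_size, stride)
    return [
        (r, c, y, x)
        for r, y in enumerate(offsets)
        for c, x in enumerate(offsets)
    ]

cells = grid_cells()
side = len(grid_positions(256, 64, 32))
print(f"{side}x{side} grid, {len(cells)} cells")  # 7x7 grid, 49 cells

# After encoding, each cell becomes a 1024-dimensional vector,
# so the image is represented by a side x side x 1024 tensor.
EMBED_DIM = 1024
print("tensor shape:", (side, side, EMBED_DIM))

# Context/target split from the example: the top 3 rows are the
# context, and the autoregressive model predicts the bottom 3 rows.
context_rows = list(range(0, 3))
target_rows = list(range(side - 3, side))
print("context rows:", context_rows, "target rows:", target_rows)
```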

The image below illustrates these steps.

To train such a model, we need a loss function that measures how well the model's predictions separate positive pairs from negative pairs. The loss uses a set X of N patches that contains one positive sample and N − 1 negative samples. It is based on noise-contrastive estimation: the model must pick out the positive sample from the negatives, which act as noise. This loss is called InfoNCE, where NCE stands for noise-contrastive estimation.

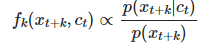
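A minimal sketch of the InfoNCE loss for a single prediction, assuming a plain dot product as the score (CPC itself uses a learned log-bilinear score) and hypothetical toy vectors. The candidate list plays the role of the set X, with the positive at index 0 and the rest as negatives; the loss is the negative log of the softmax probability given to the positive.

```python
import math

def dot(u, v):
    return sum(a * b for a, b in zip(u, v))

def info_nce(prediction, candidates, positive_index=0):
    """InfoNCE loss for one prediction.

    candidates: the set X of N vectors; the one at positive_index is the
    true target and the rest are negatives. Returns
    -log( exp(s_pos) / sum_j exp(s_j) ), the negative log-softmax
    of the positive score.
    """
    scores = [dot(prediction, c) for c in candidates]
    # log-sum-exp with max subtraction for numerical stability
    m = max(scores)
    log_denominator = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_denominator - scores[positive_index]

# Hypothetical predicted vector for a lower-row cell and N = 4 candidates.
prediction = [1.0, 0.5, -0.2]
candidates = [
    [0.9, 0.6, -0.1],    # positive: the true encoded cell
    [-0.5, 0.2, 0.8],    # negatives: cells from elsewhere
    [0.1, -0.9, 0.3],
    [-0.7, -0.1, -0.4],
]

loss = info_nce(prediction, candidates)
print(f"InfoNCE loss: {loss:.4f}")
# If all scores were equal the loss would be log(N); lower is better.
print(f"log(N) baseline: {math.log(len(candidates)):.4f}")
```

During training, this loss would be averaged over many predictions, and minimizing it pushes the score of the positive above the scores of the negatives.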

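Optimizing this loss results in the scoring function $f_k$ estimating a density ratio:

$$f_k(x_{t+k}, c_t) \propto \frac{p(x_{t+k} \mid c_t)}{p(x_{t+k})}$$

Here $c_t$ is the context summarized from the upper rows and $x_{t+k}$ is a patch $k$ rows further down.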

The InfoNCE loss has the same form as the log-softmax (categorical cross-entropy) loss commonly used to compare predictions with target values: the positive sample plays the role of the correct class among the N candidates. The idea of CPC was introduced in the paper "Representation Learning with Contrastive Predictive Coding," which compares the accuracy of representations learned with different techniques, as shown in the table below.

| Method | Top-1 accuracy on ImageNet (%) |
|---|---|
| Motion Segmentation | 27.6 |
| Exemplar | 31.5 |
| Relative Position | 36.2 |
| Colorization | 39.6 |
| Contrastive Predictive Coding (CPC) | 48.7 |

The table shows that CPC outperforms the other self-supervised methods listed, but its performance is still far below that of many supervised models: for example, a ResNet-50 trained with 100% of the ImageNet labels reaches 76.5% top-1 accuracy. Instance discrimination methods build on CPC's contrastive idea to improve accuracy further, and the next section of the article introduces them.

Instance Discrimination Methods

As we have seen, CPC applies the contrastive objective to parts of an image. Instance discrimination methods instead apply the same contrastive idea to whole images. An image and an augmented version of it form a positive pair and should have similar representations, while any other image, or an augmented version of it, should have a different representation.

The purpose of augmentation is to make the learned representation invariant to changes that do not affect what the image shows. Typical augmentations include horizontal flips, random crops, and color changes. An augmented image looks different from the original, but the class information learned by the model, and therefore its representation, should not change.
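A minimal sketch of two augmentations from this list, horizontal flip and random crop, plus a simple brightness change standing in for color changes, applied to a tiny grayscale image stored as a list of pixel rows. The image, function names, and parameter values are hypothetical; real pipelines would use an image library and usually resize crops back to a fixed size.

```python
import random

def horizontal_flip(image):
    """Mirror the image left-to-right by reversing each pixel row."""
    return [list(reversed(row)) for row in image]

def random_crop(image, crop_h, crop_w, rng):
    """Cut a crop_h x crop_w window at a random position."""
    top = rng.randint(0, len(image) - crop_h)
    left = rng.randint(0, len(image[0]) - crop_w)
    return [row[left:left + crop_w] for row in image[top:top + crop_h]]

def adjust_brightness(image, factor):
    """Scale pixel intensities, clipping to the 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in image]

def augment(image, rng):
    """Randomly flip, then crop, then jitter brightness."""
    if rng.random() < 0.5:
        image = horizontal_flip(image)
    image = random_crop(image, 3, 3, rng)
    return adjust_brightness(image, rng.uniform(0.8, 1.2))

# A hypothetical 4x4 grayscale image.
image = [
    [10, 20, 30, 40],
    [50, 60, 70, 80],
    [90, 100, 110, 120],
    [130, 140, 150, 160],
]

rng = random.Random(0)
view_1 = augment(image, rng)
view_2 = augment(image, rng)
# The two views differ, but both come from the same image,
# so they form a positive pair whose representations should match.
print(view_1)
print(view_2)
```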

Training with this method can be divided into three basic steps:

- Give the model an image together with a randomly augmented version of it to form a positive pair, and also feed it negative samples (other images) with their augmented versions.

- Encode both images of the pair with an encoder to get their representations, and use the encoder to compute representations of the negative samples as well.

- Apply the InfoNCE loss, which rewards high similarity between the two images of the positive pair and low similarity between the positive pair and the negative samples.
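The three steps can be put together in a small sketch. It assumes a hypothetical encoder (a fixed random linear projection rather than a trained network), tiny 2×2 images, a random horizontal flip plus small noise as the augmentation, and cosine similarity divided by a temperature as the score inside InfoNCE. It computes the loss for one anchor image; no training step is performed.

```python
import math
import random

rng = random.Random(42)

def augment(image):
    """Step 1: random horizontal flip plus small pixel noise."""
    if rng.random() < 0.5:
        image = [list(reversed(row)) for row in image]
    return [[p + rng.gauss(0.0, 0.05) for p in row] for row in image]

def make_encoder(in_dim, out_dim):
    """Hypothetical encoder: a fixed random linear projection."""
    weights = [[rng.gauss(0.0, 1.0) for _ in range(in_dim)] for _ in range(out_dim)]
    def encode(image):
        flat = [p for row in image for p in row]
        return [sum(w * x for w, x in zip(w_row, flat)) for w_row in weights]
    return encode

def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))

def info_nce(anchor, positive, negatives, temperature=0.1):
    """Step 3: -log softmax of the positive among positive + negatives."""
    scores = [cosine(anchor, positive) / temperature]
    scores += [cosine(anchor, n) / temperature for n in negatives]
    m = max(scores)
    log_sum = m + math.log(sum(math.exp(s - m) for s in scores))
    return log_sum - scores[0]

# Hypothetical 2x2 "images": one anchor image and two other images.
anchor_image = [[1.0, 0.2], [0.3, 0.9]]
other_images = [[[0.1, 1.0], [0.8, 0.0]], [[0.5, 0.5], [0.0, 0.2]]]

encode = make_encoder(in_dim=4, out_dim=8)

# Step 1: two augmented views of the anchor form the positive pair;
# augmented versions of the other images are the negatives.
view_a, view_b = augment(anchor_image), augment(anchor_image)
negative_views = [augment(img) for img in other_images]

# Step 2: encode every view.
z_a, z_b = encode(view_a), encode(view_b)
z_negs = [encode(v) for v in negative_views]

# Step 3: the loss is low when z_a is closer to z_b than to the negatives.
loss = info_nce(z_a, z_b, z_negs)
print(f"InfoNCE loss for this anchor: {loss:.4f}")
```

Because the encoder here is untrained and not invariant to flips, the loss value itself carries no meaning; training would update the encoder to lower it across many images.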

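Two well-known papers built on instance discrimination are SimCLR and Momentum Contrast (MoCo). The major difference between them is how they handle negative samples: SimCLR uses the other images in the same training batch as negatives, which calls for large batches, while MoCo keeps a queue of negatives encoded by a momentum encoder, a slowly updated copy of the main encoder. Their techniques can be used to build self-supervised learning models for computer vision.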
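The difference in how SimCLR and MoCo obtain negative samples can be sketched without any neural network. In SimCLR, a batch of N images gives 2N augmented views; each view's partner is its positive and the other 2N − 2 views are negatives. In MoCo, negatives are keys from earlier batches held in a fixed-size FIFO queue, and the key encoder follows the query encoder through the momentum update θ_k ← m·θ_k + (1 − m)·θ_q (the MoCo paper uses m = 0.999 by default). In this sketch, parameters are plain lists of floats, strings stand in for key vectors, and the class and function names, queue size, and momentum value are illustrative assumptions.

```python
from collections import deque

def simclr_negatives(batch_size):
    """SimCLR-style: 2N views from N images. View i's partner is its
    positive; the other 2N - 2 views in the batch are negatives."""
    num_views = 2 * batch_size
    negatives = {}
    for i in range(num_views):
        partner = i + batch_size if i < batch_size else i - batch_size
        negatives[i] = [j for j in range(num_views) if j not in (i, partner)]
    return negatives

class MoCoNegatives:
    """MoCo-style: negatives are keys from earlier batches in a FIFO
    queue, produced by a momentum copy of the query encoder."""

    def __init__(self, query_params, queue_size, momentum=0.999):
        self.query_params = list(query_params)
        self.key_params = list(query_params)   # key encoder starts as a copy
        self.queue = deque(maxlen=queue_size)  # oldest keys drop out first
        self.momentum = momentum

    def momentum_update(self):
        # theta_k <- m * theta_k + (1 - m) * theta_q
        m = self.momentum
        self.key_params = [
            m * k + (1 - m) * q for k, q in zip(self.key_params, self.query_params)
        ]

    def enqueue(self, keys):
        """Add this batch's keys; they serve as negatives for later batches."""
        self.queue.extend(keys)

    def negatives(self):
        return list(self.queue)

negs = simclr_negatives(batch_size=4)
print(len(negs[0]))  # 6 negatives = 2 * 4 - 2

moco = MoCoNegatives(query_params=[0.0, 0.0], queue_size=5, momentum=0.75)
moco.query_params = [1.0, 1.0]  # pretend the query encoder was just updated
moco.momentum_update()
print(moco.key_params)  # keys move only part of the way toward the queries
for batch in range(3):
    moco.enqueue([f"key_{batch}_{i}" for i in range(2)])
print(moco.negatives())  # only the 5 most recent keys are kept
```

The queue lets MoCo use many negatives without a large batch, while the slow momentum update keeps the keys in the queue consistent with each other.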

Final Words

In this article, we have seen that in computer vision, self-supervised learning is a representation learning approach that learns from unlabeled data, so that downstream supervised models need far fewer labels. This can greatly reduce the cost, time, and effort of labeling data. There are various models based on contrastive learning, such as MoCo and SimCLR, that can be used for self-supervised learning in computer vision.