Any supervised learning problem involves two kinds of variables: the dependent variable and one or more explanatory variables. Explanatory variables can be qualitative (categorical), with either two categories or more than two. One way to quantify such attributes is to construct artificial variables, known as dummy variables, that take the value 0 or 1. In this article, we will look at the effect of these artificial variables on learning algorithms. The following topics are discussed.

Table of contents

- About dummy variable

- About multicollinearity

- About dummy variable trap

- Solution for dummy variable trap

Let’s start with the understanding of the concept of a dummy variable.

About dummy variable

In regression analysis, a dummy variable represents subgroups of the sample numerically. In the simplest case, a dummy variable takes the value 0 if the observation belongs to the control group and 1 if it belongs to the treated group. These variables are useful because a single regression equation can then be applied to multiple groups.

Therefore, it is not necessary to write separate equation models for each subgroup. In an equation, the dummy variables act as ‘switches’ that toggle various parameters on and off. Another advantage of a 0,1 dummy-coded variable is that it can be treated statistically as an interval-level variable even though it is a nominal-level variable.

- A Nominal Scale is a measurement scale in which the numerical labels are only used to identify or label objects. The scale is usually used to measure variables that are not numerical (quantitative) or where no numerical values exist.

- An interval scale is a numerical scale that labels and orders variables based on a known, evenly spaced interval between each value. For example, temperature in Celsius is interval data: the difference between 10 and 20 degrees Celsius is the same as the difference between 50 and 60 degrees Celsius. Because the intervals are equal, distances between values can be calculated meaningfully.
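To make the 'switch' idea concrete, here is a minimal sketch of a single regression equation fitted to two groups. The outcome values and the name `treated` are hypothetical and chosen only for illustration. With an intercept and one 0/1 dummy, the intercept comes out as the control-group mean and the dummy's weight as the difference between the two group means.

```python
import numpy as np

# Hypothetical outcomes: first three rows are the control group, last three the treated group.
y = np.array([10.0, 12.0, 11.0, 15.0, 17.0, 16.0])
treated = np.array([0, 0, 0, 1, 1, 1])  # the 0/1 dummy variable

# One design matrix for both groups: a column of ones (intercept) plus the dummy.
X = np.column_stack([np.ones_like(y), treated])

# Ordinary least squares fit of y = intercept + effect * treated.
coef, *_ = np.linalg.lstsq(X, y, rcond=None)
intercept, effect = coef

# The dummy "switches on" the extra term only for treated rows.
print("control mean:", y[treated == 0].mean(), "fitted intercept:", intercept)
print("difference in means:", y[treated == 1].mean() - y[treated == 0].mean(), "fitted effect:", effect)
```

The same equation serves both groups, which is why no separate model per subgroup is needed.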

Let’s understand the problem with these dummy variables that happens due to multicollinearity.


About multicollinearity

Multicollinearity is a condition in which the independent (explanatory) variables are correlated with one another. When it is present, the model cannot separate the individual effect of each explanatory variable on the dependent variable, so the estimated relationships become unreliable.

Where is it found?

In this problem, multicollinearity arises between the dummy variables created from a single original variable. For example, suppose a categorical variable records age groups labelled child, adult and senior. To use it for prediction it must be encoded, so three dummy variables are created, each marking the presence (1) or absence (0) of one label. The information is the same as in the original variable; it has only been encoded and split across three columns. Because all three columns are derived from the same parent variable, they are related to each other.
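The age-group example can be sketched as follows. The five rows are made up for illustration; only the labels child, adult and senior come from the text. The point is that every row has exactly one 1 across the three dummy columns, so the columns always sum to 1.

```python
import pandas as pd

# Hypothetical sample of the age-group variable.
age = pd.Series(["child", "adult", "senior", "adult", "child"], name="age_group")

# One dummy column per label; dtype=int gives 0/1 instead of booleans.
dummies = pd.get_dummies(age, prefix="age", dtype=int)
print(dummies)

# Each observation belongs to exactly one group, so every row sums to 1.
row_sums = dummies.sum(axis=1)
print(row_sums.tolist())
```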

About dummy variable trap

The problem occurs when all dummy variables (one-hot-encoded features) of a category are used to train the model. Because the dummies are perfectly multicollinear, a linear model with an intercept cannot assign a unique weight to each of them: many different sets of weights fit the data equally well. It is called a trap because every dummy still gets a weight in the model equation, yet those weights are unstable and hard to interpret, and predictions can carry large errors.
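A short derivation may help show why the weights are not unique. The notation is assumed for illustration: a linear model with intercept \beta_0 and k dummies D_1, ..., D_k built from one categorical variable.

```latex
% k dummies from one categorical variable, plus an intercept
y = \beta_0 + \beta_1 D_1 + \beta_2 D_2 + \dots + \beta_k D_k + \varepsilon,
\qquad D_1 + D_2 + \dots + D_k = 1 \ \text{for every observation.}

% For any constant c, shifting the weights leaves every prediction unchanged:
(\beta_0 + c) + (\beta_1 - c) D_1 + \dots + (\beta_k - c) D_k
  = \beta_0 + \beta_1 D_1 + \dots + \beta_k D_k + c\,(1 - D_1 - \dots - D_k)
  = \beta_0 + \beta_1 D_1 + \dots + \beta_k D_k .
```

Since every value of c gives the same fit, the data cannot pin down a single set of weights.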

Let’s take an example to understand this phenomenon.

For example, consider employee data with a department explanatory variable that has four categories: IT, Accounts, Logistics and Customer Care. Since these values are stored as an object data type, they need to be converted to a numerical data type before they can be used as explanatory variables. So dummies are created for this variable, named Department_IT, Department_Accounts, Department_Logistics and Department_CustomerCare. Each contains 1 if the employee belongs to that department and 0 otherwise.

The problem with these dummy variables is that they are multicollinear because there is no baseline among them. In simpler words, Department_IT can be predicted exactly from the other three departments: it is 1 whenever all the others are 0. So we need to create k-1 dummy variables instead of k, where k is the number of categories.
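Here is a minimal sketch of the department example, using six made-up employee rows. It rebuilds Department_IT from the other three dummies and checks the rank of the design matrix once an intercept column is added. The rank check with NumPy is an illustration added here, not part of the original walkthrough.

```python
import numpy as np
import pandas as pd

# Hypothetical employee rows; the four department labels come from the text.
dept = pd.Series(
    ["IT", "Accounts", "Logistics", "CustomerCare", "IT", "Accounts"],
    name="Department",
)

# All k = 4 dummies, with no baseline.
dummies = pd.get_dummies(dept, prefix="Department", dtype=int)

# Department_IT is fully determined by the other three columns.
reconstructed_it = 1 - dummies.drop(columns="Department_IT").sum(axis=1)
print((reconstructed_it == dummies["Department_IT"]).all())

# With an intercept there are 5 columns, but they carry only 4 independent directions.
X = np.column_stack([np.ones(len(dummies)), dummies.to_numpy()])
rank = np.linalg.matrix_rank(X)
print("columns:", X.shape[1], "rank:", rank)
```

A rank lower than the number of columns is the numerical signature of perfect multicollinearity.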

Let’s understand the process of saving the model from this trap.

Solution for dummy variable trap

To avoid multicollinearity among the dummy variables, choose one category as a baseline and create dummies only for the remaining categories. In the above example, taking the IT department as the baseline overcomes the problem: an employee with 0 in all three remaining dummies belongs to IT.
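One way to use IT as the baseline is sketched below, again on made-up rows. Note that `drop_first=True` in pandas drops the first level in sorted order, which here would be Accounts rather than IT, so this sketch drops the Department_IT column explicitly instead.

```python
import numpy as np
import pandas as pd

# Hypothetical employee rows; the department labels come from the text.
dept = pd.Series(
    ["IT", "Accounts", "Logistics", "CustomerCare", "IT", "Accounts"],
    name="Department",
)

# Build all four dummies, then remove the chosen baseline category (IT).
dummies = pd.get_dummies(dept, prefix="Department", dtype=int)
with_baseline = dummies.drop(columns="Department_IT")
print(with_baseline)  # rows that are all 0 are IT employees

# Intercept plus k-1 = 3 dummies: the design matrix now has full column rank.
X = np.column_stack([np.ones(len(with_baseline)), with_baseline.to_numpy()])
rank = np.linalg.matrix_rank(X)
print("columns:", X.shape[1], "rank:", rank)
```

The choice of baseline does not change the model's predictions; it only changes which category the other weights are measured against.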

Let’s implement this theoretical solution in Python.

Implementation

Import commonly used libraries

import pandas as pd

import numpy as np

Reading the data

df=pd.read_csv("student-por.csv")

df_cat=df.select_dtypes(include=object)

df_cat.head()

The data relates to student achievement in secondary education. Only the categorical features are selected here. The dataset comes from a Kaggle repository, which is linked in the reference.

Create dummies without the baseline

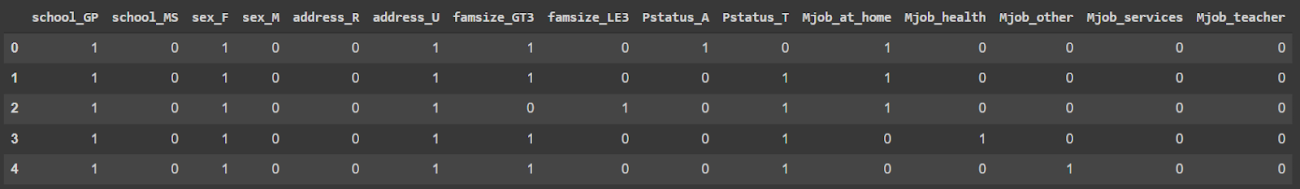

dum_trap=pd.get_dummies(data=df_cat)

dum_trap.iloc[:5,:15]

Here the pandas function get_dummies is used to create the dummy variables. Since there are many of them, only the first 15 are displayed.

A total of 43 dummy variables are created, but they suffer from multicollinearity because none of the original variables has a base category. Let’s set a baseline for every categorical variable by using the drop_first parameter of get_dummies.

Creating dummies with baseline

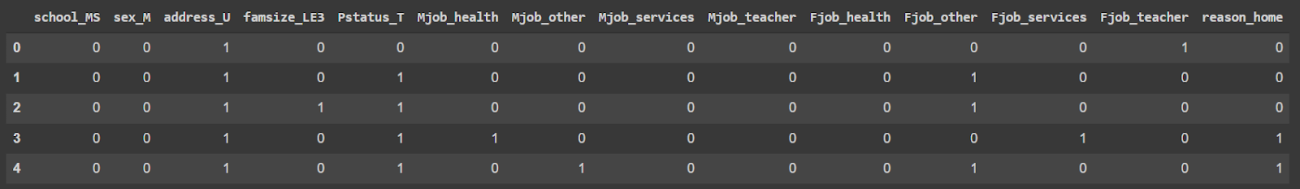

dum=pd.get_dummies(data=df_cat,drop_first=True)

dum.iloc[:5,:14]

The total number of dummy variables is reduced from 43 to 26, and the perfect multicollinearity within each set of dummies is removed.
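The size of the reduction follows from how drop_first works: one dummy is removed per categorical column, so a drop of 43 - 26 = 17 columns corresponds to 17 categorical columns. The sketch below checks the same arithmetic on a small hypothetical frame; `toy` and its values are made up and are not the student dataset.

```python
import pandas as pd

# Hypothetical frame with three categorical columns having 2, 3 and 4 levels.
toy = pd.DataFrame({
    "sex": ["F", "M", "F", "M"],
    "guardian": ["mother", "father", "other", "mother"],
    "reason": ["course", "home", "reputation", "other"],
})

all_dummies = pd.get_dummies(toy)                        # k dummies per column
baseline_dummies = pd.get_dummies(toy, drop_first=True)  # k-1 dummies per column

n_levels = int(toy.nunique().sum())  # total number of levels across columns
print("without baseline:", all_dummies.shape[1])
print("with baseline:", baseline_dummies.shape[1])
print("columns dropped:", all_dummies.shape[1] - baseline_dummies.shape[1])
```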

Verdict

The dummy variable trap is a multicollinearity problem that can cause an algorithm to predict with large errors and increases the possibility of overfitting. Not setting a baseline when creating dummy variables is a common mistake. In this article, we covered the dummy variable trap hands-on, along with its solution.