
Linear Discriminant Analysis (LDA) is a method that separates data points by projecting high-dimensional data onto a lower-dimensional space in which the classes are as distinct as possible, with a linear decision boundary between them. LDA is used as a tool for classification, dimensionality reduction, and data visualization. Despite its simplicity, it often produces robust and interpretable classification results. In this article, we will have an introduction to LDA, focusing specifically on its classification capabilities. Below is a list of points that we will cover in this article.

Table of contents

- Why was Linear Discriminant Analysis introduced?

- About Linear Discriminant Analysis (LDA)

- LDA for Binary Classification in Python

Let’s understand why Linear Discriminant Analysis (LDA) was introduced for classification.

Why was LDA introduced?

To understand why LDA was introduced, we first need to look at the limitations of Logistic Regression:

- Logistic Regression is traditionally used for binary classification problems. It can be extended to multi-class classification, but it is used less often in that setting.

- Logistic Regression can be unstable when the classes are well separated: the parameter estimates become very large and vary considerably from one sample to another.

- Logistic Regression is unstable when there are only a few examples from which to estimate the parameters.

LDA addresses these limitations of logistic regression. Moving further in the article, let’s have a brief introduction to our benchmark classifier, Linear Discriminant Analysis (LDA).
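The instability on well-separated classes can be seen on a toy example. The sketch below is only an illustration under stated assumptions: the four data points are made up, and the parameter `C` (scikit-learn's inverse regularization strength) is used to weaken the penalty that normally keeps the logistic regression coefficient bounded.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.linear_model import LogisticRegression

# Toy, perfectly separable 1-D data (hypothetical values, for illustration only).
X_toy = np.array([[-2.0], [-1.0], [1.0], [2.0]])
y_toy = np.array([0, 0, 1, 1])

# In scikit-learn, C is the inverse regularization strength:
# a larger C means a weaker penalty on the coefficients.
strong_reg = LogisticRegression(C=1.0, max_iter=10_000).fit(X_toy, y_toy)
weak_reg = LogisticRegression(C=1e6, max_iter=10_000).fit(X_toy, y_toy)

strong_reg_norm = float(np.abs(strong_reg.coef_).sum())
weak_reg_norm = float(np.abs(weak_reg.coef_).sum())

# LDA estimates class means and a shared covariance instead,
# so its coefficient stays finite on the same data.
lda_toy = LinearDiscriminantAnalysis().fit(X_toy, y_toy)
lda_norm = float(np.abs(lda_toy.coef_).sum())

print("logistic regression |coef|, C=1   :", strong_reg_norm)
print("logistic regression |coef|, C=1e6 :", weak_reg_norm)
print("LDA |coef|                        :", lda_norm)
```

As the penalty is weakened, the logistic regression coefficient keeps growing, because on separable data the unpenalized likelihood has no finite maximum.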


About Linear Discriminant Analysis (LDA)

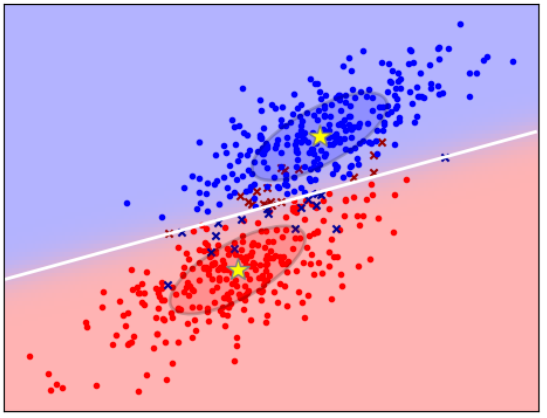

Linear Discriminant Analysis is a technique for classifying observations into two or more classes, using a linear algorithm to learn the relationship between the dependent and independent features. It uses Fisher's criterion to reduce the dimensionality of the data by projecting it onto a lower-dimensional linear subspace. LDA is a multi-functional algorithm: it is a classifier, a dimensionality reducer, and a data visualizer. The aim of LDA is:

- To minimize the intra-class (within-class) variability, so that points of the same class are grouped as tightly as possible. This ensures fewer misclassifications.

- To maximize the distance between the class means, placing them as far apart as possible to ensure high confidence during prediction.

In the above representation, there are two classes whose data share a common covariance. They are separated by a linear decision boundary.
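The two aims can be combined into a single ratio: the squared distance between the projected class means divided by the within-class scatter of the projected points. For two classes, the direction that maximizes this ratio is proportional to the inverse of the within-class scatter matrix applied to the difference of the class means. The following is a minimal NumPy sketch of that idea; the synthetic Gaussian data and all variable names are assumptions made for illustration.

```python
import numpy as np

# Two synthetic classes that share one covariance matrix (assumed values).
rng = np.random.default_rng(0)
shared_cov = np.array([[1.0, 0.6], [0.6, 1.0]])
X0 = rng.multivariate_normal([0.0, 0.0], shared_cov, size=200)
X1 = rng.multivariate_normal([3.0, 2.0], shared_cov, size=200)

mu0, mu1 = X0.mean(axis=0), X1.mean(axis=0)

# Within-class scatter: summed outer products of deviations from each class mean.
S_w = (X0 - mu0).T @ (X0 - mu0) + (X1 - mu1).T @ (X1 - mu1)

# Fisher direction for two classes: w is proportional to S_w^{-1} (mu1 - mu0).
w = np.linalg.solve(S_w, mu1 - mu0)

def fisher_ratio(direction):
    """Squared gap between projected means over projected within-class scatter."""
    z0, z1 = X0 @ direction, X1 @ direction
    between = (z1.mean() - z0.mean()) ** 2
    within = z0.var() * len(z0) + z1.var() * len(z1)
    return between / within

ratio_fisher = fisher_ratio(w)
ratio_mean_diff = fisher_ratio(mu1 - mu0)        # ignores the covariance
ratio_x_axis = fisher_ratio(np.array([1.0, 0.0]))  # arbitrary direction

print("Fisher direction      :", ratio_fisher)
print("mean-difference only  :", ratio_mean_diff)
print("first coordinate axis :", ratio_x_axis)
```

The Fisher direction scores at least as high as the other two directions, which is what "far-apart means with tight classes" amounts to in one dimension.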

Let’s implement LDA on the data and observe the outcomes.

LDA for Binary Classification in Python

The LinearDiscriminantAnalysis class is available in the sklearn library. Let’s start by importing the libraries that are going to be used in this article.

Importing libraries

import pandas as pd

import numpy as np

import matplotlib.pyplot as plt

import seaborn as sns

from sklearn.model_selection import train_test_split

from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

from sklearn.datasets import make_classification

from sklearn.metrics import ConfusionMatrixDisplay, precision_score, recall_score, confusion_matrix

import warnings

warnings.filterwarnings('ignore')

Reading the data

df=pd.read_csv("/content/drive/MyDrive/Datasets/heart.csv")

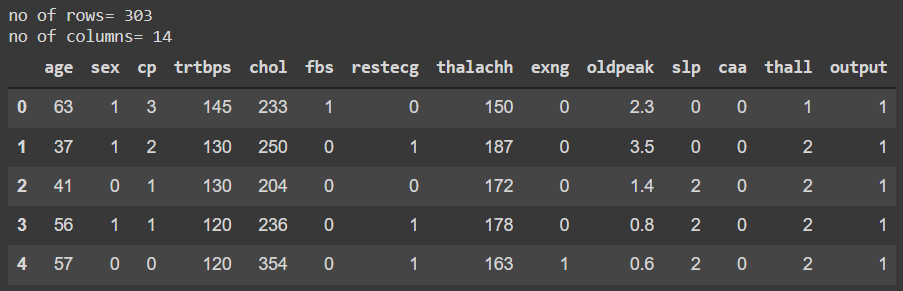

print("no of rows=",df.shape[0],"\nno of columns=",df.shape[1])

df.head()

There are a total of 14 columns: 13 features plus the dependent variable “output”. The data is used to predict the chance of a patient having a heart attack.

Preparing data for training

X=df.drop('output',axis=1)

y=df['output']

X_train, X_test, y_train, y_test = train_test_split( X, y, test_size=0.30, random_state=42)

The data is split into train and test sets in a 70:30 ratio. The shapes of the resulting dependent and independent variable sets can be checked with their shape attribute.
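For readers who do not have heart.csv at hand, the split can be reproduced on stand-in data. This is a sketch only: the synthetic dataset from make_classification, its 300 rows, and the variable names are assumptions, chosen so that the number of features (13) matches the description above.

```python
from sklearn.datasets import make_classification
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the heart data: 300 rows is an assumed size,
# 13 features mirrors the feature count described in the article.
X_syn, y_syn = make_classification(
    n_samples=300, n_features=13, n_informative=5, n_redundant=0, random_state=42
)

# Same 70:30 split and random_state as in the article.
X_tr, X_te, y_tr, y_te = train_test_split(
    X_syn, y_syn, test_size=0.30, random_state=42
)

print("X_train:", X_tr.shape, "y_train:", y_tr.shape)
print("X_test :", X_te.shape, "y_test :", y_te.shape)
```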

Fitting LDA with data

LDA = LinearDiscriminantAnalysis()

LDA.fit(X_train, y_train)

LDA_pred=LDA.predict(X_test)

The model is now trained on the training data and has predicted labels for the test data. Let’s evaluate the quality of these predictions.
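Beyond predict, a fitted LinearDiscriminantAnalysis object also exposes what it has learned. The sketch below uses a synthetic stand-in dataset (an assumption, as are the variable names) to show the class means, the one-dimensional projection obtained with transform, and the class probabilities from predict_proba.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis

# Synthetic binary data standing in for the heart dataset (assumed sizes).
X_demo, y_demo = make_classification(
    n_samples=300, n_features=13, n_informative=5, n_redundant=0, random_state=0
)

lda_demo = LinearDiscriminantAnalysis().fit(X_demo, y_demo)

# One mean vector per class, each with one entry per feature.
print("class means shape:", lda_demo.means_.shape)

# With two classes, LDA can project the data onto at most one discriminant axis.
X_proj = lda_demo.transform(X_demo)
print("projected shape  :", X_proj.shape)

# Estimated probability of each class for the first five rows.
proba = lda_demo.predict_proba(X_demo[:5])
print(np.round(proba, 3))
```

The single projected column is the reduced representation that LDA uses as a dimensionality reducer, and it can be plotted directly as a histogram per class.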

Creating Confusion Matrix

tn, fp, fn, tp = confusion_matrix(list(y_test), list(LDA_pred), labels=[0, 1]).ravel()

print('True Positive', tp)

print('True Negative', tn)

print('False Positive', fp)

print('False Negative', fn)

ConfusionMatrixDisplay.from_predictions(y_test, LDA_pred)

plt.show()

With the help of the above confusion matrix, we can calculate the precision and recall scores. We will be using the precision score for this problem because it is the positive predictive value: of all the patients that the model predicts as positive (suffering from a heart attack), it is the fraction that are actually positive.
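Both scores follow directly from the four counts of the confusion matrix: precision is tp / (tp + fp) and recall is tp / (tp + fn). The small example below checks this against scikit-learn; the ten labels are made-up values used only for illustration.

```python
import numpy as np
from sklearn.metrics import confusion_matrix, precision_score, recall_score

# Hypothetical true labels and predictions (not taken from the heart data).
y_true = np.array([1, 1, 1, 1, 0, 0, 0, 0, 1, 0])
y_hat = np.array([1, 1, 0, 1, 0, 1, 0, 0, 1, 0])

tn, fp, fn, tp = confusion_matrix(y_true, y_hat, labels=[0, 1]).ravel()

# Precision: of everything predicted positive, how much is truly positive.
precision_manual = tp / (tp + fp)
# Recall: of everything truly positive, how much was found.
recall_manual = tp / (tp + fn)

print("precision:", precision_manual, precision_score(y_true, y_hat))
print("recall   :", recall_manual, recall_score(y_true, y_hat))
```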

Calculating precision score

print("Precision score",precision_score(y_test,LDA_pred))

Precision score 0.82

Our model has a precision of 82% in predicting that a patient is suffering from a heart attack. This score is not good enough, because in medical problems the model needs to be about 99% confident in its decision. So, to improve the model's performance, the goal is to reduce the false positives and false negatives, which are also known as type 1 and type 2 errors respectively. In this case, we should focus more on reducing false negatives, because these are patients who are or will be suffering from a heart attack but whom the model has classified as negative (non-heart attack patients). Since false negatives are captured by recall rather than precision, the recall score should be tracked alongside precision.
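One common way to trade false negatives for false positives is to lower the probability threshold at which a patient is flagged as positive. The sketch below illustrates this on synthetic stand-in data; the dataset, the threshold of 0.3, and the variable names are assumptions for illustration, not values tuned for the heart data.

```python
from sklearn.datasets import make_classification
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis
from sklearn.metrics import precision_score, recall_score
from sklearn.model_selection import train_test_split

# Synthetic stand-in for the heart data (assumed sizes, with some label noise).
X_syn, y_syn = make_classification(
    n_samples=600, n_features=13, n_informative=5, n_redundant=0,
    flip_y=0.1, random_state=1
)
X_tr, X_te, y_tr, y_te = train_test_split(
    X_syn, y_syn, test_size=0.30, random_state=42, stratify=y_syn
)

model = LinearDiscriminantAnalysis().fit(X_tr, y_tr)

# Column 1 holds the estimated probability of the positive class (label 1).
p_positive = model.predict_proba(X_te)[:, 1]

scores = {}
for threshold in (0.5, 0.3):  # 0.3 is an arbitrary, lower example threshold
    pred = (p_positive >= threshold).astype(int)
    scores[threshold] = {
        "precision": precision_score(y_te, pred),
        "recall": recall_score(y_te, pred),
    }
    print(threshold, scores[threshold])
```

Lowering the threshold can only turn negative predictions into positive ones, so recall never decreases, while precision usually drops. Where to set the threshold depends on how costly each type of error is.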

Final verdict

Linear Discriminant Analysis uses the distance to the class means, which is easy to interpret, uses a linear decision boundary to explain the classification, and reduces the dimensionality of the data. With the hands-on implementation in this article, we could understand how Linear Discriminant Analysis is used for classification.