Transfer learning is not a new concept. Ever since we humans began training machines to learn, classify and predict data, we have looked for ways to retain what the machine has already learnt.

Learning is not an easy process, for humans or for machines. It is a heavy-duty process that consumes both time and resources, so it was important to devise a method that prevents a model from forgetting what it has learnt from one dataset while letting it learn more from new and different datasets.

Transfer learning is simply the process of taking a model that has already been trained on one dataset and reusing it to train and predict on a new dataset.

“A pre-trained model is a saved network that was previously trained on a large dataset, typically on a large-scale image-classification task.”

In this article, we will use transfer learning to classify the images of cats and dogs from MachineHack’s Who Let The Dogs Out: Pets Breed Classification Hackathon.

Getting the dataset

Head to MachineHack, sign up and start the Who Let The Dogs Out: Pets Breed Classification Hackathon. The datasets can be downloaded on the assignment page. The training set consists of 6206 images of both cats and dogs of different breeds. We will use these images and their respective classes provided in the train.csv file to train our classifier to categorize a given image as either the image of a cat or a dog.

As an introductory tutorial, we will keep it simple by performing a binary classification. That is, we will only predict whether a given image is that of a cat or a dog. If you wish to do Multi-Label classification by also predicting the breed, refer to Hands-On Guide To Multi-Label Image Classification With Tensorflow & Keras.

Transfer Learning With MobileNet V2

The MobileNet V2 model was developed at Google and pre-trained on the ImageNet dataset, which consists of 1.4M web images in 1000 classes. We will use this as our base model to train with our dataset and classify the images of cats and dogs.

Let’s code!

Importing TensorFlow and necessary libraries

import tensorflow as tf

from tensorflow import keras

from tensorflow.keras.models import Sequential

from tensorflow.keras.layers import Dense, Conv2D, MaxPooling2D, Flatten

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

print(tf.__version__)

The print call will display your TensorFlow version.

Preparing the training data

#Read the train.csv file from the right location

training_data = pd.read_csv("Cats_and_Dogs/Dataset/train.csv")

#Appending the file extension to the image names

training_imgs = ["{}.jpg".format(x) for x in list(training_data.id)]

#Creating a new dataframe with updated images names

training_labels_1 = list(training_data['class_name'])

training_data = pd.DataFrame( {'Images': training_imgs,'Animal': training_labels_1})

#Changing the type of categorical variable(from int to str)

training_data.Animal = training_data.Animal.astype(str)

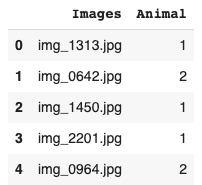

Before feeding the data into the network, we will load and prepare it for processing. We will read the train.csv file and update it by adding the file extension (.jpg) to each of the image names. We will also change the type of the categorical variable class_name, which is renamed to ‘Animal’, from integer to string.
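Since the file names are built by hand from the ids, it can be worth checking that every name actually points to a file before training. The sketch below is one way to do that with the standard library only; the helper name find_missing_images and the demo file names are our own inventions, and a temporary directory stands in for the real images folder.

```python
import tempfile
from pathlib import Path


def find_missing_images(file_names, image_dir):
    """Return the file names that do not exist inside image_dir."""
    image_dir = Path(image_dir)
    return [name for name in file_names if not (image_dir / name).is_file()]


# Self-contained demo: a temporary directory stands in for
# "Cats_and_Dogs/Dataset/images_train/" and the file names are made up.
with tempfile.TemporaryDirectory() as tmp:
    for name in ["img_1.jpg", "img_2.jpg"]:
        (Path(tmp) / name).touch()
    missing = find_missing_images(["img_1.jpg", "img_2.jpg", "img_3.jpg"], tmp)

print(missing)  # ['img_3.jpg']
```

With the real data, the call would look like find_missing_images(training_data.Images, "Cats_and_Dogs/Dataset/images_train/"), and an empty list means every image was found.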

Let’s have a look at the new dataset.

training_data.head()

Output:

The label 1 refers to the image of a cat and label 2 refers to the image of a dog.

Creating training and validation sets

from sklearn.model_selection import train_test_split

training_set, validation_set = train_test_split(training_data, random_state = 0, test_size = 0.2)

We will split the training data into two different datasets, a training set to train the model and a validation set to evaluate the performance of the model.
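A plain random split does not guarantee that cats and dogs appear in the same proportion in both sets. The sketch below illustrates the idea of a stratified split using only the standard library; it is not what the code above does, and the label counts are invented. In practice, passing the labels to the stratify argument of train_test_split should achieve the same effect.

```python
import random
from collections import Counter, defaultdict


def stratified_split(labels, test_size=0.2, seed=0):
    """Split indices so each class keeps roughly the same share in both sets."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)

    train_idx, val_idx = [], []
    for indices in by_class.values():
        rng.shuffle(indices)
        n_val = round(len(indices) * test_size)
        val_idx.extend(indices[:n_val])
        train_idx.extend(indices[n_val:])
    return sorted(train_idx), sorted(val_idx)


# Toy labels: 60 cats ('1') and 40 dogs ('2'); these counts are invented.
labels = ['1'] * 60 + ['2'] * 40
train_idx, val_idx = stratified_split(labels)

print(Counter(labels[i] for i in train_idx))
print(Counter(labels[i] for i in val_idx))
```

Both sets keep the 60/40 ratio of the toy labels, so the validation accuracy is measured on the same class mix the model was trained on.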

Preprocessing the Image data

from tensorflow.keras.preprocessing.image import ImageDataGenerator

train_dataGen = ImageDataGenerator(rescale = 1./255,

shear_range = 0.2,

zoom_range = 0.2,

horizontal_flip = True)

validation_datagen = ImageDataGenerator(rescale=1./255)

train_generator = train_dataGen.flow_from_dataframe(dataframe = training_set, directory="Cats_and_Dogs/Dataset/images_train/",x_col="Images", y_col="Animal", class_mode="binary", target_size=(160,160), batch_size=32)

validation_generator = validation_datagen.flow_from_dataframe(dataframe= validation_set, directory="Cats_and_Dogs/Dataset/images_train/", x_col="Images", y_col="Animal", class_mode="binary", target_size=(160,160), batch_size=32)

We will use the ImageDataGenerator from TensorFlow to generate batches of tensor image data. First, we initialize one ImageDataGenerator object for the training_set and one for the validation_set. Both rescale the pixel values by 1/255; the training generator additionally applies shear_range, zoom_range and horizontal_flip, which randomly transform the training images (data augmentation) so that the model sees more varied examples. We will then use these objects to generate tensors from the actual images.
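To make two of these parameters concrete, here is a small NumPy sketch of what rescale and horizontal_flip do to a single image. It is only an illustration on a made-up array, not the generator's actual implementation; in particular, ImageDataGenerator applies the flip randomly, whereas the sketch always flips.

```python
import numpy as np

# A made-up 2 x 3 RGB "image" with 8-bit pixel values (0-255).
image = np.arange(2 * 3 * 3, dtype=np.uint8).reshape(2, 3, 3) * 14

# rescale=1./255 multiplies every pixel by 1/255, mapping values into [0, 1].
rescaled = image.astype(np.float32) * (1.0 / 255)

# horizontal_flip mirrors the image left to right (reverses the width axis).
flipped = rescaled[:, ::-1, :]

print(rescaled.min(), rescaled.max())
# The first column of the flipped image is the last column of the original.
print(np.array_equal(flipped[:, 0, :], rescaled[:, -1, :]))
```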

The flow_from_dataframe method uses the data frame to load the images. The directory parameter specifies the location of the images. x_col and y_col name the columns holding the independent and dependent variables, in this case the image file names and the labels. class_mode="binary" specifies that the data consists of only 2 distinct classes, cats and dogs. target_size=(160,160) resizes each image to 160 x 160. batch_size is the number of images in each generated batch.

On running the generator code above, you will receive an output like this:

Initializing the base model

image_size = 160

IMG_SHAPE = (image_size, image_size, 3)

#Create the base model from the pre-trained model MobileNet V2

base_model = tf.keras.applications.MobileNetV2(input_shape=IMG_SHAPE,

include_top=False,

weights='imagenet')

The base model is the model that is pre-trained. We will create a base model using MobileNet V2.

We initialize the base model with an input size that matches our pre-processed image data, which is 160×160. The base model keeps the weights it learnt on ImageNet. We exclude the top (classification) layers of the pre-trained model by specifying include_top=False, which is ideal for feature extraction.

The above code block downloads the pre-trained model and initializes it with the given parameters. We should also prevent the weights of the convolutional base from being updated during training, and this has to be done before the model is compiled. To do this, we set the trainable attribute to False.

base_model.trainable = False

Adding Extra layers to Pre-trained Model

Since the pre-trained model is trained to classify into 1000 classes, we will manually set the output layers to adapt to our problem. Here we need a single node output layer as we have a binary classification problem. We will add the final layers to the base_model network as follows:

model = tf.keras.Sequential([

base_model,

keras.layers.GlobalAveragePooling2D(),

keras.layers.Dense(1, activation='sigmoid')])
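To see what the two added layers compute, the following NumPy sketch reproduces them on random numbers. It assumes the base model outputs a 5 x 5 x 1280 feature map for a 160 x 160 input (a reduction by a factor of 32); the random features and the weight scale are stand-ins chosen for illustration, not values from the real network.

```python
import numpy as np

rng = np.random.default_rng(0)

# Stand-in for the base model output: MobileNet V2 without its top layers
# reduces a 160 x 160 input by a factor of 32, giving 5 x 5 x 1280 features.
features = rng.normal(size=(4, 5, 5, 1280)).astype(np.float32)

# GlobalAveragePooling2D: average over the two spatial axes.
pooled = features.mean(axis=(1, 2))          # shape (4, 1280)

# Dense(1, activation='sigmoid'): one weight per feature plus a bias.
weights = rng.normal(scale=0.01, size=(1280, 1)).astype(np.float32)
bias = np.zeros(1, dtype=np.float32)
logits = pooled @ weights + bias             # shape (4, 1)
probabilities = 1.0 / (1.0 + np.exp(-logits))

print(pooled.shape, probabilities.shape)
print(weights.size + bias.size)  # trainable parameters in the new head
```

With the base model frozen, these 1281 values (1280 weights and one bias) are the only parameters that training updates.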

Compiling the model

It’s time to compile our new model by initializing the right optimizer, loss function and metrics.

#learning_rate replaces the deprecated lr argument

model.compile(optimizer=tf.keras.optimizers.RMSprop(learning_rate=0.0001),

loss='binary_crossentropy',

metrics=['accuracy'])
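The binary_crossentropy loss compares the predicted probability with the 0/1 label of each image. A minimal NumPy sketch of the formula is given below; the function is our own re-implementation for illustration and the inputs are made up, so small numerical differences from the Keras version are possible.

```python
import numpy as np


def binary_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy for labels in {0, 1} and probabilities in (0, 1)."""
    y_true = np.asarray(y_true, dtype=np.float64)
    # Clip to avoid log(0) for predictions of exactly 0 or 1.
    y_pred = np.clip(np.asarray(y_pred, dtype=np.float64), eps, 1.0 - eps)
    losses = -(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred))
    return float(losses.mean())


# Confident and correct predictions give a small loss ...
print(binary_crossentropy([0, 1], [0.1, 0.9]))
# ... while a coin-flip prediction of 0.5 gives ln(2), about 0.693.
print(binary_crossentropy([0, 1], [0.5, 0.5]))
```

The second value is a useful reference point when reading the training logs: a loss that stays near 0.693 means the model is doing no better than guessing.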

Training the model

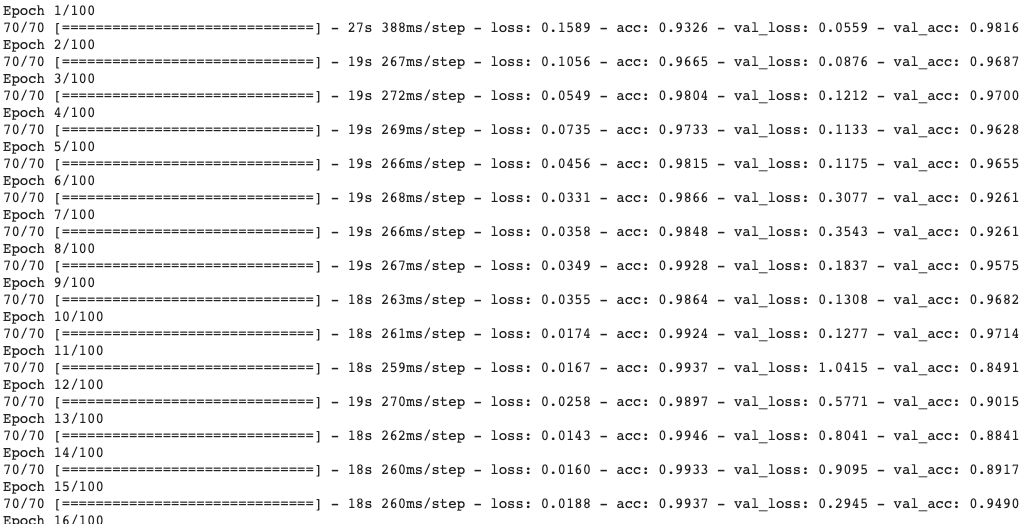

Finally, it’s time to train the model with the image data we have. We will train the model for 100 epochs, with 70 steps per epoch for both training (steps_per_epoch) and validation (validation_steps).

steps_per_epoch is the number of batches processed, and hence the number of weight updates, in each epoch. (A common choice for steps_per_epoch is the number of samples divided by the batch size.)
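As a rough sketch of how the step counts relate to the dataset size, the snippet below computes the number of batches needed to see every image once. The total of 6206 images and the batch size of 32 come from earlier in the article; the split sizes are an assumption based on test_size=0.2 rounding the validation share up.

```python
import math


def steps_for(num_samples, batch_size):
    """Number of batches needed to see every sample once."""
    return math.ceil(num_samples / batch_size)


total_images = 6206   # size of the training set stated earlier
batch_size = 32       # batch_size passed to flow_from_dataframe

# Assumed split sizes: a test_size of 0.2 rounds the validation share up,
# leaving the remaining images for training.
num_validation = math.ceil(total_images * 0.2)
num_training = total_images - num_validation

print(num_training, steps_for(num_training, batch_size))      # 4964 156
print(num_validation, steps_for(num_validation, batch_size))  # 1242 39
```

Under these assumptions, 70 training steps cover a little under half of the training images in each epoch.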

We will fit the training and validation data generators and specified parameters to the model. On executing the below code block, the model will actually start to train.

epochs = 100

steps_per_epoch = 70

validation_steps = 70

#model.fit accepts generators directly in TensorFlow 2.1+; fit_generator is deprecated

history = model.fit(train_generator,

steps_per_epoch = steps_per_epoch,

epochs=epochs,

workers=4,

validation_data=validation_generator,

validation_steps=validation_steps)

Output:

Visualizing the training and Validation performance

Execute the below code blocks to plot graphs for varying training and validation accuracies and losses.

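#The metric key is 'accuracy' in TensorFlow 2.x and 'acc' in older versions

acc = history.history.get('accuracy', history.history.get('acc'))

val_acc = history.history.get('val_accuracy', history.history.get('val_acc'))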

loss = history.history['loss']

val_loss = history.history['val_loss']

plt.figure(figsize=(8, 8))

plt.subplot(2, 1, 1)

plt.plot(acc, label='Training Accuracy')

plt.plot(val_acc, label='Validation Accuracy')

plt.legend(loc='lower right')

plt.ylabel('Accuracy')

plt.ylim([min(plt.ylim()),1])

plt.title('Training and Validation Accuracy')

plt.subplot(2, 1, 2)

plt.plot(loss, label='Training Loss')

plt.plot(val_loss, label='Validation Loss')

plt.legend(loc='upper right')

plt.ylabel('Cross Entropy')

plt.ylim([0,max(plt.ylim())])

plt.title('Training and Validation Loss')

plt.show()

Output:

We can see large fluctuations in the loss and accuracy on the validation set. Although the model seems to improve gradually, it may not behave the same way when used to classify unseen images, because of overfitting.

The above model was able to predict with an accuracy of 56% on the validation set. You can try to tune the model to give a better result and to reduce overfitting.
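One simple way to limit overfitting is to stop training once the validation loss stops improving. The sketch below shows the patience logic in plain Python on invented loss values; the function name is hypothetical and this is not part of the code above. Keras offers a similar mechanism through the tf.keras.callbacks.EarlyStopping callback, which can be passed to the training call.

```python
def best_epoch_with_patience(val_losses, patience=3):
    """Return (stop_epoch, best_epoch), both 0-based.

    Training "stops" once the validation loss has failed to improve on its
    best value for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Invented validation losses that improve and then drift upwards.
toy_val_losses = [0.70, 0.66, 0.64, 0.65, 0.67, 0.69, 0.72]
stop_epoch, best_epoch = best_epoch_with_patience(toy_val_losses, patience=3)
print(stop_epoch, best_epoch)  # 5 2
```

In this toy run the best validation loss occurs at epoch 2 and training would stop at epoch 5, so the weights from epoch 2 are the ones worth keeping.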

You may look into the following article to see how to make predictions for a test_set.

Hands-On Guide To Multi-Label Image Classification With Tensorflow & Keras

Happy Coding !!