Almost every website on the internet displays ads. Companies that want to advertise their products choose these sites as an advertising medium. When a company has several versions of an advertisement, the challenge is to find which version converts best, that is, which one gets the most clicks.

In this article, we discuss reinforcement learning in Python for Click-Through-Rate (CTR) prediction of web advertisements. We walk through a practical implementation of Upper Confidence Bound (UCB), a reinforcement learning method, applied to this task. With this implementation, one can find which version of an advertisement, out of a set of available versions, gets the most clicks from visitors to the website.

The Upper Confidence Bound (UCB) Method

The Upper Confidence Bound (UCB) algorithm belongs to the family of reinforcement learning algorithms. It is used for action selection, where it uses the uncertainty in the action-value estimates to balance exploration and exploitation. It is a popular method for solving the multi-armed bandit problem. For more details on the UCB algorithm, please read the article “Reinforcement Learning: The Concept Behind UCB Explained With Code”.

The Dataset

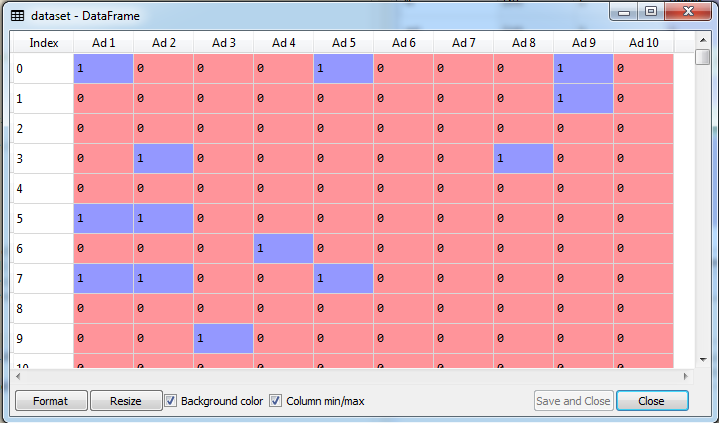

In this experiment, we use the Ads CTR Optimization dataset, which is publicly available on Kaggle. It contains the responses of 10,000 visitors to 10 advertisements displayed on a web platform. These 10 advertisements are 10 versions of an ad for the same product. The responses are represented as rewards given to the 10 ads by the visitors: if a visitor clicked on an ad, the reward is 1, and if the visitor ignored it, the reward is 0. Based on these rewards, the task is to identify which of the 10 ads has the highest CTR, so that the ad with the highest conversion rate can be placed on the web platform.
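As a rough illustration of the task, the CTR of each ad in such a 0/1 reward table is simply the mean of its column. The sketch below uses a tiny made-up table rather than the Kaggle file; the column names `Ad 1` to `Ad 3` and the values are illustrative assumptions.

```python
import pandas as pd

# Hypothetical toy data: 5 visitors x 3 ads, 1 = clicked, 0 = ignored.
toy = pd.DataFrame({
    "Ad 1": [1, 0, 0, 0, 0],
    "Ad 2": [0, 1, 1, 0, 1],
    "Ad 3": [0, 0, 1, 0, 0],
})

# The mean of a 0/1 column is the fraction of visitors who clicked that ad.
ctr_per_ad = toy.mean()
best_ad = ctr_per_ad.idxmax()

print(ctr_per_ad)
print("Best ad:", best_ad)
```

This shortcut only works because the dataset records every visitor's response to all ads. On a live website only the response to the displayed ad is observed, which is why a bandit method such as UCB is needed.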

Implementation of Upper Confidence Bound (UCB)

In this reinforcement learning implementation in Python, we compare two approaches: random selection of ads and selection using the UCB method, so that we can judge how effective UCB is. First, we import the required libraries and then the dataset that we downloaded from Kaggle.

# Importing the libraries
import numpy as np
import matplotlib.pyplot as plt
import pandas as pd

# Importing the dataset
dataset = pd.read_csv('Ads_CTR_Optimisation.csv')

As the picture above shows, the dataset contains a value of 1 or 0 for each of the 10 ads displayed on the website. Each row represents one visitor to the website. If the visitor clicked on an ad, the value for that ad is 1; if the visitor ignored it, the value is 0. There are 10,000 such records in the dataset.

Using Random Selection Method

To have a baseline for comparison, we first look at how random selection of ad versions works. From the set of 10 ad versions, one ad is selected at random and displayed to each visitor.

# Implementing Random Selection
import random

N = 10000  # number of visitors (rows in the dataset)
d = 10     # number of ad versions (columns in the dataset)
ads_selected = []
total_reward = 0

for n in range(0, N):
    # Pick one of the d ads uniformly at random for visitor n
    ad = random.randrange(d)
    ads_selected.append(ad)
    reward = dataset.values[n, ad]
    total_reward = total_reward + reward

# Visualising the results
plt.hist(ads_selected)
plt.title('Histogram of ads selections')
plt.xlabel('Ads')
plt.ylabel('Number of times each ad was selected')
plt.show()

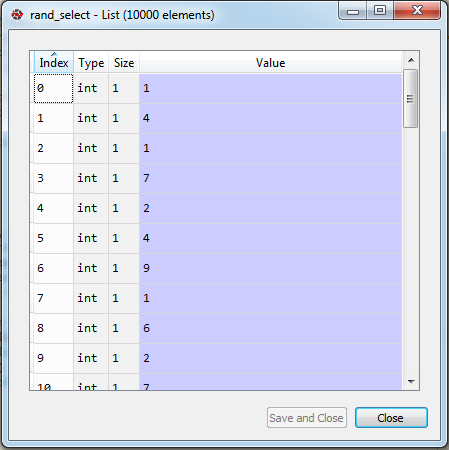

When the above code snippet is executed, the algorithm selects one ad at random and displays it to the visitor. If the visitor clicks on the ad, a reward of 1 is added, and if the visitor ignores it, a reward of 0 is added. The screenshot shows this random selection of ads.

As the screenshot shows, for the first user the second ad was displayed (keep in mind that indices in Python start at 0) and a reward of 0 was added. For the second user, the 5th ad was selected and a reward of 1 was added. This random selection and accumulation of rewards continues in the same way up to the last, 10,000th visitor. In this way, the total reward is calculated iteratively.

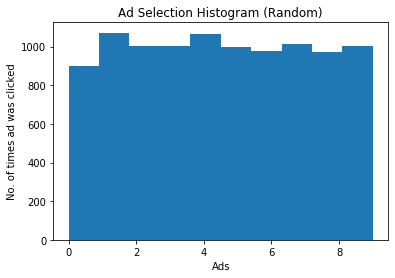

As we can see, the total reward using the random selection method is 1196. The histogram shows the number of times each ad was selected.

Please note that the outcome of random ad selection, including the histogram and the total reward, will vary with each run of the program.
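If a repeatable baseline is needed, one option is to seed the random number generator. The following is a minimal sketch on made-up data, not part of the original experiment; the function name `random_selection` and the toy reward table are assumptions for illustration.

```python
import random

def random_selection(rewards, seed=None):
    """Pick one ad uniformly at random per visitor; return (choices, total reward)."""
    rng = random.Random(seed)  # private generator, so the global state is untouched
    n_ads = len(rewards[0])
    choices, total = [], 0
    for row in rewards:
        ad = rng.randrange(n_ads)
        choices.append(ad)
        total += row[ad]
    return choices, total

# Hypothetical toy rewards: 4 visitors x 3 ads.
toy_rewards = [[1, 0, 0], [0, 1, 0], [0, 1, 1], [0, 0, 0]]

run_a = random_selection(toy_rewards, seed=42)
run_b = random_selection(toy_rewards, seed=42)
print(run_a == run_b)  # identical seeds give identical runs
```

With a fixed seed, the histogram and total reward stay the same between runs, which makes comparisons against UCB easier to reproduce.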

Using the UCB Method

In the above section, we saw the random selection of ads and the rewards received. Now we look at the implementation of Upper Confidence Bound (UCB) for the same task. Please refer to the formulas in the article referenced above for a better understanding.
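For convenience, the selection rule that the code in this section implements can be written out as follows. The symbols are our own shorthand read off the code, not notation taken from the referenced article: n is the zero-based round index, N_i(n) corresponds to `numbers_of_selections[i]` and R_i(n) to `sums_of_rewards[i]`, both as they stand before round n.

```latex
\bar{r}_i(n) = \frac{R_i(n)}{N_i(n)}, \qquad
\Delta_i(n) = \sqrt{\frac{3}{2}\,\frac{\ln(n+1)}{N_i(n)}}, \qquad
\mathrm{ad}_n = \arg\max_{i \in \{0,\dots,d-1\}} \bigl[\, \bar{r}_i(n) + \Delta_i(n) \,\bigr]
```

An ad that has not been selected yet (N_i(n) = 0) is given an infinitely large upper bound in the code, so every ad is tried once before the averages are compared.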

# Upper Confidence Bound
import math

N = 10000  # number of visitors (rounds)
d = 10     # number of ad versions
ads_selected = []
numbers_of_selections = [0] * d  # how often each ad has been shown so far
sums_of_rewards = [0] * d        # clicks collected by each ad so far
total_reward = 0

for n in range(0, N):
    ad = 0
    max_upper_bound = 0
    for i in range(0, d):
        if numbers_of_selections[i] > 0:
            average_reward = sums_of_rewards[i] / numbers_of_selections[i]
            delta_i = math.sqrt(3/2 * math.log(n + 1) / numbers_of_selections[i])
            upper_bound = average_reward + delta_i
        else:
            # An ad that has never been shown gets an infinite bound,
            # so each ad is tried at least once
            upper_bound = float('inf')
        if upper_bound > max_upper_bound:
            max_upper_bound = upper_bound
            ad = i
    # Show the ad with the highest upper bound and record the response
    ads_selected.append(ad)
    numbers_of_selections[ad] = numbers_of_selections[ad] + 1
    reward = dataset.values[n, ad]
    sums_of_rewards[ad] = sums_of_rewards[ad] + reward
    total_reward = total_reward + reward

# Visualising the results
plt.hist(ads_selected)
plt.title('Histogram of ads selections')
plt.xlabel('Ads')
plt.ylabel('Number of times each ad was selected')
plt.show()

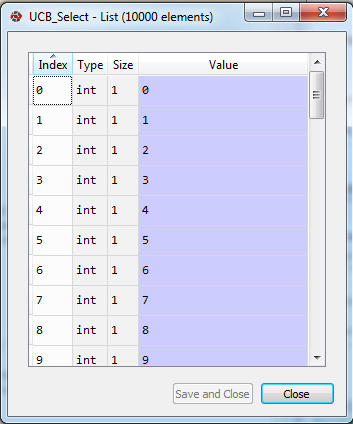

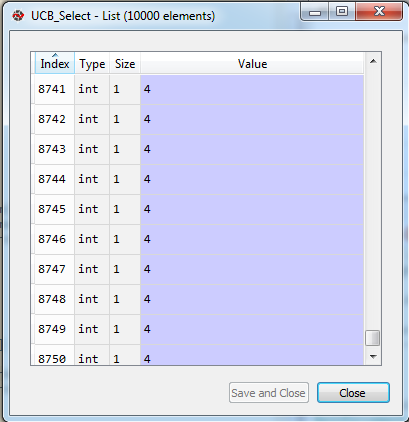

When the above code snippet is executed, we first see which ad is selected for display to each visitor.

For the first visitor, the first ad is displayed and a reward of 1 is added. The process then continues as described in the previous section. For the last visitors, you can see that the 4th ad is displayed most of the time. This is because the 4th ad is rewarded positively by visitors more often than the others, so the algorithm has learned that trend and displays the 4th ad most of the time.
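One way to check this without reading a screenshot is to count which ad dominates the last rounds of the selection log. This is a small sketch under our own assumptions: the helper name `most_shown` and the toy log are hypothetical, and in the experiment the input would be the `ads_selected` list.

```python
from collections import Counter

def most_shown(ads_selected, last=1000):
    """Return (ad index, share) of the most-shown ad over the last `last` rounds."""
    tail = ads_selected[-last:]
    ad, count = Counter(tail).most_common(1)[0]
    return ad, count / len(tail)

# Hypothetical selection log: early exploration, then mostly ad index 2.
toy_log = [0, 1, 2, 0, 1, 2, 2, 1, 2, 2]
print(most_shown(toy_log, last=5))
```

The returned index is zero-based, like the indices stored in `ads_selected`.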

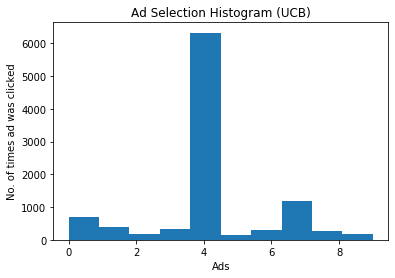

The same trend can be seen in the histogram, where the 4th ad was selected most often.

Finally, we look at the total reward obtained with the UCB algorithm.

The total reward received with the UCB algorithm is nearly double the total reward received with random selection. We can therefore conclude that the Upper Confidence Bound (UCB) algorithm helps in finding the best ad to display to visitors from a set of ad versions, so that the maximum number of clicks and the highest conversion rate can be obtained. From the number of clicks on each ad and the number of impressions, one can easily compute the Click-Through Rate (CTR) of these ads. The CTR is obtained as (Total No. of Clicks / Total Impressions) x 100.

We hope this implementation of reinforcement learning in Python helps you understand how it can be applied in predictive analytics.
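The CTR formula can be applied per ad with a few lines of Python. This is a sketch with made-up counts; the function name `ctr_percent` is hypothetical, and in the UCB code the inputs would be `sums_of_rewards` (clicks) and `numbers_of_selections` (impressions).

```python
def ctr_percent(clicks, impressions):
    """CTR in percent for each ad; ads that were never shown get None."""
    return [
        100.0 * c / n if n > 0 else None
        for c, n in zip(clicks, impressions)
    ]

# Hypothetical counts for 3 ads.
toy_clicks = [12, 45, 3]
toy_impressions = [100, 150, 0]
print(ctr_percent(toy_clicks, toy_impressions))
```

Returning `None` for ads with zero impressions avoids a division by zero and makes it clear that no CTR can be estimated for them.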