Over the last few years, there have been significant advances in research on Chinese natural language understanding (NLU) and natural language processing (NLP). To build robust Chinese NLP models, researchers have created a variety of high-quality corpora of speech, text, and other data.

The General Language Understanding Evaluation (GLUE) benchmark, introduced in 2018, is a collection of resources for training, evaluating, and analyzing natural language understanding systems. It consists of a benchmark of single-sentence and sentence-pair language understanding tasks, a diagnostic dataset, a public leaderboard for tracking performance, and a dashboard for visualising model performance on the diagnostic set.

To bring GLUE-style evaluation to Chinese natural language processing (CNLP), researchers at Tsinghua University, Zhejiang University, and Peking University developed a benchmark known as ChineseGLUE.

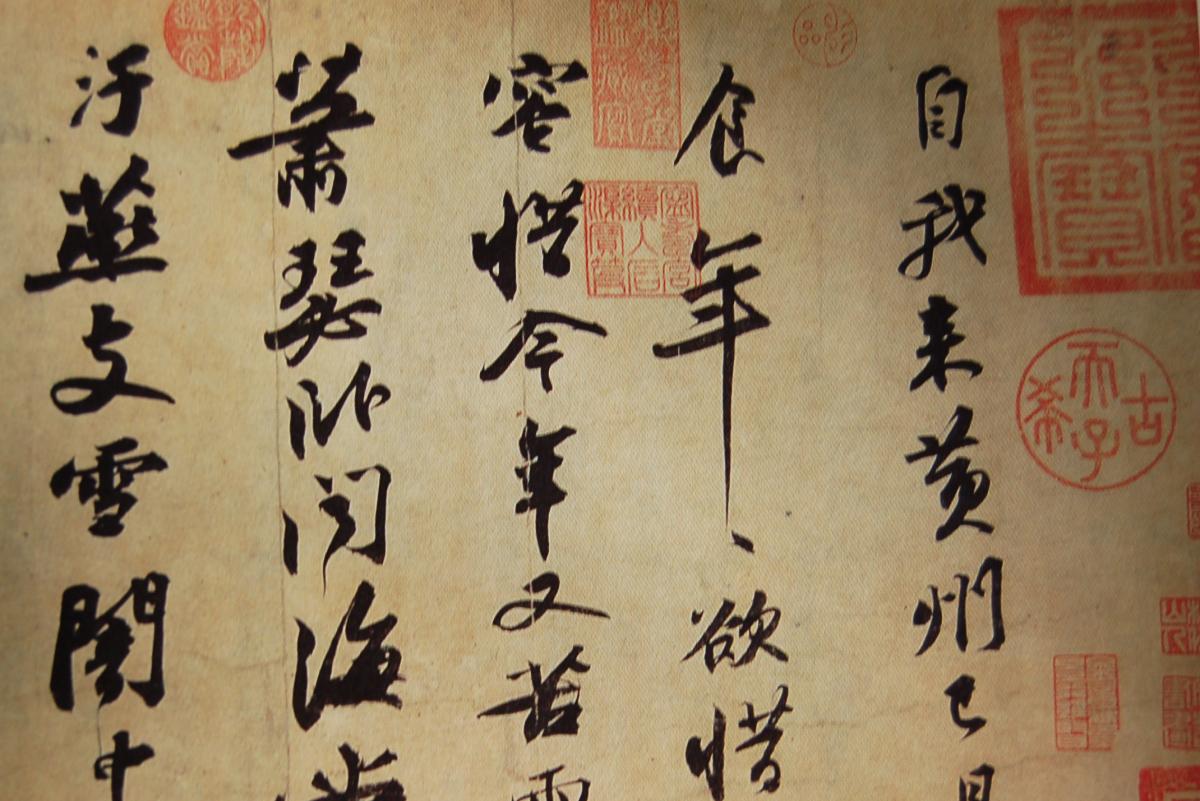

ChineseGLUE currently includes datasets for natural language inference, sentiment analysis of internet news, short-text news classification, reading comprehension in Traditional Chinese, and more. These include:

- LCQMC: semantic similarity of colloquially phrased sentences

- XNLI: natural language inference

- TNEWS: short-text classification of Chinese news from Toutiao (Today's Headlines)

- DRCD (Delta Reading Comprehension Dataset): reading comprehension in Traditional Chinese

- INEWS: sentiment analysis of internet news
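These tasks take either one sentence (for example, classifying a TNEWS headline) or a pair of sentences (for example, judging whether two LCQMC sentences mean the same thing). The sketch below shows how such examples are commonly packed into a BERT-style input with `[CLS]`/`[SEP]` markers and segment ids. It is an illustration under stated assumptions, not ChineseGLUE's own preprocessing: the `Example` class and `encode` function are hypothetical, and splitting Chinese text into single characters is a simplification of a real pre-trained tokenizer (no vocabulary lookup, WordPiece, or truncation).

```python
from dataclasses import dataclass
from typing import List, Optional, Tuple


@dataclass
class Example:
    """Hypothetical record for one labeled example.

    text_b is None for single-sentence tasks (e.g. news classification)
    and set for sentence-pair tasks (e.g. semantic similarity, NLI).
    """
    text_a: str
    label: str
    text_b: Optional[str] = None


def encode(example: Example) -> Tuple[List[str], List[int]]:
    """Build BERT-style tokens and segment ids.

    Simplification (assumption): each Chinese character becomes one token;
    a real tokenizer would also map tokens to vocabulary ids and truncate.
    """
    # First segment: [CLS] sentence A [SEP], all with segment id 0.
    tokens = ["[CLS]"] + list(example.text_a) + ["[SEP]"]
    segment_ids = [0] * len(tokens)
    if example.text_b is not None:
        # Second segment: sentence B [SEP], all with segment id 1.
        second = list(example.text_b) + ["[SEP]"]
        tokens += second
        segment_ids += [1] * len(second)
    return tokens, segment_ids


if __name__ == "__main__":
    # Sentence-pair example with a made-up similarity label.
    print(encode(Example("你好", "1", "您好")))
    # Single-sentence example with a made-up class label.
    print(encode(Example("新闻", "tech")))
```

Keeping both task types behind one `Example` shape lets a single baseline handle single-sentence and sentence-pair tasks; only the presence of `text_b` changes the encoded input.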

This Language Understanding Evaluation benchmark for Chinese (ChineseGLUE) has several features that bridge the gap between the popular GLUE benchmark and the Chinese language:

- Benchmark of Chinese Tasks Covering Language Tasks of Varying Difficulty: The benchmark includes several single-sentence and sentence-pair language understanding tasks. The datasets currently used are publicly available, and the researchers plan to add datasets with private test sets.

- Open Leaderboard: ChineseGLUE provides a public leaderboard for tracking performance. Participants can submit prediction files for the tasks, and each task is then evaluated and scored.

- Baseline Models, Including Starter Code and Pre-Trained Models: Baseline models for the ChineseGLUE tasks will be available in TensorFlow, PyTorch, Keras, and PaddlePaddle.

- Corpus for Language Modeling, Pre-Training, or Generative Tasks: The researchers aim to provide a large raw corpus for pre-training and language modeling research, and they have stated that it will contain at least 30 GB of raw text by the end of 2020. The corpus can be used for general-purpose pre-training, domain adaptation, or text generation.
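To make the leaderboard workflow concrete, here is a minimal scoring sketch. The actual ChineseGLUE submission format and metrics are not described here, so everything below is an assumption: prediction and answer files are taken to be JSON lines of the form `{"id": ..., "label": ...}`, each task is scored by accuracy, and the overall score is the unweighted mean of task scores, which mirrors how GLUE averages its per-task scores. The function names are hypothetical.

```python
import json
from typing import Dict, Iterable


def load_labels(lines: Iterable[str]) -> Dict[str, str]:
    """Parse hypothetical JSON lines {"id": ..., "label": ...} into an id -> label map."""
    labels: Dict[str, str] = {}
    for line in lines:
        line = line.strip()
        if not line:
            continue  # skip blank lines
        record = json.loads(line)
        labels[str(record["id"])] = str(record["label"])
    return labels


def accuracy(predictions: Dict[str, str], gold: Dict[str, str]) -> float:
    """Fraction of gold examples predicted correctly; missing predictions count as wrong."""
    if not gold:
        raise ValueError("gold set is empty")
    correct = sum(1 for ex_id, label in gold.items() if predictions.get(ex_id) == label)
    return correct / len(gold)


def overall_score(task_scores: Dict[str, float]) -> float:
    """Unweighted mean of per-task scores (assumed aggregation, GLUE-style)."""
    if not task_scores:
        raise ValueError("no task scores")
    return sum(task_scores.values()) / len(task_scores)


if __name__ == "__main__":
    gold = load_labels(['{"id": 0, "label": "1"}', '{"id": 1, "label": "0"}'])
    preds = load_labels(['{"id": 0, "label": "1"}', '{"id": 1, "label": "1"}'])
    print(accuracy(preds, gold))
    print(overall_score({"lcqmc": 0.5, "tnews": 1.0}))
```

Counting missing predictions as wrong keeps a partial submission from scoring higher than a complete one; a real leaderboard might instead reject incomplete files.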

Why ChineseGLUE

The researchers put forward several reasons why such a benchmark is necessary:

- Chinese is a widely used language with many specific applications of its own.

- Compared with English, relatively few Chinese datasets are publicly available.

- Pre-trained models have greatly advanced natural language understanding. At this stage, Chinese needs a benchmark: a set of datasets that the public can widely use and evaluate against, that reflects the characteristics of Chinese-language tasks, and that keeps pace with current technology worldwide.

Wrapping Up

GLUE is a popular benchmark for evaluating natural language understanding, but it is English-only and so cannot be used to evaluate Chinese NLP models. The researchers therefore developed ChineseGLUE to evaluate natural language understanding in Chinese. It is organized along similar lines to GLUE, comprising datasets, baselines, pre-trained models, a corpus, and a leaderboard.

The goals of the benchmark are to better serve Chinese language understanding, its tasks, and industry; to supplement common language-model evaluation; and to promote the development of Chinese language models by improving the infrastructure for Chinese language understanding.