Despite the tremendous success of artificial intelligence in image recognition, speech recognition and numerous other well-defined problems, AI has its own set of limitations. Many of these were pointed out by Gary Marcus in his now well-cited research paper, Deep Learning: A Critical Appraisal. In view of AI’s limitations and the growing threat to the job landscape, a group of eminent AI researchers met at Oxford in February 2017. Their goal was to survey the AI landscape, consider the malicious use of superhuman AI and contemplate how to prevent it. Following the two-day conference, the 26-member team published a 100-page report that delved into the potential malicious uses of AI, its harmful applications, new threats and the high-level defences that should be put in place.

Here Are The Most Alarming Threats Posed By The Misuse Of AI:

1) DeepFake And Social Manipulation: One of the biggest indicators of the misuse of AI was the now-banned DeepFake app, which utilised machine learning tools for face-swapping. The research paper that kick-started the DeepFake trend described how neural network algorithms could be used to make fake videos. Basically, neural networks “learn” to perform tasks by considering examples, generally without being programmed with any task-specific rules. Motherboard’s Samantha Cole first broke the story about DeepFake when she discovered a Reddit user posting videos made with a machine learning algorithm trained on hundreds of images of an individual’s face. Some of the early victims of the fake videos were female celebrities such as Taylor Swift and Gal Gadot.
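To make the idea of “learning from examples” concrete, here is a minimal sketch in Python. It is a toy, not the method behind DeepFake: a single artificial neuron (a perceptron) is shown four labelled examples of the logical AND function and adjusts its weights until it reproduces them. The function names, the choice of AND as the task, and the training settings are all assumptions made for illustration; real face-swapping systems use far larger networks and far more data.

```python
# Toy illustration only: one artificial neuron (a perceptron) learns the
# logical AND function from labelled examples. Nothing in the code states
# the AND rule; the behaviour comes from the examples alone.
# All names and settings here are hypothetical, chosen for illustration.


def predict(weights, bias, inputs):
    """Return 1 if the weighted sum of the inputs plus the bias is positive, else 0."""
    activation = sum(w * x for w, x in zip(weights, inputs)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(examples, epochs=20, learning_rate=1.0):
    """Learn weights and a bias from (inputs, label) pairs using the perceptron rule."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):
        for inputs, label in examples:
            # The error is 0 when the guess is right, +1 or -1 when it is wrong.
            error = label - predict(weights, bias, inputs)
            # Nudge each weight and the bias in the direction that reduces the error.
            weights = [w + learning_rate * error * x for w, x in zip(weights, inputs)]
            bias += learning_rate * error
    return weights, bias


# Labelled examples: ((input_a, input_b), expected_output)
AND_EXAMPLES = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]

weights, bias = train_perceptron(AND_EXAMPLES)
for inputs, label in AND_EXAMPLES:
    print(inputs, "->", predict(weights, bias, inputs), "(expected", label, ")")
```

The same principle, scaled up enormously, is what lets a network trained on hundreds of images of a face learn to reproduce that face in new footage.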

Threat: The growing use of AI led to DeepFake, a video manipulation tool that produced realistic-looking pornographic videos, which were subsequently banned. A major risk posed by the technology is that images culled from social media accounts can end up as the subject of fake videos. In an era of fake news, fake apps are also a growing threat to user privacy and a major threat to cybersecurity. Fake news is also a common tool for social manipulation.

2) Autonomous Weapons: AI-enabled autonomous weapons have been a topic of discussion for a long time. In fact, as reported in AIM, more than 2,400 individuals and 150 companies from 90 different countries vowed to play no part in the construction, trade or use of autonomous weapons in a pledge at the 2018 International Joint Conference on Artificial Intelligence in Sweden.

Threat: The use of lethal autonomous weapons (LAWs) can have a serious impact, especially in the hands of authoritarian states. They also pose controllability risks in cases where there is no human-in-the-loop requirement.

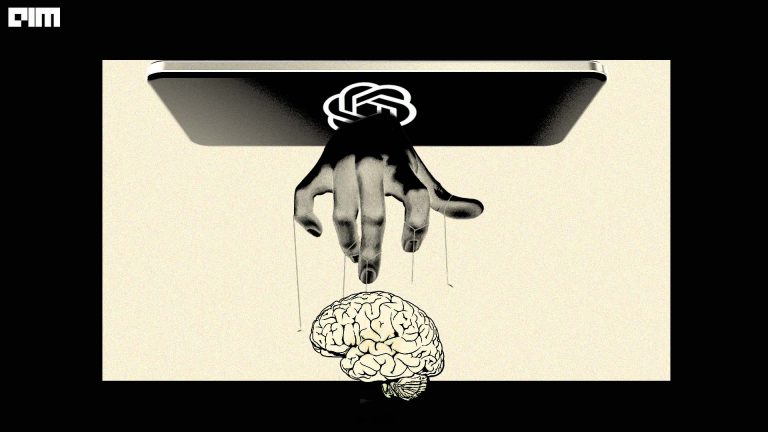

3) AI For Mass Surveillance: AI is also increasingly used for surveillance and for analysing collected data to create targeted propaganda. Military AI became a cause for concern recently over Project Maven, which saw Google employees threatening to quit over military drone technology used by the Pentagon. Similarly, employees at Microsoft posted a letter to the CEO over the use of the company’s technology by law enforcement agencies. One of the biggest voices in this debate has been Tesla’s Elon Musk, who has campaigned against the use of killer robots.

Threat: As per the Malicious AI report, the use of AI for surveillance purposes is broadening and new threats are emerging in the form of social manipulation and privacy invasion. For example, state surveillance is extensive in authoritarian states such as China, where state agencies are allowed to analyse mass data and use it against citizens to thwart dissent.

4) Swarm Attacks: As per the technical definition, a swarm is a group of drones that can respond to conditions autonomously. In this case, however, a swarm attack can refer to a network of autonomous robotic systems that is leveraged for ubiquitous surveillance of large areas and can execute commands and synchronised attacks.

Threat: Swarm attacks can be initiated by an agency or a single entity to target large areas with weaponised autonomous drones. Terrorists can use autonomous drones to launch co-ordinated attacks, improve target selection and evade security systems.

Conclusion

AI researchers have repeatedly emphasised using the tech for good and are striving to promote a culture of responsibility. In fact, many tech organisations have taken a definitive stance on the political and security threats posed by AI and have laid down frameworks to build a security landscape for an AI-enabled future. In light of the dual-use nature of AI and ML technologies, eminent institutes such as MIT, Stanford and Oxford have published technical risk-assessment studies on the subject. Non-profit research organisations such as OpenAI and the Partnership on AI are also pushing ahead with research for social good.