Apple’s M1 chip made waves in the tech world when it was released in 2020, mainly because it was a system-on-chip (SoC) designed in-house by Apple. The chip’s Neural Engine was of particular note, bringing the dedicated AI inference hardware that Apple first introduced in its iPhone chips to the Mac. The company has also built up a strong developer infrastructure around the chip over the past two years, and has now scripted a success story that other companies wish to replicate.

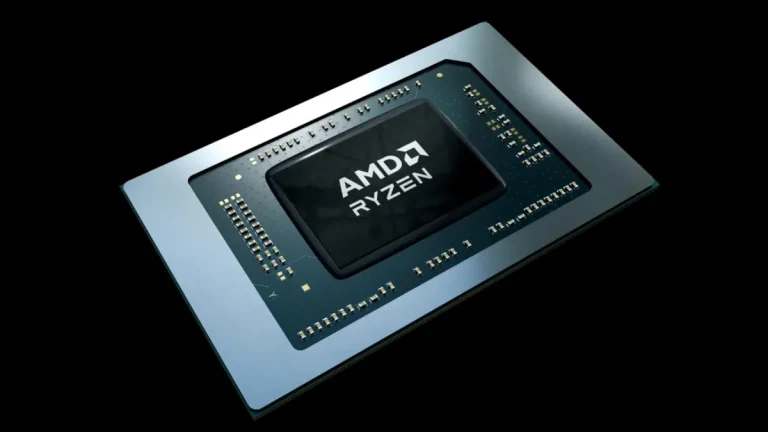

At CES 2023, AMD announced a new series of laptop CPUs in direct competition with Apple’s M-series chips. The Ryzen 7000 series for laptops is part of AMD’s push to challenge Intel’s, and now Apple’s, dominance in the laptop CPU market. However, one set of chips in the new lineup stands out: the Ryzen 7040 series, which comprises the Ryzen 5 7640HS, the Ryzen 7 7840HS, and the Ryzen 9 7940HS.

This new set of laptop CPUs has a dedicated on-chip AI engine, similar to Apple’s Neural Engine, and these are the first x86 processors to offer this capability. This killer feature will allow the 7040 series to compete directly with Apple’s M2 lineup, as AMD claims the chips offer similar battery life and can enable on-device AI inference.

This might be the beginning of a new trend in consumer hardware, in which manufacturers include on-device inference capabilities to better handle new AI workloads. After seeing the precedent set by Apple’s Neural Engine, Microsoft has also seen the light when it comes to integrating AI functions into its operating system. Panos Panay, EVP and Chief Product Officer at Microsoft, said this at AMD’s CES presentation:

“AI is going to reinvent how you do everything on Windows, quite literally. These large generative models, think language models, codegen models, image models, these models are so powerful, so delightful, so useful, personal, but they’re also very compute intensive. So, we haven’t been able to do this before.”

Let’s delve deeper into the industry trend of bringing AI to the edge using dedicated AI hardware on consumer devices.

AMD’s Big Bet

To understand why AMD’s AI chip is such a big deal, we must first understand the challenges associated with building a dedicated AI inference engine into a CPU. While Apple took the relatively easier path of designing its own SoC around the ARM architecture, which it licenses from Arm, AMD has built its AI engine into an x86 processor, marking the first time a chipmaker has done this.

ARM and x86 refer to the instruction sets and architectures used in modern CPUs. Most mobile chips and the Apple M1 chip use the ARM architecture, while AMD and Intel CPUs use x86, today in its 64-bit form, known as x86-64 or x64. GPUs, such as NVIDIA’s, use their own proprietary architectures that are separate from these CPU instruction sets.

AMD’s in-house AI engine is based on what the company calls its ‘XDNA adaptive AI architecture’, previously seen in its Alveo AI accelerator cards. The chipmaker claims that the new chips outperform the Apple M2 by up to 20% while being up to 50% more energy efficient. The engine draws on field-programmable gate array (FPGA) technology, a kind of chip whose logic can be reconfigured at the hardware level even after manufacturing. Reportedly, the new chips will be used for use cases such as noise reduction in video conferencing, predictive UI, and device security.

This AI push comes after AMD completed its acquisition of Xilinx, valued at about $49 billion, early last year. Xilinx is a chipmaker that offers FPGAs, adaptive systems-on-chip (SoCs), and AI inference engines. Looking at the 7040 series, it is clear that AMD has integrated Xilinx’s decades of hardware know-how into its newest chips, with a lot more to come down the line.

AMD showed off new AI-powered features integrated into Microsoft Teams, such as auto-framing and eye gaze correction. Microsoft also showed off the noise reduction feature in Teams in a separate keynote. While all of these AI-based features already exist in the market in solutions like NVIDIA Broadcast and Krisp AI, AMD says the new CPUs can run them without placing any load on the CPU or GPU, thanks to their dedicated inference hardware.

AMD also claims that the inference engine will enable smarter power management, resulting in longer overall battery life for the device. Users of Apple’s M-series chips have been enjoying such optimisations, often without knowing it, for the past two years. However, now that other companies are catching up, Apple cannot afford to fall behind in the wave of AI at the edge.

AI at the edge

While edge computing has been a dream for many tech giants for years now, the rise of dedicated inference hardware on every device might actually make it a reality. Apple’s Neural Engine showed that it was possible to do meaningful amounts of AI inference on-device without needing to send data back to the cloud.

Edge AI allows low-powered devices like laptops and phones to process data in real time, providing a seamless user experience powered by AI. Apple’s move sparked a response from competitors like AMD and Intel, which have released dedicated AI accelerators aimed at speeding up training and inference tasks in the cloud and, now, at the edge.

However, AMD’s new set of chips fights Apple on its own terms, providing capable AI inference on low-powered devices. According to AMD’s claims, its offering is also superior to the current generation of Apple’s chips in terms of both performance and efficiency. Panay summed up the significance of these chips, stating,

“We are now... at an inflection point and this is where computing from the cloud to the edge is becoming more and more intelligent, more personal, and it’s all done by harnessing the power of AI.”

The trend towards offering powerful inference capabilities at the edge is very important for the future of AI. With AI becoming more pervasive in everyday tasks such as image processing, UI optimisation, smart recommendations, and more, edge AI can offer a real advantage for users. Moreover, because data can be processed on the device instead of being sent to the cloud, AI at the edge also offers better data privacy and security for the end user, an area that is ripe for change given data protection regulations.