Predictive modelling algorithms sometimes discard useful information in the data, which can affect the overall results. One important kind of information is the relations between instances, and taking these relations into account can make a machine learning model a more powerful tool. The same applies to deep learning models. In this article, we discuss deep relational learning, an area of deep learning that aims to make models and their results more expressive by using the relational information in the data. The major points covered in this article are listed below.

Table of Contents

- What is Deep Relational Learning?

- The Architecture of the Relational Models

  - The Architecture of a Statistical Model

  - The Architecture of Neural Networks

- General Use-Cases of Deep Relational Learning

  - Statistical/Graphical Models

  - Neural Networks

  - Hybrid Approaches

- Applications of Deep Relational Learning

Let us start the discussion by understanding deep relational learning.

What is Deep Relational Learning?

Standard deep learning models operate on features: before the data reaches the main algorithm, it is preprocessed and converted into a binary or numeric form that the model can consume. In the real world, however, much of the information in data is relational, and converting the data into the form the model requires loses this relational information.

Here we require an algorithm that can also work on data in an arbitrary form, so that this important information can be taken into account. We also need algorithms and logic that can represent results in a meaningful way. There are many approaches to making a model expressive. For example, first-order logic (FOL) is known for its expressive power, and it can be considered for modelling because of its ability to increase generality, express knowledge, and express reasoning.

Many models take a logic-based approach to making deep learning models expressive. From the above, we can say that building deep learning models that can handle arbitrarily structured data and represent results in an expressive, meaningful form is the process of deep relational learning, and we can call such models deep relational models.

The Architecture of the Relational Models

Relational learning can follow two types of architecture: one where standard statistical machine learning algorithms are used to build the relational model, and one where neural networks are used. A relational model can be designed either way.

The Architecture of a Statistical Model

Models designed to address the concern described above can learn a deep architecture from the data by stacking several generative models and inducing logical rules in the architecture of the model. An inductive logic programming (ILP) system learns the first layer of the representation. After this layer, the architecture can hold restricted Boltzmann machines (RBMs), where the hypothesized rules from the ILP stage are used to learn the successive layers of the stack. Once the representation is obtained, classification and regression models can be used with the features learned at the highest layer.

The above image shows a working diagram of the relational model, where a logistic regression model is used with the features. Here the logic and models in the system find the relation that Friend(X, Y) and Kind(Y) together imply Happy(X). Some examples of statistical relational models are as follows:

- Markov logic networks

- Multi-entity Bayesian network

- Bayesian logic program

- Relational Markov network
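To make the Friend/Kind/Happy example described before this list more concrete, here is a minimal sketch of how a logical rule can be turned into a feature for a logistic regression model. The facts, the names, and the hand-picked weight and bias are all assumptions made for illustration; a real system would induce the rule and fit the parameters from data.

```python
import math

# Hypothetical facts for illustration; none of these come from a real dataset.
friend = {("ann", "bob"), ("ann", "cat"), ("dan", "eve")}
kind = {"bob", "cat"}
people = ["ann", "bob", "cat", "dan", "eve"]


def kind_friend_count(x):
    """Count the groundings of Friend(x, Y) AND Kind(Y) for a fixed person x."""
    return sum(1 for (a, b) in friend if a == x and b in kind)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# Assumed, hand-picked parameters; a real system would learn them.
WEIGHT, BIAS = 1.5, -1.0


def p_happy(x):
    """Logistic regression over the single rule-derived feature."""
    return sigmoid(WEIGHT * kind_friend_count(x) + BIAS)


for person in people:
    print(person, kind_friend_count(person), round(p_happy(person), 3))
```

The point of the sketch is that the feature is computed from relations between instances, not from the attributes of a single row, which is the information a flat feature table would lose.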

In this article, our major focus is relational learning in the field of deep learning, but it is better to understand relational learning from the basics because the basic processes of deep learning models are similar to those of the statistical models. In the next section, we will discuss the architecture of neural networks.

The Architecture of Neural Networks

When we use neural networks for relational modelling, we can encode models such as SVMs, random forests, decision trees, and logistic regression with a layer-wise structure. Using a similar approach, we can also design models in which parts of a graphical model are implemented with neural networks.

The benefit of neural networks is that we can build a model from several relational linear layers and relational activation layers arranged as a graph. By adding error layers, we also get the benefit of backpropagation for training. Feeding data into such an architecture lets the algorithm calculate the prediction errors, and backpropagating the derivatives updates the parameters of the model.

The above image shows a working diagram of a relational neural network, where the model predicts the gender of users in a movie rating system with a layer-wise architecture.
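The layer-wise idea can be sketched in a few lines of plain Python. This is only an illustrative toy, not the architecture from the diagram: the movie features, the rated relation, and the binary labels are made up, the "relational linear layer" is assumed to be a mean over linked movies followed by a weighted sum, and the "error layer" is assumed to be a cross-entropy loss.

```python
import math

# Hypothetical toy data: movie features and the relation rated(user, movie).
movie_features = {"m1": [1.0, 0.0], "m2": [1.0, 0.0],
                  "m3": [0.0, 1.0], "m4": [0.0, 1.0]}
rated = {"u1": ["m1", "m2"], "u2": ["m1"], "u3": ["m3", "m4"], "u4": ["m4"]}
label = {"u1": 1, "u2": 1, "u3": 0, "u4": 0}  # made-up binary target attribute


def aggregate(user):
    """Relational step: mean feature vector over the movies linked to `user`."""
    feats = [movie_features[m] for m in rated[user]]
    return [sum(col) / len(feats) for col in zip(*feats)]


def forward(user, weights, bias):
    """Linear layer on the aggregated neighbours, then a sigmoid activation."""
    x = aggregate(user)
    z = sum(w * xi for w, xi in zip(weights, x)) + bias
    return x, 1.0 / (1.0 + math.exp(-z))


def train(epochs=200, lr=0.5):
    weights, bias = [0.0, 0.0], 0.0
    for _ in range(epochs):
        for user, y in label.items():
            x, p = forward(user, weights, bias)
            # Error layer: for a cross-entropy loss, d(loss)/dz = p - y.
            grad_z = p - y
            # Backpropagate the derivative to the layer parameters.
            weights = [w - lr * grad_z * xi for w, xi in zip(weights, x)]
            bias -= lr * grad_z
    return weights, bias


weights, bias = train()
predictions = {u: forward(u, weights, bias)[1] for u in label}
print({u: round(p, 2) for u, p in predictions.items()})
```

The user has no features of its own here; everything the model knows about a user comes through the rated relation, which is what makes the layer relational.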

General Use-Cases of Deep Relational Learning

In this section of the article, we will discuss some of the works dealing with graphical, neural, and hybrid representations for relational learning.

- Statistical Graphical Models

Various languages can be used for building graphical models. Some research suggests generative models, while other works use discriminative models, mainly based on Markov logic networks. Both approaches combine logic with probabilistic graphical models: each formula is associated with a weight, and the probability distribution over the relational information is derived from these weights. We use the following languages for graphical models.
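As a small illustration of how weights on formulas give rise to a probability distribution, the sketch below follows the standard Markov logic network form, in which a possible world x has probability proportional to exp(sum of w_i * n_i(x)), where n_i(x) is the number of true groundings of formula i. The domain, the single formula, and the weight of 2.0 are assumptions chosen to keep the example tiny enough to enumerate.

```python
import itertools
import math

# Ground atoms of a tiny hypothetical domain with two constants, a and b.
ATOMS = ["Friend(a,b)", "Kind(b)", "Happy(a)"]
WEIGHT = 2.0  # assumed weight of the single formula below


def n_satisfied(world):
    """Number of true groundings of Friend(a,b) AND Kind(b) => Happy(a)."""
    body = world["Friend(a,b)"] and world["Kind(b)"]
    return 1 if (not body) or world["Happy(a)"] else 0


# Enumerate all 2^3 truth assignments (possible worlds).
worlds = [dict(zip(ATOMS, values))
          for values in itertools.product([False, True], repeat=len(ATOMS))]

# Unnormalised score exp(w * n(x)) per world, and the partition function Z.
scores = [math.exp(WEIGHT * n_satisfied(world)) for world in worlds]
Z = sum(scores)
probs = [s / Z for s in scores]


def prob(query):
    """Probability that every atom in `query` takes the given truth value."""
    return sum(p for world, p in zip(worlds, probs)
               if all(world[atom] == value for atom, value in query.items()))


evidence = {"Friend(a,b)": True, "Kind(b)": True}
p_happy_given_evidence = prob({**evidence, "Happy(a)": True}) / prob(evidence)
print(round(p_happy_given_evidence, 3))
```

A larger weight makes worlds that violate the formula less probable without making them impossible, which is how such models soften hard logical rules. Enumerating all worlds only works for toy domains; practical systems rely on approximate inference.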

- Neural Networks

To address the limitations of graphical models, we can use neural networks to make the model expressive with respect to the relational information in the data. Various prediction tasks can then be performed by training on the learned node representations. In some works, the networks capture graph adjacency information to represent the nodes, so that the distance between two nodes in the learned representation is similar to their graph distance. In other works, the nodes have textual properties, which help in feature representation when learning the representation of graph relations.

Knowledge graphs can be considered multi-relational data. When working with such data, we need to embed both the nodes and the edges of the graph. We can use neural networks that learn node representations in which the relations in the data are represented as pair-wise node relations, which also helps in representing the broader graph context. Some works on graph neural networks are as follows:

- Graph Neural Nets

- CANE: Context-aware network embedding for relation modelling

- Tri-party deep network representation
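To show what embedding both nodes and edges of a knowledge graph can look like, here is a minimal sketch using a translation-style score in the spirit of TransE, a technique this article does not name and that is swapped in purely for illustration. The entities, the relation, and the hand-set 2-D vectors are assumptions; a real model would learn the embeddings from observed triples.

```python
import math

# Hand-set 2-D embeddings, purely illustrative (a real model would learn them).
entity = {"paris": [1.0, 1.0], "france": [2.0, 3.0],
          "berlin": [4.0, 1.0], "germany": [5.0, 3.0]}
relation = {"capital_of": [1.0, 2.0]}


def score(head, rel, tail):
    """Translation-style plausibility: negative distance of head + rel to tail."""
    translated = [h + r for h, r in zip(entity[head], relation[rel])]
    return -math.dist(translated, entity[tail])


def rank_tails(head, rel):
    """Order candidate tail entities from most to least plausible."""
    candidates = [e for e in entity if e != head]
    return sorted(candidates, key=lambda t: score(head, rel, t), reverse=True)


print(rank_tails("paris", "capital_of"))
```

Because the relation is itself a vector, the same entity embeddings can be reused across many relation types, which is what makes this kind of model suitable for multi-relational data.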

- Hybrid Approaches

In the above approaches, we have seen how graphical models and neural networks can be used for deep relational learning. Combining the two can give even better results. Some approaches for hybridizing both methods are listed below.

- Lifted rules to specify compositional nets: In the sections above, we saw how hypothesized rules are used to learn successive layers. With this approach, the model can learn the relational dependencies in a data space. The composition functions are specified through the neurons of the network, where each neuron is mapped to facts and rules by the system. Two major works based on this system are listed below.

- Differentiable inference: In this type of system, neural networks are used to store classes of logical queries, and the system focuses on implementing reasoning using a series of numeric functions from the graphical approach. Some of the works using this approach are as follows:

- Deep classifiers and probabilistic inference: As the name suggests, systems built with this approach use deep neural networks as classifiers and graphical modelling for inference over their results. Simply put, the neural networks learn the probabilities of expressions. The input to this type of system is a combination of feature vectors and facts, where the facts are used by a logic program such as one written in FOL. Some of the works using this approach are as follows.

- Rule induction from data: In most works, the rules are introduced to the system by graphical knowledge bases, and neural networks then make the whole system expressive in terms of the inferences it produces.
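The "deep classifiers and probabilistic inference" idea from the list above can be sketched as follows. The probabilities are hard-coded stand-ins for the outputs of hypothetical neural classifiers, and the combination assumes that all facts are independent, so this is a simplified illustration and not the inference procedure of any particular system.

```python
# Probabilities that hypothetical neural classifiers might assign to ground facts.
p_friend = {("ann", "bob"): 0.9, ("ann", "cat"): 0.6}
p_kind = {"bob": 0.8, "cat": 0.5}


def p_happy(x):
    """P(Happy(x)) under the rule Happy(X) :- Friend(X, Y), Kind(Y).

    Assumes all facts are independent, so each Y yields an independent
    proof of Happy(x) and the proofs combine with a noisy-or.
    """
    p_no_proof = 1.0
    for (a, y), pf in p_friend.items():
        if a == x:
            # Probability that this particular proof fails.
            p_no_proof *= 1.0 - pf * p_kind.get(y, 0.0)
    return 1.0 - p_no_proof


print(round(p_happy("ann"), 3))
```

The division of labour is the point here: the classifiers only score individual facts, while the logical rule decides how those scores combine into an answer to the query.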

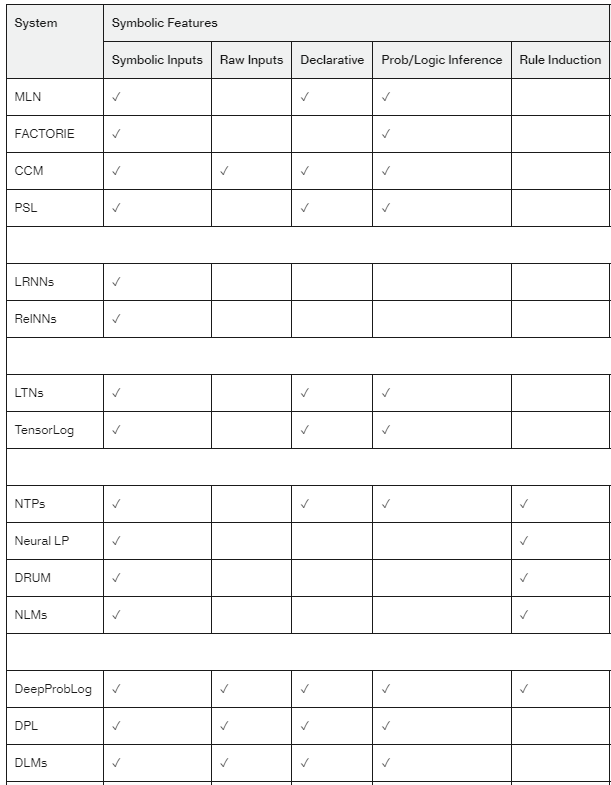

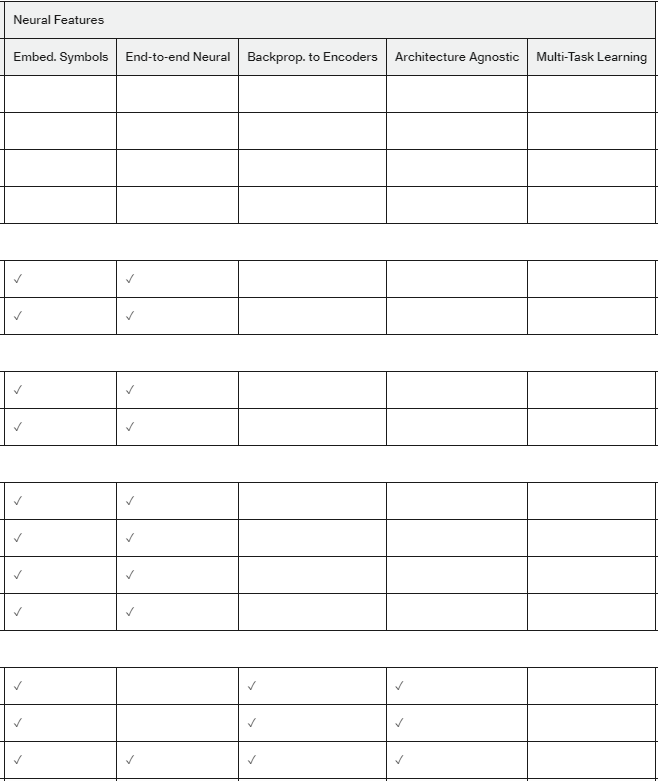

Here we have seen some of the approaches for performing relational learning and deep relational learning, along with some programs already implemented in the field. Below I am using a table to compare the main works in the field of deep relational learning.

Applications of Deep Relational Learning

As we have seen, the major goal of developing such systems is to make the results more expressive in terms of the relational information present in the data. Relations between instances are especially abundant in NLP data and time-series data, so most of the tasks for this kind of learning arise in those fields. They are as follows:

- Collective classification – Objects must be classified into classes using the relation of each object to the class.

- Link prediction – Predicting whether two objects in the data are linked and providing information about their relationship.

- Link-based clustering – Since we can predict links between objects, we can use those predictions to cluster the data based on the strength of the links between the objects.

- Social network modelling – Deep relational models can also be used for modelling social networks.

- Entity resolution – Deep relational learning can identify similar instances present in two or more datasets.
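To give a feel for the link prediction task in the list above, here is a deliberately simple sketch that scores unlinked pairs by the Jaccard overlap of their neighbours. This is a classical neighbourhood baseline, not a deep relational model, and the graph is made up; a deep relational approach would typically replace the hand-written score with learned node representations.

```python
from itertools import combinations

# Hypothetical undirected social graph stored as an adjacency map.
graph = {
    "ann": {"bob", "cat"},
    "bob": {"ann", "cat", "dan"},
    "cat": {"ann", "bob", "dan"},
    "dan": {"bob", "cat"},
    "eve": {"fay"},
    "fay": {"eve"},
}


def jaccard(u, v):
    """Shared neighbours divided by all neighbours of u or v."""
    union = graph[u] | graph[v]
    return len(graph[u] & graph[v]) / len(union) if union else 0.0


def predict_links(top_k=3):
    """Score every currently unlinked pair and return the top_k candidates."""
    pairs = [(u, v) for u, v in combinations(sorted(graph), 2)
             if v not in graph[u]]
    return sorted(pairs, key=lambda pair: jaccard(*pair), reverse=True)[:top_k]


print(predict_links(1))
```

The same pairwise scores could also feed link-based clustering, since pairs with strong predicted links are natural candidates for the same cluster.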

Final Words

In this article, we have seen an overview of deep relational learning along with statistical relational learning, and learned that the basic procedures of these models are the same. We have also discussed some examples of deep relational models, how they work, and how they compare on different parameters.