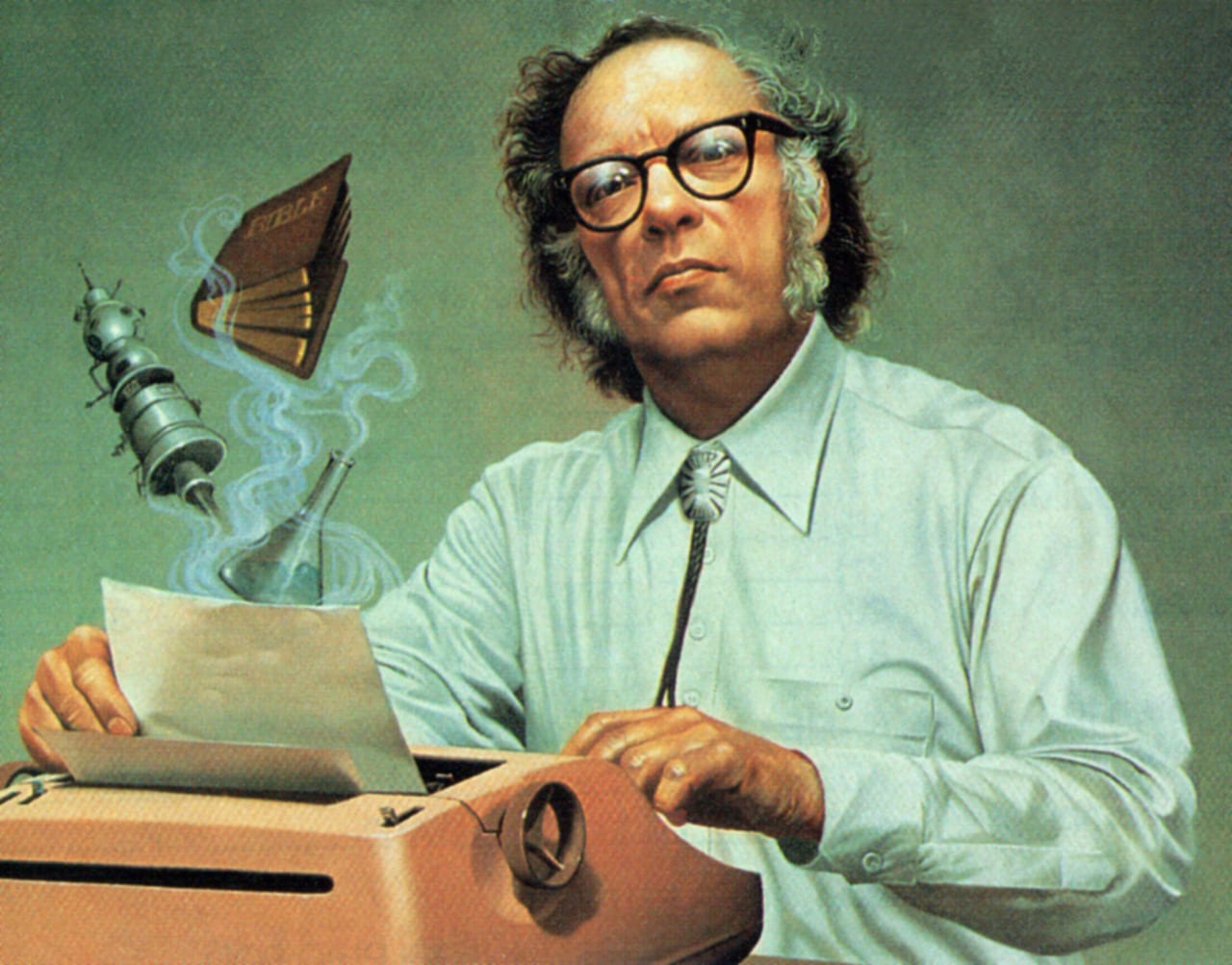

Renowned science fiction author Isaac Asimov saw safety as the most significant aspect of AI. Asimov predicted that it would become an absolute necessity to program robots with a set of laws to protect humankind from its own mechanical creations. He laid down a set of three basic and mandatory rules for robots back in 1942, popularly referred to as Asimov Rules.

- They should not harm humans, either through action or inaction.

- They must always obey human commands, unless doing so would violate the first rule.

- They must be able to secure their own existence so long as this does not violate the first two rules.

Asimov stressed a safe and responsible AI development process to propel the technology in a positive direction. However, as AI advances, there is growing concern that Asimov Rules might not be adequate for the 21st century.

To address this concern, the Future of Life Institute brought together several experts at a conference in Asilomar, California, to forge a set of 23 core principles for AI development and guard against catastrophic outcomes. The conference was attended by experts from several backgrounds, including engineers, programmers, roboticists, physicists, economists, philosophers, ethicists, and legal scholars.

The principles have since been signed by about 2,300 people, including 880 robotics and AI researchers. Physicist Stephen Hawking, SpaceX CEO Elon Musk, futurist Ray Kurzweil, Google DeepMind founder Demis Hassabis, and Skype co-founder Jaan Tallinn are some of the notable supporters on the list.

Previous attempts at establishing such a set of AI guidelines lacked foresight or were too generalized. The 23 Asilomar AI Principles, however, bring together the most current thinking on AI, touching upon research, ethics, and foresight. The topics range from data rights and research strategies to transparency issues and the risks of artificial superintelligence. Let us glance through this best-practices rulebook for developing safe AI.

Principles surrounding Research

1st Principle – Research Goal: The goal of AI research should be to create beneficial intelligence, not undirected intelligence. The whole point of such initiatives is to create value.

2nd Principle – Research Funding: Investments in AI should be accompanied by funding for research on ensuring the technology is used beneficially. This principle covers thorny questions in computer science, economics, law, ethics, and social studies. The legal and ethical status of AI, the values it should be aligned with, the need for robust AI systems in the future, and the effects of automation are a few of the issues these questions raise.

3rd Principle – Science-Policy Link: Much like the AI researchers who are central to an AI initiative, policy-makers also play a key role in ensuring that the technology is researched and deployed with utmost regard for safety. Policy-makers set the rules that govern how AI is implemented, so a constructive and healthy exchange between the two groups is what drives best practice for AI.

4th Principle – Research Culture: AI research can often have critical consequences, and we are still mostly in the learning phase of comprehending the technology, with fears surrounding the domain never far away. This makes it necessary to create an environment for researchers and developers that emphasizes cooperation, trust, and transparency.

5th Principle – Race Avoidance: Research projects are usually tied to deadlines. Moreover, the competition surrounding this new and powerful technology often pushes developers to cut corners on safety standards in order to reduce time to market. This can turn out to be one of the chief reasons behind AI mishaps. Teams developing AI systems are strongly advised to cooperate actively to ensure safety measures are taken throughout.

Principles surrounding Ethics and Values

6th Principle – Safety: It is important for developers to concentrate on making AI systems safe and secure throughout their operational lifetime. These systems must also be verifiably safe, wherever applicable and feasible.

7th Principle – Failure Transparency: As discussed earlier, AI is still in the early stages of widespread implementation, so there is a chance that an AI system will cause harm. When that happens, it should be possible to ascertain why, so that the same situation can be avoided in the future. Developers should spend a considerable amount of time determining why a particular AI system malfunctioned or caused harm. This transparency about failure cases helps drive better practices for AI.

8th Principle – Judicial Transparency: Autonomous systems may be involved in judicial decision-making. However, any such involvement must come with a satisfactory explanation that can be audited by a competent human authority.

9th Principle – Responsibility: Advanced AI systems can be used for many applications, some of which could lead to abuse or misuse of the technology. Designers of these complex systems must work to ensure that the moral implications of using them remain positive. Moreover, the builders of these systems are expected to share responsibility for shaping those implications.

10th Principle – Value Alignment: At the end of the day, it is fair to say that highly autonomous AI systems are designed to assist humans. Therefore, it is critical for designers to build these systems so that the goals and behaviors of the AI align with human values throughout their operation.

11th Principle – Human Values: The principles were originally drawn up to establish an AI building process that has utmost regard for human values and doesn’t end up annihilating humankind, as depicted in several sci-fi movies. In other words, AI systems must be designed and operated to be compatible with ideals of human dignity, freedom, and cultural diversity.

12th Principle – Personal Privacy: A line should be drawn to ensure that AI systems do not infringe on an individual’s personal privacy rights. Basically, human beings should have the right to access, manage, and control the data they generate, given the power of AI systems to analyze and use that data.

13th Principle – Liberty and Privacy: AI is already applied extensively to personal data. However, things might get out of control if our liberty is violated in the process. Thus, steps must be taken to ensure that applying AI to personal data does not unreasonably curtail personal liberty.

14th Principle – Shared Benefit: The aim of leveraging any technology is to make life easier for the greatest number of people. AI systems must be designed so that the technology benefits and empowers as many people as possible.

15th Principle – Shared Prosperity: Economic prosperity is another important advantage that the technology promises. However, it’s important to ensure that this prosperity is shared broadly, to benefit all of humanity.

16th Principle – Human Control: This is one of the most significant items on the list. Even Asimov suggested years ago that humans must always have complete and comprehensive control over AI systems, to avoid an AI apocalypse. Thus, humans must choose how and whether to delegate decisions to AI systems.

17th Principle – Non-Subversion: Highly advanced AI systems have the potential to streamline social and civic processes, on which the health of society depends. The power conferred by controlling such systems must be used to respect and improve these processes, rather than subvert them.

18th Principle – AI Arms Race: Humankind already fears the rise of AI, in which human-designed systems overpower their creators and wipe out humankind. Using this technology to create arms and automated weapons will only cause more destruction, or even wipe the human population off the face of the earth. It’s only logical that we avoid an arms race in lethal autonomous weapons.

Principles surrounding Longer-Term Issues

19th Principle – Capability Caution: The lack of a general consensus about how capable AI can become puts us in a pickle. Undoubtedly, the potential of AI is vast, and there are probably several applications still in the conception or testing stages. However, our lack of comprehensive know-how can affect us in the long run. The ideal approach is to avoid strong assumptions regarding upper limits on future AI capabilities.

20th Principle – Importance: The technology isn’t simply disruptive; advanced AI holds the potential to bring about a profound change in the history of life on Earth. Researchers and developers should plan for and manage the technology with commensurate care and resources.

21st Principle – Risks: These 23 principles were created primarily to reduce risks related to AI and ensure safety for all. AI systems can pose risks that are catastrophic or even existential. It’s necessary that we assess the expected impact of such risks in advance, so as to undertake planning and mitigation efforts commensurate with that impact.

22nd Principle – Recursive Self-Improvement: Many complex algorithms interact within an AI system. Some of these systems can be designed to recursively self-improve or self-replicate in a manner that rapidly increases their quality or quantity. Self-improvement or self-replication can introduce new problems, so such systems must be subject to strict safety and control measures.

23rd Principle – Common Good: Last but not least, such a technology should pave the way for growth and progress for everyone. It would be pointless and unfair if the technology resided in the hands of a few, with most of humanity left out. Superintelligence should only be developed in the service of widely shared ethical ideals, and for the benefit of all humanity.

These are the 23 AI Principles introduced at the Asilomar Conference. We are not necessarily close to any catastrophic moment, but the pace at which the wheels of AI technology are turning, and the growing intensity of current research, suggest that it was about time we rethought Asimov Rules on AI. The continued development of AI, guided by the 23 principles, will furnish outstanding opportunities for the technology to flourish and empower people worldwide, for decades and centuries to come.