A Guide to Barlow Twins: Self-Supervised Learning via Redundancy Reduction

Barlow Twins is a self-supervised learning architecture inspired by the redundancy reduction principle in the work of neuroscientist H. Barlow.
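As a rough illustration of redundancy reduction, the sketch below computes a Barlow Twins style loss in plain Python. It pushes the cross-correlation matrix between the embeddings of two augmented views towards the identity. The function name `barlow_twins_loss`, the argument names, and the default weight `lambda_offdiag=0.005` are assumptions made for this example, not taken from the article.

```python
import math

def barlow_twins_loss(z_a, z_b, lambda_offdiag=0.005):
    """Sketch of a Barlow Twins style loss for two batches of embeddings.

    z_a, z_b: lists of n embedding vectors (each of length d), one per view.
    lambda_offdiag is an assumed trade-off weight for the off-diagonal term.
    """
    n, d = len(z_a), len(z_a[0])

    def standardize(z):
        # Return d columns, each with zero mean and unit std over the batch.
        columns = []
        for col in zip(*z):
            mean = sum(col) / n
            std = math.sqrt(sum((v - mean) ** 2 for v in col) / n) + 1e-12
            columns.append([(v - mean) / std for v in col])
        return columns

    a, b = standardize(z_a), standardize(z_b)

    # Cross-correlation matrix between the feature dimensions of the two views.
    c = [[sum(x * y for x, y in zip(a[i], b[j])) / n for j in range(d)]
         for i in range(d)]

    # Invariance term: diagonal entries should be 1.
    on_diag = sum((c[i][i] - 1.0) ** 2 for i in range(d))
    # Redundancy reduction term: off-diagonal entries should be 0.
    off_diag = sum(c[i][j] ** 2 for i in range(d) for j in range(d) if i != j)
    return on_diag + lambda_offdiag * off_diag

# Two identical views whose features are already decorrelated.
decorrelated = [[1.0, 1.0], [1.0, -1.0], [-1.0, 1.0], [-1.0, -1.0]]
# Two identical views whose two features carry the same information.
redundant = [[1.0, 1.0], [-1.0, -1.0], [1.0, 1.0], [-1.0, -1.0]]

loss_decorrelated = barlow_twins_loss(decorrelated, decorrelated)
loss_redundant = barlow_twins_loss(redundant, redundant)
```

In this toy setup the redundant embeddings receive the larger loss, which is the behaviour the redundancy reduction term is meant to encourage.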

Topology is the study of shapes and their properties. Topology doesn’t care if you twist, bend or shear geometric objects. It deals only with the properties of these shapes that survive such deformations, such as the number of loops in them, the number of components, etc.

Haystack is a Python framework for developing end-to-end question answering systems. It provides a flexible way to use the latest NLP models to solve several QA tasks in real-world settings with huge data collections.

PaddleSeg is an image segmentation framework based on Baidu’s PaddlePaddle (Parallel Distributed Deep Learning). It provides high-performance, efficient SOTA segmentation models optimized for multi-node and multi-GPU production systems.

Process Mining is the amalgamation of computational intelligence, data mining and process management. It refers to the data-oriented analysis techniques used to draw insights into organizational processes.

Perceiver is a transformer-based model that uses both cross-attention and self-attention layers to generate representations of multimodal data. A latent array is used to extract information from the input byte array using top-down or feedback processing.

Thinc is a lightweight DL framework that makes model composition easy. Its advantages, such as shape inference, concise model representation, effortless debugging and a flexible config system, make it a recommendable choice of framework.

PyKEEN is a Python package that generates knowledge graph embeddings while abstracting away the training loop and evaluation. The knowledge graph embeddings obtained using PyKEEN are reproducible, and they convey precise semantics in the knowledge graph.

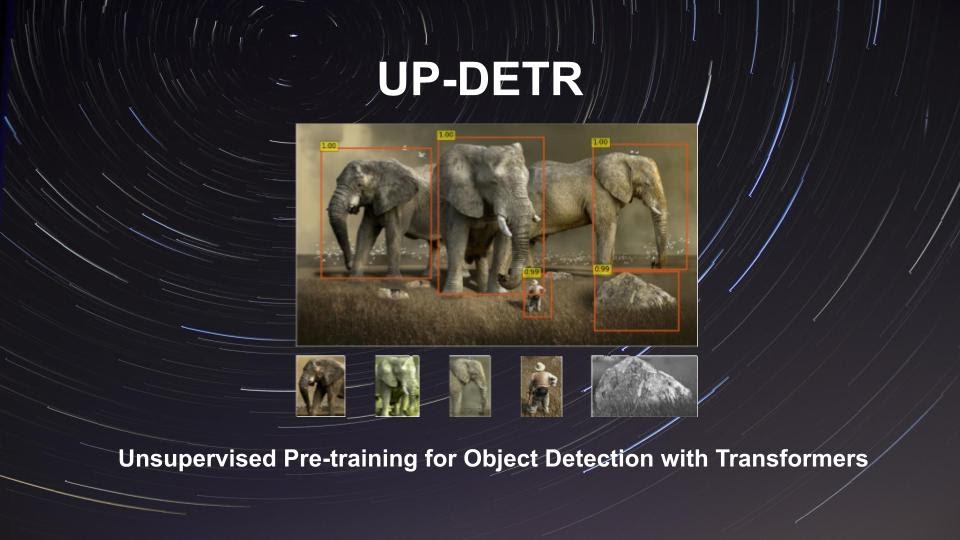

DETR (Detection Transformer) is an end-to-end object detection model that performs both object classification and localization, i.e. bounding box detection. It is a simple encoder-decoder Transformer with a novel loss function that allows us to formulate the complex object detection problem as a set prediction problem.

Multi-speaker text-to-speech synthesis refers to a system with the ability to generate speech in different users’ voices. Collecting data and training on it for each user can be a hassle with traditional TTS approaches.

Quantization is the process of mapping high-precision values (a large set of possible values) to low-precision values (a smaller set of possible values). Quantization can be applied to both the weights and the activations of a model.
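The mapping can be sketched with a simple affine (scale and zero-point) scheme in plain Python. This is only one of several possible quantization schemes; the function names `quantize` and `dequantize` and the 8-bit default are assumptions made for this illustration.

```python
def quantize(values, num_bits=8):
    """Map floats to unsigned integers in [0, 2**num_bits - 1] (affine scheme)."""
    qmin, qmax = 0, 2 ** num_bits - 1
    lo, hi = min(values), max(values)
    # Size of one integer step in float units; fall back to 1.0 for constant input.
    scale = (hi - lo) / (qmax - qmin) or 1.0
    # Integer that represents the float value 0.0 (up to rounding).
    zero_point = round(qmin - lo / scale)
    quantized = [max(qmin, min(qmax, round(v / scale) + zero_point))
                 for v in values]
    return quantized, scale, zero_point

def dequantize(quantized, scale, zero_point):
    """Map the integers back to approximate float values."""
    return [(q - zero_point) * scale for q in quantized]

weights = [-1.0, -0.25, 0.0, 0.5, 1.0]  # toy "weights" for illustration
q_weights, scale, zero_point = quantize(weights)
restored = dequantize(q_weights, scale, zero_point)
max_error = max(abs(w - r) for w, r in zip(weights, restored))
```

The restored values differ from the originals by roughly one quantization step at most, which is the precision given up in exchange for the smaller integer representation.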

SDNet is a contextualized attention-based deep neural network that achieved state-of-the-art results in the challenging task of conversational question answering. It makes use of inter-attention and self-attention along with recurrent bidirectional LSTM layers.

Transformer-XL is a Transformer model that allows us to model long-range dependencies without disrupting temporal coherence.

Medical Transformer relies on a gated position-sensitive axial attention mechanism that aims to work well on small datasets. It introduces Local-Global (LoGo), a novel training methodology for modelling image data efficiently.

BoTorch is a library built on top of PyTorch for Bayesian Optimization. It combines Monte-Carlo (MC) acquisition functions, a novel sample average approximation optimization approach, auto-differentiation, and variance reduction techniques.

XLNet is an extension of the Transformer-XL model. It learns bidirectional contexts using an autoregressive method. Let’s first understand the shortcomings of the BERT model so that we can better understand the XLNet architecture. Let’s see how BERT learns from data.

ALBERT is a lite version of BERT that shrinks the model in size while maintaining its performance.

Sense2vec is a neural network model that generates vector space representations of words from large corpora. It is an extension of the well-known word2vec algorithm. Sense2vec creates embeddings for “senses” rather than tokens of words.

AMR is a graph-based representation that aims to preserve semantic relations. AMR graphs are rooted, labelled, directed, acyclic graphs, comprising whole sentences. They are intended to abstract away from syntactic representations.

© Analytics India Magazine Pvt Ltd & AIM Media House LLC 2024
