An artificial neural network is a self-learning model that learns from its mistakes and gives the right answer at the end of the computation. In this article we explain how to build a neural network for the XOR gate in Python, using basic mathematical computations.

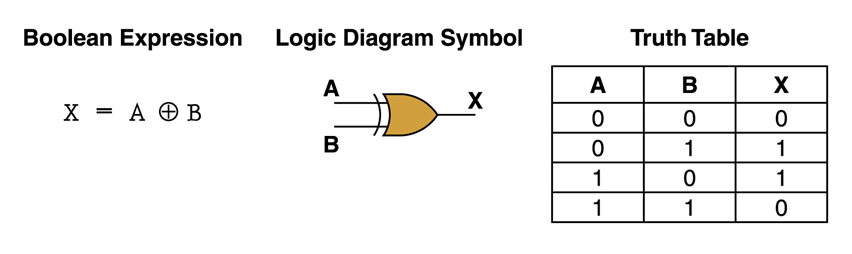

XOR Gate:

Here’s a representation of an XOR gate, with the inputs represented by A and B and the output by Y:
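The gate outputs 1 only when exactly one of its inputs is 1. As a quick check of that behaviour, here is a minimal sketch that builds the truth table with Python's bitwise XOR operator `^`:

```python
# Build the XOR truth table: Y is 1 only when exactly one of A and B is 1.
truth_table = [(a, b, a ^ b) for a in (0, 1) for b in (0, 1)]

print("A B | Y")
for a, b, y in truth_table:
    print(f"{a} {b} | {y}")
```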

These are the libraries required to build a neural network in Python, including a plotting library used to draw the sigmoid curve later.
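The exact library list isn't reproduced here. A minimal sketch, assuming NumPy for the matrix arithmetic and Matplotlib for the plots (our assumption about which libraries are meant); Matplotlib is treated as optional so the network code still runs without it:

```python
# Assumed libraries: NumPy for arrays and dot products,
# Matplotlib for plotting the sigmoid curve later on.
import numpy as np

try:
    import matplotlib.pyplot as plt
except ImportError:  # plotting is optional; the network itself only needs NumPy
    plt = None

print("NumPy", np.__version__)
```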

Activation Function And Its Derivative:

The activation function we will implement here is the sigmoid activation function, also called the logistic function. It scales any input to a value between 0 and 1 using powers of the constant ‘e’.

Here is a representation of the sigmoid function:

$$\sigma(x) = \frac{1}{1 + e^{-x}}$$

Let’s consider some examples using the above equation with the weights and see how it works:

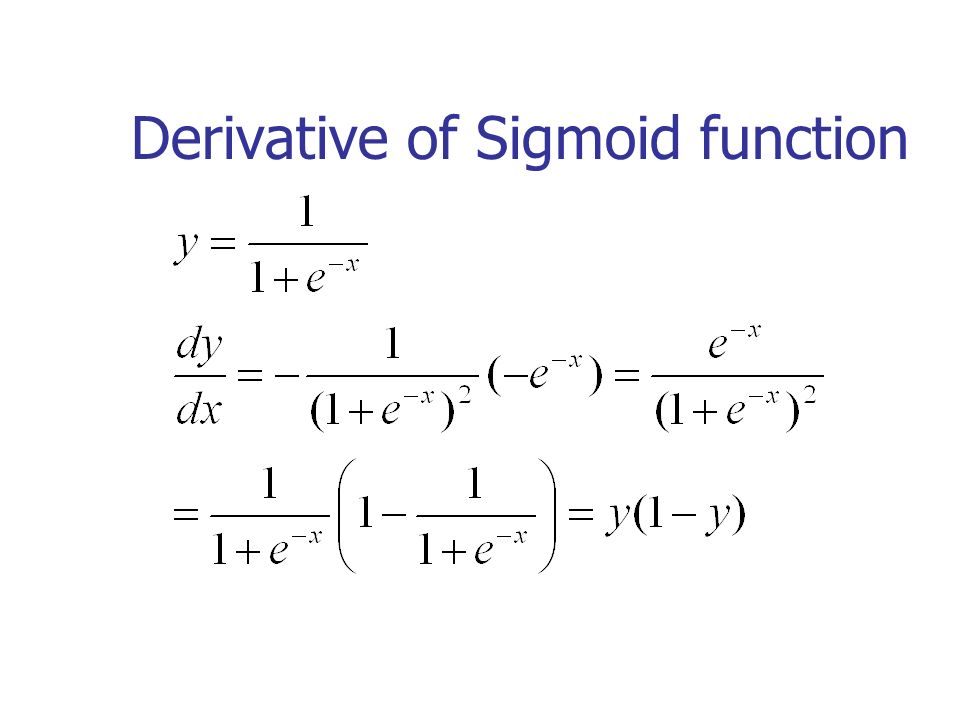
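To see the squashing at work, here is a small sketch (assuming NumPy) that evaluates the sigmoid on a few sample inputs. These inputs are our own illustrative values, not the ones in the figure:

```python
import numpy as np

def sigmoid(x):
    # Logistic function: maps any real number into the open interval (0, 1).
    return 1.0 / (1.0 + np.exp(-x))

samples = np.array([-10.0, -2.0, 0.0, 2.0, 10.0])
for x, s in zip(samples, sigmoid(samples)):
    print(f"sigmoid({x:+.1f}) = {s:.4f}")
```

Large negative inputs land near 0, large positive ones near 1, and 0 maps to exactly 0.5.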

Because it squashes values into the range 0 to 1 along a non-linear curve, the sigmoid introduces non-linearity into the network, which is what allows it to learn a mapping like XOR that a purely linear model cannot. We need the derivative of the sigmoid function to calculate the error gradient used to correct the weights during back propagation. Here is how the derivative is obtained:
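Starting from the definition of the sigmoid, differentiating and then splitting the fraction gives:

$$
\begin{aligned}
\sigma(x) &= \frac{1}{1 + e^{-x}} \\
\sigma'(x) &= \frac{e^{-x}}{\left(1 + e^{-x}\right)^{2}}
            = \frac{1}{1 + e^{-x}} \cdot \frac{e^{-x}}{1 + e^{-x}} \\
           &= \frac{1}{1 + e^{-x}} \cdot \left(1 - \frac{1}{1 + e^{-x}}\right)
            = \sigma(x)\,\bigl(1 - \sigma(x)\bigr)
\end{aligned}
$$

The second line uses $\frac{e^{-x}}{1 + e^{-x}} = \frac{(1 + e^{-x}) - 1}{1 + e^{-x}} = 1 - \sigma(x)$.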


From the above derivation we can infer that the derivative of the sigmoid function can be written in terms of the sigmoid function itself: $\sigma'(x) = \sigma(x)\,(1 - \sigma(x))$.

Let’s write functions to implement the sigmoid and its derivative in Python:
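A minimal sketch, assuming NumPy so that both functions work element-wise on arrays as well as on single numbers:

```python
import numpy as np

def sigmoid(x):
    """Logistic function sigma(x) = 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_derivative(x):
    """Derivative sigma'(x) = sigma(x) * (1 - sigma(x)), taking the raw input x."""
    s = sigmoid(x)
    return s * (1.0 - s)

print(sigmoid(0.0), sigmoid_derivative(0.0))  # 0.5 0.25
```

Here `sigmoid_derivative` takes the raw input x. A common alternative is to pass in the already-computed sigmoid output s and return `s * (1 - s)` directly, which avoids evaluating the exponential twice during back propagation.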

Graphical representation of a sigmoid curve and its derivative:
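The plot itself isn't reproduced here. A sketch that draws both curves on the interval [-10, 10], assuming NumPy and (optionally) Matplotlib. The derivative peaks at 0.25 at x = 0 and shrinks towards 0 as |x| grows:

```python
import numpy as np

try:
    import matplotlib.pyplot as plt
except ImportError:  # skip the plot if Matplotlib isn't installed
    plt = None

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def sigmoid_derivative(x):
    s = sigmoid(x)
    return s * (1.0 - s)

# Sample both curves at 201 evenly spaced points.
x = np.linspace(-10, 10, 201)
y_sigmoid = sigmoid(x)
y_derivative = sigmoid_derivative(x)

if plt is not None:
    plt.plot(x, y_sigmoid, label="sigmoid σ(x)")
    plt.plot(x, y_derivative, label="derivative σ'(x)")
    plt.xlabel("x")
    plt.legend()
    plt.grid(True)
    plt.show()
```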

Setting Up The Neural Network:


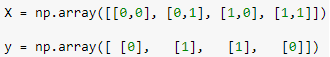

Let’s start by assigning the inputs of the XOR gate to an object ‘X’, and the corresponding outputs to an object ‘y’.
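A sketch of these two objects as NumPy arrays (NumPy is our assumption). Each row of X is one input pair, and y is stored as a column vector so that the matrix products later on line up row by row:

```python
import numpy as np

# Each row of X is one pair of XOR inputs (A, B).
X = np.array([[0, 0],
              [0, 1],
              [1, 0],
              [1, 1]])

# y holds the matching XOR outputs, one row per input pair.
y = np.array([[0],
              [1],
              [1],
              [0]])

print(X.shape, y.shape)  # (4, 2) (4, 1)
```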

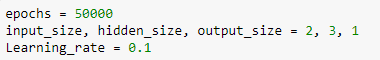

Now we need to design our neural network. Each input in ‘X’ has two values and each output in ‘y’ has one, so the network will have two input nodes and one output node, with a hidden layer of size 3 (you can choose any size you want here; just make sure the network is fully connected). Epochs are the number of training iterations: in each one, the error is back propagated and the weights are adjusted, scaled by the learning rate, to bring the output closer to the actual value. Since this is a simple computational model, we opt for a high number of epochs.
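The layer sizes below follow the description above. The number of epochs and the learning rate are our own illustrative choices, not values taken from the article:

```python
# Layer sizes for the XOR network described above.
input_size = 2    # two input nodes: A and B
hidden_size = 3   # one hidden layer with three nodes
output_size = 1   # one output node: Y

# Training settings (assumed values, chosen for illustration).
epochs = 10000        # number of training iterations
learning_rate = 0.5   # scales every weight update

print(f"{input_size}-{hidden_size}-{output_size} network, "
      f"{epochs} epochs, learning rate {learning_rate}")
```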


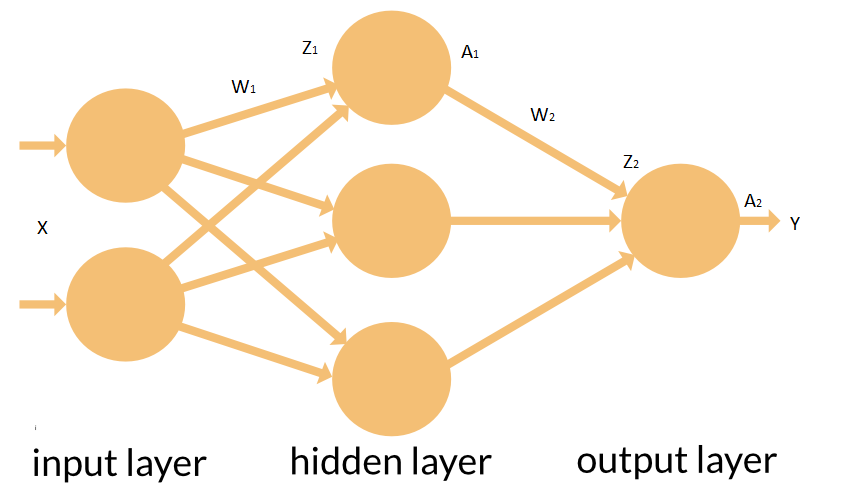

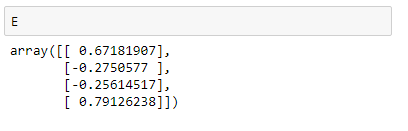

To start off, we need to initialise some weights. This gives the neural network a starting point from which it can measure how far it is from the actual output. We’ll use random numbers drawn from a uniform distribution in the range 0 to 1. The input size, hidden size and output size determine the shapes of the weight matrices.
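A sketch of the initialisation with NumPy's random generator. The use of `default_rng` and the fixed seed (so the starting weights are reproducible) are our own choices:

```python
import numpy as np

input_size, hidden_size, output_size = 2, 3, 1

# Fixed seed so the random starting weights are reproducible (our choice).
rng = np.random.default_rng(seed=42)

# Uniformly distributed weights in [0, 1); the shapes come from the layer sizes.
W1 = rng.uniform(0.0, 1.0, size=(input_size, hidden_size))   # input  -> hidden
W2 = rng.uniform(0.0, 1.0, size=(hidden_size, output_size))  # hidden -> output

print("W1 =\n", W1)
print("W2 =\n", W2)
```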


The weight matrices have the shapes given by the sizes initialised above: W1 is input size × hidden size (2 × 3) and W2 is hidden size × output size (3 × 1). The output is as follows:

Once the weights are initialised we can work on building the front propagation and the back propagation of our network.

Front Propagation:

Below are the front propagation equations from the above diagram.
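In matrix form, using $z_1$ and $z_2$ as our own names for the values before each activation and $a_1$ for the output of the hidden layer:

$$
\begin{aligned}
z_1 &= X W_1, & a_1 &= \sigma(z_1), \\
z_2 &= a_1 W_2, & \hat{y} &= \sigma(z_2)
\end{aligned}
$$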

During front propagation, the input X is multiplied (dot product) by W1 and passed through the sigmoid activation function ‘σ’ to get the output of the hidden layer. This output is then multiplied by W2 and passed through the sigmoid function ‘σ’ again to obtain the output, yhat. The Python code is as follows:
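A sketch of the front propagation, assuming NumPy and uniformly initialised weights as described earlier (the seed is our own choice, and `forward` is a hypothetical helper name). Because these equations have no bias terms, the first row, input [0, 0], always gives hidden activations of exactly 0.5:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def forward(X, W1, W2):
    """Front propagation: X -> hidden layer -> output, with no bias terms."""
    a1 = sigmoid(np.dot(X, W1))      # hidden-layer output, shape (4, 3)
    yhat = sigmoid(np.dot(a1, W2))   # network output, shape (4, 1)
    return a1, yhat

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]])
rng = np.random.default_rng(seed=42)
W1 = rng.uniform(0.0, 1.0, size=(2, 3))
W2 = rng.uniform(0.0, 1.0, size=(3, 1))

a1, yhat = forward(X, W1, W2)
print(yhat)
```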



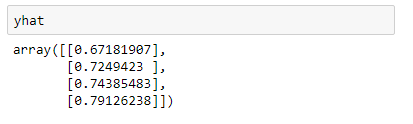

After we compute this, we get an output which looks like:

So yhat differs from y (the actual output) by some decimal amount. This difference is called the error ‘E’.


Now let us look at how much our output differs. All we need to find is:

E = yhat – y
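A short sketch with made-up yhat values (hypothetical, not the article's output) showing that E is computed element-wise, one entry per input pair. It also sums the half squared error into a single number, which is the quantity we assume is being minimised when the gradients are derived:

```python
import numpy as np

y = np.array([[0], [1], [1], [0]])
# Hypothetical untrained outputs, for illustration only.
yhat = np.array([[0.78], [0.83], [0.81], [0.86]])

E = yhat - y                           # element-wise error, same shape as y
half_squared_error = 0.5 * np.sum(E ** 2)

print(E.ravel())
print(half_squared_error)
```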

Learning rate in simple words:


Let’s take an example: say y = 5 and our model gives an output yhat = 3. The output is off by 2 (with E = yhat – y, that is E = –2). The learning rate controls how far the output yhat moves towards the actual value y in each iteration. Consider a learning rate of 0.1…

Step = |E| × 0.1

= 2 × 0.1

= 0.2

…So in the first iteration our output increases to 3.2. In gradient descent the step is proportional to the current error, so it shrinks as yhat approaches y: the next step is 0.1 × 1.8 = 0.18, giving 3.38, and so on, with the output getting ever closer to 5.
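A toy sketch of the same idea, in which the step is recomputed from the remaining gap at every iteration, so the steps shrink (3.2, then 3.38, and so on) instead of staying at 0.2. This only illustrates the learning rate; in the network itself the learning rate scales the weight updates, not the output directly:

```python
# Move an output yhat towards a target y; each step is
# learning_rate times the remaining gap, recomputed every iteration.
y = 5.0
yhat = 3.0
learning_rate = 0.1

history = [yhat]
for _ in range(50):
    yhat = yhat + learning_rate * (y - yhat)
    history.append(yhat)

print([round(v, 2) for v in history[:4]])  # [3.0, 3.2, 3.38, 3.54]
print(round(history[-1], 3))
```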

Back Propagation:

This is the process in which the error is corrected in every iteration (epoch). We need to find the gradient that tells us how to correct the error, so let us find the derivative of the error with respect to the weights. The derivative tells us by how much we need to change the weights in each epoch in order to reach the actual output.

Let us find the derivative of the error E with respect to W2 from the front propagation equations.
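A sketch of this step, taking the quantity to minimise as the half squared error $L = \tfrac{1}{2}\sum (\hat{y} - y)^2$ (our assumption; its derivative with respect to $\hat{y}$ is exactly $E = \hat{y} - y$). It writes the front propagation as $z_1 = XW_1$, $a_1 = \sigma(z_1)$, $z_2 = a_1 W_2$, $\hat{y} = \sigma(z_2)$ and uses $\odot$ for element-wise multiplication. The chain rule then gives:

$$
\begin{aligned}
\delta_2 &= \frac{\partial L}{\partial z_2}
          = (\hat{y} - y) \odot \sigma'(z_2)
          = E \odot \hat{y} \odot (1 - \hat{y}) \\
\frac{\partial L}{\partial W_2} &= a_1^{\top} \, \delta_2
\end{aligned}
$$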

Now let us compute the derivative of the error E with respect to W1.
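Continuing the chain rule one layer further back, with the same assumed cost $L = \tfrac{1}{2}\sum (\hat{y} - y)^2$, $a_1 = \sigma(XW_1)$, and the output-layer term $\delta_2 = E \odot \hat{y} \odot (1 - \hat{y})$. Each weight matrix is then moved against its gradient, scaled by the learning rate $\eta$:

$$
\begin{aligned}
\delta_1 &= \frac{\partial L}{\partial z_1}
          = \left(\delta_2 \, W_2^{\top}\right) \odot \sigma'(z_1)
          = \left(\delta_2 \, W_2^{\top}\right) \odot a_1 \odot (1 - a_1) \\
\frac{\partial L}{\partial W_1} &= X^{\top} \, \delta_1 \\
W_1 &\leftarrow W_1 - \eta \, \frac{\partial L}{\partial W_1}, \qquad
W_2 \leftarrow W_2 - \eta \, \frac{\partial L}{\partial W_2}
\end{aligned}
$$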

Once we compute this, we can work on building the back propagation equations in Python.
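A sketch of back propagation for this network, assuming NumPy, the half squared error as the cost, and our own helper names (`forward`, `loss`, `backward`). It performs a single gradient-descent update with an assumed learning rate of 0.5:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def forward(X, W1, W2):
    a1 = sigmoid(np.dot(X, W1))      # hidden-layer output
    yhat = sigmoid(np.dot(a1, W2))   # network output
    return a1, yhat

def loss(X, y, W1, W2):
    """Half squared error, the quantity the gradients are taken of."""
    _, yhat = forward(X, W1, W2)
    return 0.5 * np.sum((yhat - y) ** 2)

def backward(X, y, W1, W2):
    """Return (dL/dW1, dL/dW2) for the whole batch, via the chain rule."""
    a1, yhat = forward(X, W1, W2)
    E = yhat - y                                      # error at the output
    delta2 = E * yhat * (1.0 - yhat)                  # dL/dz2
    grad_W2 = np.dot(a1.T, delta2)                    # same shape as W2
    delta1 = np.dot(delta2, W2.T) * a1 * (1.0 - a1)   # dL/dz1
    grad_W1 = np.dot(X.T, delta1)                     # same shape as W1
    return grad_W1, grad_W2

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)
rng = np.random.default_rng(seed=42)
W1 = rng.uniform(0.0, 1.0, size=(2, 3))
W2 = rng.uniform(0.0, 1.0, size=(3, 1))

# One gradient-descent step: move each weight matrix against its gradient.
learning_rate = 0.5
grad_W1, grad_W2 = backward(X, y, W1, W2)
W1_new = W1 - learning_rate * grad_W1
W2_new = W2 - learning_rate * grad_W2

print("error before:", loss(X, y, W1, W2))
print("error after: ", loss(X, y, W1_new, W2_new))
```

Comparing `grad_W1` and `grad_W2` against finite differences of `loss` is a quick way to confirm the derivatives are right.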


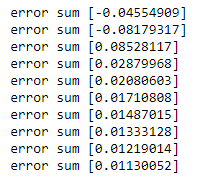

With the front and back propagation equations built, let us see how the error changes through the iterations. If the error decreases, then our neural network is working towards finding the right answer.
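A sketch of the full training loop, following the weight-only front and back propagation equations (no bias terms). It assumes NumPy, 10000 epochs and a learning rate of 0.5 (our illustrative values), and records the half squared error at every epoch. With these equations the input [0, 0] always produces hidden activations of 0.5, so the error may level off above zero rather than reach it:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

rng = np.random.default_rng(seed=42)
W1 = rng.uniform(0.0, 1.0, size=(2, 3))
W2 = rng.uniform(0.0, 1.0, size=(3, 1))

epochs = 10000
learning_rate = 0.5
errors = []  # half squared error recorded at every epoch

for epoch in range(epochs):
    # Front propagation
    a1 = sigmoid(np.dot(X, W1))
    yhat = sigmoid(np.dot(a1, W2))

    # Error for this epoch
    E = yhat - y
    errors.append(0.5 * np.sum(E ** 2))

    # Back propagation (both deltas use the weights from before this update)
    delta2 = E * yhat * (1.0 - yhat)
    delta1 = np.dot(delta2, W2.T) * a1 * (1.0 - a1)

    # Weight updates
    W2 -= learning_rate * np.dot(a1.T, delta2)
    W1 -= learning_rate * np.dot(X.T, delta1)

    if epoch % 2000 == 0:
        print(f"epoch {epoch:5d}  error {errors[-1]:.4f}")

print(f"final error {errors[-1]:.4f}")
```

Passing `errors` to Matplotlib's `plt.plot` shows the same trend as a curve.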

Now let us test the model with an input and see how accurate it is.
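A sketch of the final check. To make XOR reliably learnable, this version adds bias vectors `b1` and `b2` to the two layers. The article's equations don't include them, so this is an assumption on our part: without biases, the input [0, 0] always produces hidden activations of exactly 0.5 whatever W1 is. The `predict` helper is a hypothetical name, and the seed, epochs and learning rate are our own choices:

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

rng = np.random.default_rng(seed=42)
W1 = rng.uniform(0.0, 1.0, size=(2, 3))
b1 = rng.uniform(0.0, 1.0, size=(1, 3))   # assumed hidden-layer bias
W2 = rng.uniform(0.0, 1.0, size=(3, 1))
b2 = rng.uniform(0.0, 1.0, size=(1, 1))   # assumed output-layer bias

learning_rate = 0.5
for _ in range(10000):
    a1 = sigmoid(np.dot(X, W1) + b1)
    yhat = sigmoid(np.dot(a1, W2) + b2)
    delta2 = (yhat - y) * yhat * (1.0 - yhat)
    delta1 = np.dot(delta2, W2.T) * a1 * (1.0 - a1)
    W2 -= learning_rate * np.dot(a1.T, delta2)
    b2 -= learning_rate * delta2.sum(axis=0, keepdims=True)
    W1 -= learning_rate * np.dot(X.T, delta1)
    b1 -= learning_rate * delta1.sum(axis=0, keepdims=True)

def predict(x):
    """Run one input pair (A, B) through the trained network."""
    a1 = sigmoid(np.dot(np.atleast_2d(x), W1) + b1)
    return sigmoid(np.dot(a1, W2) + b2)

for pair in X:
    print(pair, "->", predict(pair).item())
```

If training has converged, the [0, 1] and [1, 0] rows print values close to 1 and the other two values close to 0. With a different seed, a small XOR network can occasionally settle in a poorer solution.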


Finally we have our result, which is very close to 1. This is how we teach a neural network to learn from its mistakes, making small changes in every iteration to reach the actual value. Here we have built a very simple network with few computations in front and back propagation, which is why it trains quickly. As we build more complex networks like Recurrent Neural Networks and Convolutional Neural Networks, the computations increase and training the model can take much longer. When it comes to Artificial Intelligence, more complex models generally need longer training to give good results, although training for longer does not guarantee better results, since a model can start to overfit its training data.
