A very common example of an unsupervised machine learning, clustering is the process of grouping similar data points into a cluster. Given a finite set of data points, clustering aims to find homogeneous subgroups of data points with similar characteristics.

In this article, we will learn the basics of a simple clustering algorithm called K-Means and we will also learn to implement it with the popular Scikit-learn library.

Before we begin, we introduce you to MachineHack’s practice section where beginners can learn to code a variety of problems without any overwhelming or intimidating Math or theories. MachineHack practise is a new feature of MachinHack that lets you get started with ML problems.

Clustering vs Classification

Clustering may sound similar to the popular classification type of problems, but unlike classification wherein a labelled set of classes are provided at the time of training, the idea of clustering is to form the classes or categories from the data which is not pre-classified into any set of categories, which is why clustering is an unsupervised learning algorithm.

Let’s look at a basic example to distinguish a clustering and a classification problem.

Clustering:

Classification:

In the first dataset, we do not see any labelled features that we can use to classify the data points based on any characteristics. But what clustering can do is it can provide us with classes that can categorise the given data points based on the features. For example, we can use the first dataset to group consumers into different categories based on their annual income and their spending score. In the second data set, we can use the features Age, Annual_Income and Score to predict or classify whether a consumer is male or female.

K-Means Clustering

K-means clustering is a clustering method that subdivides a single cluster or a collection of data points into K different clusters or groups.

The algorithm analyzes the data to find organically similar data points and assigns each point to a cluster that consists of points with similar characteristics. Each cluster can then be used to label the data into different classes based on the characteristics of the data.K-Means clustering works by constantly trying to find a centroid with closely held data points. This means that each cluster will have a centroid and the data points in each cluster will be closer to its centroid compared to the other centroids.

K-Means Algorithm

- Selecting an appropriate value for K which is the number of clusters or centroids

- Selecting random centroids for each cluster

- Assigning each data point to its closest centroid

- Adjusting the centroid for the newly formed cluster in step 4

- Repeating step 4 and 5 till all the data points are perfectly organised within a cluster space

Choosing The Right Number Of Clusters

The number of clusters that we choose for a given dataset cannot be random. Each cluster is formed by calculating and comparing the distances of data points within a cluster to its centroid. An ideal way to figure out the right number of clusters would be to calculate the Within-Cluster-Sum-of-Squares (WCSS).

WCSS is the sum of squares of the distances of each data point in all clusters to their respective centroids.

The idea is to minimise the sum. Suppose there are n observation in a given dataset and we specify n number of clusters (k = n) then WCSS will become zero since data points themselves will act as centroids and the distance will be zero and ideally this forms a perfect cluster, however this doesn’t make any sense as we have as many clusters as the observations. Thus there exists a threshold value for K which we can find using the Elbow point graph.

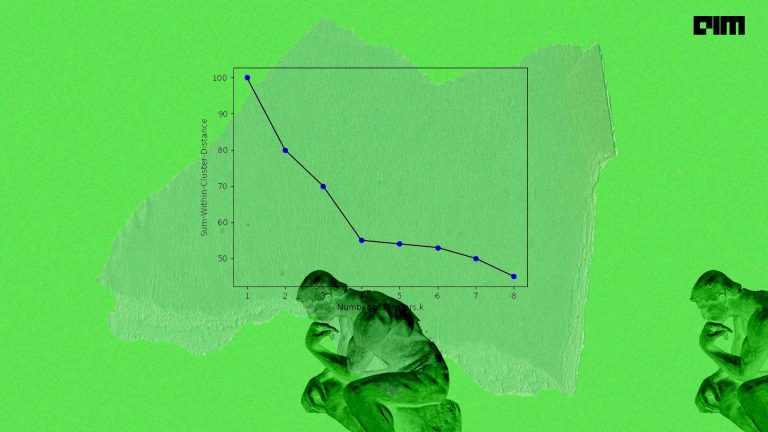

Elbow method

We can find the optimum value for K using an Elbow point graph. We randomly initialise the K-Means algorithm for a range of K values and will plot it against the WCSS for each K value.

The resulting graph would look something like what’s shown below:

For the above-given graph, the optimum value for K would be 5. As we can see that with an increase in the number of clusters the WCSS value decreases. We select the value for K on the basis of the rate of decrease in WCSS. For example, from cluster 1 to 2 to 3 in the above graph we see a sudden and huge drop in WCSS. After 5 the drop is minimal and hence we chose 5 to be the optimal value for K.

The Random Initialisation Trap

One major drawback of K-Means clustering is the random initialisation of centroids. The formation of clusters is closely bound by the initial position of a centroid. The random positioning of the centroids can completely alter clusters and can result in a random formation.

The solution is K-means++. K-Means++ is an algorithm that is used to initialise the K-Means algorithm.

K Means++

The algorithm is as follows:

- Choose one centroid uniformly at random from among the data points.

- For each data point say x, compute D(x), which is the distance between x and the nearest centroid that has already been chosen.

- Choose one new data point at random as a new centroid, using a weighted probability distribution where a point x is chosen with probability proportional to D(x)2.

- Repeat Steps 2 and 3 until K centres have been chosen.

- Proceed with standard k-means clustering.

Now we have enough understanding of K-Means Clustering. So let’s do it practically.

Hands-On K-Means Clustering

Importing Necessary Libraries

#Importing librariesimport pandas as pdimport matplotlib.pyplot as plt#For Jupyter Notebooks to show the plots%matplotlib inline

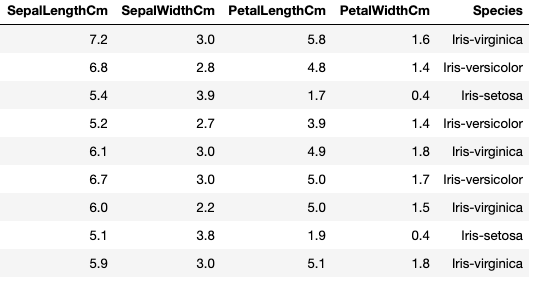

Importing The Iris Dataset

#Importing the datasetiris = pd.read_csv("Iris.csv")

Selecting The Features For Clustering

Since we are clustering the data, we don’t need the labels or classes of Iris species. We will drop this column and will use clustering to group the data points into 3 clusters based on sepal length and petal length of the flower.

#Dropping the 'Species' columniris_clustering = iris.drop(columns = ['Species'])#Selecting 2 random features from the dataset for clustering#Here we choose Sepal Length @ column 0 and Petal Length @ column 2X = iris_clustering.iloc[:, [0,2]].values

Note: We only chose 2 features as we are going to plot in 2D space. The algorithm will work finr for any number of features.

Using Elbow Graph To Find Optimum Number Of Clusters

# Using the elbow method to find the optimal number of clusters

from sklearn.cluster import KMeans

wcss = []

for i in range(1, 11):

kmeans = KMeans(n_clusters = i, init = 'k-means++', random_state = 42)

kmeans.fit(X)

#appending the WCSS to the list (kmeans.inertia_ returns the WCSS value for an initialized cluster)

wcss.append(kmeans.inertia_)

#Plotting The Elbow graphplt.plot(range(1, 11), wcss)plt.title('The Elbow Point Graph')plt.xlabel('Number of clusters')plt.ylabel('WCSS')plt.show()

Output:

We can see that after 3 the drop in WCSS is minimal. So we choose 3 as the optimal number of clusters.

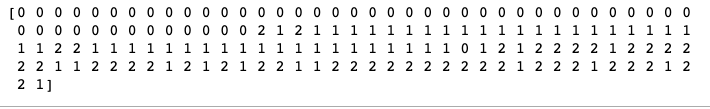

Initialising K-Means With Optimum Number Of Clusters

#Fitting K-Means to the datasetkmeans = KMeans(n_clusters = 3, init = 'k-means++', random_state = 0)

#Returns a label for each data point based on the number of clustersy = kmeans.fit_predict(X)print(y)

Output:

Visualising The Clusters

# Visualising the clusters#Scatter plotting for (x,y) with label 1 as Cluster 1 in color c = red and points in size s = 50 plt.scatter(X[y == 0, 0], X[y == 0, 1], s = 50, c = 'red', label = 'Cluster 1')#Scatter plotting for (x,y) with label 2 as Cluster 2 in color c = blue and points in size s = 50 plt.scatter(X[y == 1, 0], X[y == 1, 1], s = 50, c = 'blue', label = 'Cluster 2')#Scatter plotting for (x,y) with label 3 as Cluster 3 in color c = green and points in size s = 50 plt.scatter(X[y == 2, 0], X[y == 2, 1], s = 50, c = 'green', label = 'Cluster 3')

#Scatter plotting the centroids with label = 'Centroids' in color c = cyan and points in size s = 100 plt.scatter(kmeans.cluster_centers_[:, 0], kmeans.cluster_centers_[:, 1], s = 100, c = 'cyan', label = 'Centroids')

plt.title('Iris Flower Clusters')plt.xlabel('Sepal Length in cm')plt.ylabel('Petal Length in cm')plt.legend()plt.show()

Output:

Happy clustering!