Have you ever noticed that when you start typing a query into a search engine, it completes the rest automatically or suggests related queries? How does it do this? Part of the answer is relation extraction. Relation extraction models predict attributes of entities and the relationships between entities mentioned in text. For example, from the sentence “Elon Musk founded Tesla” a model extracts the entities “Elon Musk” and “Tesla” and the relation “founded”, which lets it recognize that “Elon Musk is founder of Tesla” states the same fact. Relation extraction is therefore a key component of many natural language processing tasks.

Relation extraction plays a large part in knowledge graph construction: it uncovers relation facts that we can use to expand a knowledge graph, which gives machine learning models a way to understand the human world. Beyond knowledge graphs, it is also used in question answering, recommendation systems, search engines, and summarization applications.
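As a rough illustration of how extracted relation facts can grow a knowledge graph, the sketch below stores (head, relation, tail) triples in a plain dictionary. The `Triple` type and the `add_fact` helper are hypothetical names used only for this example; real knowledge graphs use dedicated stores.

```python
from collections import defaultdict
from typing import NamedTuple


class Triple(NamedTuple):
    """One relation fact: head entity, relation label, tail entity."""
    head: str
    relation: str
    tail: str


def add_fact(graph, triple):
    """Add a fact to the graph, keyed by head entity, skipping duplicates."""
    edge = (triple.relation, triple.tail)
    if edge not in graph[triple.head]:
        graph[triple.head].append(edge)


graph = defaultdict(list)
# Both example sentences from the introduction reduce to the same fact,
# so the second call does not add a new edge.
add_fact(graph, Triple("Elon Musk", "founded", "Tesla"))
add_fact(graph, Triple("Elon Musk", "founded", "Tesla"))
print(dict(graph))
```

Keying the graph by head entity makes it cheap to look up everything known about one entity, which is the typical access pattern in question answering.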

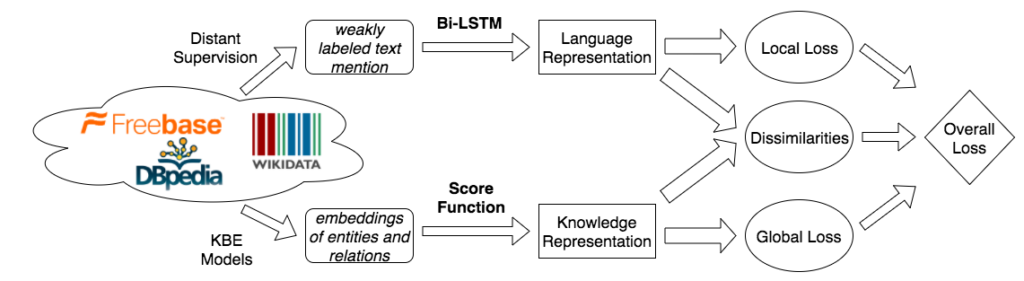

Several datasets are available for this kind of modeling, such as DocRED, TACRED, and ACE 2005, and various models and frameworks, such as COKE and SSAN, have already been trained on them. The figure below shows the basic structure of one such architecture, HRERE (Heterogeneous Representation for Neural Relation Extraction):


Roughly, there are four kinds of relation extraction tasks, each handled by different algorithms.

- Sentence-level relation extraction is the most common relation extraction task. Given a sentence, we find the relation between two tagged entities from a predefined relation set. TACRED is the most commonly used dataset for this task.


- Bag-level relation extraction – in this task, we use existing knowledge graphs to find the relationship between entities. Knowledge graphs such as Freebase and Wikidata already contain relations between entities, and all sentences that mention the same entity pair are grouped into a bag that is labeled with the known relation. The most commonly used dataset for this task is NYT10.


- Few-shot relation extraction – this setting is used to adapt a model quickly to new relations. It mirrors the way humans learn, using only a handful of samples per relation. FewRel is the dataset most widely used for this task. The following image illustrates the basic methodology.


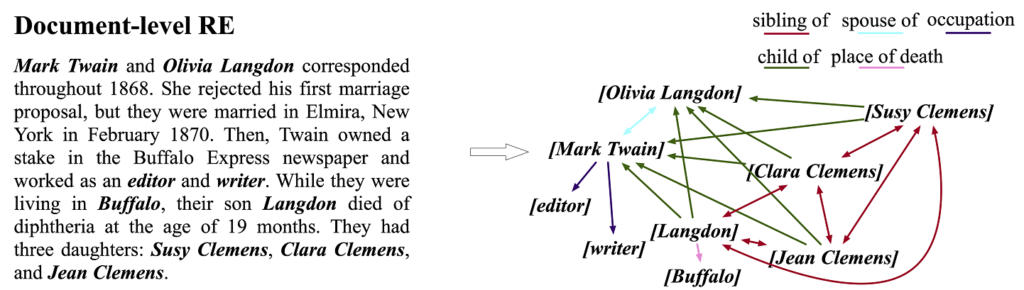

- Document-level relation extraction – this task focuses on extracting relations between entity pairs across a whole document, including inter-sentence relations that span several sentences. DocRED is the standard dataset for this task. The following image illustrates the methodology.

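The bag-level setting from the list above can be sketched in a few lines. This is a minimal illustration on toy data, not how NYT10 was actually built: the `knowledge_graph` dictionary, the example sentences, and the `build_bags` helper are all assumptions made for this example, and entity matching is done by naive substring search.

```python
from collections import defaultdict

# Toy knowledge graph: (head, tail) -> relation. Illustrative data only.
knowledge_graph = {("Elon Musk", "Tesla"): "founded"}

# Toy corpus. The second sentence mentions both entities without
# expressing the "founded" relation, which is the typical noise
# that bag-level methods have to cope with.
sentences = [
    "Elon Musk founded Tesla.",
    "Elon Musk visited a Tesla factory.",
    "Tesla builds electric cars.",
]


def build_bags(sentences, knowledge_graph):
    """Group sentences that mention both entities of a known pair into one bag."""
    bags = defaultdict(list)
    for sentence in sentences:
        for head, tail in knowledge_graph:
            if head in sentence and tail in sentence:
                bags[(head, tail)].append(sentence)
    return bags


bags = build_bags(sentences, knowledge_graph)
for pair, bag in bags.items():
    # The whole bag inherits the relation stored in the knowledge graph.
    print(pair, knowledge_graph[pair], len(bag))
```

Predicting one label per bag rather than per sentence lets a model tolerate individual sentences, like the second one here, that mention the pair without expressing the relation.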

What is OpenNRE?

OpenNRE is an open-source toolkit for neural relation extraction (NRE). The following image illustrates the basic NRE algorithm.


OpenNRE provides an open-source, extensible framework for implementing relation extraction models. The package is useful for:

- Newcomers who are starting to work on relation extraction.

- Developers, who get an easy-to-use environment with pretrained models that can be deployed in production without any training and that perform well.

- Researchers, who can easily use the package for their experiments.

The package includes models trained on the Wiki80 and TACRED datasets. To know more about the datasets, readers can go through this link. The package provides high-performing models based on convolutional neural networks and BERT.

Code Implementation: OpenNRE

Let’s get started with OpenNRE using Google Colab.

Before installing the toolkit, we need to enable a GPU for the notebook, which speeds up our programs. Open the Runtime menu and click Change runtime type. In the dialog that appears, select GPU under Hardware accelerator and click Save.

We are going to clone the package in Colab, so we first mount our Drive in the notebook. The example below shows how to mount the drive on your runtime using an authorization code.
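To confirm that the runtime really has a GPU attached, a quick check along the following lines can help. This is a sketch that assumes the NVIDIA `nvidia-smi` tool is on the path when a GPU is present, as is usual on GPU runtimes; `gpu_visible` is a hypothetical helper name.

```python
import shutil
import subprocess


def gpu_visible():
    """Return True if nvidia-smi is present and lists at least one GPU."""
    smi = shutil.which("nvidia-smi")
    if smi is None:
        # No NVIDIA driver tools installed, so no GPU runtime.
        return False
    # "nvidia-smi -L" prints one line per GPU, each starting with "GPU".
    result = subprocess.run([smi, "-L"], capture_output=True, text=True)
    return result.returncode == 0 and "GPU" in result.stdout


print("GPU available:", gpu_visible())
```

If this prints `False`, revisit the runtime settings before loading the larger models.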

from google.colab import drive

drive.mount('/content/drive')

Output:

Now we can start our trial of the package:

We can clone the OpenNRE repository using the following command.

!git clone https://github.com/thunlp/OpenNRE.git

Output:

To use the package we have cloned, we need to change the notebook's working directory to the folder that contains it.

%cd OpenNRE

Output:

Installing the package's dependencies from its requirements.txt file.

!pip install -r requirements.txt

Output:

Now we are prepared to use OpenNRE.

Importing OpenNRE.

import opennre

Loading a pre-trained model from the package, with a CNN encoder trained on the Wiki80 dataset:

model = opennre.get_model('wiki80_cnn_softmax')

Output:

Loading the model takes a few minutes. Once it is loaded, we call the model's infer method for relation extraction. The input gives the text together with the character positions of the head entity (h) and the tail entity (t).

model.infer({'text': 'He was the son of ashok, and grandson of rahul.', 'h': {'pos': (18, 23)}, 't': {'pos': (41, 46)}})

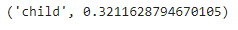

Output:

Here the model predicts the relation child with a confidence score of about 32%. The score is low, but the prediction is almost satisfying, because a son is indeed a child.
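Counting character positions by hand is error-prone, so it can help to compute them. The sketch below assumes, in line with the example in the OpenNRE README, that `pos` holds the start and end character offsets of an entity mention in `text`, with the end exclusive. The helpers `entity_span` and `make_item` are hypothetical names and are not part of OpenNRE.

```python
def entity_span(text, mention):
    """Return (start, end) character offsets of the first occurrence of mention."""
    start = text.find(mention)
    if start == -1:
        raise ValueError(f"{mention!r} not found in text")
    return (start, start + len(mention))


def make_item(text, head, tail):
    """Build the dictionary passed to model.infer from two entity mentions."""
    return {
        "text": text,
        "h": {"pos": entity_span(text, head)},
        "t": {"pos": entity_span(text, tail)},
    }


text = "He was the son of ashok, and grandson of rahul."
item = make_item(text, "ashok", "rahul")
print(item["h"]["pos"], item["t"]["pos"])  # (18, 23) (41, 46)

# Slicing the text with the offsets recovers the mentions.
assert text[18:23] == "ashok" and text[41:46] == "rahul"
```

The resulting `item` can be passed directly to `model.infer(item)`. Note that `str.find` returns only the first occurrence, so a mention that appears more than once needs explicit offsets.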

Let’s try another model from the package.

Loading the model.

model = opennre.get_model('wiki80_bert_softmax')

Output:

Here I have loaded a model from the package that uses a BERT encoder trained on the Wiki80 data.

Checking the performance of the model.

model.infer({'text': 'He was the son of ashok, and grandson of rahul.', 'h': {'pos': (18, 23)}, 't': {'pos': (41, 46)}})

Output:

Here the prediction is right again, and this time with high confidence: the model tells us that Rahul is the father of Ashok, just as Ashok is the father of “him”. The confidence of the model is around 99%, which is very satisfying.

The package includes one more pretrained model, wiki80_bertentity_softmax, which also uses a BERT encoder.
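The `_softmax` suffix in the model names suggests where the confidence score comes from. Assuming the score is the largest softmax probability over the relation labels, the following sketch shows how raw scores (logits) turn into a label and a confidence. The labels and logits here are made up for illustration and are not real model output.

```python
import math


def softmax(logits):
    """Convert raw scores into probabilities that sum to 1."""
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predict(labels, logits):
    """Return the most probable label and its probability."""
    probs = softmax(logits)
    best = max(range(len(labels)), key=lambda i: probs[i])
    return labels[best], probs[best]


# Hypothetical labels and logits, not real model output.
labels = ["father", "child", "spouse"]
relation, score = predict(labels, [6.0, 1.0, 0.5])
print(relation, round(score, 3))
```

A score near 1 means one logit dominates the others, while a score such as 0.32 means the probability mass is spread over several candidate relations.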

Loading the model:

model = opennre.get_model('wiki80_bertentity_softmax')

Output:

Checking the performance of the model.

model.infer({'text': 'He was the son of ashok, and grandson of rahul.', 'h': {'pos': (18, 23)}, 't': {'pos': (41, 46)}})

Output:

This model also relates the word son to the relation child, and the confidence of the prediction is also good.

In this article we have seen that the pre-trained models of the OpenNRE package perform well and give sensible predictions. The package is also very easy to use. The example folder of the package contains scripts for various models, which we can edit and use according to our requirements. Because it is open source, there is no charge for deploying the pretrained models. I encourage you to go through the toolkit’s documentation and try more with OpenNRE.
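Since all three models accept the same input, the comparison above can be wrapped in a small loop. The sketch below is one possible way to do that. `compare_models` is a hypothetical helper, and `_StubModel` stands in for a real model so the snippet runs without downloading weights; its return value is a placeholder, not actual OpenNRE output.

```python
def compare_models(model_names, item, load_model):
    """Run the same input through several models and collect (relation, score)."""
    results = {}
    for name in model_names:
        model = load_model(name)
        relation, score = model.infer(item)
        results[name] = (relation, score)
    return results


class _StubModel:
    """Stand-in model so the sketch runs without downloading any weights."""

    def infer(self, item):
        # Placeholder result, not a real prediction.
        return ("child", 0.5)


item = {
    "text": "He was the son of ashok, and grandson of rahul.",
    "h": {"pos": (18, 23)},
    "t": {"pos": (41, 46)},
}
names = ["wiki80_cnn_softmax", "wiki80_bert_softmax", "wiki80_bertentity_softmax"]
results = compare_models(names, item, lambda name: _StubModel())
for name, (relation, score) in results.items():
    print(f"{name}: {relation} ({score:.2f})")
```

In the Colab notebook, passing `opennre.get_model` as `load_model` would run the real models instead of the stub.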
