There has been tremendous progress in the field of Artificial Intelligence, and the field is evolving rapidly, driven by AI systems that learn from huge amounts of carefully labelled data. This paradigm, supervised learning, has a proven track record of training specialized models that perform extremely well on the tasks they were trained for. Unfortunately, there is a limit to how far AI can go with supervised learning alone. AI systems that develop a deeper, more nuanced understanding of reality than what is specified in the training data would be more useful, and would ultimately bring AI closer to human-level intelligence.

Artificially intelligent programs can now recognize faces and objects in photos and videos, transcribe audio in real time and detect cancer years in advance, putting them shoulder-to-shoulder with humans on some of the most complicated and repetitive tasks. The main drawback is that deep learning models built from scratch need vast amounts of data and compute resources to learn well. Training them also takes a long time, which is unsuitable for use cases with a short time budget. Transfer learning, the method of reusing the knowledge gained by one trained AI model in another, can help solve such problems.

What is Transfer Learning?

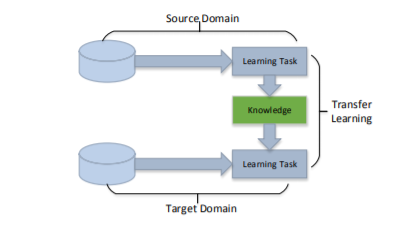

Transfer learning is a technique in which a model developed for one predictive modelling problem is reused, partly or wholly, on a different but related problem, to accelerate training and improve the model's performance on that problem. In short, it is the reuse of a pre-trained model on a new problem. The technique has become very popular in deep learning because it can train deep neural networks with comparatively little data and in less time. This makes it valuable in data science, since most real-world problems do not have millions of labelled data points to train such complex models. Features from a model that has learned to identify one thing can be used to kick-start a model meant to identify another.

Transfer learning is usually used when the dataset for a task has too little data to train a full-scale model from scratch. It is essentially an optimization: it allows rapid progress or improved performance when modelling the second task. Transfer learning is related to problems such as multi-task learning and concept drift, and it is not exclusively an area of study for deep learning. In deep learning, the weights in one or more layers of a pre-trained network are reused in a new model; during training, those weights may be kept fixed, fine-tuned, or adapted entirely. Transfer learning therefore accelerates the training of neural networks either as a weight initialization scheme or as a feature extraction method.
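The difference between feature extraction (reused weights kept fixed) and weight initialization (reused weights fine-tuned) can be seen in a minimal NumPy sketch. Everything here is hypothetical and for illustration only: the "pre-trained" layer is just random numbers standing in for weights learned on a source task, the target data is synthetic, and `train` is an illustrative helper, not a library function.

```python
import numpy as np


def train(X, y, W_base, freeze_base, lr=0.1, steps=50):
    """Fit a tiny two-layer regressor h = tanh(X @ W_base), y_hat = h @ w_head with MSE."""
    W_base = W_base.copy()                 # work on a copy; the pre-trained array is never mutated
    w_head = np.zeros(W_base.shape[1])     # new, task-specific output layer
    n = len(X)
    for _ in range(steps):
        h = np.tanh(X @ W_base)            # features produced by the reused layer
        err = h @ w_head - y               # residuals of the squared-error loss
        grad_head = h.T @ err / n
        if not freeze_base:
            # Fine-tuning: back-propagate through tanh into the reused layer too.
            grad_pre = np.outer(err, w_head) * (1.0 - h ** 2)
            W_base -= lr * (X.T @ grad_pre / n)
        w_head -= lr * grad_head
    return W_base, w_head


rng = np.random.default_rng(0)

# Hypothetical stand-in for weights learned on a source task (random numbers here).
W_pretrained = rng.normal(size=(4, 8))

# Small synthetic target dataset.
X_target = rng.normal(size=(20, 4))
y_target = X_target[:, 0] - 0.5 * X_target[:, 1]

# Feature extraction: reused weights stay frozen, only the new head is trained.
W_fx, head_fx = train(X_target, y_target, W_pretrained, freeze_base=True)

# Weight initialization: reused weights are only the starting point and are fine-tuned.
W_ft, head_ft = train(X_target, y_target, W_pretrained, freeze_base=False)
```

In deep learning frameworks the same choice is usually made per layer: in Keras by setting `layer.trainable = False` on the reused layers, in PyTorch by setting `requires_grad = False` on their parameters.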

How Transfer Learning Works and Why It Is Needed

During transfer learning, knowledge from a source task is leveraged to improve learning in a new task. If the transfer ends up decreasing performance on the new task, it is called negative transfer. A major challenge when developing transfer methods is ensuring positive transfer between related tasks while avoiding negative transfer between less related ones. When applying knowledge from one task to another, the original task's characteristics are mapped onto those of the other to specify correspondences. A human typically provides this mapping, but methods that perform the mapping automatically are also being developed. In this way, the knowledge gained while solving one problem is stored and applied to a different but related problem. For example, the knowledge a machine learning algorithm gained in learning to recognize cars could later be transferred to a separate model being developed to recognize other types of vehicles, such as trucks.

Three common indicators are used to measure the effectiveness of transfer learning. The first is whether the target task can be performed using only the transferred knowledge. The second is how long it takes to learn the target task with the transferred knowledge, compared with how long it would take without it. The third is whether the final performance on the target task learned via transfer learning is comparable to the performance achieved without any transfer of knowledge.

We generally first train a base network on a base dataset and task, then repurpose the learned features, or transfer them, to a second target network that is trained on a target dataset and task. This process tends to work only if the features are general, meaning suitable to both the base and target tasks, rather than specific to the base task. For example, we can take a model trained on ImageNet and use its learned weights to initialize training on an entirely new classification dataset. This form of transfer learning used in deep learning is known as inductive transfer: the scope of possible models (the model bias) is narrowed in a beneficial way by using a model fit on a different but related task.
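The three indicators can be computed from two learning curves: the validation score per epoch for a model trained with transfer and one trained without it. The sketch below is a minimal, hypothetical helper in plain Python; it assumes that higher scores are better, that `curve[0]` is the score measured before any training on the target task, and that a "target score" threshold is chosen by the user. It also flags negative transfer as a lower final score with transfer than without.

```python
from typing import Optional, Sequence


def epochs_to_reach(curve: Sequence[float], target: float) -> Optional[int]:
    """Index of the first epoch whose score reaches `target`, or None if it never does."""
    for epoch, score in enumerate(curve):
        if score >= target:
            return epoch
    return None


def transfer_report(with_transfer: Sequence[float],
                    without_transfer: Sequence[float],
                    target: float) -> dict:
    """Compare two learning curves (score per epoch, higher is better).

    Assumes index 0 is the score before any training on the target task.
    """
    return {
        # 1. Performance from transferred knowledge alone, before target training.
        "initial_gain": with_transfer[0] - without_transfer[0],
        # 2. Epochs needed to reach the target score, with and without transfer.
        "epochs_with": epochs_to_reach(with_transfer, target),
        "epochs_without": epochs_to_reach(without_transfer, target),
        # 3. Difference in final performance after training.
        "final_gain": with_transfer[-1] - without_transfer[-1],
        # A lower final score with transfer than without it indicates negative transfer.
        "negative_transfer": with_transfer[-1] < without_transfer[-1],
    }
```

The curves themselves have to come from your own training runs; the helper only summarizes them.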

How And When To Use Transfer Learning?

First, select a related predictive modelling problem with abundant data, where there is some relationship between the two problems in the input data, the output data, or the concepts learned while mapping inputs to outputs. Next, develop a skilful model for this first task. The model must be better than a naive model, to ensure that some feature learning has actually been performed. The model fit on the source task can then be used as the starting point for a model on the second task of interest. This may involve using all or parts of the model, depending on the modelling technique being used. The model may also need to be adapted or refined on the input-output pairs available for the task of interest.
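These steps can be walked through end to end on a toy problem. The sketch below is a minimal NumPy illustration, assuming plain logistic regression as the "model" and synthetic, linearly separable data; the related target task is simulated by slightly perturbing the source task's true weights. All names (`fit_logistic`, `w_source`, and so on) are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def fit_logistic(X, y, w_init, lr=0.5, steps=300):
    """Batch gradient descent on the logistic (cross-entropy) loss, starting from w_init."""
    w = w_init.copy()
    for _ in range(steps):
        grad = X.T @ (sigmoid(X @ w) - y) / len(y)
        w -= lr * grad
    return w


def accuracy(X, y, w):
    return float(np.mean((X @ w > 0) == y))


rng = np.random.default_rng(42)
d = 5

# Step 1: a related source problem with abundant (synthetic) data.
w_source_true = rng.normal(size=d)
X_src = rng.normal(size=(2000, d))
y_src = (X_src @ w_source_true > 0).astype(float)

# Step 2: develop a skilful (better than naive) model on the source task.
w_source = fit_logistic(X_src, y_src, w_init=np.zeros(d))

# Step 3: a related target task (perturbed true weights) with only 20 labelled examples.
w_target_true = w_source_true + 0.3 * rng.normal(size=d)
X_tgt = rng.normal(size=(20, d))
y_tgt = (X_tgt @ w_target_true > 0).astype(float)

# Step 4: reuse the source model as the starting point and refine it on the target data.
w_transfer = fit_logistic(X_tgt, y_tgt, w_init=w_source, steps=50)
```

To judge whether the transfer actually helped, one could fit a second model on the same 20 examples starting from `np.zeros(d)` and compare both on held-out target data; with a less related source task, this comparison is also where negative transfer would show up.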

Imagine we want to learn to detect vehicles, but we do not have enough vehicle data; we only have an abundant supply of images of one particular transportation method, each labelled with its type. Assuming that detecting the method of transport and detecting vehicles are related tasks, we can use the knowledge gained in the first task to learn the new task more easily, i.e. with fewer vehicle images than we would need to train our network from scratch. Transfer learning can be vital in applications where obtaining large datasets for training neural networks is costly or nearly impossible.

An Example Of Transfer Learning

One example of transfer learning is using the ResNet50 model, pre-trained on the ImageNet dataset, to quickly classify new images.

The pre-trained ResNet50 CNN expects inputs at 224×224 resolution and classifies objects into one of 1,000 possible categories. As it is already trained with weights learned from the ImageNet dataset, all we have to do now is use it!
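A minimal sketch of this step, assuming TensorFlow 2.x with its bundled Keras API, might look like the following. The helper name `classify_top3` and the file name `warplane.jpg` are hypothetical; the ImageNet weights are downloaded on first use, so network access is needed.

```python
import numpy as np


def classify_top3(img_path):
    """Classify one image with an ImageNet-pretrained ResNet50 and return the top-3 labels."""
    # Imported inside the function so this module loads even where TensorFlow is absent.
    import tensorflow as tf
    from tensorflow.keras.applications.resnet50 import (
        ResNet50, decode_predictions, preprocess_input)

    # Load ResNet50 with weights learned on ImageNet (downloaded and cached on first call).
    model = ResNet50(weights="imagenet")

    # ResNet50 expects 224x224 RGB input.
    img = tf.keras.utils.load_img(img_path, target_size=(224, 224))
    x = tf.keras.utils.img_to_array(img)
    x = np.expand_dims(x, axis=0)   # add a batch dimension: (1, 224, 224, 3)
    x = preprocess_input(x)         # ResNet50-specific input preprocessing

    preds = model.predict(x)        # shape (1, 1000): one score per ImageNet class
    # Each entry is a (WordNet ID, class name, probability) tuple.
    return decode_predictions(preds, top=3)[0]
```

Calling `print("Predicted:", classify_top3("warplane.jpg"))` prints the top three `(WordNet ID, class name, probability)` tuples, in the same format as the output shown below.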

Output:

Predicted: [('n04552348', 'warplane', 0.77994865), ('n03773504', 'missile', 0.12333909), ('n04008634', 'projectile', 0.082195714)]

As you can see, the top prediction tells us that the image shows a warplane, with a probability of about 0.78, which appears to be correct!

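For a second image, the model returned the following top three predictions:

Predicted: [('n04366367', 'suspension_bridge', 0.45587245), ('n03933933', 'pier', 0.39334658), ('n03000134', 'chainlink_fence', 0.14159288)]

Here the top guess, a suspension bridge, comes with much lower confidence (about 0.46), and pier is a close second (about 0.39).

Conclusion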

The transfer learning approach can be applied whenever a related task with abundant data can be identified, or a pre-trained model is available, to serve as the starting point for another problem. Transfer learning can make it possible to develop skilful models that could not be developed without it. The choice of source data or source model may require brainstorming, domain expertise, or intuition developed through experience.

End Notes

In this article, we understood what Transfer Learning is and when to use this technique. We also saw an example of its implementation for classifying images. As the technique comes with its own set of pros and cons, I would like to encourage the reader to further explore this technique with hands-on implementation.

Happy Learning!