A long-standing notion about large language models (LLMs) holds that the bigger the model, the better it performs. This has led many companies to boast about their models' parameter counts. GPT-3 has 175 billion parameters, and to compete, Google came up with PaLM, scaling it up to 540 billion.

Garry Kasparov, the chess champion who famously played against IBM’s supercomputer in 1997, said, “As one Google Translate engineer put it, ‘when you go from 10,000 training examples to 10 billion training examples, it all starts to work’. Data trumps everything.” Since then, performance has generally been seen to rise with model size, but at the cost of compute.

The story has taken another turn in recent times. Last month, Sam Altman, CEO of OpenAI, the company behind ChatGPT, said, “I think we’re at the end of the era where it’s gonna be these giant models, and we’ll make them better in other ways.” He added that there has been too much focus on the parameter counts of language models, and that the focus should shift towards making models perform better, even if that means decreasing their size.

At the same time, Altman recently said that making models bigger is not a bad idea. He reiterated that OpenAI could make models a million times bigger than what it already has, which would also increase performance, but that there is no point in doing so as it might not be sustainable.

The smaller, the better

To quote Socrates: “It is not the size of a thing, but the quality that truly matters. For it is in the nature of substance, not its volume, that true value is found.” Can we say the same about LLMs?

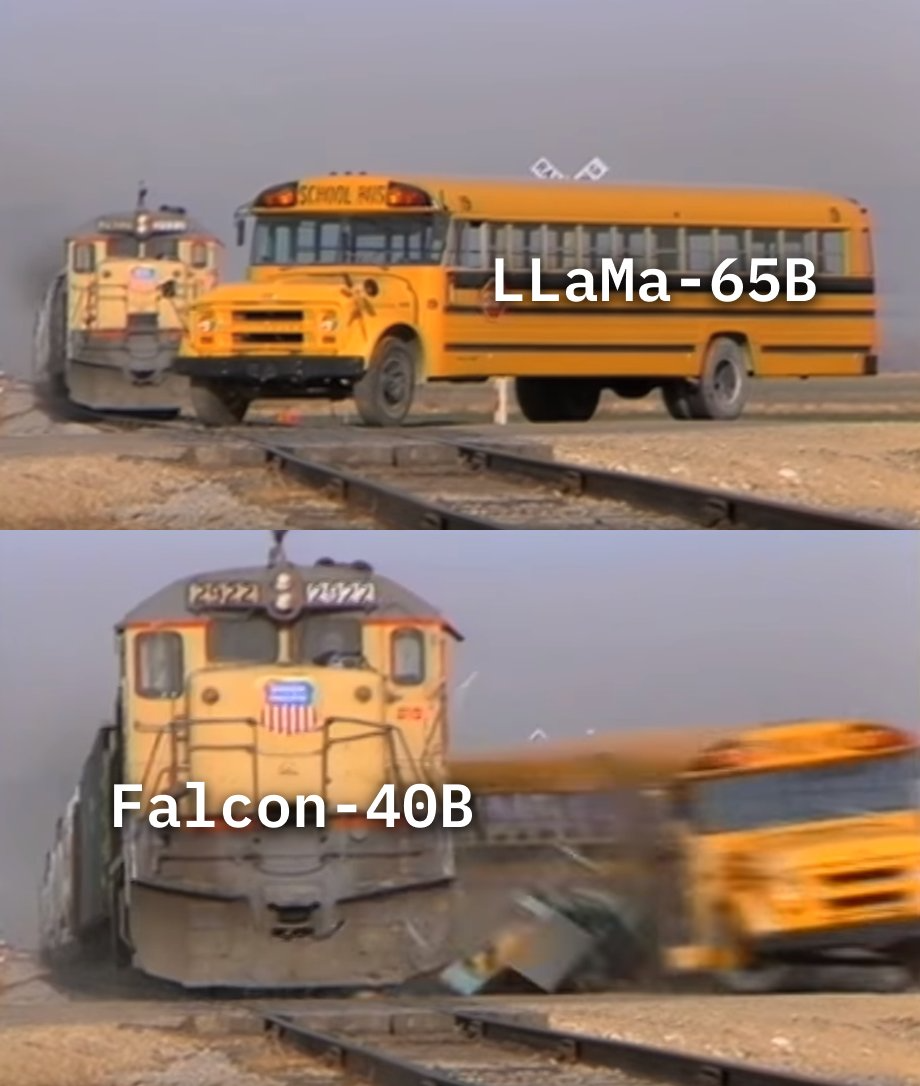

If we compare the capabilities of GPT-3 and PaLM, the difference is not huge. Given the hype, one could even say that GPT-3 is better than PaLM. Increasingly, we are also witnessing even smaller language models like LLaMa, whose largest version has only 65 billion parameters. Meta also released versions with as few as 7 billion parameters, which have been performing better than their larger counterparts in many use cases.

Moreover, to dethrone LLaMa, the Technology Innovation Institute has released Falcon, an open source alternative whose licence allows commercial use, which LLaMa's does not. The model has 40 billion parameters and is already sitting on top of the Open LLM Leaderboard on Hugging Face. The researchers say that Falcon outperformed LLaMa, StableLM, and MPT on various benchmarks.

Meta did not stop at LLaMa. LIMA, the new model from Meta AI, is built on top of LLaMa 65B and, according to its paper, produced responses that human evaluators rated as equal to or better than those of GPT-4 and Bard in a sizeable share of test prompts. Interestingly, the model was fine-tuned on just 1,000 carefully curated prompts and responses, and the paper reports that the 7 billion parameter version of LLaMa also performed well under the same recipe. As the paper's title puts it, less is more for alignment.

Another approach is also helping much smaller models outperform far bigger ones. MIT CSAIL researchers self-trained a 350 million parameter entailment model without human-generated labels. According to the paper, the model was able to beat supervised language models like GPT-3, LaMDA, and FLAN.

The same researchers have devised SimPLE (Simple Pseudo-Label Editing), a technique for self-training language models. The researchers found that self-training can improve a model’s performance by letting it learn from its own predictions. With SimPLE, they took this a step further by reviewing and modifying the pseudo-labels produced in the initial round of training.
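To make the idea of self-training concrete, here is a minimal sketch of a generic pseudo-labelling loop. It is not the SimPLE algorithm from the paper: the toy 1-D nearest-centroid classifier, the confidence margin, and the names `fit_centroids`, `predict`, and `self_train` are all assumptions made for illustration. A simple confidence threshold stands in for the step where low-quality pseudo-labels are reviewed before being used for further training.

```python
from statistics import mean


def fit_centroids(xs, ys):
    """Toy 1-D classifier: store the mean of the points in each class."""
    return {label: mean(x for x, y in zip(xs, ys) if y == label)
            for label in set(ys)}


def predict(centroids, x):
    """Return (label, confidence) for x; assumes at least two classes.

    Confidence is the margin between the two nearest centroids,
    scaled to the range [0, 1].
    """
    dists = sorted((abs(x - c), label) for label, c in centroids.items())
    (d0, label), (d1, _) = dists[0], dists[1]
    confidence = (d1 - d0) / (d1 + d0) if (d1 + d0) > 0 else 0.0
    return label, confidence


def self_train(labeled_x, labeled_y, unlabeled_x, threshold=0.5, rounds=3):
    """Grow the training set with the model's own confident predictions."""
    xs, ys = list(labeled_x), list(labeled_y)
    pool = list(unlabeled_x)
    for _ in range(rounds):
        centroids = fit_centroids(xs, ys)
        kept, remaining = [], []
        for x in pool:
            label, conf = predict(centroids, x)
            # Hypothetical stand-in for pseudo-label review:
            # uncertain predictions are held back, not trusted.
            (kept if conf >= threshold else remaining).append((x, label))
        if not kept:
            break  # nothing confident enough to add; stop early
        xs += [x for x, _ in kept]
        ys += [label for _, label in kept]
        pool = [x for x, _ in remaining]
    return fit_centroids(xs, ys), pool
```

Calling `self_train([0.0, 10.0], ["neg", "pos"], [1.0, 2.0, 8.0, 9.0, 5.0])` starts from two human-labelled points, absorbs the four unlabelled points that sit clearly on one side, and leaves the ambiguous point `5.0` unlabelled in the returned pool.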

“While the field of LLMs is undergoing rapid and dramatic changes, this research shows that it is possible to produce relatively compact language models that perform very well on benchmark understanding tasks compared to their peers of roughly the same size, or even much larger language models,” said James Glass, principal investigator and co-author of the paper.

Developers for the win

“This has potential to reshape the landscape of AI and machine learning, providing a more scalable, trustworthy, and cost-effective solution to language modelling,” said Hongyin Luo, the lead author of the entailment paper. “By proving that smaller models can perform at the same level as larger ones for language understanding, this work paves the way for more sustainable and privacy-preserving AI technologies.”

With the rapid development of generative AI technology, smaller models are increasingly able to perform the same tasks as larger ones. It all started with the open source LLaMa model, which let developers research and build better AI models on their own systems. Now it is the field and the range of options, rather than the models, that are getting bigger.

There has been a push for building up the open source developer ecosystem in the AI landscape. Meta pushed LLaMa, Microsoft calls everyone a developer now, and Google thinks open source is the real winner in the AI race. With these open source smaller models that do not require heavy computation resources, the generative AI landscape will get even more democratised.

This is what the goal looks like: everyone should be able to build their own ChatGPT and run it on their devices. For that, we need smaller, open source, and more efficient models.
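A rough back-of-the-envelope calculation shows why parameter count matters for running a model on a personal device. The sketch below estimates only the memory needed to hold the weights, using the parameter counts quoted in this article; the helper `weight_memory_gb` is a hypothetical name, and the estimate deliberately ignores activations, caches, and runtime overhead, so real requirements would be higher.

```python
def weight_memory_gb(num_parameters, bytes_per_parameter):
    """Approximate memory for model weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * bytes_per_parameter / 1e9


# Parameter counts as quoted in the article.
MODELS = {
    "LLaMa 7B": 7_000_000_000,
    "Falcon 40B": 40_000_000_000,
    "LLaMa 65B": 65_000_000_000,
    "GPT-3": 175_000_000_000,
    "PaLM": 540_000_000_000,
}

# Common storage widths: 16-bit floats, 8-bit and 4-bit quantised weights.
PRECISIONS = {"16-bit": 2, "8-bit": 1, "4-bit": 0.5}

if __name__ == "__main__":
    for name, params in MODELS.items():
        sizes = ", ".join(
            f"{label}: {weight_memory_gb(params, width):.1f} GB"
            for label, width in PRECISIONS.items()
        )
        print(f"{name:<12} {sizes}")
```

Under these assumptions, a 7 billion parameter model needs about 14 GB for its weights at 16-bit precision and about 3.5 GB at 4-bit, while a 175 billion parameter model needs about 350 GB at 16-bit, far beyond the memory of a typical laptop or phone.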

With such small models outperforming larger ones, it may soon be possible to run GPT-like models on a single device without an internet connection. The future could look just as Yann LeCun, Meta AI chief, envisioned it: multiple smaller models working together for better performance, which he calls the world model. This is what Altman predicts and wishes for as well. We are headed in the right direction.