Kubeflow Pipelines is a platform for building portable, scalable machine learning workflows. It is a component of the larger Kubeflow ecosystem, which aims to reduce the complexity and time required to train and deploy machine learning models at scale. In this article, we cover Kubeflow both conceptually and practically by building a pipeline from a Jupyter Notebook. The major points discussed in this article are listed below.

Table of contents

- What is Kubeflow?

- What are the use cases of Kubeflow?

- Setting up the environment

- Building ML pipeline

Let’s first understand the ecosystem of Kubeflow.

What is Kubeflow?

Kubeflow is an end-to-end machine learning orchestration toolset built on Kubernetes for deploying, scaling, and managing complex ML systems. Features such as hosted JupyterHub servers, which let several people contribute to a project at the same time, have proven especially useful. Kubeflow also emphasizes detailed project management, along with in-depth monitoring and analysis of each project.

Data scientists and engineers can create a fully functional pipeline made of separate steps. In Kubeflow, these steps are loosely coupled components of an ML pipeline, so a pipeline can be reused and modified for subsequent workloads without rewriting the whole workflow.
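To make the idea of loosely coupled steps concrete, here is a minimal, framework-free sketch in plain Python. It does not use the Kubeflow Pipelines SDK: the step names (`load_data`, `preprocess`, `train`, `evaluate`) and the `run_pipeline` helper are hypothetical and exist only for illustration. Each step is an ordinary function, and the dependency map alone decides the execution order.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical steps: each one reads from and writes to a shared context dict.
def load_data(ctx):
    ctx["rows"] = [1, 2, 3, 4]

def preprocess(ctx):
    ctx["features"] = [r * 2 for r in ctx["rows"]]

def train(ctx):
    # Stand-in for model fitting: the "model" is just the mean of the features.
    ctx["model"] = sum(ctx["features"]) / len(ctx["features"])

def evaluate(ctx):
    ctx["score"] = ctx["model"] > 0

STEPS = {
    "load_data": load_data,
    "preprocess": preprocess,
    "train": train,
    "evaluate": evaluate,
}

# Maps each step to the set of steps it depends on.
DEPENDENCIES = {
    "load_data": set(),
    "preprocess": {"load_data"},
    "train": {"preprocess"},
    "evaluate": {"train"},
}

def run_pipeline(steps, dependencies):
    """Run every step once, in an order that respects the dependency map."""
    ctx = {}
    order = list(TopologicalSorter(dependencies).static_order())
    for name in order:
        steps[name](ctx)
    return order, ctx

order, ctx = run_pipeline(STEPS, DEPENDENCIES)
print(order)
```

Because a step is only referenced by name in the dependency map, swapping `train` for a different function or inserting a new step between `preprocess` and `train` does not require touching the other steps. That is the kind of reuse the paragraph above describes.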

Kubeflow is a free and open-source project that makes it easier and more coordinated to run machine learning workflows on Kubernetes, an open-source container orchestration system for automating software deployment, scaling, and management.

It is a Kubernetes-based, cloud-native framework for running machine learning in containerized systems. Kubeflow integrates with and extends Kubernetes, and it was built to run anywhere Kubernetes does: GCP, AWS, Azure, and so on.

What are the use cases of Kubeflow?

Trained models are often packaged into a single file and stored on a server or laptop. The file is then copied to the machine hosting the application, where the model is loaded into a server process that accepts network requests for model inference. When several applications require inference output from a single model, the process gets more complicated, especially when updates and rollbacks are required.

Kubeflow allows us to upgrade and roll back numerous applications or servers at the same time. You change the model in one place and, once the update completes, all client applications receive the new version quickly.
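The idea of changing a model in one place can be sketched with a small in-memory registry. This is an illustration only, not a Kubeflow or KFServing API: the `ModelRegistry` class, its methods, and the artifact names are all hypothetical. Clients always ask the registry for the active version, so an upgrade or a rollback is a single change that every client sees.

```python
class ModelRegistry:
    """Hypothetical in-memory registry; clients always resolve the active version."""

    def __init__(self):
        self._versions = []  # ordered history of deployed model artifacts
        self._active = -1    # index of the version currently being served

    def deploy(self, artifact):
        # Discard versions that were rolled back past, then append the new one.
        self._versions = self._versions[: self._active + 1]
        self._versions.append(artifact)
        self._active = len(self._versions) - 1

    def rollback(self):
        if self._active <= 0:
            raise RuntimeError("no earlier version to roll back to")
        self._active -= 1

    def active(self):
        if self._active < 0:
            raise RuntimeError("no model deployed")
        return self._versions[self._active]


registry = ModelRegistry()
registry.deploy("model-v1")
registry.deploy("model-v2")
served_after_upgrade = registry.active()   # every client now sees the new version

registry.rollback()
served_after_rollback = registry.active()  # every client is back on the old version

print(served_after_upgrade, served_after_rollback)
```

In a real deployment the registry would be replaced by the serving layer, but the design point is the same: clients hold a reference to "the current model", never to a copied file.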

It’s common for machine learning environments and resources to be shared. Simple and effective sharing requires a multi-tenant machine learning environment, and Kubeflow Pipelines can be used to build one.

You should try to provide each collaborator with their own space. Kubernetes allows you to schedule and manage containers, as well as keep track of pending and running jobs for each collaborator.

To learn more about Kubeflow, see these articles: one discusses its features and use cases, and the other compares it with MLflow.

In the following section, we’ll discuss how we can set up environments to build an ML pipeline, and later on, we’ll build an ML pipeline from the Jupyter notebook.

Setting up the environment

Kubeflow can be set up in many ways. Here, environment setup means creating an instance of the Kubeflow dashboard, the user interface where we’ll actually work. The deployment can be carried out locally or over cloud services like Google Cloud Platform (GCP) or Amazon Web Services (AWS).

You can follow the official installation guide for local deployment, though a local deployment requires a fairly powerful machine with plenty of storage space and RAM. Cloud-based deployment with MiniKF is simpler and more straightforward. MiniKF is a lighter version of Kubeflow, offered as a turnkey solution for model development, testing, and deployment.

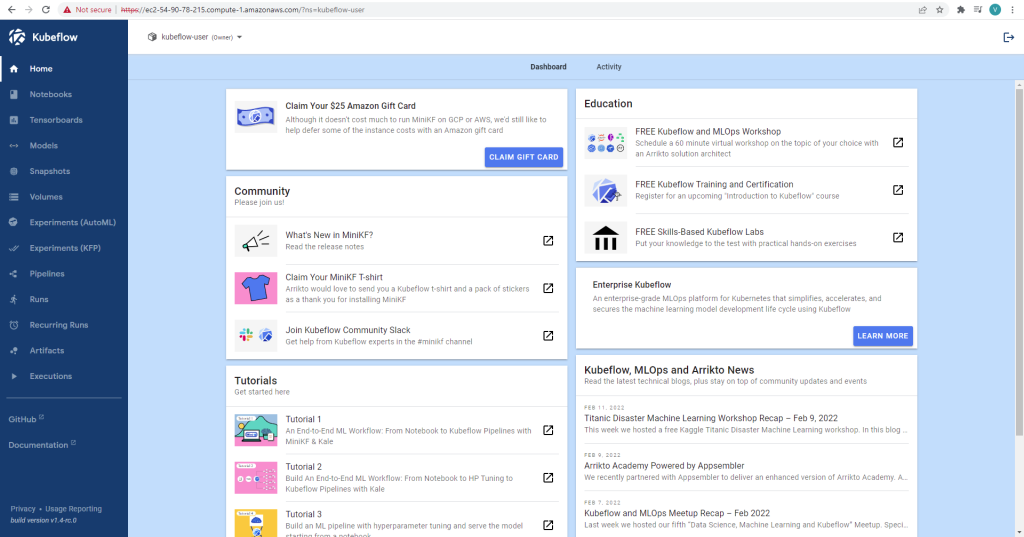

We can set this up on either GCP or AWS by following the official installation guide for the chosen platform. Once you have completed all the installation steps and logged in to the dashboard, the window should look like this.

The left-hand panel lists the functionality involved in building pipelines. Its components are described below.

- Home: The central location where you can find the most recent resources, ongoing experiments, and relevant documentation.

- Notebooks: To manage notebook servers.

- TensorBoards: To manage TensorBoard servers.

- Models: To handle KFServing models that have been deployed.

- Volumes: To manage the Volumes of the cluster.

- Experiments (AutoML): To keep track of Katib experiments.

- Experiments (KFP): To manage Kubeflow Pipelines (KFP) experiments.

- Pipelines: To keep track of the KFP pipelines.

- Runs: To keep track of KFP runs.

- Recurring Runs: To manage KFP recurring runs.

- Artifacts: To track ML Metadata (MLMD) artifacts.

- Executions: To track component executions in MLMD.

Building ML pipeline

Since we do our work in notebooks, we can plug a notebook directly into Kubeflow through JupyterLab and build a pipeline from it. We’ll go through the procedure step by step.

Step 1: Launch notebook server

Once the dashboard is set up, open the Notebooks tab (the second option in the panel) and create a new notebook server, giving it any name you like, as shown in the image below.

After the server is created, connect to it, and a JupyterLab instance will open in a new tab.

Step 2: Connecting notebook

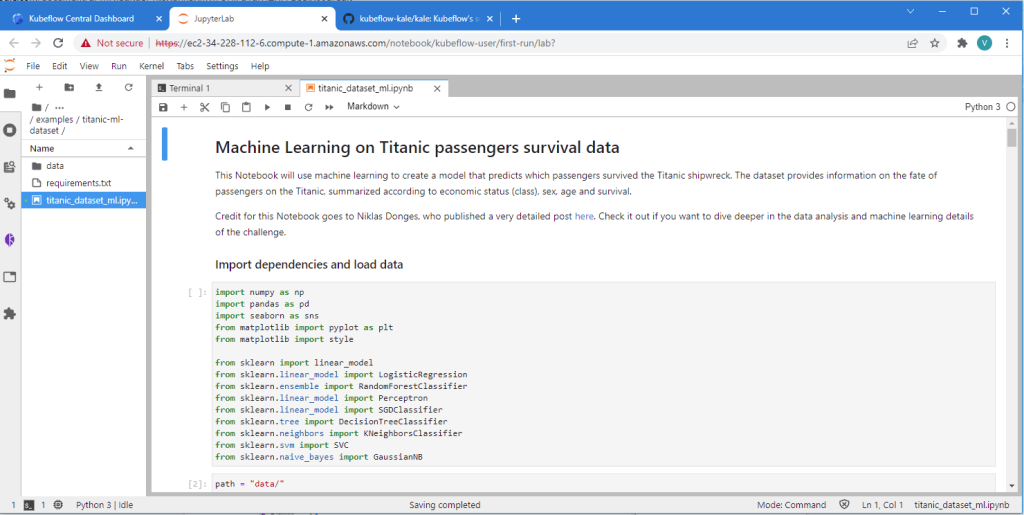

Here you can upload your own notebook, or you can clone one from its repository using the terminal. I’m using a notebook from the Kale repository. To get it, open the terminal, run git clone https://github.com/kubeflow-kale/kale, and then open and explore the Titanic ML dataset notebook from the examples folder.

Run the notebook cell by cell to check for dependency issues. If a library is missing, install it in a cell at the top of the notebook and rerun all cells.
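Rather than waiting for an import to fail halfway through the notebook, the dependency check can be done up front. The following is a minimal sketch using only the standard library. The `find_missing` helper is hypothetical, and the `REQUIRED` list is an assumption about what a typical tabular ML notebook imports, not a list taken from the Titanic notebook itself.

```python
import importlib.util

def find_missing(modules):
    """Return the top-level module names that cannot be imported here."""
    return [name for name in modules if importlib.util.find_spec(name) is None]

# Assumed list: replace it with the imports your notebook actually uses.
REQUIRED = ["numpy", "pandas", "sklearn", "seaborn", "matplotlib"]

missing = find_missing(REQUIRED)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are importable.")
```

Note that the import name and the package name on PyPI can differ. For example, the module `sklearn` is installed with the package `scikit-learn`, so check the package name before installing anything the script reports as missing.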

In this notebook, machine learning is used to build a model that predicts which Titanic passengers survived the shipwreck. The dataset records each passenger’s socioeconomic status (class), gender, age, and whether they survived.

Step 3: Convert notebook to Kubeflow pipeline

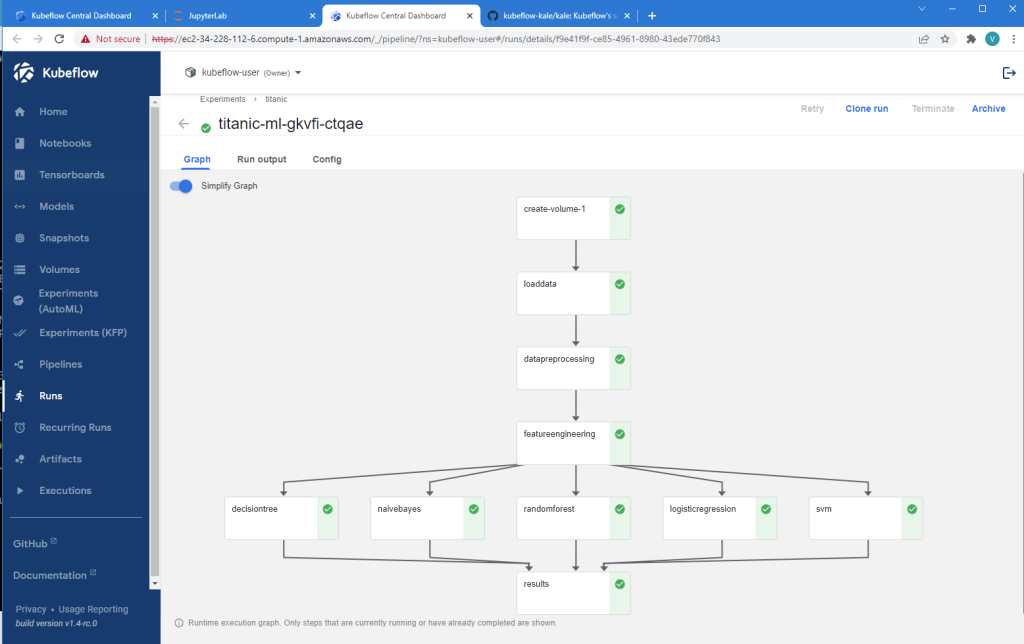

After executing and inspecting the notebook, click the Kubeflow button in the left pane to start building the pipeline. Notice how several cells can belong to a single pipeline step, and how a pipeline step can depend on earlier steps. These dependencies can be changed to match the desired flow.
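The way cells are grouped into steps can be sketched in a few lines of Python. The sketch assumes Kale’s cell-tag convention, in which a cell tagged `block:<name>` starts a step, a `prev:<name>` tag declares a dependency, and untagged code cells join the preceding step. Treat that convention as an assumption to verify against the Kale documentation. The `steps_from_notebook` helper and the toy notebook are hypothetical and are not part of Kale.

```python
def steps_from_notebook(notebook):
    """Group code cells into steps using block:/prev: tags (assumed convention)."""
    steps = {}      # step name -> {"cells": [...], "depends_on": [...]}
    current = None  # name of the step that untagged cells are merged into
    for cell in notebook.get("cells", []):
        if cell.get("cell_type") != "code":
            continue
        tags = cell.get("metadata", {}).get("tags", [])
        block = next((t.split(":", 1)[1] for t in tags if t.startswith("block:")), None)
        if block is not None:
            current = block
            steps.setdefault(current, {"cells": [], "depends_on": []})
        if current is None:
            continue  # code before the first tagged step is ignored in this sketch
        steps[current]["cells"].append("".join(cell.get("source", [])))
        for tag in tags:
            if tag.startswith("prev:"):
                steps[current]["depends_on"].append(tag.split(":", 1)[1])
    return steps


# Toy notebook in the nbformat JSON layout; the cell contents are placeholders.
toy_notebook = {
    "cells": [
        {"cell_type": "code", "metadata": {"tags": ["block:load_data"]},
         "source": ["df = load()\n"]},
        {"cell_type": "code", "metadata": {}, "source": ["df = df.dropna()\n"]},
        {"cell_type": "markdown", "metadata": {}, "source": ["# Training"]},
        {"cell_type": "code", "metadata": {"tags": ["block:train", "prev:load_data"]},
         "source": ["model = fit(df)\n"]},
    ]
}

steps = steps_from_notebook(toy_notebook)
for name, step in steps.items():
    print(name, len(step["cells"]), step["depends_on"])
```

To inspect a real notebook, load it with `json.load` and pass the resulting dictionary to `steps_from_notebook`. Changing a `prev:` tag in the notebook is then the textual equivalent of redrawing a dependency in the Kale panel.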

Now select Compile and Run from the drop-down menu. Watch the progress of the snapshot and of the pipeline run. Then click the link to go to the Kubeflow Pipelines UI and view the run. It takes a few minutes to build the entire pipeline, so be patient.

The process above is shown in the image below.

Now that the pipeline has been built and is ready to go, it’s time to look at the results. The Kubeflow Pipelines dashboard can be accessed by clicking the run link in the notebook or by clicking the Runs tab inside the dashboard.

The pipeline components that have been defined are displayed as a graph. As each component finishes, the graph is updated to show its status. You can explore each component by clicking on it, and its details appear in the adjacent panel.

That is all for now. We have successfully created our first pipeline.

Final words

Kubeflow and Kubeflow Pipelines have become a widely used MLOps platform in which practitioners can take a notebook directly to a production pipeline. In this post, we first looked at what Kubeflow is conceptually and then worked through the practical side, from environment creation to pipeline deployment.