Machine learning may soon give songwriters and musicians a tough time, because algorithms are getting noticeably better at generating music. Composing music with artificial intelligence isn't a new development: startups and researchers have been attempting it for years. Remember the Chicago-based audio startup Brain.fm? It promised to use AI-driven music to put listeners' brains into a particular mental state. We also saw I AM AI, a collaborative album between artist Taryn Southern and the AI software Amper, which was billed as the first album to be entirely composed by AI.

These are just two of the many developments flooding the space. Here we explore some of the popular use cases where machine learning (ML) and deep learning tools have been used to create music.

Stanford Researchers Are Implementing Deep Learning For Music

Researchers from Stanford University built a generative model based on deep neural networks, aiming to create music that has both harmony and melody and that can be compared with music composed by humans. The researchers note that most previous work on music generation focused on creating a single melody.

They focused on end-to-end learning, generating music with deep neural networks alone. For this, they used a two-layer Long Short-Term Memory (LSTM) recurrent neural network (RNN) to build a character-level model that predicts the next note in a sequence. The researchers explain the process as follows:

“We generate music by feeding a short seed sequence into our trained model, generating new tokens from the output distribution from our softmax, and then feeding the new tokens back into our model,” they said. They combined two sampling schemes:

- Choose the token with the maximum predicted probability

- Sample a token from the entire softmax distribution
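The generation loop and the two sampling schemes described above can be sketched in a few lines of Python. This is a minimal illustration, not the researchers' TensorFlow code: `toy_model` is a hypothetical stand-in for the trained LSTM, and the `greedy_prob` rule for mixing the two schemes is an assumption, since the article does not say exactly how they were combined.

```python
import math
import random

def softmax(logits):
    """Turn raw model scores into a probability distribution (numerically stable)."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]

def pick_greedy(probs):
    """Scheme 1: choose the token with the maximum predicted probability."""
    return max(range(len(probs)), key=lambda i: probs[i])

def pick_sample(probs, rng):
    """Scheme 2: draw a token from the entire softmax distribution."""
    return rng.choices(range(len(probs)), weights=probs, k=1)[0]

def generate(model, seed, length, greedy_prob=0.5, rng=None):
    """Feed a seed, pick new tokens, and feed each new token back into the model.

    greedy_prob is a hypothetical mixing rule: at each step, greedy decoding is
    used with this probability and sampling is used otherwise.
    """
    rng = rng or random.Random()
    tokens = list(seed)
    for _ in range(length):
        probs = softmax(model(tokens))
        if rng.random() < greedy_prob:
            nxt = pick_greedy(probs)
        else:
            nxt = pick_sample(probs, rng)
        tokens.append(nxt)  # the new token becomes part of the next input
    return tokens

NOTES = ["C", "D", "E", "G"]

def toy_model(tokens):
    """Hypothetical stand-in for a trained LSTM: strongly favours the next note in NOTES."""
    last = tokens[-1]
    return [3.0 if i == (last + 1) % len(NOTES) else 0.0 for i in range(len(NOTES))]

# greedy_prob=1.0 makes every step greedy, so the output is deterministic.
out = generate(toy_model, seed=[0], length=8, greedy_prob=1.0, rng=random.Random(0))
print([NOTES[i] for i in out])
```

With pure greedy decoding the toy model simply cycles through the scale; lowering `greedy_prob` lets sampling introduce variation, which is the usual motivation for mixing the two schemes.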

“We ran our experiments on AWS g2.2xlarge instances. Our deep learning implementation was done in TensorFlow,” added the researchers.
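A character-level model like the one described above is trained on a simple task: given a window of characters, predict the next one. The sketch below shows only that data-preparation step in plain Python; the text encoding of the melody is hypothetical (not the researchers' actual representation), and the two-layer LSTM itself, which they built in TensorFlow, is left out.

```python
def build_vocab(text):
    """Map each distinct character to an integer id (sorted for reproducibility)."""
    chars = sorted(set(text))
    return {c: i for i, c in enumerate(chars)}

def make_training_pairs(text, vocab, window):
    """Slide a fixed-size window over the encoded text.

    Each example is (ids of `window` characters, id of the character that follows),
    i.e. the next-character prediction task a character-level model learns.
    """
    ids = [vocab[c] for c in text]
    return [(ids[i:i + window], ids[i + window]) for i in range(len(ids) - window)]

# Hypothetical text encoding of a short melody: note letters separated by spaces.
melody = "C D E C C D E C"
vocab = build_vocab(melody)
pairs = make_training_pairs(melody, vocab, window=4)
print(len(pairs), pairs[0])  # 11 ([1, 0, 2, 0], 3)
```

At generation time the same vocabulary maps the model's predicted ids back to characters, which are then decoded into notes.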

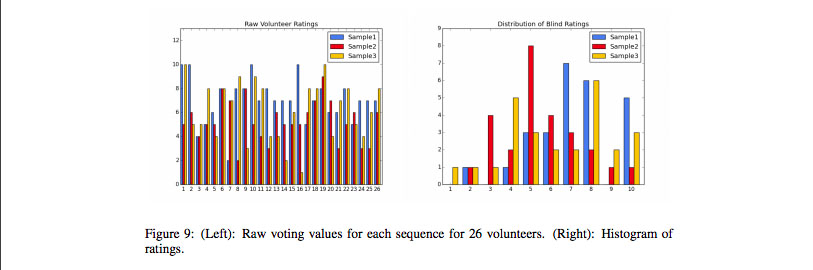

Once the music was generated, they put considerable effort into evaluating how good it was. They devised a blind experiment and collected the opinions of 26 volunteers on three music samples:

- 10-second clip of the “Bach Midi” model

- 16-second clip of the “RNN-NADE sequence”

- 11-second clip of the “Piano roll” model trained on the “Muse-All” dataset

The volunteers were asked to listen to the three samples back-to-back and rate each on a scale from 1 to 10, where 1 meant “completely random noise”, 5 meant “musically plausible” and 10 meant “composed by a novice composer”.

The results suggest that their models did produce music at least comparable in aesthetic quality to the RNN-NADE sequence. As the accompanying figure shows, only three of the 26 volunteers said they liked the RNN-NADE sequence better, and an additional three said they liked it just as much as one of the Stanford sequences.
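To put the survey counts in proportion, here is the arithmetic as a short Python snippet. The "others" count is derived by subtraction and assumes each volunteer falls into exactly one of the three groups; the article does not report it directly.

```python
TOTAL_VOLUNTEERS = 26
PREFERRED_RNN_NADE = 3  # liked the RNN-NADE sequence better
RATED_EQUAL = 3         # liked it just as much as one of the Stanford sequences

def percent(count, total=TOTAL_VOLUNTEERS):
    """Share of volunteers as a percentage, rounded to one decimal place."""
    return round(100 * count / total, 1)

# Derived, not reported: volunteers who neither preferred RNN-NADE nor rated it equal.
others = TOTAL_VOLUNTEERS - PREFERRED_RNN_NADE - RATED_EQUAL

print(percent(PREFERRED_RNN_NADE))                # 11.5
print(percent(PREFERRED_RNN_NADE + RATED_EQUAL))  # 23.1
print(others, percent(others))                    # 20 76.9
```

In other words, roughly one volunteer in nine preferred the RNN-NADE baseline outright.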

“We were able to show that a multi-layer LSTM, character-level language model applied to two separate data representations is capable of generating music that is at least comparable to sophisticated time series probability density techniques prevalent in the literature. We showed that our models were able to learn meaningful musical structure,” they noted.

How Good Is The Music Created By Google Using ML

Google's open-source deep learning music project, Magenta, also uses ML to generate compelling music. It involves developing deep learning and reinforcement learning algorithms for generating songs, images and other material. It was started by researchers and engineers from the Google Brain team, but many others have contributed significantly to the project. It uses TensorFlow, and its models are released as open source on GitHub.

It currently implements a regular RNN and two LSTMs to create music. It is relatively easy to set up, and the team is constantly adding new functionality to make it more user-friendly.

The easiest way to start creating music with Magenta is probably through the CodePens. Users can also build their own interactive experiences with the models via magenta.js, and the code for taking this further is available in the open-source Python repository. One example of what these tools can produce is music created with MusicVAE, a machine learning model for blending and exploring musical scores.
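MusicVAE works in a learned latent space: a melody is encoded as a vector, and decoding points that lie between two such vectors yields music that blends both. The sketch below illustrates only the interpolation step in plain Python. The latent codes are hypothetical, the encoder and decoder are omitted, and simple linear interpolation is used as a stand-in; this is not the magenta.js or Magenta Python API.

```python
def lerp(z_a, z_b, t):
    """Linear interpolation between two latent vectors: t=0 gives z_a, t=1 gives z_b."""
    if len(z_a) != len(z_b):
        raise ValueError("latent vectors must have the same length")
    return [(1 - t) * a + t * b for a, b in zip(z_a, z_b)]

def interpolation_path(z_a, z_b, steps):
    """Return `steps` evenly spaced points from z_a to z_b, endpoints included."""
    if steps < 2:
        raise ValueError("need at least 2 steps")
    return [lerp(z_a, z_b, i / (steps - 1)) for i in range(steps)]

# Hypothetical 3-dimensional latent codes for two melodies (real models use many more dimensions).
z_melody_a = [0.0, 1.0, -1.0]
z_melody_b = [1.0, 0.0, 1.0]

for z in interpolation_path(z_melody_a, z_melody_b, steps=3):
    print(z)  # each point would be passed to the decoder to produce a blended melody
```

The middle point, `[0.5, 0.5, 0.0]`, is the kind of in-between code that would decode to a blend of the two melodies.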

Analysing Some Of The Other Deep Learning Tools For Creating Music

There are many deep learning tools available for creating music, ranging from low to high computational complexity. Below are some of the popular ones:

DeepJazz: The result of a hackathon project by Ji-Sung Kim, this open-source tool uses Keras and Theano to generate jazz music. It has a two-layer LSTM that learns from a given MIDI file, and it uses deep learning to produce compelling work that can come across as deeply human.

BachBot: This AI for classical music is a project by Feynman Liang of Cambridge University. It uses an LSTM to generate harmonised chorales. Its website presents it as a challenge, offering short music samples that are either extracted from Bach's own work or generated by BachBot and asking visitors to tell them apart. It is open source.

FlowMachines: FlowMachines aims to research and develop AI systems that can generate music autonomously or in collaboration with human artists. According to its website, the music can draw on individual composers, such as Bach or The Beatles, or on a set of different artists who are using the system. It is not open source.

WaveNet: A generative model for raw audio developed by researchers at Google's DeepMind. It is based on convolutional neural networks (CNNs) and promises to improve text-to-speech applications by generating more natural-sounding speech. It is not open source, but a few samples have been published.
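WaveNet's convolutions are causal and dilated: each output sample depends only on current and past inputs, and the gap between the inputs a layer looks at grows from layer to layer, so a few layers cover a long stretch of audio. Below is a plain-Python sketch of that single operation, not DeepMind's implementation (which also adds gated activations plus residual and skip connections). The weights here are illustrative.

```python
def causal_dilated_conv(x, weights, dilation):
    """1-D causal convolution: out[t] combines x[t], x[t - d], x[t - 2d], ...

    weights[k] multiplies x[t - k * dilation]; samples before the start count as 0.
    """
    out = []
    for t in range(len(x)):
        acc = 0.0
        for k, w in enumerate(weights):
            idx = t - k * dilation
            if idx >= 0:
                acc += w * x[idx]
        out.append(acc)
    return out

def receptive_field(kernel_size, dilations):
    """Number of input samples (including the current one) that one output can see."""
    return 1 + sum((kernel_size - 1) * d for d in dilations)

# Push a single impulse through three layers with dilations 1, 2 and 4 (kernel size 2).
impulse = [1.0] + [0.0] * 7
h = impulse
for d in (1, 2, 4):
    h = causal_dilated_conv(h, weights=[1.0, 1.0], dilation=d)
print(h)                               # the impulse now reaches all 8 positions
print(receptive_field(2, [1, 2, 4]))   # 8
```

Doubling the dilation at each layer makes the receptive field grow exponentially with depth: ten layers with dilations 1, 2, 4, ..., 512 and kernel size 2 already see 1,024 samples.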

Concluding Note

Based on these references, music generated by ML algorithms comes quite close to that of human composers. As the Stanford samples and the BachBot challenge show, it can be hard to tell melodies created by machines from those created by humans. However, these tools still face certain challenges. For example, most ML algorithms, including Magenta's, generate only a single stream of notes. Nonetheless, researchers are working extensively on minimising human input, and a perfect ML composer isn't a distant reality.