
Text-to-image AI generation tools have entered their Wild West phase. The trend that OpenAI’s DALL.E 2 started with great caution has quickly turned into a world where anything goes.

Last week, Stability.ai, a startup based in London and Los Altos, released its open-source text-to-image generator, Stable Diffusion, to the public. The model is comparable in quality to DALL.E 2 and Midjourney, and the implications of Stability.ai’s move are huge: anyone can now take the code and use it to build their own free text-to-image apps.

Moreover, unlike its predecessors, Stable Diffusion has next to no restrictions preventing users from generating images of inappropriate content or prominent personalities. DALL.E 2’s beta version, which will now be sold to a million people on its growing waitlist, has keyword-level prompt filters as well as tweaks in the model that prevent users from generating likenesses of real people.

Testing #stablediffusion notebook by @deforum_art. I used the init image. It’s amazing at times how some of the images in the video have been turned into cartoon #AIart #deforum pic.twitter.com/bkwtmRVjfX

Remi (@remi_molettee), August 24, 2022

Level the playing field

At the tool’s launch, Emad Mostaque, the founder of Stability.ai, said that he wanted to “democratise image generation in a way that would create an open ecosystem that will truly explore the boundaries of latent space”. Mostaque, an Oxford alumnus, also co-founded Symmitree, a project that aims to reduce the cost of smartphones and internet access for underprivileged sections of society.

In a recent interview with The Washington Post, Mostaque spoke with pride about his startup’s independence. Criticising what he sees as the guardedness of Big Tech, he said, “I believe control of these models should not be determined by a bunch of self-appointed people in Palo Alto. I believe they should be open.”

OpenAI’s DALL.E 2 and Google’s Imagen have been closed to the public since their launch, apart from a select group of artists. Imagen remains closed, but OpenAI has sped up access to its tool for people on the waitlist, albeit reluctantly. Although experts have recommended open-sourcing these tools to improve them, Big Tech has been wary of the responsibility and potential censure that would come with their misuse. It was natural that a smaller player would bite the bullet, and Stability.ai did.

Dangers of no filters, free for all

Since the announcement, images generated by Stable Diffusion have started appearing everywhere. A number of art-generating services, such as Artbreeder and Pixelz.ai, were quick to adopt Stable Diffusion. (Just last week, Eros Investments, an Indian portfolio of media ventures, announced a partnership with Stability.ai.)

Alongside artistically brilliant and photorealistic images came many problematic ones. The model was leaked on 4chan, the infamous discussion forum popular mainly among young men, whose users were quick to churn out pornographic content and nude images of celebrities. The unholy combination of open-source code and no restrictions has suddenly brought convincing deepfakes much closer than before.

Fabricated images of the Russia-Ukraine war, a Chinese invasion of Taiwan, British PM Boris Johnson dancing with a woman and Indian PM Modi holding a knife can all be generated with only a modest level of skill. Stable Diffusion also surpasses alternative open-source tools such as Craiyon (previously known as DALL.E Mini, whose demo was hosted on Hugging Face) and Disco Diffusion in quality by a mile. Midjourney, too, lets a number of problematic prompts slip through the cracks.

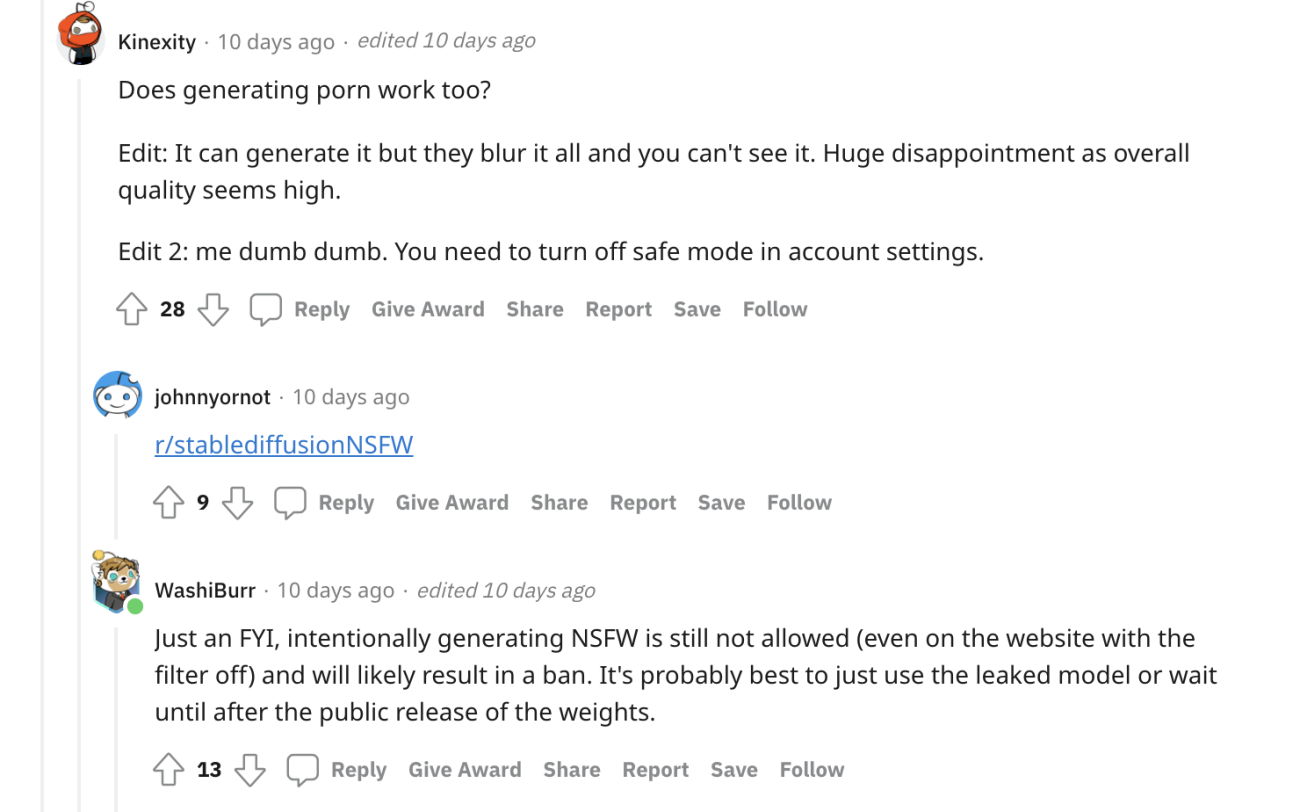

The tool isn’t entirely without safeguards. The Stable Diffusion software package includes an adjustable AI tool called Safety Classifier, which detects and blocks offensive images. However, it can easily be disabled, which renders it useless. People have also built Google Colab notebooks that generate images from prompts while disabling the watermarks and NSFW filters that have since been added to the Stable Diffusion GitHub release.

Copyright and legal issues

Aside from the misuse of these images to produce fake news and create hysteria, copyright is another point of contention. There is currently little or no legal consensus on copyright for AI-generated art. In February this year, the US Copyright Office ruled that “human authorship is a prerequisite to copyright protection” and refused to grant copyright protection to an image made by AI. The ruling is now being challenged in federal court. The question is a tricky one, because AI-generated art is largely derived from existing art made by humans. Artists have already found projects built on Stable Diffusion that produce rip-offs of real artists’ work.

In another instance, Carlos Paboudjiain, an employee at the creative commerce agency VMLY&R, used DALL.E 2 and Midjourney to produce an entire collection of designer wear themed on imagery from film director Wes Anderson’s work.

The benefits of Stable Diffusion weigh just as heavily as its drawbacks. The Pandora’s box opened by the tool’s release calls for nuanced, detailed consideration of the ethical issues surrounding it. A few days ago, Mostaque addressed the ethics question in a tweet: “To be honest, I find most of the AI ethics debate to be justifications of centralised control, paternalistic silliness that doesn’t trust people or society. Stable Diffusion represents the internet as is, but we are going to countries and societies globally to change this.”

For better or worse, the imagery produced by Stable Diffusion is a true reflection of the beautiful mess that is the internet.