Have you ever thought about how good you are at opening the lid of a pickle jar? There is no denying that this trivial task doesn’t top the list of things humans can do with their opposable thumbs. However, it is still a complicated task: the degrees of freedom of each finger come into play, applying forces in multiple directions while keeping the object moving in the desired direction.

The complexity of human actions is often realised only when attempts are made to replicate them in machines. Degrees of freedom (DoF) is a measure of the number of independent movements an object can make. The human hand is often said to have 27 degrees of freedom.

To match human dexterity and make robotic hands move better, a group of researchers at Google Brain trained a robotic hand with 24 degrees of freedom using deep dynamics models. The model learns to improve on tasks within a few hours, which, for a physical robotic manipulator, is remarkably fast.

Moving two free-floating Baoding balls in the palm using just four hours of real-world data (via BAIR)

Although dexterous multi-fingered hands enable a flexible and wide range of manipulation skills, many of the more complex behaviors are notoriously difficult to control.

The researchers say that accomplishing these tasks requires finely balancing contact forces, repeatedly breaking and re-establishing contacts, and maintaining control of unactuated objects. These challenges are the focus of the experiments in their paper, Deep Dynamics Models for Learning Dexterous Manipulation.

Advanced Robotics With Model-Based RL

Present-day robotic manipulators have benefited greatly from the success of deep learning algorithms. Deep reinforcement learning is an active and fast-improving research area, and model-free reinforcement learning in particular has shown promising results. However, the Google Brain researchers argue that model-free methods struggle with complex tasks such as manipulating a pencil in the hand. Model-based reinforcement learning methods, by contrast, are claimed to be more data-efficient.

The new method, PDDM (online Planning with Deep Dynamics Models), is designed to handle this higher level of complexity.
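To make the "online planning" half of PDDM more concrete, here is a minimal NumPy sketch of a model-predictive controller. It samples action sequences, rolls them out through a dynamics model and averages them, weighted by predicted reward. It follows the general idea attributed to PDDM's planner (temporally filtered sampling noise plus reward-weighted refinement), but the function names, the parameters `gamma`, `beta` and `sigma`, and the toy 1-D system are illustrative assumptions, not the authors' code. A known toy model stands in for the learned neural network.

```python
import numpy as np

def plan_action(model, reward_fn, state, mean_seq, n_samples=500,
                gamma=10.0, beta=0.7, sigma=0.5, rng=None):
    """One MPC step (hypothetical interface, for illustration only).

    model(states, actions) -> next_states and reward_fn(states, actions) -> rewards
    both work on batches. Returns (first action to execute, warm start for next step).
    """
    rng = np.random.default_rng() if rng is None else rng
    horizon, act_dim = mean_seq.shape
    # Temporally filtered noise: n_t = beta * u_t + (1 - beta) * n_{t-1}
    u = rng.normal(0.0, sigma, size=(n_samples, horizon, act_dim))
    noise = np.zeros_like(u)
    noise[:, 0] = beta * u[:, 0]
    for t in range(1, horizon):
        noise[:, t] = beta * u[:, t] + (1.0 - beta) * noise[:, t - 1]
    actions = mean_seq[None] + noise  # shape (n_samples, horizon, act_dim)

    # Predict the return of every candidate sequence with the dynamics model
    states = np.repeat(state[None], n_samples, axis=0)
    returns = np.zeros(n_samples)
    for t in range(horizon):
        states = model(states, actions[:, t])
        returns += reward_fn(states, actions[:, t])

    # Reward-weighted average: weights proportional to exp(gamma * return)
    weights = np.exp(gamma * (returns - returns.max()))  # subtract max for stability
    weights /= weights.sum()
    new_mean = np.einsum("k,kta->ta", weights, actions)

    # Execute only the first action; shift the plan one step to warm-start
    warm_start = np.concatenate([new_mean[1:], new_mean[-1:]], axis=0)
    return new_mean[0], warm_start

# Toy stand-in for a learned model: a 1-D point that moves by its action
def toy_model(states, actions):
    return states + actions

def toy_reward(states, actions):
    return -(states[:, 0] - 1.0) ** 2  # reward staying close to position 1.0

rng = np.random.default_rng(0)
state = np.array([0.0])
plan = np.zeros((8, 1))  # 8-step horizon, 1-D actions
positions = []
for _ in range(30):
    action, plan = plan_action(toy_model, toy_reward, state, plan, rng=rng)
    state = toy_model(state[None], action[None])[0]  # "execute" on the toy system
    positions.append(state[0])
```

Only the first planned action is executed before replanning from the new state, which is what lets a controller like this react at every time step; in PDDM the toy model would be replaced by the learned ensemble.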

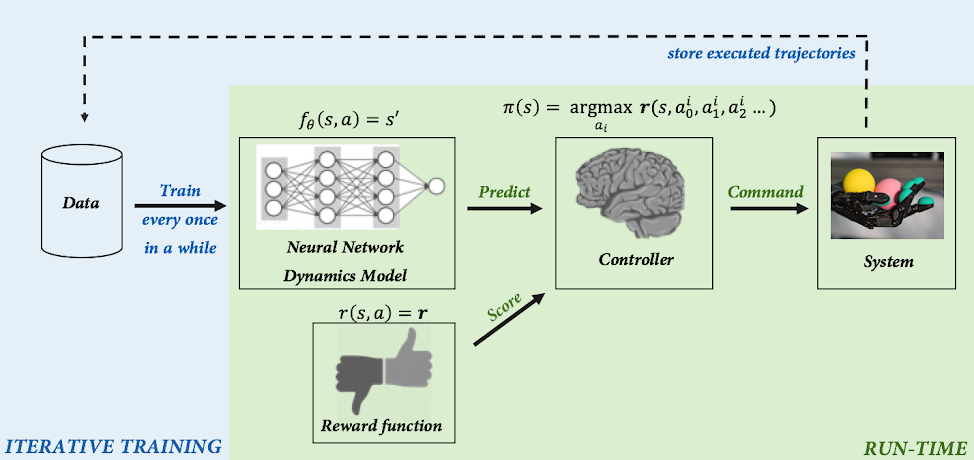

The workflow as illustrated above can be summarised as follows:

- Data is iteratively collected by attempting the task using the latest model.

- The model is updated using this experience, and the process repeats.

- An ensemble of models is used to accurately fit the dynamics of the robotic system.

- This results in effective learning, a clean separation of modeling and control, and an intuitive mechanism for iteratively learning more about the world while simultaneously reasoning, at each time step, about what to do.
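The iterative loop in the list above can be sketched as follows. This is a deliberately small, self-contained illustration, not the PDDM implementation: bootstrapped linear least-squares models stand in for the paper's ensemble of neural networks, a simple random-shooting planner stands in for PDDM's more refined planner, and `true_system` is a made-up 1-D stand-in for the real robot.

```python
import numpy as np

def true_system(state, action):
    # The "real robot", unknown to the agent: a 1-D system with gain 0.5
    return state + 0.5 * action

def fit_ensemble(S, A, S_next, n_models=5, rng=None):
    """Fit n_models linear models s' = [s, a, 1] @ W, each on a bootstrap resample."""
    rng = np.random.default_rng() if rng is None else rng
    X = np.hstack([S, A, np.ones((len(S), 1))])
    models = []
    for _ in range(n_models):
        idx = rng.integers(0, len(X), size=len(X))  # bootstrap resample
        W, *_ = np.linalg.lstsq(X[idx], S_next[idx], rcond=None)
        models.append(W)
    return models

def predict(models, states, actions):
    X = np.hstack([states, actions, np.ones((len(states), 1))])
    return np.mean([X @ W for W in models], axis=0)  # average ensemble predictions

def random_shooting(models, state, target, horizon=3, n_samples=500, rng=None):
    """Return the first action of the lowest-cost random sequence under the ensemble."""
    rng = np.random.default_rng() if rng is None else rng
    actions = rng.uniform(-1.0, 1.0, size=(n_samples, horizon, 1))
    states = np.repeat(state[None], n_samples, axis=0)
    cost = np.zeros(n_samples)
    for t in range(horizon):
        states = predict(models, states, actions[:, t])
        cost += (states[:, 0] - target) ** 2
    return actions[np.argmin(cost), 0]

rng = np.random.default_rng(0)
S, A, S_next = [], [], []
models = None
for _ in range(4):  # outer loop: collect data, then refit the models
    state = np.array([0.0])
    for _ in range(20):
        if models is None:
            action = rng.uniform(-1.0, 1.0, size=1)  # no model yet: act randomly
        else:
            action = random_shooting(models, state, target=1.0, rng=rng)
        next_state = true_system(state, action)
        S.append(state)
        A.append(action)
        S_next.append(next_state)
        state = next_state
    # Refit the ensemble on all experience gathered so far, then repeat
    models = fit_ensemble(np.array(S), np.array(A), np.array(S_next), rng=rng)
```

Keeping modeling (`fit_ensemble`) and control (`random_shooting`) as separate functions mirrors the separation the list describes: either piece can be swapped out without touching the other.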

For the experiments, the researchers used the ShadowHand, a 24-DoF five-fingered anthropomorphic hand, as shown above. A 280×180 RGB stereo image pair is also used to produce 3D position estimates for tracking the Baoding balls.
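The article does not say how the stereo pair is turned into 3D positions. A common approach for a rectified stereo pair is pinhole triangulation, where depth is Z = f·B/d for focal length f (in pixels), camera baseline B and disparity d. The sketch below assumes that approach; the function name, focal length, baseline and pixel coordinates are hypothetical values, not the authors' camera setup.

```python
import numpy as np

def triangulate(u_left, v_left, u_right, f, baseline, cx, cy):
    """Back-project a point seen in a rectified stereo pair to camera coordinates.

    u/v are pixel coordinates, f is the focal length in pixels, baseline is the
    distance between the two cameras in metres, (cx, cy) is the principal point.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = f * baseline / disparity  # depth from similar triangles
    x = (u_left - cx) * z / f
    y = (v_left - cy) * z / f
    return np.array([x, y, z])

# Hypothetical numbers for a 280x180 image with the principal point at the centre
ball = triangulate(u_left=150.0, v_left=90.0, u_right=130.0, f=200.0,
                   baseline=0.06, cx=140.0, cy=90.0)
# ball ≈ [0.03, 0.0, 0.6]  (x, y, z in metres)
```

In practice the ball's pixel location would first have to be detected in both images; that detection step is outside the scope of this sketch.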

The team at Google Brain claims to have demonstrated the following with PDDM:

- Model-based RL methods can be much more efficient than model-free methods.

- Their improvements to online model-predictive control enable efficient and effective learning of flexible, contact-rich dexterous manipulation skills.

- These skills can be learned on a 24-DoF anthropomorphic hand in the real world, using ~4 hours of purely real-world data to coordinate multiple free-floating objects.

Where Can This Be Applied

Amputees with bionic limbs often struggle with everyday tasks such as turning a key to open a door or using a screwdriver. This is mainly due to the limited dexterity of the robotic finger joints, which have fewer degrees of freedom. Deep dynamics models like these suggest that more human-like movement is achievable, and with ongoing development in brain-computer interface chips, such sophisticated robotic manipulation is likely to keep improving.

Industrial robots running models such as PDDM could be deployed in more intricate spaces to perform more complex tasks with greater agility. Picking and placing fragile objects, for example, could become easier.

Training in simulation with approaches such as PDDM could lead to a new generation of robots that pour wine, untie shoelaces, flip coins and play carrom with ease.