Although machine learning has been around for more than three decades, it took a long time for hardware to catch up with the demands of these power-hungry algorithms. With each passing year, chipmakers have worked to make hardware lighter and faster.

Today, over 100 companies are building next-generation chips and hardware architectures that can keep pace with these algorithms. Such chips make it possible to run deep learning applications on smartphones and other edge computing devices.

Here are a few of the top hardware innovations that have transformed the world of AI:

Intel’s Nervana

Intel recently revealed new details of its upcoming high-performance AI accelerators, the Intel Nervana neural network processors. They are built to prioritise two key real-world considerations: training a network as fast as possible, and doing so within a given power budget. The design emphasises flexibility, striking a balance among compute, communication and memory.

Samsung Exynos 9

Samsung’s Exynos 9820 has a separate hardware AI accelerator, or NPU (neural processing unit), which performs AI tasks around seven times faster than its predecessor. It is aimed at AI processing carried out directly on the device rather than on a server, which gives faster performance as well as better security.

AMD Radeon Instinct

Radeon Instinct is AMD’s family of training accelerators for machine intelligence and deep learning.

It is based on AMD’s “VEGA” graphics architecture, which is built to handle big datasets and diverse compute workloads, and delivers up to 24.6 TFLOPS of peak FP16 compute performance for deep learning training applications.

Nokia’s ReefShark

Nokia’s ReefShark is a new chipset designed to ease 5G network roll-out. AI is built into the ReefShark radio design and embedded in the baseband. There, augmented deep learning triggers smart, rapid actions by the autonomous, cognitive network, improving network optimisation and opening up new business opportunities.

Alibaba’s Pingtouge Hanguang

Alibaba unveiled its first dedicated AI processor, designed for large-scale AI inference in the cloud.

The 12 nm Hanguang 800 contains 17 billion transistors. Alibaba claims it is 15 times more powerful than the NVIDIA T4 GPU and 46 times more powerful than the NVIDIA P4 GPU.

Huawei’s Ascend 910

The Ascend 910 is a new AI processor that belongs to Huawei’s series of Ascend-Max chipsets. After a year of ongoing development, test results now show that the Ascend 910 processor delivers on its performance goals with much lower power consumption than originally planned.

According to Huawei, the Ascend 910 paired with its MindSpore framework trains AI models about twice as fast as other mainstream training cards running TensorFlow.

Apple A13 Bionic Chip

The iPhone 11 is powered by Apple’s new A13 Bionic chip, which Apple touts as its fastest processor ever.

The A13 features an Apple-designed 64-bit ARMv8.3-A six-core CPU, with two high-performance cores running at 2.65 GHz and four high-efficiency cores. The two high-performance cores are 20% faster and use 30% less power than their predecessors, while the four high-efficiency cores are 20% faster and use 40% less power.
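As a rough back-of-the-envelope check, the two figures for each core cluster can be combined into a performance-per-watt ratio. This assumes the speed-up and the power reduction are measured against the same previous-generation cores on the same workload, which the headline figures do not spell out. Treat the result as an illustration rather than a benchmark:

$$
\frac{(\text{perf}/\text{W})_{\text{A13}}}{(\text{perf}/\text{W})_{\text{prev}}} = \frac{1.20}{1 - 0.30} \approx 1.71 \quad \text{(high-performance cores)}, \qquad \frac{1.20}{1 - 0.40} = 2.00 \quad \text{(high-efficiency cores)}
$$

By this estimate, the high-performance cores do roughly 1.7 times as much work per watt, and the high-efficiency cores about twice as much.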

Google’s EdgeTPU

Edge TPU is Google’s purpose-built chip designed to run AI at the edge. It delivers high performance in a small physical and power footprint, enabling the deployment of high-accuracy AI at the edge.

Edge TPU combines custom hardware, open software and state-of-the-art AI algorithms to provide high-quality, easy-to-deploy AI solutions for the edge.

Graphcore’s IPU

The Intelligence Processing Unit (IPU) is completely different from today’s CPU and GPU processors. It is a highly flexible, easy-to-use parallel processor designed from the ground up to deliver state-of-the-art performance on current machine intelligence models, for both training and inference.

The IPU is also available in Dell’s DSS8440, the first Graphcore IPU server.

Cerebras Wafer Scale Engine (WSE)

As manufacturers race to develop smaller, thinner, cheaper chips, Cerebras went the other way and released the Wafer Scale Engine (WSE), a 215 mm × 215 mm chip aimed at deep learning applications.

The WSE packs 1.2 trillion transistors onto a single chip with 400,000 AI-optimised cores, connected by a 100 Pbit/s interconnect. The cores are fed by 18 GB of super-fast on-chip memory, with an unprecedented 9 PB/s of memory bandwidth.

Tesla’s AI Chip

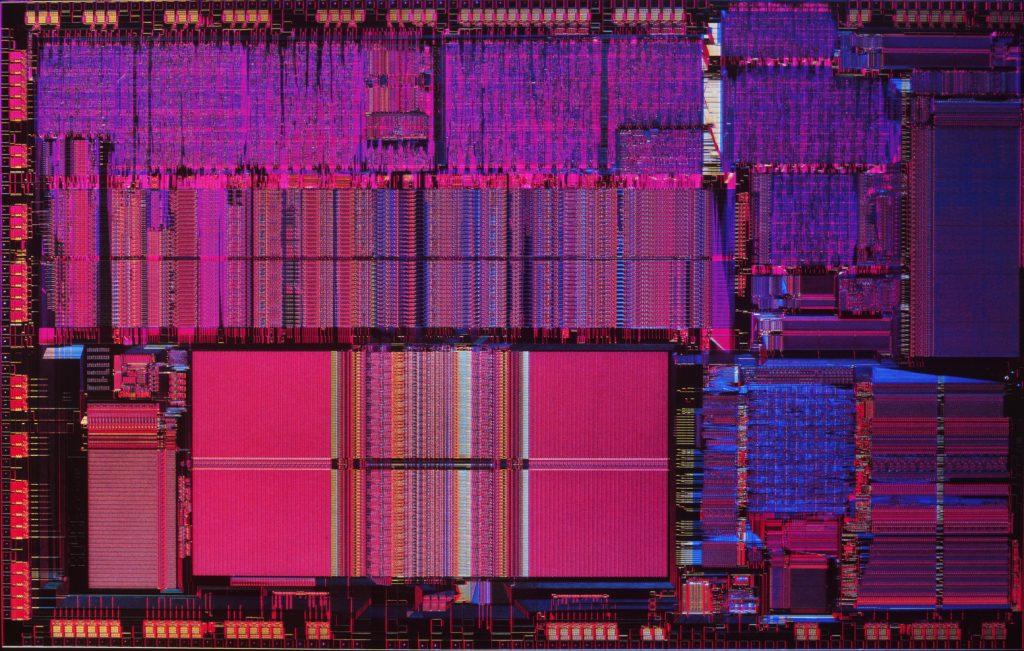

The image above shows the processing unit developed by Tesla, called the Tesla FSD (Full Self-Driving) computer. The board carries two chips.

Each chip consists of a single die with an area of 260 mm², which houses 6 billion transistors.

Each chip has two neural network accelerators. Each accelerator has dedicated ReLU and pooling hardware and delivers 36 trillion operations per second (TOPS).
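Multiplying out the per-accelerator figure gives a theoretical peak for each chip and for the whole board. This assumes every accelerator runs at its full rate at the same time, so it is a ceiling rather than a measured throughput:

$$
P_{\text{chip}} = 2 \times 36\ \text{TOPS} = 72\ \text{TOPS}, \qquad P_{\text{board}} = 2 \times P_{\text{chip}} = 144\ \text{TOPS}
$$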

AI applications are almost everywhere today, from consumer to enterprise. With the explosive growth of connected devices, combined with demands for privacy and low latency and tight bandwidth constraints, the hardware used to train and run AI models needs that extra edge.

The innovations discussed above barely scratch the surface. Many small and mid-sized companies are also researching customised AI solutions at the hardware level.