Since the deep learning boom began, researchers have built many architectures around neural networks. Neural networks are often said to be inspired by neurons and their networks in the brain, and computational algorithms often mimic these biological structures. But a lot remains to be discovered about how the brain actually works.

Neuroscience is nowhere close to solving the mystery of the brain. That is one reason artificial intelligence researchers have had to come up with many different neural network architectures to solve different tasks.

Two of the main families of neural network architectures are the encoder-decoder architecture and the Generative Adversarial Network (GAN).

Encoder and Decoder Architecture

In 2015, the paper Sequence to Sequence Learning with Neural Networks became very popular, and with it the encoder-decoder architecture became widely known in the deep learning community. The paper proposed using an LSTM to map the input sequence to a vector of fixed dimensionality. A second LSTM then converts that vector into an output sequence. Such architectures have been used for tasks like machine translation. Encoder-decoder architectures built on RNNs and LSTMs have become a standard approach to machine translation and work better than many classical statistical machine translation methods.

The main advantage of this kind of architecture is that researchers and engineers can train a single end-to-end model directly on input (source) and output (target) strings. The architecture can also handle variable-length input and output sequences. It was designed specifically for natural language processing tasks, and it quickly showed state-of-the-art performance on many of them.

The encoder-decoder architecture can be applied to a host of problems:

- Machine Translation (translating English to French)

- Modeling a long conversation

- Image-to-text conversion (captioning)

- Gesture tracking and prediction

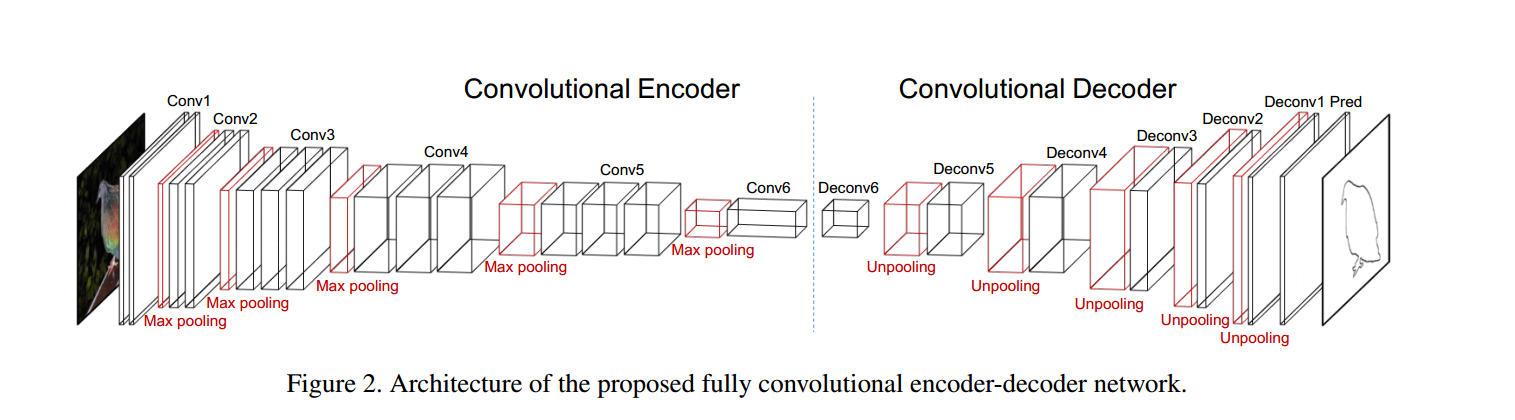

The architecture has two parts: the encoder and the decoder. The input sequence is given to the encoder, which reads it one element at a time (for example, one character at a time). Encoding is needed to learn the relationships between the steps of the sequence and to build a representation of them. The encoder is normally built from LSTM layers, and its output is a fixed-size vector that represents the whole input sequence. The size of this vector is the number of memory cells in the layer. The decoder then takes this learned representation and tries to turn it into the correct output sequence. The decoder is also implemented with LSTM layers, which take the encoder's output as their input. Because the encoder produces a single vector while the decoder expects a sequence, a repeat vector layer is used as an adapter between the two: it copies the encoder output once for each time step of the output sequence.
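The data flow described above can be sketched in plain Python. This is only an illustration of the shapes involved, not a trained model: the `encode` function is a hypothetical stand-in for an LSTM encoder (a simple tanh recurrence with random, untrained weights), and `repeat_vector` is a hand-written helper that mimics what a repeat vector layer does.

```python
import math
import random


def encode(sequence, hidden_size, seed=0):
    """Toy recurrent encoder: fold a variable-length sequence of floats
    into one fixed-size state vector (a stand-in for an LSTM encoder)."""
    rng = random.Random(seed)
    # Hypothetical, untrained weights; a real encoder would learn these.
    w_in = [rng.uniform(-1, 1) for _ in range(hidden_size)]
    w_rec = [[rng.uniform(-1, 1) for _ in range(hidden_size)]
             for _ in range(hidden_size)]
    state = [0.0] * hidden_size
    for x in sequence:  # consume the input one element at a time
        state = [
            math.tanh(w_in[i] * x
                      + sum(w_rec[i][j] * state[j] for j in range(hidden_size)))
            for i in range(hidden_size)
        ]
    return state


def repeat_vector(vector, n_steps):
    """Copy the fixed-size encoding once per output time step, so the
    decoder receives a sequence of n_steps identical vectors."""
    return [list(vector) for _ in range(n_steps)]


# Inputs of different lengths are encoded to vectors of the same size.
short = encode([0.1, 0.5], hidden_size=4)
long = encode([0.1, 0.5, -0.3, 0.9, 0.2], hidden_size=4)

# The decoder is asked for 3 output steps, so the encoding is repeated 3 times.
decoder_input = repeat_vector(long, n_steps=3)

print(len(short), len(long))                      # 4 4
print(len(decoder_input), len(decoder_input[0]))  # 3 4
```

Whatever the input length, the encoding has `hidden_size` entries, and the number of output steps is chosen independently through `n_steps`. That is what lets the input and output sequences have different lengths.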

Generative Adversarial Networks

Deep generative models are a family of powerful deep learning models that can learn many kinds of data distribution in an unsupervised manner. This family of models has attracted a lot of attention and has led to many impressive results. Many academic researchers, including Geoffrey Hinton, feel that generative modeling is the right way forward and that it may also be the correct way to model the brain. Earlier deep generative models still had some drawbacks. In 2014, Ian Goodfellow and his co-authors introduced an architecture that addressed some of these problems: the Generative Adversarial Network. Today, the two most popular approaches to generative modeling are variational autoencoders and generative adversarial networks.

Generative adversarial networks are used in many tasks, from identifying cars on roads to filling in the missing details of a photo. GANs have also been used to predict how people might look when they are older. Generative adversarial networks bring game theory into deep learning: they learn a data distribution and try to generate similar data through a game between two networks. The two players are called the Generator and the Discriminator. The architecture is called adversarial because the two players (networks) compete with each other throughout the training process. The generator tries to learn the data distribution and generate samples (for example, images) that the discriminator accepts as real. The discriminator tries not to get fooled: its job is to tell which of the data it sees is real and which is generated. As training carries on, the generator gets better at fooling the discriminator and the discriminator gets better at catching it. Both players (networks) have to be good, otherwise the resulting model will not be useful.
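The opposing goals of the two players can be made concrete with a small sketch of their loss functions. The code below assumes the discriminator outputs a probability that a sample is real, uses binary cross-entropy for the discriminator, and uses the commonly used non-saturating loss for the generator. The function names and the example probabilities are made up for illustration and do not come from a trained model.

```python
import math

EPS = 1e-12  # avoids log(0)


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator: low when it assigns
    probabilities near 1 to real samples and near 0 to generated ones."""
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator assigns
    probabilities near 1 to generated samples (i.e. it is fooled)."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs (probability that a sample is real).
d_real = [0.9, 0.8]          # scores on real samples
d_fake_caught = [0.1, 0.2]   # generated samples the discriminator rejects
d_fake_fooled = [0.6, 0.7]   # generated samples that mostly pass as real

# When the generator fools the discriminator, its own loss goes down
# while the discriminator's loss goes up: the two objectives pull apart.
print(generator_loss(d_fake_caught) > generator_loss(d_fake_fooled))  # True
print(discriminator_loss(d_real, d_fake_fooled)
      > discriminator_loss(d_real, d_fake_caught))                    # True
```

In an actual training loop the two losses would be minimized in alternation, each with respect to its own network's weights, which is what keeps the two players in competition.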

Comparison

The two architectures are used for a variety of tasks and have different applications. As mentioned above, the encoder-decoder architecture is mainly used to create good internal representations of the data and can be seen as a data compression engine. The encoder encodes the data, and the decoder tries to reconstruct the data from the internal representation using the learned weights.

GANs, by contrast, work on a generative principle: they learn from data distributions and use a game theory approach to build good models. The discriminator tries to identify fake data created by the generator, which makes the generator's job harder and pushes it toward better results.