Creating machine learning algorithms is difficult and time-consuming. This problem has inspired researchers to develop productivity tools that help newcomers to the field. It has given rise to a field of data science called Automated Machine Learning (AutoML). AutoML provides methods and processes that make machine learning available to non-experts, improve the efficiency of machine learning and speed up research.

However, existing AutoML systems cannot be applied to graph datasets. This led researchers at Tsinghua University to develop a new AutoML framework called Auto Graph Learning (AutoGL). AutoGL is an AutoML framework for graph datasets and tasks. The toolkit handles the various stages shown in the figure below. You can read more about it in the AutoGL documentation.

Source: https://autogl.readthedocs.io/en/latest/

Without further ado, let’s jump into the quick tutorial.

1) Installation

Requirements

Make sure to meet the requirements before installing the library.

- Python (>=3.6.0)

- PyTorch (>=1.5.1)

- PyTorch Geometric

import sys

#to print the current python version

print(sys.version)
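Beyond printing the version string, the requirements listed above can be checked programmatically before installing anything. The snippet below is a minimal sketch using only the standard library. The helper names `python_ok` and `missing_packages` are illustrative and not part of AutoGL, and the check only tests whether the `torch` and `torch_geometric` modules can be found, not which version is installed.

```python
import importlib.util
import sys

# Minimum Python version taken from the requirements list above.
MIN_PYTHON = (3, 6, 0)
# Import names of the two required packages.
REQUIRED_PACKAGES = ["torch", "torch_geometric"]


def python_ok(version_info=sys.version_info, minimum=MIN_PYTHON):
    """Return True if the interpreter version is at least `minimum`."""
    return tuple(version_info[:3]) >= minimum


def missing_packages(names=REQUIRED_PACKAGES):
    """Return the packages from `names` that cannot be found for import."""
    return [name for name in names if importlib.util.find_spec(name) is None]


print("Python OK:", python_ok())
print("Missing packages:", missing_packages())
```

If the second line prints a non-empty list, install those packages with the pip commands that follow.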

!pip install torch

!pip install torch-geometric

Install from pip

Might take some time to install.

!pip install auto-graph-learning

2) Learning

AutoGL is based on the concept of AutoML: we only need to provide the dataset and the task, and the AutoGL solver does the rest, from feature engineering to model ensembling (as suggested in the diagram above).

The whole mechanism can be summarised as follows. AutoGL uses a dataset object to hold the graph dataset given by the user; we then create an AutoGL solver object to define the task. The solver includes feature engineering, auto model, hyperparameter optimization and auto ensemble modules, which automatically preprocess the data, choose the best model, optimize it and ensemble the results. As an example, we apply AutoGL to the Cora dataset.

First, let’s fetch the Cora dataset from the datasets module of AutoGL.

# We can easily fetch the dataset using the datasets module

from autogl.datasets import build_dataset_from_name

#fetching cora dataset

cora_dataset = build_dataset_from_name('cora')

Now, we create an AutoGL solver object to define the required task.

#Import the required libraries

import torch

# Import the AutoNodeClassifier class from the solver module to build a node
# classification solver that handles the automatic training process.

from autogl.solver import AutoNodeClassifier

#take device as 'cuda' if available else 'cpu'

device = torch.device('cuda' if torch.cuda.is_available() else 'cpu')

# Create a solver object with 'deepgl' as the feature engineering module,
# ['gcn', 'gat'] as the graph models, 'anneal' as the hyperparameter
# optimization module and 'voting' as the ensemble method, and pass the device.
solver = AutoNodeClassifier(
    feature_module='deepgl',
    graph_models=['gcn', 'gat'],
    hpo_module='anneal',
    ensemble_module='voting',
    device=device
)

Fitting the model to the Cora dataset.

# Fit the solver object to cora_dataset.
# time_limit caps the whole automated process at 3600 seconds (1 hour).
# You can also define the train and test splits explicitly.
solver.fit(cora_dataset, time_limit=3600)

# The leaderboard shows the models held by the solver object and their
# performance on the validation dataset.
solver.get_leaderboard().show()

Now, we predict and evaluate using the evaluation functions. We don’t need to pass cora_dataset again: it is already saved in the solver object and is reused when no dataset is passed at prediction time. We can always pass a new dataset when predicting, in which case the solver uses it instead of the stored one.
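As a rough picture of what the accuracy evaluation in this step computes, here is a library-independent sketch. It assumes, as the AutoGL code that follows does, that predictions are given only for the test nodes while labels and the test mask cover every node. The function `masked_accuracy` and the toy data are hypothetical and are not AutoGL's `Acc` implementation.

```python
def masked_accuracy(predicted, labels, test_mask):
    """Share of correct predictions on the nodes selected by test_mask.

    predicted: one label per test node, in node order
    labels:    one ground-truth label per node
    test_mask: one boolean per node, True for test nodes
    """
    test_labels = [y for y, keep in zip(labels, test_mask) if keep]
    if not test_labels:
        raise ValueError("test_mask selects no nodes")
    if len(predicted) != len(test_labels):
        raise ValueError("need exactly one prediction per test node")
    correct = sum(p == y for p, y in zip(predicted, test_labels))
    return correct / len(test_labels)


# Toy data: six nodes, four of them in the test set.
labels = [0, 1, 2, 1, 0, 2]
test_mask = [False, True, False, True, True, True]
predicted = [1, 1, 0, 0]  # predictions for nodes 1, 3, 4 and 5

print("Test accuracy:", masked_accuracy(predicted, labels, test_mask))  # 0.75
```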

# Import the accuracy metric from the train module
from autogl.module.train import Acc

# Predict for cora_dataset. If no dataset is given, the solver uses the
# cora_dataset it was fitted on. You can also pass a different dataset.
predicted = solver.predict()

print('Test accuracy: ', Acc.evaluate(
    predicted.reshape(-1, 1),
    cora_dataset.data.y[cora_dataset.data.test_mask].numpy().reshape(-1, 1)))

3) Dataset

The dataset module can import datasets from CogDL, PyTorch Geometric and OGB. Refer to the documentation of each library for details on creating and building datasets. The AutoGL documentation lists all the datasets supported by AutoGL. If your dataset consists of two matrices, groups and networks, you can register it directly through a URL. You can also create a local dataset for testing. Both options are described in the AutoGL documentation.

4) Feature Engineering

The AutoGL toolkit provides many feature engineering pipelines for nodes and subgraphs, along with an automatic feature engineering pipeline. An example of how to apply feature engineering is given below. AutoGL supports three main kinds of feature engineering atoms: generators, selectors and subgraph features. The AutoGL documentation lists all of them. You can also create your own feature engineering object, as described in the documentation.
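To give a feel for what a generator and a selector do, and for how steps can be chained with an operator such as `&` (as the AutoGL example that follows does), here is a toy sketch in plain Python. Everything in it is hypothetical: the `Atom` class, `add_degree` and `keep_top_variance` are illustrative stand-ins, not AutoGL's implementation, and the graph is a plain adjacency dictionary.

```python
from statistics import pvariance


class Atom:
    """A toy feature engineering step (hypothetical, not AutoGL's class)."""

    def __init__(self, *funcs):
        self.funcs = list(funcs)

    def __and__(self, other):
        # `a & b` yields a new atom that runs a's steps, then b's steps.
        return Atom(*self.funcs, *other.funcs)

    def transform(self, features, adjacency):
        for func in self.funcs:
            features = func(features, adjacency)
        return features


def add_degree(features, adjacency):
    """Generator: append each node's degree as an extra feature column."""
    return {node: row + [float(len(adjacency[node]))]
            for node, row in features.items()}


def keep_top_variance(k):
    """Selector factory: keep the k columns with the highest variance."""
    def select(features, adjacency):
        rows = list(features.values())
        n_cols = len(rows[0])
        variances = [pvariance([row[c] for row in rows]) for c in range(n_cols)]
        ranked = sorted(range(n_cols), key=lambda c: variances[c], reverse=True)
        keep = sorted(ranked[:k])  # preserve the original column order
        return {node: [row[c] for c in keep] for node, row in features.items()}
    return select


# A four-node graph: node 0 is linked to nodes 1 and 2, node 3 is isolated.
adjacency = {0: [1, 2], 1: [0], 2: [0], 3: []}
features = {0: [1.0, 0.0], 1: [1.0, 1.0], 2: [1.0, 0.0], 3: [1.0, 1.0]}

pipeline = Atom(add_degree) & Atom(keep_top_variance(2))
transformed = pipeline.transform(features, adjacency)
print(transformed)
```

In this toy data the first column is constant, so the selector drops it and keeps the original second column together with the generated degree column.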

# 1. Choose a dataset.

from autogl.datasets import build_dataset_from_name

data = build_dataset_from_name('cora')

# 2. Compose a feature engineering pipeline

from autogl.module.feature import BaseFeatureAtom, AutoFeatureEngineer
from autogl.module.feature.generators import GeEigen
from autogl.module.feature.selectors import SeGBDT
from autogl.module.feature.subgraph import SgNetLSD

# you may compose feature engineering atoms through BaseFeatureAtom.compose
fe = BaseFeatureAtom.compose([
    GeEigen(size=32),
    SeGBDT(fixlen=100),
    SgNetLSD()
])

# or just through '&' operator
fe = fe & AutoFeatureEngineer(fixlen=200, max_epoch=3)

# 3. Fit and transform the data
fe.fit(data)
data1 = fe.transform(data, inplace=False)
print(data1.data)

5) Model

The AutoGL documentation lists all the models available in this toolkit. You can also create your own model and automodel. The documentation includes an example of this.

6) Trainer

The AutoGL trainer handles the automatic training of tasks. It supports two types of trainer:

- NodeClassificationTrainer for semi-supervised node classification

- GraphClassificationTrainer for supervised graph classification

The AutoGL documentation includes an example of building a trainer. After initializing the trainer, we can train and test. So far, training and testing are available for node classification and graph classification. After testing, you can also evaluate the predictions with the different metrics available.

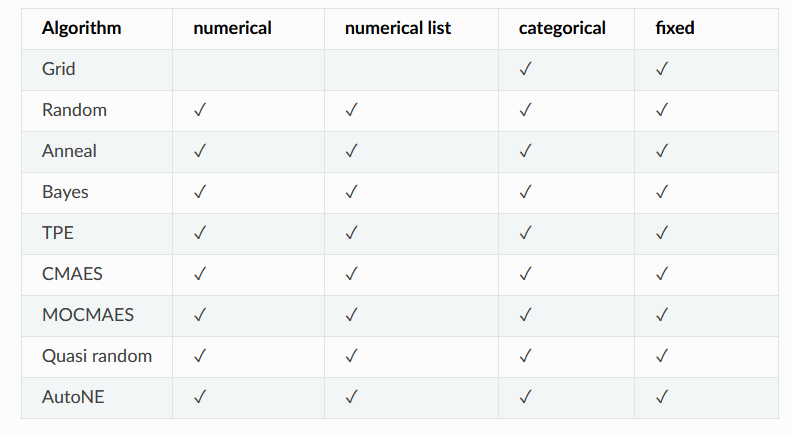

7) Hyper Parameter Optimization

This toolkit uses black-box hyperparameter optimization. The AutoGL documentation lists all the algorithms it supports. You can also create your own hyperparameter optimizer.
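To make "black-box" concrete: the optimizer only sees a function that maps a hyperparameter setting to a score and knows nothing about the model behind it. The snippet below is a generic simulated annealing sketch in that spirit, chosen because the solver example earlier uses the 'anneal' module. It is not AutoGL's implementation. The search space, the function `toy_validation_score` (a stand-in for validation accuracy) and all parameter names are assumptions made for illustration.

```python
import math
import random


def anneal(objective, space, n_iter=200, start_temp=1.0, cooling=0.97, seed=0):
    """Maximise a black-box objective over a discrete search space."""
    rng = random.Random(seed)
    current = {name: rng.choice(values) for name, values in space.items()}
    current_score = objective(current)
    best, best_score = dict(current), current_score
    temp = start_temp
    for _ in range(n_iter):
        # Propose a neighbour by re-sampling one hyperparameter.
        candidate = dict(current)
        name = rng.choice(list(space))
        candidate[name] = rng.choice(space[name])
        score = objective(candidate)
        # Always accept improvements; accept a worse candidate with a
        # probability that shrinks as the temperature cools.
        delta = score - current_score
        if delta >= 0 or rng.random() < math.exp(delta / temp):
            current, current_score = candidate, score
        if current_score > best_score:
            best, best_score = dict(current), current_score
        temp *= cooling
    return best, best_score


def toy_validation_score(params):
    """Hypothetical stand-in for validation accuracy, best at lr=0.01, hidden=64."""
    return -abs(math.log10(params["lr"]) + 2) - abs(params["hidden"] - 64) / 64


SPACE = {"lr": [1e-4, 1e-3, 1e-2, 1e-1], "hidden": [16, 32, 64, 128]}

best_params, best_score = anneal(toy_validation_score, SPACE)
print(best_params, best_score)
```

In a real setting the objective would train a model with the given hyperparameters and return its validation score, which is why each evaluation is expensive and a search budget matters.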

8) Ensemble

The ensemble module supports two methods, voting and stacking. Voting is based on the number of occurrences of each prediction across the models. Stacking combines the predictions of the well-performing models. You can create your own ensembler by inheriting from BaseEnsembler and overriding its methods.
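The voting idea described above can be sketched as a plain majority vote: for each node, take the label that most models predicted. This is an illustrative sketch only. The function `majority_vote`, its tie-breaking rule and the toy predictions are assumptions, and AutoGL's own voting ensembler may weight models differently.

```python
from collections import Counter


def majority_vote(predictions_per_model):
    """Combine per-model label lists into one list by majority vote.

    predictions_per_model: list of equally long label lists, one per model.
    Ties are broken in favour of the model that comes first in the list.
    """
    if not predictions_per_model:
        raise ValueError("need predictions from at least one model")
    n_items = len(predictions_per_model[0])
    if any(len(preds) != n_items for preds in predictions_per_model):
        raise ValueError("all models must predict the same number of items")
    voted = []
    for labels in zip(*predictions_per_model):
        counts = Counter(labels)
        top = max(counts.values())
        # The first label (in model order) that reaches the top count wins.
        voted.append(next(label for label in labels if counts[label] == top))
    return voted


# Hypothetical predictions of three models for four nodes.
model_a = [0, 1, 2, 1]
model_b = [0, 2, 2, 1]
model_c = [1, 1, 2, 0]

print(majority_vote([model_a, model_b, model_c]))  # [0, 1, 2, 1]
```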

9) Solver

The solver handles the automatic solving of tasks. It currently provides two classes:

- AutoNodeClassifier for semi-supervised node classification

- AutoGraphClassifier for supervised graph classification

Its initialization was covered in the example above; see the Learning section.

Conclusion

We have covered the basics of Auto Graph Learning in this tutorial. The project is under active development and its researchers keep adding new features. The following features are planned for the near future:

- Neural Architecture Search

- Large-scale graph datasets support

- More graph tasks (e.g. Link prediction, Heterogeneous graph tasks, Spatial & Temporal tasks)

- Graph Boosting & Bagging

- More graph library backend support (e.g. Deep Graph Library)


I hope you find this article interesting and useful.