In today’s fast-paced world, time is precious, and reading a long news post or article can be tedious. A short paragraph that delivers the same meaning saves a lot of time, which is where a text summarizer becomes useful. Text summarization is the task of creating a short, accurate, and fluent summary of a longer text document, one that keeps only the main points of the original. The world around us depends on gathering and processing huge amounts of data. With so much text circulating in the digital space every day, there is a growing need for machine learning algorithms that can automatically shorten long texts and deliver accurate, fluent summaries that still convey the intended message.

We enjoy quick access to enormous amounts of information, but much of it is redundant or insignificant and may not convey the intended meaning. Even if you are looking for one specific detail in an online news article, you might have to dig through the whole piece and spend a lot of time filtering out the rest before reaching what you need. Text summarization reduces reading time, speeds up searching for information, and increases the amount of information that fits in a small space. Because manual summarization is laborious and time-consuming, automating the task is becoming increasingly popular, which also makes it a strong motivation for academic research. Automatic text summarization aims to turn lengthy documents into shortened versions without manual effort.

Machine learning algorithms can be trained to comprehend documents and identify the sections that convey important facts before producing the required summary. There are two main types of text summarization techniques: extractive and abstractive. In extraction-based summarization, a subset of phrases or sentences representing the most important points is pulled out of the document and combined to form the summary. It acts like a highlighter that selects the main information from the source text. In machine learning, extractive summarization usually involves assigning a weight to each sentence, ranking the sentences by relevance and by similarity to one another, and joining the top-ranked ones to generate the summary. Abstraction-based summarization instead applies deep learning techniques to paraphrase and shorten the original document. Since abstractive algorithms can generate new phrases and sentences that capture the most important information from the source text, they can help overcome the grammatical inaccuracies of extractive techniques. Although abstraction often produces more fluent summaries, developing such algorithms requires complex deep learning and language modelling techniques. It is also important to keep in mind that the final summary, whichever technique produces it, must be of an appropriate length: neither too long nor too short. The structure needs to be reader-friendly, and the sentences have to be coherent and make sense.
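To make the extractive idea concrete, here is a minimal sketch of a frequency-based extractive summarizer. It is only an illustration under simple assumptions, not the method used later in this article: the function name extractive_summary, the tiny stopword list, and the scoring rule (average word frequency per sentence) are all choices made for this example.

```python
import re
from collections import Counter

# A deliberately tiny stopword list, assumed for this illustration only.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "in", "and",
             "it", "that", "this", "on", "for", "with", "as"}


def content_words(text):
    """Lowercase the text and return its words, minus stopwords."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def extractive_summary(text, num_sentences=2):
    """Return the highest-scoring sentences, kept in their original order."""
    # Split after ., ! or ? when followed by whitespace.
    sentences = [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]
    # Weight each word by how often it appears in the whole document.
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    # Rank sentence indices by score, keep the best ones, restore document order.
    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:num_sentences])
    return " ".join(sentences[i] for i in chosen)


sample = ("Summarization shortens text. "
          "Summarization models read text and keep key text. "
          "Dogs bark loudly.")
print(extractive_summary(sample, num_sentences=1))
```

Averaging the word weights keeps long sentences from winning simply because they contain more words; real extractive systems typically use richer signals such as sentence position or graph-based similarity.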

What is Beautiful Soup?

Beautiful Soup is an open-source Python library for getting data out of HTML, XML, and other markup languages. If some web pages display data relevant to your research, such as dates, address information, or important lines of text, but offer no way of downloading that data directly, Beautiful Soup can help you pull particular content from the webpage, remove the HTML markup, and save the information as data. It is a handy tool for web scraping that helps you clean up and parse the documents you have pulled down from the web. Beautiful Soup provides simple methods and Pythonic idioms for navigating, searching, and modifying a parse tree. It also automatically converts incoming documents to Unicode and outgoing documents to UTF-8. It sits on top of popular Python parsers like lxml and html5lib, which lets you try different parsing strategies or trade speed for flexibility.

Getting Started With the Code

In this article, we will create a text summarizer using the Hugging Face Transformers library, with Beautiful Soup for scraping text from webpages. Our goal is to generate a summarized paragraph that captures the important context of the whole webpage text. The following code is inspired by a text summarizer video tutorial; you can find the video using the link here.

Installing The Library

The first step in creating a text summarizer is to install the required library. Here we will install Transformers from Hugging Face, which provides the summarization pipeline used below and pulls in dependencies such as PyYAML, a parser for YAML, a data serialization format designed for human readability and interaction with scripting languages. You can do so with the following line of code,

#installing the library

!pip install transformers

Setting the Pipeline

Next, we will import pipeline from Transformers along with Beautiful Soup and requests, and then set up the summarization pipeline,

#importing the libraries

from transformers import pipeline

from bs4 import BeautifulSoup

import requests

#setting up the summarizer pipeline

summarizer = pipeline("summarization")

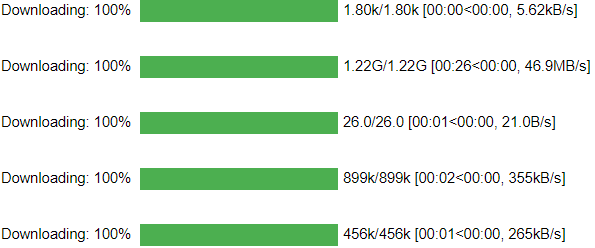

Output :

Let us now provide the URL of the webpage for Beautiful Soup to scrape text from,

#webpage path for scraping text

URL = "https://analyticsindiamag.com/complete-guide-to-augly-a-modern-data-augmentation-library/"

Here I am using a single URL, but you can also scrape two or more URLs and combine their text!

Grabbing the entire webpage using the get method of requests,

r = requests.get(URL)

But we do not want the unnecessary information from the entire webpage; hence we will now use a piece of code that extracts only the main heading (h1) and the paragraph text (p) from the webpage.

#using html parser to sort out text only

soup = BeautifulSoup(r.text, 'html.parser')

#scraping only title and paragraph

results = soup.find_all(['h1', 'p'])

#saving the results generated

text = [result.text for result in results]

ARTICLE = ' '.join(text)

#visualizing scraping result

ARTICLE

As we can observe, we have now extracted only the necessary information required to summarize.
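To see what this filtering step keeps and drops without touching the network, here is a minimal sketch that runs the same find_all call on a small, made-up HTML string. The sample markup and the variable names are assumptions for illustration; they are not content from the article's URL.

```python
from bs4 import BeautifulSoup

# A made-up page: one heading, one navigation block, two paragraphs.
html = """
<html><body>
<h1>Sample Title</h1>
<div class="menu">Home | About</div>
<p>First paragraph.</p>
<p>Second <b>paragraph</b>.</p>
</body></html>
"""

soup = BeautifulSoup(html, "html.parser")

# Keep only <h1> and <p> elements, in document order.
results = soup.find_all(["h1", "p"])

# get_text() strips nested tags such as <b> and returns plain text.
article = " ".join(result.get_text() for result in results)
print(article)
```

The navigation text inside the div is dropped because only h1 and p elements are selected, while the bold tag inside the second paragraph is flattened into plain text.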

Now we will preprocess the text before feeding it to our pipeline. We append an <eos> (end of sentence) marker after every full stop, question mark, and exclamation mark in the text. The punctuation itself is kept; the markers simply make it easy to split the text into sentences, so that the text can later be cut into chunks at sentence boundaries.

#setting chunk length to 500 words

max_chunk = 500

#appending an end of sentence marker after each full stop, question mark and exclamation mark

ARTICLE = ARTICLE.replace('.', '.<eos>')

ARTICLE = ARTICLE.replace('?', '?<eos>')

ARTICLE = ARTICLE.replace('!', '!<eos>')
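One caveat with plain replace calls is that they mark every full stop, including those inside decimals such as 2.5 or inside URLs. As an alternative sketch (not the approach used in this article), a regular expression can split only where the punctuation is followed by whitespace. The helper name split_sentences is hypothetical.

```python
import re
from typing import List


def split_sentences(text: str) -> List[str]:
    """Split text into sentences at ., ! or ? followed by whitespace."""
    # The lookbehind keeps the punctuation attached to its sentence;
    # "2.5" is not split because the full stop is followed by a digit.
    parts = re.split(r"(?<=[.!?])\s+", text)
    return [part.strip() for part in parts if part.strip()]


print(split_sentences("Version 2.5 is out! Is it faster? Yes."))
```

This is still a heuristic: abbreviations such as "Dr. Smith" would be split. The returned list could be used in place of the sentences variable in the next step.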

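Let’s proceed with further text processing. Here we split the text into sentences at the <eos> markers, then split each sentence into words and add those words to the current chunk, starting a new chunk with the append function whenever the next sentence would push the current one past 500 words. Chunking is needed because a summarization model can only accept a limited amount of text at a time, so each chunk is summarized separately.

#splitting the text into sentences at the end of sentence markers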

sentences = ARTICLE.split('<eos>')

current_chunk = 0

chunks = []

#looping through split text to process

for sentence in sentences:

    if len(chunks) == current_chunk + 1:

        if len(chunks[current_chunk]) + len(sentence.split(' ')) <= max_chunk:

            chunks[current_chunk].extend(sentence.split(' '))

        else:

            current_chunk += 1

            chunks.append(sentence.split(' '))

    else:

        print(current_chunk)

        chunks.append(sentence.split(' '))

for chunk_id in range(len(chunks)):

    chunks[chunk_id] = ' '.join(chunks[chunk_id])
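The same chunking logic can be wrapped in a small function, which makes it easier to test and reuse. The sketch below is a simplified variant under stated assumptions: the name chunk_sentences and its parameters are hypothetical, and it uses split() without an argument so that empty strings produced by repeated spaces are not counted as words.

```python
def chunk_sentences(sentences, max_words=500):
    """Group whole sentences into chunks of at most max_words words each."""
    chunks = []    # finished chunks, each a single string
    current = []   # words of the chunk currently being built
    for sentence in sentences:
        words = sentence.split()
        if not words:
            continue  # skip empty or whitespace-only sentences
        # Close the current chunk if this sentence would overflow it.
        if current and len(current) + len(words) > max_words:
            chunks.append(" ".join(current))
            current = []
        current.extend(words)
    if current:
        chunks.append(" ".join(current))
    return chunks


print(chunk_sentences(["a b c.", "d e.", "f g h i."], max_words=5))
```

As in the loop above, a single sentence longer than max_words still becomes its own oversized chunk, since sentences are never cut in the middle.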

Summarizing the Text Block

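Finally, we will run our summarizer over the chunks to derive our output. We cap each chunk's summary at a max_length of 120 and a min_length of 30; note that these limits are counted in tokens rather than words. Setting do_sample to False turns off random sampling, so the same input gives the same summary.

#setting our summarizer

res = summarizer(chunks, max_length=120, min_length=30, do_sample=False)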

Doing this provides us with multiple short summaries; let us combine all the summaries into a single one.

#obtaining the resultant summary

' '.join([summ['summary_text'] for summ in res])

Output :

As we can see, the result is a summarized text from the whole webpage text!

Saving the derived summary as a Text File,

#saving the summary into a text file

text = ' '.join([summ['summary_text'] for summ in res])

with open('victorsummary.txt', 'w') as f:

    f.write(text)
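Scraped text often contains non-ASCII characters such as curly quotes, and the default file encoding depends on the platform. A minimal sketch that writes the summary with an explicit UTF-8 encoding could look like this; the helper name save_summary and its default file name are assumptions made for this example.

```python
from pathlib import Path


def save_summary(summary: str, path: str = "summary.txt") -> Path:
    """Write the summary to path as UTF-8 and return the resulting Path."""
    out = Path(path)
    # An explicit encoding avoids platform-dependent defaults.
    out.write_text(summary, encoding="utf-8")
    return out
```

Calling save_summary(text, 'victorsummary.txt') would write the same file as the code above, with the encoding fixed to UTF-8.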

End Notes

In this article, we learned what a text summarizer is and how it can be helpful in our day-to-day lives. We also created a text summarizer that scrapes text from web pages and produces short, meaningful summaries using Hugging Face Transformers and the Beautiful Soup library. The implementation above can be found in a Colab notebook, using the link here.

Happy Learning!