In the field of deep learning, a convolutional neural network (CNN or ConvNet) is a special type of artificial neural network. It is widely used in image processing, computer vision, classification and regression modelling. CNNs are based on the shared-weight architecture of convolution kernels, or filters. These filters slide along the input features and output feature maps, which summarize the information in the input that the task requires. A CNN can be considered an upgraded version of the multilayer perceptron (MLP), adapted to solve the problems caused by full connectivity. A layer is called fully connected when every neuron in it is connected to every neuron in the next layer. A CNN instead exploits the hierarchical pattern of the data: it learns a few important local features from the input and then applies what it has learned across the whole input.

Take as an example an image of 8 × 8 pixels that contains a number of objects. An MLP needs 64 input weights for each neuron in its first layer, one per pixel. A CNN, by contrast, preserves the spatial relationship between pixels by learning feature representations from small square patches of the input. Those learned features are then reused across the whole image. This helps the model keep working when the objects in an image, or the data points in other kinds of data, are shifted or moved.
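A rough weight count shows why sharing weights matters. The sketch below assumes a hypothetical dense layer of 16 units and a hypothetical convolutional layer of 16 filters, each 3 × 3, on a single-channel image. It ignores biases. The helper names are illustrative and do not come from any library.

```python
def dense_weights(height: int, width: int, units: int) -> int:
    """Weights of a fully connected layer over a flattened image (biases ignored)."""
    return height * width * units


def conv_weights(kernel: int, in_channels: int, filters: int) -> int:
    """Weights of a convolutional layer (biases ignored); independent of image size."""
    return kernel * kernel * in_channels * filters


print(dense_weights(8, 8, 1))    # 64: one neuron sees all 64 pixels
print(dense_weights(8, 8, 16))   # 1024
print(conv_weights(3, 1, 16))    # 144
print(dense_weights(64, 64, 16)) # 65536: grows with the image
print(conv_weights(3, 1, 16))    # still 144
```

The dense layer's weight count scales with the number of pixels. The convolutional layer's count depends only on the kernel size and the number of filters.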

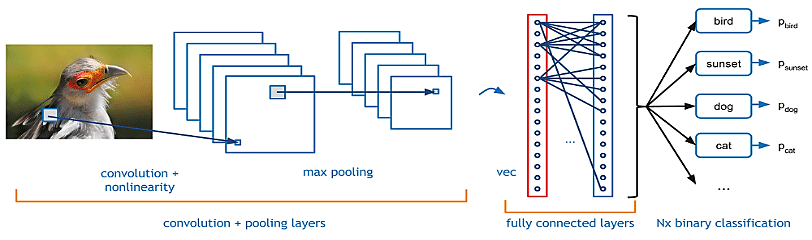

The basic architecture of a CNN consists of three main types of layers.

- Convolutional layer: this layer consists of filters and feature maps. The filters hold the learned weights, and each feature map is the output produced by applying one filter across the input.

- Pooling layers: the basic job of a pooling layer is to downsample the feature map.

- Fully connected layer: this is a conventional feed-forward neural network, which uses an activation function to make predictions.
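Here is a minimal plain-Python sketch of the convolutional layer: one filter sliding over a single-channel image, with stride 1 and no padding. Like most deep learning libraries, it computes cross-correlation, which means the kernel is not flipped. The `conv2d` helper and the edge-detecting kernel are hypothetical and were chosen only for illustration.

```python
from typing import List

Matrix = List[List[float]]


def conv2d(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide one kernel over a single-channel image (stride 1, no padding)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    feature_map = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Weighted sum of the patch under the kernel; the same
            # kernel weights are reused at every position.
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        feature_map.append(row)
    return feature_map


# Hypothetical 4 x 4 image with a vertical edge between columns 1 and 2
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical 2 x 2 vertical-edge kernel
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0.0, 2.0, 0.0], [0.0, 2.0, 0.0], [0.0, 2.0, 0.0]]
```

The feature map responds, with a value of 2.0, only in the column where the image changes from 0 to 1.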

The image above shows the basic layout of a CNN used for image classification. This article focuses mainly on pooling layers, which we discuss next.

Pooling Layers

As the name suggests, pooling layers are used in a CNN to pool together, or consolidate, the features learned in the feature maps produced by the convolutional layers. Pooling compresses or generalizes the features in the feature map, which helps reduce overfitting during training.

Pooling layers are very simple. They usually take the maximum or the average value of each region of the input to downsample the data.

Convolutional layers summarize the features in the data as feature maps, but these feature maps are very sensitive to where features are located in the input. For example, if an object in an image shifts slightly, the feature map changes with it. A model that relies on features at their exact old positions may then be led in the wrong direction.

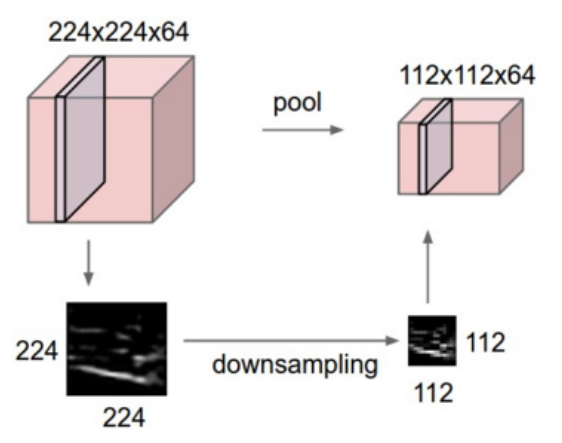

To solve this issue, the feature map needs to be downsampled. For this purpose, pooling layers are introduced in CNNs to summarize the features in each region of the feature map. Two commonly used pooling methods are average pooling and max pooling. They summarize, respectively, the average presence and the strongest presence of a feature in each region of the feature map.
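A small experiment shows how pooling absorbs a small shift. It uses a hypothetical `max_pool2d` helper written in plain Python, with a 2 × 2 filter, stride 2 and no padding. This only works when each activation stays inside the same pooling window. A shift that crosses a window boundary does change the output, so the invariance is partial.

```python
from typing import List

Matrix = List[List[float]]


def max_pool2d(fmap: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Max pooling over a single-channel feature map (no padding)."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [
        [
            max(
                fmap[i * stride + di][j * stride + dj]
                for di in range(size)
                for dj in range(size)
            )
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


# Hypothetical feature map with strong activations at (0, 0) and (2, 2)
original = [
    [9, 0, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 5, 0],
    [0, 0, 0, 0],
]
# The same activations shifted one pixel down and one pixel to the right
shifted = [
    [0, 0, 0, 0],
    [0, 9, 0, 0],
    [0, 0, 0, 0],
    [0, 0, 0, 5],
]
print(max_pool2d(original))  # [[9, 0], [0, 5]]
print(max_pool2d(shifted))   # [[9, 0], [0, 5]]
```
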

Mathematically, the pooling operation slides a two-dimensional filter over a three-dimensional feature map, one channel at a time, and summarizes the features that fall inside the filter window. So, for a feature map of dimensions h × w × c and no padding, the output of the pooling layer has dimensions:

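$$\left(\left\lfloor \frac{h - f}{s} \right\rfloor + 1\right) \times \left(\left\lfloor \frac{w - f}{s} \right\rfloor + 1\right) \times c$$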

Where

- h and w are the height and width of the feature map respectively

- c is the number of channels in the feature map

- f is the size of the filter

- s is the stride
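The output-size calculation for a pooling layer can be checked with a few lines of Python. This sketch assumes no padding and uses floor division, so a window that would run past the edge is dropped. This matches the typical default in common frameworks. `pooled_shape` is a hypothetical helper, not a library function.

```python
from typing import Tuple


def pooled_shape(h: int, w: int, c: int, f: int, s: int) -> Tuple[int, int, int]:
    """Output shape of pooling an h x w x c feature map with an f x f filter
    and stride s, without padding. Each channel is pooled independently."""
    return ((h - f) // s + 1, (w - f) // s + 1, c)


print(pooled_shape(4, 4, 1, f=2, s=2))     # (2, 2, 1)
print(pooled_shape(32, 32, 64, f=2, s=2))  # (16, 16, 64)
print(pooled_shape(6, 6, 3, f=3, s=1))     # (4, 4, 3)
```

In the second call, pooling shrinks the feature map from 32 × 32 × 64 = 65,536 values to 16 × 16 × 64 = 16,384 values. That is a fourfold reduction for the layers that follow.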

The image above gives a general overview of a simple pooling layer and shows how it downsamples the data.

Why do we use pooling layers?

As we have seen, pooling layers reduce the dimensions of feature maps. Whenever the data is high-dimensional, we can use pooling layers together with convolutional layers. The high-dimensional feature maps generated by the convolutional layers are then reduced to lower dimensions, and the rest of the computation costs less effort.

Pooling layers summarize the feature map, so the model does not need to be trained on precisely positioned features. This makes the model more reliable and robust.

Types of pooling layers

Roughly, we can divide pooling layers into four categories.

- Max pooling layer

- Min pooling layer

- Average pooling layer

- Global pooling layer

Max pooling

In max pooling, the layer works with the most prominent feature in each region of the feature map produced by the convolutional layer. More simply, it selects the maximum element from the region covered by the filter.

The image below shows the operation of a max pooling layer on a two-dimensional feature map.

In the image, the 4 × 4 grid on the left is a feature map with its values written in. When the max pooling layer is applied to this map, it generates a 2 × 2 feature map by selecting the maximum value from every patch.
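The max pooling step can be sketched in plain Python as follows. The 4 × 4 values are hypothetical and are not the ones in the figure. The sketch assumes a 2 × 2 filter with stride 2 and no padding, which turns a 4 × 4 map into a 2 × 2 one.

```python
from typing import List

Matrix = List[List[float]]


def max_pool2d(fmap: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Keep the largest value of each size x size patch (no padding)."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    pooled = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Collect the values covered by the filter at this position
            patch = [fmap[r][c]
                     for r in range(top, top + size)
                     for c in range(left, left + size)]
            row.append(max(patch))
        pooled.append(row)
    return pooled


# Hypothetical 4 x 4 feature map (not the values from the figure)
fmap = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [3, 1, 1, 2],
    [0, 2, 7, 4],
]
print(max_pool2d(fmap))  # [[6, 5], [3, 7]]
```
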

The smaller map makes it easier for the rest of the model to understand the data and to compute over it, so the whole process becomes more robust than before.

Why do we use Max Pooling?

Max pooling helps extract low-level features from the data, such as edges and points. In image processing, max pooling extracts the sharpest features in the image, and these sharpest features are a good low-level representation of the image.

Min Pooling

In min pooling, the layer works with the least prominent feature in each region of the feature map produced by the convolutional layer. More simply, it selects the minimum element from the region covered by the filter.

The image below shows the operation of a min pooling layer on a two-dimensional feature map.

In the image, the 4 × 4 grid on the left is a feature map with its values written in. When the min pooling layer is applied to this map, it generates a 2 × 2 feature map by selecting the minimum value from every patch.
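Min pooling can be sketched in the same way. Here it is written in terms of max pooling, using the identity min(x) = -max(-x). This is convenient when only a max pooling operation is available. The helper names and the 4 × 4 values are hypothetical, and a 2 × 2 filter with stride 2 is assumed.

```python
from typing import List

Matrix = List[List[float]]


def max_pool2d(fmap: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Largest value of each size x size patch (no padding)."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    return [[max(fmap[i * stride + di][j * stride + dj]
                 for di in range(size) for dj in range(size))
             for j in range(out_w)]
            for i in range(out_h)]


def min_pool2d(fmap: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Smallest value of each patch, computed as -max_pool(-fmap)."""
    negated = [[-v for v in row] for row in fmap]
    return [[-v for v in row] for row in max_pool2d(negated, size, stride)]


# Hypothetical 4 x 4 feature map (not the values from the figure)
fmap = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [3, 1, 1, 2],
    [0, 2, 7, 4],
]
print(min_pool2d(fmap))  # [[1, 0], [0, 1]]
```
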

Why do we use Min pooling?

As the example shows, we use min pooling when we need to extract the least prominent features from the data. For image data, it extracts features with low intensity values, or features without sharp edges.

Average pooling

In average pooling, the layer computes the average of the elements in each patch of the feature map. The whole feature map is downsampled to the average values of the regions the filter covers. So max pooling gives the most prominent feature of each patch, whereas average pooling gives the average of the covered area.

The image below shows an example of average pooling applied to a 4 × 4 feature map, producing a 2 × 2 output.

In the image, the 4 × 4 grid on the left is a feature map with its values written in. When the average pooling layer is applied to this map, it generates a 2 × 2 feature map in which each value is the average of the corresponding patch.
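The averaging step can be sketched in plain Python like this. The feature map values are hypothetical, and the sketch assumes a 2 × 2 filter with stride 2 and no padding.

```python
from typing import List

Matrix = List[List[float]]


def avg_pool2d(fmap: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Mean of each size x size patch (no padding)."""
    out_h = (len(fmap) - size) // stride + 1
    out_w = (len(fmap[0]) - size) // stride + 1
    pooled = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            top, left = i * stride, j * stride
            # Every value in the patch contributes equally to the output
            patch = [fmap[r][c]
                     for r in range(top, top + size)
                     for c in range(left, left + size)]
            row.append(sum(patch) / len(patch))
        pooled.append(row)
    return pooled


# Hypothetical 4 x 4 feature map (not the values from the figure)
fmap = [
    [1, 3, 2, 1],
    [4, 6, 5, 0],
    [3, 1, 1, 2],
    [0, 2, 7, 4],
]
print(avg_pool2d(fmap))  # [[3.5, 2.0], [1.5, 3.5]]
```

For comparison, keeping the maximum of each patch of the same map would give `[[6, 5], [3, 7]]`. Max pooling keeps the strongest activation, while average pooling blends all four values.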

Why do we use Average Pooling?

Pooling gives us some amount of translation invariance, and it is faster to compute than convolution. Average pooling in particular helps extract smooth features. For image data, each output of an average pooling layer is a blend of all the colors present in the region covered by the filter. So if the values in the data, or the colors in an image, are distributed smoothly, average pooling is a good choice.

Global Pooling Layer

So far we have seen how to downsample data with different pooling layers. After this step, the downsampled data is flattened into a vector and fed directly to the fully connected layers, whose activation functions produce the output for the task. This procedure acts as a bridge between the convolutional structure and the traditional neural network.

After the convolutional structure has extracted the features, these final features are classified in the traditional way by fully connected layers, which are prone to overfitting. One remedy is dropout, which improves the model's ability to generalize and helps prevent overfitting. With the commonly used rate of 0.5, dropout randomly sets about half of the activations to zero during training. Another strategy is the global pooling layer.
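Here is a minimal plain-Python sketch of dropout. It assumes the inverted-dropout convention used by common frameworks such as PyTorch: during training, the surviving activations are scaled by 1 / (1 - rate), so nothing needs to change at inference. The `dropout` function and its parameters are hypothetical.

```python
import random
from typing import List, Optional


def dropout(activations: List[float], rate: float = 0.5, training: bool = True,
            rng: Optional[random.Random] = None) -> List[float]:
    """Inverted dropout (hypothetical helper).

    During training, each activation is zeroed with probability `rate` and the
    survivors are scaled by 1 / (1 - rate), so each activation keeps the same
    expected value. At inference the input passes through unchanged.
    """
    if not 0.0 <= rate < 1.0:
        raise ValueError("rate must be in [0, 1)")
    if not training:
        return list(activations)
    if rng is None:
        rng = random.Random()
    keep_prob = 1.0 - rate
    return [a / keep_prob if rng.random() < keep_prob else 0.0
            for a in activations]


acts = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0]
print(dropout(acts, rng=random.Random(0)))  # each entry is either 0.0 or doubled
print(dropout(acts, training=False))        # unchanged at inference
```
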

Global pooling layers can replace the flatten layer in a CNN. A flatten layer takes a tensor of any size and transforms it into a one-dimensional tensor that keeps every value. Such a tensor holds an excess of values, and this can increase the probability of overfitting.
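One way to see why flattening invites overfitting is to compare how many parameters the next fully connected layer needs in each case. The sizes below, a 7 × 7 × 512 final feature map and 10 output classes, are hypothetical and were chosen only for illustration.

```python
def dense_params(inputs: int, outputs: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return inputs * outputs + outputs


# Hypothetical final feature map (7 x 7, 512 channels) and 10 classes
h, w, c, classes = 7, 7, 512, 10

flatten_inputs = h * w * c   # flatten keeps every value: 25088
global_pool_inputs = c       # global pooling keeps one value per channel: 512

print(dense_params(flatten_inputs, classes))      # 250890
print(dense_params(global_pool_inputs, classes))  # 5130
```

With global pooling, the size of the dense layer no longer depends on the spatial size of the feature map. It depends only on the number of channels.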

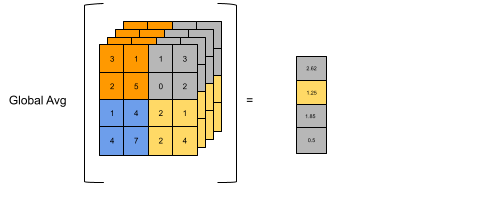

A global pooling layer takes the average or the maximum of each entire feature map, and the resulting vector can be fed directly into the softmax layer. This reduces the chance of overfitting. Accordingly, we can divide global pooling layers into two types.

- Global average pooling

- Global max pooling

Global Average Pooling

The global average pooling layer takes the average of each feature map and then sends these average values to the activation layer.

Global Max Pooling

The global max pooling layer takes the maximum of each feature map and sends these maximum values directly to the activation layer.
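Both global pooling variants reduce each whole feature map to a single number. Below is a plain-Python sketch. It assumes a hypothetical channels-first layout (`[channel][row][col]`) and hypothetical helper names, not any framework's API.

```python
from typing import List

FeatureMaps = List[List[List[float]]]  # indexed as [channel][row][col]


def global_avg_pool(maps: FeatureMaps) -> List[float]:
    """One value per channel: the mean over the whole h x w map."""
    return [sum(sum(row) for row in m) / (len(m) * len(m[0])) for m in maps]


def global_max_pool(maps: FeatureMaps) -> List[float]:
    """One value per channel: the maximum over the whole h x w map."""
    return [max(max(row) for row in m) for m in maps]


# Hypothetical 2 x 2 feature maps with 3 channels
maps = [
    [[1, 2], [3, 4]],
    [[0, 0], [0, 8]],
    [[5, 5], [5, 5]],
]
print(global_avg_pool(maps))  # [2.5, 2.0, 5.0]
print(global_max_pool(maps))  # [4, 8, 5]
```

The output vector has one entry per channel regardless of the height and width of the maps. This is why it can be passed straight to a softmax or dense layer.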

The image below shows how the global average pooling layer works.

Why do we use the Global Pooling Layer?

As we have discussed, a global average pooling layer can be used in place of the flatten layer, which helps protect the CNN from overfitting. A global max pooling layer can also serve as a replacement for the flatten layer.

We can see global average pooling as a structural regularizer that explicitly enforces feature maps to be confidence maps of categories.

Conclusion

In this article, we have seen the different types of pooling layers and how they can help improve the results of a CNN. We have also seen a general overview of CNNs. For a better overview of how to program these layers, I have implemented all of the pooling layers. You can cross-check the Python code using this link.
