The major thrust of AI is the development of computer functions associated with human intelligence, such as reasoning, learning, and problem-solving, which can be particularly helpful in the markets. Trading and investing come down to a series of reasoning steps and number crunching: working from the available data to solve the problem of forecasting where current stock prices will go next. Manual fundamental and technical analysis is falling out of fashion. Machine learning is being applied to trading so that a system can learn the complexity of trading automatically and refine its algorithms to find the best available trades. In the past decade, trading largely relied on portfolios managed by human traders, with the aim of letting everyone earn a profit. With the help of AI, the underlying data points can be analyzed quickly and accurately.

Using such data points, we can analyze current market trends and identify patterns at high speed, two capabilities that smart trading depends on. AI can also analyze a stock by performing sentiment analysis on news headlines, social media reviews, and comments posted on other platforms. Machine learning models typically record outcomes along with the parameters that produced them, which helps them analyze the stock market more effectively over time.

Data often helps in reaching better solutions, particularly in probability-based, sentiment-led activities like stock market trading. Even so, many financial engineers still believe that a machine left to its own devices cannot beat the stock market. With advances in technology, powerful computers can crunch enormous numbers of data points in minutes. This makes them capable of detecting historical and recurring patterns that are often hidden from human investors. We simply cannot process such data or spot these patterns at the rate a machine can. AI can evaluate thousands of stocks in moments, which adds speed to trading at a time when every millisecond counts, and that makes AI well suited to automated trading. AI systems are also being built to learn from their own mistakes: automated trading bots are continuously fine-tuned and fed large volumes of new data to improve their performance.

What Is OpenAI Gym AnyTrading?

AnyTrading is an open-source collection of OpenAI Gym environments for reinforcement learning-based trading algorithms. Its environments target two of the biggest markets: FOREX and stocks. AnyTrading aims to make it easier to develop and test reinforcement learning-based algorithms for market trading. It does this through three Gym environments: TradingEnv (the base class), ForexEnv, and StocksEnv. AnyTrading can help in studying stock market trends and in building analyses that support data-driven decisions.
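In the stock and FOREX environments, the agent has two actions, Sell (0) and Buy (1). It is always in one of two positions, Short or Long. A trade happens only when the action disagrees with the current position. In StocksEnv's profit accounting, only Long positions earn or lose money.

The snippet below re-creates that decision logic with the standard library, as a sketch based on our reading of gym-anytrading. It does not import the library. The enum names mirror gym-anytrading's Actions and Positions, but `next_position` is a hypothetical helper.

```python
from enum import Enum


class Actions(Enum):
    Sell = 0
    Buy = 1


class Positions(Enum):
    Short = 0
    Long = 1

    def opposite(self):
        return Positions.Short if self == Positions.Long else Positions.Long


def next_position(position, action):
    """Hypothetical helper: return (new_position, traded) after taking `action`."""
    traded = (
        (action == Actions.Buy and position == Positions.Short)
        or (action == Actions.Sell and position == Positions.Long)
    )
    return (position.opposite() if traded else position), traded


# Walk through a short action sequence, starting from Short (no shares held)
position = Positions.Short
for action in [Actions.Buy, Actions.Buy, Actions.Sell, Actions.Sell]:
    position, traded = next_position(position, action)
    print(action.name, "->", position.name, "(trade)" if traded else "")
```

A repeated Buy while already Long is simply a "hold". This is why a policy can output the same action many times in a row without trading.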

Getting Started With Code

In this article, we will implement a reinforcement learning-based market trading model, creating a trading environment with OpenAI Gym AnyTrading. Using historical GameStop (GME) price data, we will train and evaluate a reinforcement learning agent in that Gym environment. The following code is partially inspired by a video tutorial on Gym AnyTrading, whose link can be found here.

Installing the Library

The first step is to install the necessary libraries. To do so, run the following command:

```
!pip install tensorflow-gpu==1.15.0 tensorflow==1.15.0 stable-baselines gym-anytrading gym
```

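Stable-Baselines will give us the reinforcement learning algorithm, and Gym AnyTrading will give us our trading environment. Stable-Baselines (v2) runs only on TensorFlow 1.x, and TensorFlow 1.15 supports Python 3.7 at most, so this setup needs an older Python runtime. Recent gym-anytrading releases have also moved to Gymnasium, whose reset and step signatures differ from the code below, so you may need to pin an older release.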

Importing the Dependencies

Now let us import the required dependencies to create a base framework for our model. We are going to use the A2C reinforcement learning algorithm to create our market trading model.
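A2C (Advantage Actor-Critic) learns two things from short rollouts: a policy (the actor) and a state-value estimate (the critic). In Stable-Baselines, the default rollout length is n_steps=5 and the default discount is gamma=0.99. This is consistent with the training log later in this article, where total_timesteps grows by 5 per update.

The plain-Python sketch below shows only the return and advantage computation for one rollout. The function names and numbers are hypothetical. The library's actual implementation is vectorized and also includes the policy-gradient, value, and entropy losses.

```python
def n_step_returns(rewards, dones, last_value, gamma=0.99):
    """Discounted returns for one rollout, bootstrapped from the critic's last estimate.

    dones[t] is True when the episode ended after step t; no value is carried
    across that boundary.
    """
    returns = [0.0] * len(rewards)
    running = last_value
    for t in reversed(range(len(rewards))):
        if dones[t]:
            running = 0.0
        running = rewards[t] + gamma * running
        returns[t] = running
    return returns


def advantages(returns, values):
    """Advantage: how much better each step turned out than the critic predicted."""
    return [ret - val for ret, val in zip(returns, values)]


# Hypothetical 5-step rollout (matching the default n_steps=5)
rewards = [0.0, 1.5, -0.5, 0.0, 2.0]
dones = [False, False, False, False, False]
values = [0.8, 1.0, 0.4, 0.9, 1.2]  # critic's estimate for each visited state
rets = n_step_returns(rewards, dones, last_value=1.1)
print([round(r, 3) for r in rets])
print([round(a, 3) for a in advantages(rets, values)])
```

The actor is pushed toward actions with a positive advantage, and the critic is regressed toward the returns.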

```python
# Importing dependencies
import gym
import gym_anytrading

# Stable-Baselines: vectorized environment wrapper and the A2C algorithm
from stable_baselines.common.vec_env import DummyVecEnv
from stable_baselines import A2C

# Processing libraries
import numpy as np
import pandas as pd
from matplotlib import pyplot as plt
```

Processing our Dataset

Now that our pipeline is set up, let’s load the GME market data. You can download the dataset using the link here. You can also run this model on other relevant datasets, such as Bitcoin price data.

#loading our dataset

df = pd.read_csv('/content/gmedata.csv')

#viewing first 5 columns

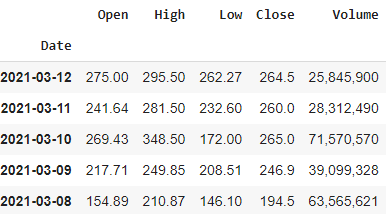

df.head()

```python
# Convert the Date column to datetime type
df['Date'] = pd.to_datetime(df['Date'])
df.dtypes
```

Output:

```
Date      datetime64[ns]
Open             float64
High             float64
Low              float64
Close            float64
Volume            object
dtype: object
```

```python
# Set the Date column as the index
df.set_index('Date', inplace=True)
df.head()
```
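The dtypes output above shows Volume as object. This usually means the numbers were read as text, for example because they contain thousands separators. StocksEnv only reads the Close column, so this does not break the environment.

The rows do need to be in chronological order, however, because the environment walks through the prices by position. Price downloads are sometimes sorted newest-first; whether your copy of gmedata.csv has either issue is an assumption to check.

The sketch below applies both fixes to a few hypothetical rows in the same layout. The values are invented and are not GME data.

```python
import io

import pandas as pd

# Hypothetical rows in the same layout as the GME CSV, newest row first
csv_text = """Date,Open,High,Low,Close,Volume
01/06/2021,12.0,12.5,11.5,12.0,"3,000,000"
01/05/2021,11.0,12.0,10.5,11.5,"2,500,000"
01/04/2021,10.0,11.0,9.5,10.5,"2,000,000"
"""

df = pd.read_csv(io.StringIO(csv_text))
df["Date"] = pd.to_datetime(df["Date"], format="%m/%d/%Y")
# Strip the thousands separators and convert Volume to a number
df["Volume"] = pd.to_numeric(df["Volume"].str.replace(",", "", regex=False))
# Sort oldest-first so row position follows time, then index by date
df = df.sort_values("Date").set_index("Date")
print(df)
print(df.dtypes)
```

On the article's df, which is already indexed by date, the sorting step would be `df.sort_index(inplace=True)`.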

We will now pass the data to AnyTrading and create the Gym environment that our agent will later train on.

```python
# Pass the data and create the environment
env = gym.make('stocks-v0', df=df, frame_bound=(5, 100), window_size=5)
```

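The window_size parameter specifies how many previous prices the trading bot sees when deciding whether to place a trade. The frame_bound parameter sets the range of rows in df that the environment trades over, here from row 5 up to row 100. The window_size rows before the start of that range serve as the first price history.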
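To make frame_bound and window_size concrete, the sketch below uses NumPy to rebuild the kind of observation StocksEnv produces. As far as we can tell from the gym-anytrading source, there are two features: the closing price and its one-step change. Each observation is the last window_size rows of those features.

The function names and the toy price series are hypothetical, and the exact slicing may differ between library versions.

```python
import numpy as np


def signal_features(close, frame_bound, window_size):
    """Close price and its one-step difference for the rows the env would use."""
    start, end = frame_bound
    if start < window_size:
        raise ValueError("frame_bound[0] must be >= window_size")
    # Keep window_size rows of history before the frame, plus the frame itself
    prices = np.asarray(close, dtype=float)[start - window_size:end]
    diff = np.insert(np.diff(prices), 0, 0.0)  # first row has no previous price
    return prices, np.column_stack((prices, diff))


def observation(features, tick, window_size):
    """The window_size most recent feature rows ending at `tick` (inclusive)."""
    return features[tick - window_size + 1:tick + 1]


close = np.arange(100.0, 130.0)  # hypothetical 30-day close series
prices, features = signal_features(close, frame_bound=(5, 20), window_size=5)
print(prices.shape, features.shape)  # rows kept = end - (start - window_size)
print(observation(features, tick=len(prices) - 1, window_size=5))
```

Each observation therefore has shape (window_size, 2). A larger window gives the agent more history per decision, at the cost of a bigger input.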

Testing our Environment

Now that the environment is set up, let’s test it with an agent that takes random actions.

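```python
# Run one episode with random actions to test the environment
state = env.reset()
while True:
    action = env.action_space.sample()  # pick Buy or Sell at random
    n_state, reward, done, info = env.step(action)
    if done:
        print("info", info)
        break

# Plot the price series with the positions the agent took
plt.figure(figsize=(15, 6))
plt.cla()
env.render_all()
plt.show()
```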

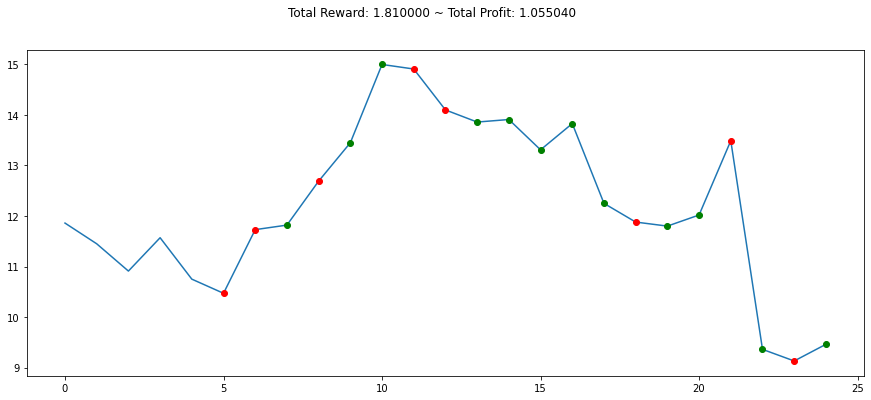

As we can see, our agent has bought and sold stocks at random. The total profit reported in info appears to be above 1, which means these random trades happened to end with more than the starting capital. Since these actions were random, let’s now properly train our model to make better trades.
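The total_profit value in info starts at 1.0. It is multiplied by the after-fee return of each completed long trade, so a value above 1 means the run ended with more than it started with.

The sketch below re-implements that bookkeeping in plain Python, based on our reading of StocksEnv. The fee rates (0.5% on buying, 1% on selling) are assumed defaults that may differ in your installed version, and `long_only_profit` and `random_walk` are hypothetical helpers. Running the profit calculation on many random action sequences over a hypothetical random-walk price series shows how often a purely random agent ends above 1 by chance.

```python
import random

SELL, BUY = 0, 1  # assumed action encoding, mirroring gym-anytrading


def long_only_profit(prices, actions, fee_buy=0.005, fee_sell=0.01):
    """Compound the return of each long round trip, starting from 1.0."""
    profit = 1.0
    entry = None  # price at which the open long position was bought
    for i, (price, action) in enumerate(zip(prices, actions)):
        is_last = i == len(prices) - 1
        if entry is None and action == BUY:
            entry = price
        elif entry is not None and (action == SELL or is_last):
            # An open position is closed on Sell or at the end of the episode
            profit *= (1 - fee_buy) * (1 - fee_sell) * price / entry
            entry = None
    return profit


def random_walk(n, start=100.0, seed=0):
    """Hypothetical price series: a multiplicative random walk."""
    rng = random.Random(seed)
    prices = [start]
    for _ in range(n - 1):
        prices.append(prices[-1] * (1 + rng.gauss(0, 0.02)))
    return prices


prices = random_walk(100)
rng = random.Random(42)
results = [
    long_only_profit(prices, [rng.choice([SELL, BUY]) for _ in prices])
    for _ in range(1000)
]
share_above_one = sum(p > 1 for p in results) / len(results)
print(f"random agents ending above 1.0: {share_above_one:.1%}")
```

A single random episode ending above 1 is therefore weak evidence on its own. Comparing against many random runs gives a baseline for judging the trained agent.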

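Training our Agent

Next, we set up the environment for training our reinforcement learning agent. The environment is wrapped in DummyVecEnv, Stable-Baselines' vectorized environment wrapper. The agent uses A2C with an LSTM-based policy (MlpLstmPolicy).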

```python
# Set up the environment for training, wrapped in a vectorized env
env_maker = lambda: gym.make('stocks-v0', df=df, frame_bound=(5, 100), window_size=5)
env = DummyVecEnv([env_maker])

# Apply the A2C algorithm with an LSTM policy
model = A2C('MlpLstmPolicy', env, verbose=1)

# Train for a fixed number of timesteps
model.learn(total_timesteps=1000)
```

Output:

```
---------------------------------
| explained_variance | 0.0016   |
| fps                | 3        |
| nupdates           | 1        |
| policy_entropy     | 0.693    |
| total_timesteps    | 5        |
| value_loss         | 111      |
---------------------------------
---------------------------------
| explained_variance | -2.6e-05 |
| fps                | 182      |
| nupdates           | 100      |
| policy_entropy     | 0.693    |
| total_timesteps    | 500      |
| value_loss         | 2.2e+04  |
---------------------------------
---------------------------------
| explained_variance | 0.0274   |
| fps                | 244      |
| nupdates           | 200      |
| policy_entropy     | 0.693    |
| total_timesteps    | 1000     |
| value_loss         | 0.0663   |
```
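Two of these columns are easy to interpret:

- policy_entropy stays at 0.693 in every printout. That is ln 2, the largest possible entropy for a choice between two actions. It indicates the policy's Buy/Sell probabilities are still close to 50/50 at this point in training.
- explained_variance measures how well the critic's value predictions fit the observed returns, as 1 - Var(returns - predictions) / Var(returns). Values near 0, as in this log, mean the critic explains almost none of that variance yet.

A small check in Python follows. The helper names are ours. `explained_variance` is re-implemented here following the formula used by the Stable-Baselines utility of that name.

```python
import math

import numpy as np


def bernoulli_entropy(p):
    """Entropy (in nats) of a two-action policy that picks Buy with probability p."""
    return -sum(q * math.log(q) for q in (p, 1 - p) if q > 0)


def explained_variance(y_pred, y_true):
    """1 - Var(y_true - y_pred) / Var(y_true); NaN when y_true is constant."""
    y_pred, y_true = np.asarray(y_pred, float), np.asarray(y_true, float)
    var_y = np.var(y_true)
    return np.nan if var_y == 0 else 1 - np.var(y_true - y_pred) / var_y


print(round(bernoulli_entropy(0.5), 3))  # 0.693, i.e. ln 2
print(round(bernoulli_entropy(0.9), 3))  # a more decisive policy has lower entropy
print(explained_variance([1.0, 2.0, 3.0], [1.0, 2.0, 3.0]))  # perfect critic
print(explained_variance([2.0, 2.0, 2.0], [1.0, 2.0, 3.0]))  # always predicting the mean
```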

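```python
# Set up a fresh environment on a later slice of the data for evaluation
env = gym.make('stocks-v0', df=df, frame_bound=(90, 110), window_size=5)
obs = env.reset()
states = None  # LSTM hidden state, carried from one step to the next
while True:
    # The model was trained on a vectorized env, so add a batch dimension
    obs = obs[np.newaxis, ...]
    action, states = model.predict(obs, state=states)
    obs, rewards, done, info = env.step(action)
    if done:
        print("info", info)
        break

# Plot the trades made by the trained agent
plt.figure(figsize=(15, 6))
plt.cla()
env.render_all()
plt.show()
```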

As we can see, the trained agent now places fewer and less random trades. In this run it also ends with a profit, timing its buys and sells more deliberately. Note that the evaluation range (90, 110) partly overlaps the training range (5, 100), so some of these prices were already seen during training.
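One way to judge the trained agent's result is to compare info['total_profit'] against simply buying at the start of the evaluation window and selling at the end. The sketch below computes that benchmark under the same assumed fee rates as StocksEnv's defaults (0.5% to buy, 1% to sell).

Both helpers are hypothetical, and the price list is a placeholder, not the GME data.

```python
def buy_and_hold_profit(prices, fee_buy=0.005, fee_sell=0.01):
    """Profit multiple from buying at the first price and selling at the last."""
    return (1 - fee_buy) * (1 - fee_sell) * prices[-1] / prices[0]


def beats_buy_and_hold(agent_profit, prices, **fees):
    """True if the agent's total_profit exceeds the buy-and-hold multiple."""
    return agent_profit > buy_and_hold_profit(prices, **fees)


# Placeholder prices for illustration only
window_prices = [20.0, 21.5, 19.0, 22.0, 24.0]
print(buy_and_hold_profit(window_prices))
print(beats_buy_and_hold(1.05, window_prices))
```

On the article's data, a rough equivalent would be `beats_buy_and_hold(info['total_profit'], df['Close'].iloc[90:110].tolist())`.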

End Notes

In this article, we looked at how artificial intelligence can be applied to market trading to support the art of buying and selling. We also built a reinforcement learning model in which a trained agent buys and sells stocks, and in our evaluation run it ended with a profit. The implementation above is available as a Colab notebook, which can be accessed using the link here.

Happy Learning!