The term “curse of dimensionality” was coined by the mathematician Richard Bellman in his 1957 book “Dynamic Programming”. According to him, the curse of dimensionality is the problem caused by the exponential increase in volume associated with adding extra dimensions to Euclidean space.

In practice, the curse of dimensionality means that, for a fixed amount of data, the error tends to increase as the number of features grows. It also refers to the fact that algorithms are harder to design in high dimensions and often have a running time that is exponential in the number of dimensions. A higher number of dimensions theoretically allows more information to be stored, but in practice it rarely helps, because real-world data is more likely to contain noise and redundancy.

Gathering a huge amount of data may lead to the dimensionality problem: many of the dimensions collected are highly noisy, carry little information and bring no significant benefit. The underlying reason for the curse of dimensionality is the explosive growth of spatial volume as dimensions are added.

The difficulty in analysing high-dimensional data results from the conjunction of two effects.

- High-dimensional spaces have geometrical properties that are counter-intuitive and far from the properties observed in two- or three-dimensional spaces.

- Data analysis tools are most often designed with intuitive properties and low-dimensional examples in mind, and they are usually best illustrated in two- or three-dimensional spaces.

The difficulty is that those tools are also used when data are high-dimensional and more complex. In that setting it is easy to lose the intuition for how a tool behaves and thus to draw incorrect conclusions.
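One counter-intuitive property can be checked numerically: as the dimension grows, the nearest and the farthest point from a query end up at almost the same distance. The sketch below is only an illustration under assumed settings (uniform random points in the unit hypercube, Euclidean distance, and a hypothetical helper named `relative_contrast`); it is not taken from the original article.

```python
import numpy as np


def relative_contrast(dim, n_points=1000, seed=0):
    """Return (d_max - d_min) / d_min for one random query point.

    Points and query are drawn uniformly from the unit hypercube
    (an assumption made for this illustration).
    """
    rng = np.random.default_rng(seed)
    points = rng.random((n_points, dim))
    query = rng.random(dim)
    # Euclidean distance from the query to every point.
    dists = np.linalg.norm(points - query, axis=1)
    return (dists.max() - dists.min()) / dists.min()


for dim in (2, 10, 100, 1000):
    print(dim, relative_contrast(dim))
```

In two dimensions the farthest point is many times farther away than the nearest one, while in a thousand dimensions the difference is only a small fraction of the nearest distance. A tool that relies on "near" versus "far" therefore behaves very differently from what the low-dimensional picture suggests.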

Domains of CoD

Some of the domains in which the curse of dimensionality arises are discussed below.

Anomaly Detection

In anomaly detection, high-dimensional data raises several problems: a significant number of attributes may be irrelevant; different subspaces produce incomparable scores; the search space is so large that a hypothesis can be found for every desired significance level; and certain objects occur more frequently in neighbor lists than others.
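The last point, that some objects appear in far more neighbor lists than others, can be sketched with a small experiment. The code below assumes standard normal data, Euclidean distance and a hypothetical helper `max_k_occurrence`; it counts how often each point appears among the k nearest neighbors of the other points and reports the largest count.

```python
import numpy as np


def max_k_occurrence(dim, n_points=1000, k=10, seed=0):
    """Largest number of k-nearest-neighbor lists any single point appears in."""
    rng = np.random.default_rng(seed)
    x = rng.standard_normal((n_points, dim))
    # Squared Euclidean distances between all pairs via the Gram matrix.
    sq_norms = (x ** 2).sum(axis=1)
    sq_dists = sq_norms[:, None] + sq_norms[None, :] - 2.0 * (x @ x.T)
    # A point must not count as its own neighbor.
    np.fill_diagonal(sq_dists, np.inf)
    # Indices of the k nearest neighbors of every point.
    neighbors = np.argsort(sq_dists, axis=1)[:, :k]
    # How many neighbor lists each point appears in (the average is always k).
    counts = np.bincount(neighbors.ravel(), minlength=n_points)
    return int(counts.max())


for dim in (3, 100):
    print(dim, max_k_occurrence(dim))
```

Every point appears in k lists on average, but in the high-dimensional case a few points are neighbors of a disproportionate share of the data. Neighbor-based anomaly scores then depend heavily on those few points.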

Combinatorics

Here the curse of dimensionality arises because the number of possible combinations of inputs grows rapidly as inputs are added, and the complexity grows with it.
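As a minimal sketch of this growth, assume every input can take the same number of discrete values. The number of combinations is then that number raised to the power of the number of inputs. The function names below are illustrative only.

```python
from itertools import product


def count_combinations(values_per_input, n_inputs):
    """Number of distinct input combinations: values_per_input ** n_inputs."""
    return values_per_input ** n_inputs


def enumerate_combinations(values, n_inputs):
    """Explicitly list every combination (only feasible for small n_inputs)."""
    return list(product(values, repeat=n_inputs))


# With 10 possible values per input, each extra input multiplies the count by 10.
for n_inputs in (1, 2, 3, 10, 20):
    print(n_inputs, count_combinations(10, n_inputs))
```

Listing the combinations explicitly, as `enumerate_combinations` does, quickly becomes impossible: with 10 values per input, 20 inputs already give 10^20 combinations.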

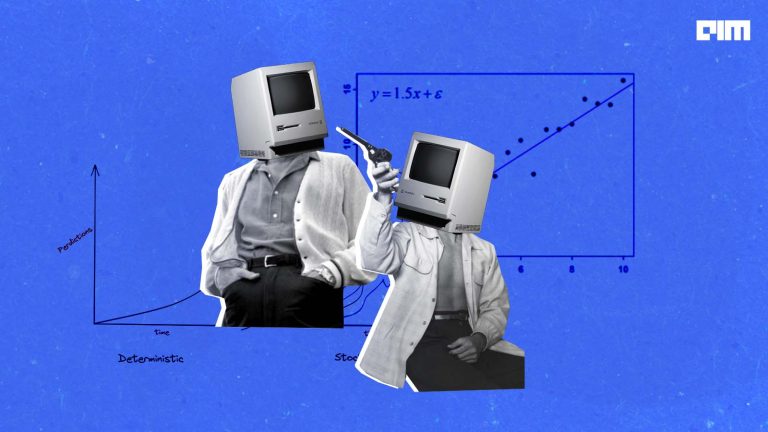

Machine Learning

One of the key ingredients in the successful development of learning algorithms is having enough data for learning, so that the data fill the space, or the part of the space, where the model must be valid. An increase in the dimensionality of the data makes the data sparse, because the volume to be filled grows exponentially. This sparsification is the first consequence of what is called the curse of dimensionality.

In machine learning, a small increase in dimensionality requires a large increase in the volume of data in order to maintain a similar level of performance. Thus the curse of dimensionality is an expression for all the phenomena that appear with high-dimensional data and that most often have unfortunate consequences for the behavior and performance of learning algorithms.
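A simple calculation illustrates the sparsity. Assume the data are spread uniformly over a unit hypercube; a sub-cube that captures a given fraction of the data must then have an edge length of that fraction raised to the power 1/d. The helper `edge_length` below is a hypothetical name for this formula.

```python
def edge_length(fraction, dim):
    """Edge of a sub-cube holding `fraction` of uniformly spread data in `dim` dimensions."""
    return fraction ** (1.0 / dim)


# Edge length needed to capture 1% of the data as the dimension grows.
for dim in (1, 2, 3, 10, 100):
    print(dim, edge_length(0.01, dim))
```

In one dimension, 1% of the data sits in an interval covering 1% of the axis. In ten dimensions, the sub-cube has to span about 63% of every axis to capture the same 1%, so a "local" neighborhood is no longer local unless far more data are collected.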

How To Combat The CoD

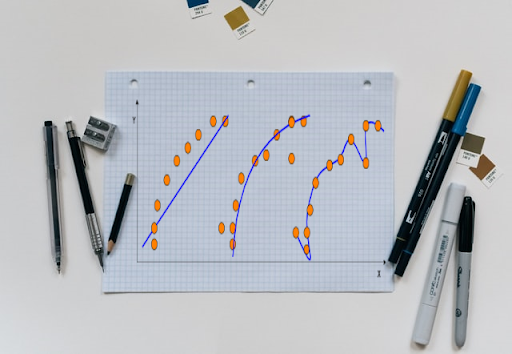

Dimensionality Reduction

Dimensionality reduction is a method of converting high-dimensional variables into lower-dimensional variables while preserving the essential information they carry. To overcome the curse of dimensionality, dimensionality reduction is used to reduce the feature space to a set of principal features. The reduced data contains no extra variables, which makes the data easier to analyse for machine learning algorithms and leads to faster results.


Regularisation

Part of the problem with the curse of dimensionality is that parameter estimates become unstable. Regularising these estimates helps to stabilise them, so that the parameters can be estimated more reliably.
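As one possible illustration, the sketch below compares ordinary least squares with ridge regression (an L2 penalty) on synthetic data with almost as many features as samples. The choice of ridge, the data, the penalty strength `alpha=5.0` and the function names are all assumptions made for this example; the article does not prescribe a specific regulariser.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: 30 samples, 25 features, only 3 of which matter.
n_samples, n_features = 30, 25
X = rng.standard_normal((n_samples, n_features))
true_coef = np.zeros(n_features)
true_coef[:3] = [2.0, -1.0, 0.5]
y = X @ true_coef + 0.5 * rng.standard_normal(n_samples)


def ols(X, y):
    """Ordinary least squares (minimum-norm solution)."""
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def ridge(X, y, alpha):
    """Ridge regression in closed form: (X^T X + alpha * I)^-1 X^T y."""
    identity = np.eye(X.shape[1])
    return np.linalg.solve(X.T @ X + alpha * identity, X.T @ y)


coef_ols = ols(X, y)
coef_ridge = ridge(X, y, alpha=5.0)

# The penalty shrinks the coefficient vector towards zero.
print("norm OLS:  ", np.linalg.norm(coef_ols))
print("norm ridge:", np.linalg.norm(coef_ridge))
# Distance of each estimate from the coefficients used to generate the data.
print("error OLS:  ", np.linalg.norm(coef_ols - true_coef))
print("error ridge:", np.linalg.norm(coef_ridge - true_coef))
```

The penalty always shrinks the coefficient vector. Whether it also brings the estimate closer to the true coefficients depends on the data and on `alpha`, which in practice is usually chosen by cross-validation.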

PCA

PCA (Principal Component Analysis) is one of the most traditional tools for dimensionality reduction. It transforms the data into the most informative space, thereby allowing the use of fewer dimensions that are almost as informative as the original data. But since it is a linear tool, nonlinear relations between the components of the initial data may be lost in the preprocessing.
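A minimal sketch of PCA using NumPy's singular value decomposition is shown below. The synthetic data (ten observed features generated from two latent factors plus a little noise) and the helper name `pca` are assumptions made for illustration. In practice a library implementation would normally be used.

```python
import numpy as np


def pca(X, n_components):
    """Project X onto its first principal components.

    Returns the projected data and the fraction of variance
    explained by each retained component.
    """
    # Centre each feature so the components describe variance, not the mean.
    X_centered = X - X.mean(axis=0)
    # Rows of Vt are the principal directions, ordered by singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    explained_ratio = (S ** 2) / (S ** 2).sum()
    return X_centered @ components.T, explained_ratio[:n_components]


# Synthetic data: 10 features driven by 2 latent factors plus small noise.
rng = np.random.default_rng(0)
latent = rng.standard_normal((200, 2))
mixing = rng.standard_normal((2, 10))
X = latent @ mixing + 0.01 * rng.standard_normal((200, 10))

Z, ratio = pca(X, n_components=2)
print(Z.shape, ratio.sum())
```

Because the ten features here are linear mixtures of two factors, two components retain almost all of the variance. If the features were related nonlinearly, the same projection could discard structure, which is the limitation noted above.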

References