Generative adversarial networks (GANs) have achieved state-of-the-art results in image and video generation, and have been successfully applied to unsupervised feature learning, among many other applications. GANs have developed rapidly in recent years; however, their prowess at audio generation has largely gone unnoticed.

To explore the audio generation abilities of GANs, a team of DeepMind researchers published a paper introducing a new model called GAN-TTS.

Audio Generation With Deep Learning So Far

Text-to-Speech (TTS) is the process of converting text into human-like speech.

Many audio generation models operate in the waveform domain. They directly model the amplitude of the waveform as it evolves over time. Autoregressive models achieve this by factorising the joint distribution into a product of conditional distributions.
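Written out for a waveform x = (x_1, …, x_T) of T samples, this autoregressive factorisation is the standard chain rule of probability shown below. Sampling from such a model therefore takes T sequential steps, one per audio sample.

```latex
p(\mathbf{x}) = \prod_{t=1}^{T} p\left(x_t \mid x_1, \ldots, x_{t-1}\right)
```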

Invertible feed-forward models, by contrast, can be trained by distilling an autoregressive model using probability density distillation.
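For reference, in Parallel WaveNet (the best-known use of probability density distillation) the feed-forward student distribution P_S is trained to match the autoregressive teacher P_T by minimising the KL divergence below, which splits into a cross-entropy term and an entropy term. This is background from that line of work, not part of GAN-TTS.

```latex
D_{\mathrm{KL}}\left(P_S \,\|\, P_T\right) = H\left(P_S, P_T\right) - H\left(P_S\right)
```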

In the past, models such as Deep Voice 2, Deep Voice 3 and Tacotron 2 have achieved good results by first generating an intermediate representation of the desired output and then using a separate autoregressive model to turn it into a waveform and fill in any missing information. However, since these intermediate outputs are imperfect, the waveform model has the additional task of correcting their mistakes.

GANs have also been explored for audio: WaveGAN and GANSynth both applied GANs successfully, but to much simpler audio datasets.

The authors note that GANs had not yet been applied to large-scale audio generation, and GAN-TTS is their attempt to do so.

Overview Of GAN-TTS

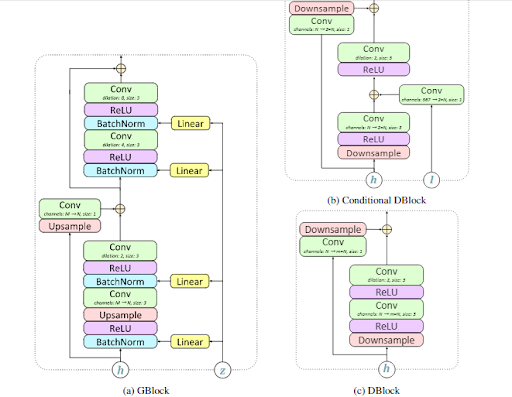

GAN-TTS is a generative adversarial network for text-conditional, high-fidelity speech synthesis. Its feed-forward generator, a convolutional neural network shown in the figure above, is coupled with an ensemble of discriminators that evaluate the generated (and real) audio on random windows of different sizes.

The inner workings of the architecture in both generator and discriminator can be summarised as follows:

- The generator has seven “GBlocks”, each containing two skip connections. The first skip connection performs upsampling if the output frequency is higher than the input frequency.

- The first skip connection also contains a size-1 convolution when the number of output channels does not match the number of input channels.

- The convolutions are preceded by Conditional Batch Normalisation. Blocks 3–7 gradually upsample the temporal dimension of the hidden representations.

- The final convolutional layer with Tanh activation then produces a single-channel audio waveform.

- The discriminators, in turn, consist of blocks (DBlocks) that are similar to the GBlocks used in the generator, but without batch normalisation.

- Instead of a single discriminator, GAN-TTS uses an ensemble of Random Window Discriminators (RWDs).

- Notably, the number of discriminators only affects the training computation requirements, as at inference time only the generator network is used.

- In the first layer of each discriminator, the input raw waveform is downsampled to a constant temporal dimension.

- The conditional discriminators have access to linguistic and pitch features and can measure whether the generated audio matches the input conditioning.
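To make the shapes above concrete, here is a minimal standard-library sketch of two pieces: how the generator's temporal rate grows block by block, and how a random window discriminator might cut a window and bring it to a constant temporal length. It assumes, based on our reading of the paper rather than anything stated in this article, 200 Hz conditioning features, per-GBlock upsampling factors of 1, 1, 2, 2, 2, 3 and 5 (so only blocks 3–7 upsample, ending at 24 kHz), and that "downsampling to a constant temporal dimension" is done by folding consecutive samples into the channel dimension. The window sizes, `BASE_LEN` and the helper names `trace_generator_rates`, `sample_window` and `fold_to_constant_length` are hypothetical illustrations, not the authors' code.

```python
import random

# Assumed values (our reading of the GAN-TTS paper; treat as illustrative):
CONDITIONING_HZ = 200                        # rate of linguistic/pitch features
GBLOCK_UPSAMPLE = [1, 1, 2, 2, 2, 3, 5]      # per-GBlock upsampling factors
WINDOW_SIZES = [240, 480, 960, 1920, 3600]   # hypothetical RWD window lengths (samples)
BASE_LEN = 240                               # hypothetical constant temporal dimension


def trace_generator_rates(in_hz=CONDITIONING_HZ, factors=GBLOCK_UPSAMPLE):
    """Return the temporal rate (Hz) of the hidden representation after each GBlock."""
    rates = []
    hz = in_hz
    for factor in factors:
        hz *= factor
        rates.append(hz)
    return rates


def sample_window(waveform, size, rng=random):
    """Pick a random contiguous window of `size` samples from `waveform`."""
    start = rng.randrange(len(waveform) - size + 1)
    return waveform[start:start + size]


def fold_to_constant_length(window, base_len=BASE_LEN):
    """Fold consecutive blocks of k samples into channels: length base_len*k -> base_len rows of k."""
    if len(window) % base_len:
        raise ValueError("window length must be a multiple of base_len")
    k = len(window) // base_len
    return [window[i * k:(i + 1) * k] for i in range(base_len)]


if __name__ == "__main__":
    print(trace_generator_rates())  # [200, 200, 400, 800, 1600, 4800, 24000]
    audio = [0.0] * 24000           # one second of silent 24 kHz audio
    for size in WINDOW_SIZES:
        folded = fold_to_constant_length(sample_window(audio, size))
        # Every window ends up with the same temporal length; only the channel count k varies.
        print(size, len(folded), len(folded[0]))
```

Folding rather than averaging keeps every input sample available to the discriminator while still letting windows of very different lengths share the same temporal size.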

The experimental results show that GAN-TTS is capable of generating high-fidelity speech: the best model achieves a mean opinion score (MOS) of 4.2, only 0.2 below state-of-the-art performance.

Conclusion

The researchers believe that the use of RWDs is the game-changer here, although they admit that they do not know exactly why.

They posit that RWDs work much better than the full discriminator because of the relative simplicity of the distributions that the former must discriminate between, and the number of different samples one can draw from these distributions.
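One rough way to read the "number of different samples" argument: a discriminator that sees the full clip gets exactly one input per clip, whereas a window discriminator can be fed any of many contiguous windows cut from it. The count below is a back-of-the-envelope illustration that assumes a 2-second training clip at 24 kHz and hypothetical window sizes of 240 to 3600 samples; `num_windows` is a hypothetical helper, not from the paper.

```python
def num_windows(clip_len, window):
    """Count the distinct contiguous windows of length `window` in a clip of `clip_len` samples."""
    return max(clip_len - window + 1, 0)


CLIP_LEN = 2 * 24000  # assumed: a 2-second clip at 24 kHz

if __name__ == "__main__":
    print("full clip", num_windows(CLIP_LEN, CLIP_LEN))  # full clip 1
    for window in [240, 480, 960, 1920, 3600]:            # hypothetical window sizes
        print(window, num_windows(CLIP_LEN, window))
```

Each clip therefore yields tens of thousands of distinct training inputs per window size, each drawn from a simpler, shorter-range distribution than the full clip.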

GAN-TTS is capable of generating high-fidelity speech with naturalness comparable to state-of-the-art models, and unlike autoregressive models, it is highly parallelizable thanks to an efficient feed-forward generator.

Though the widely popular WaveNet has been around for a while, it relies on generating audio sequentially, one sample at a time, which is undesirable for present-day applications. GANs, with their highly parallelizable feed-forward generators, make for a much better option for generating audio from text.
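The difference in dependency structure can be illustrated with a toy sketch. The "models" here are arbitrary placeholder functions, not WaveNet or GAN-TTS: in the autoregressive loop every sample needs the previous one, so the steps must run in order, while in the feed-forward version each output depends only on its own conditioning value, so the calls are independent and could be distributed across parallel hardware.

```python
import math


def autoregressive_generate(n_samples, step):
    """Toy autoregressive loop: sample t is computed from sample t-1, so steps run strictly in order."""
    samples = [0.0]
    for _ in range(n_samples - 1):
        samples.append(step(samples[-1]))
    return samples


def feed_forward_generate(conditioning, decode):
    """Toy feed-forward mapping: each output depends only on its own conditioning value,
    so the calls are independent and `map` could be swapped for a parallel map."""
    return list(map(decode, conditioning))


if __name__ == "__main__":
    ar = autoregressive_generate(5, lambda prev: math.tanh(0.9 * prev + 0.1))
    ff = feed_forward_generate([0.1, 0.2, 0.3, 0.4, 0.5], math.tanh)
    print(len(ar), len(ff))  # 5 5
```

For one second of 24 kHz audio, the autoregressive loop has a chain of 24,000 dependent steps, whereas the feed-forward mapping has no chain at all.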