In daily life, we usually experience sight and sound together. When you watch a movie, you see the actors and hear them deliver their lines at the same time. We have become thoroughly accustomed to receiving audio and visual feeds simultaneously. Artificial intelligence researchers are now trying to build systems that can process, analyse and understand visual events and their related sounds together, much as humans do.

Two research papers, Look, Listen, and Learn and Objects that Sound, presented by researchers at Google’s DeepMind, explore this question. The researchers say, “…We explore this observation by asking, what can be learnt by looking at and listening to a large number of unlabelled videos? By constructing an audio-visual correspondence learning task that enables visual and audio networks to be jointly trained from scratch”

Learning Multimodal Concepts

The DeepMind researchers set out to address several problems, and they demonstrate that:

- The networks can learn useful semantic concepts.

- Each of the two modalities (visuals and sounds) can be used to search for the other.

- A sound can be mapped to the visual object in the scene that produces it.

The researchers also discuss other approaches to multimodal learning and where they fall short. The aim of the project is not novel: in the past, researchers have built paired datasets such as image-text and audio-vision datasets. The most familiar and straightforward approach is to train a network in one modality (say, video) and use it to supervise the training of a second network in the other modality. This technique is known as ‘teacher-student supervision’. A well-known example uses a network trained on the ImageNet dataset as the teacher: it assigns labels to the frames of online videos, and those labels become the training targets for the student network. However, this approach does not address the problem of localising sounds.

Self Supervision

The researchers describe their key idea as follows: “Our core idea is to use a valuable source of information contained in the video itself: the correspondence between visual and audio streams available by virtue of them appearing together at the same time in the same video.” The motivation also comes from the observation that infants learn from similar data and experiences. The researchers start with a simple binary classification task known as audio-visual correspondence (AVC): given an example video frame and an audio sample, the network must decide whether the two correspond to each other or not. Because no labels are needed beyond which frame and sound appeared together, the supervision comes from the videos themselves. A network can only succeed at this task if it learns to detect fine-grained semantic concepts in both the visual and the audio streams.
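The way training examples for the AVC task can be built from unlabelled videos can be sketched as follows. This is a minimal illustration, not the researchers' pipeline: videos are represented only by integer ids, and the function name `sample_avc_pairs`, the alternating positive/negative schedule and the `seed` parameter are all assumptions made for the example.

```python
import random


def sample_avc_pairs(num_videos, num_pairs, seed=0):
    """Return (frame_video_id, audio_video_id, label) triples.

    label is 1 when the frame and the audio come from the same video
    (a corresponding pair) and 0 when they come from different videos.
    Hypothetical helper: real training would load actual frames and audio.
    """
    if num_videos < 2:
        raise ValueError("need at least two videos to form negative pairs")
    rng = random.Random(seed)
    pairs = []
    for i in range(num_pairs):
        frame_id = rng.randrange(num_videos)
        if i % 2 == 0:
            # Positive pair: audio taken from the same video as the frame.
            audio_id = frame_id
        else:
            # Negative pair: audio taken from a different, random video.
            others = [v for v in range(num_videos) if v != frame_id]
            audio_id = rng.choice(others)
        pairs.append((frame_id, audio_id, int(frame_id == audio_id)))
    return pairs


pairs = sample_avc_pairs(num_videos=5, num_pairs=6)
```

The label is derived purely from whether the frame and the audio share a source video, which is what makes the task self-supervised.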

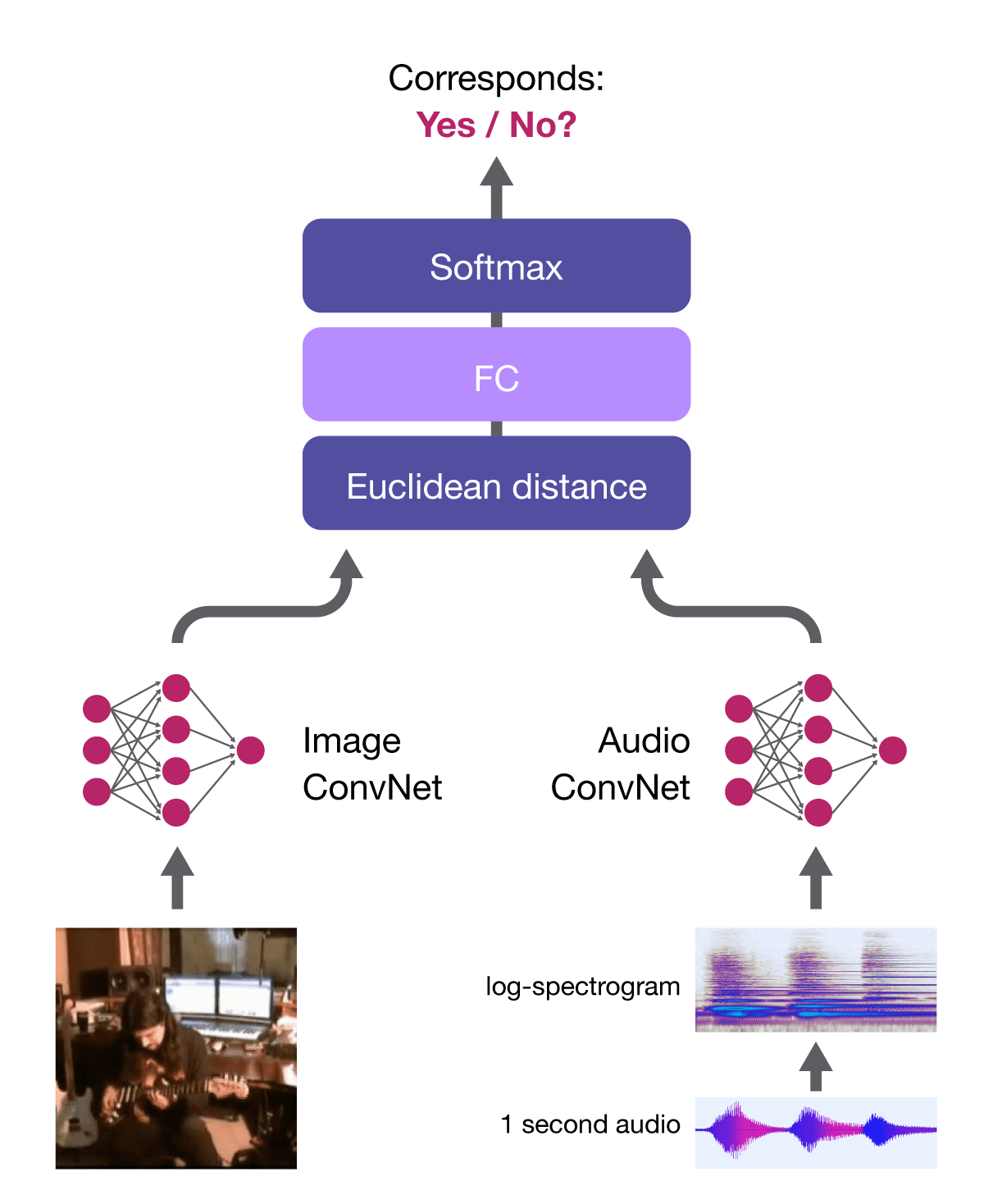

The research team proposes the following neural network architecture:

The architecture has two sub-networks, one that extracts a visual embedding (representation) and one that extracts an audio embedding. A correspondence score is computed from the distance between the two embeddings: the closer they are, the higher the score. This design learns useful semantic representations, and because the score depends only on the distance between the two modalities, the visual and audio embeddings are forced into a shared space. That shared space is also what makes cross-modal retrieval possible, since a query in one modality can be matched against its nearest neighbours in the other.
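The distance-based score and the retrieval it enables can be illustrated with a small sketch. It assumes the embeddings are already computed and given as plain lists of floats. The logistic mapping and its `scale` and `offset` values are hypothetical stand-ins for whatever the trained network learns, and the function names are made up for this example.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length (returned unchanged if it is all zeros)."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)


def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def correspondence_score(visual_emb, audio_emb, scale=4.0, offset=1.0):
    """Map the distance between normalized embeddings to a score in (0, 1).

    A small distance gives a score near 1, a large distance a score near 0.
    scale and offset are assumed values, not taken from the papers.
    """
    d = euclidean_distance(l2_normalize(visual_emb), l2_normalize(audio_emb))
    return 1.0 / (1.0 + math.exp(scale * (d - offset)))


def retrieve(query_emb, candidate_embs):
    """Return the index of the candidate embedding closest to the query."""
    q = l2_normalize(query_emb)
    dists = [euclidean_distance(q, l2_normalize(c)) for c in candidate_embs]
    return min(range(len(candidate_embs)), key=dists.__getitem__)


# Toy 3-dimensional embeddings, invented for illustration.
guitar_frame = [0.9, 0.1, 0.0]
guitar_audio = [0.8, 0.2, 0.1]
drum_audio = [0.0, 0.1, 0.9]

match = correspondence_score(guitar_frame, guitar_audio)
mismatch = correspondence_score(guitar_frame, drum_audio)
best = retrieve(guitar_frame, [drum_audio, guitar_audio])
```

Because both modalities live in the same space, the same `retrieve` function works for image-to-audio and audio-to-image queries.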

Connecting Sounds To Local Objects

AVE-Net does a good job of recognising semantic concepts in the audio and visual domains. However, the researchers point out that the network cannot answer the question, “Where is the object that is making the sound?” To solve this problem, they again use the AVC task, this time to connect a sound to a local object in the image, and again without any labels. Instead of a single embedding for the whole image, they compute correspondence scores between the audio embedding and a grid of region-level image descriptors. The region-level scores are then combined into one image-level correspondence score, an approach known as multiple-instance learning. For a corresponding (image, audio) pair, training encourages at least one region of the image to respond strongly to the sound, which in turn maps the sound to a specific area of the image.
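The region-level scoring described above can be sketched as follows. This is an illustration under stated assumptions rather than the researchers' implementation: similarity is taken to be a dot product, the image-level score is taken to be the maximum over regions (one common way to express "at least one region should respond"), and `localise_sound` is a hypothetical name.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def localise_sound(region_embs, audio_emb):
    """Score every grid region against the audio embedding.

    region_embs is a grid (list of rows) of region embedding vectors.
    Returns (image_level_score, (row, col)), where the image-level score
    is the best region score and (row, col) is where it occurs.
    """
    best_score, best_pos = float("-inf"), None
    for r, row in enumerate(region_embs):
        for c, region in enumerate(row):
            score = dot(region, audio_emb)  # assumed similarity measure
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_score, best_pos


# Toy 2x2 grid of 2-dimensional region embeddings, invented for illustration.
grid = [
    [[0.1, 0.9], [0.2, 0.8]],
    [[0.9, 0.1], [0.3, 0.7]],
]
audio = [1.0, 0.0]

score, position = localise_sound(grid, audio)
```

Only the image-level score needs a training signal (does this image go with this sound?), yet the per-region scores that produced it indicate where in the image the sound is likely coming from.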

Notably, the audio-visual correspondence task is entirely unsupervised. It also gives rise to other useful capabilities, such as cross-modal retrieval and semantic localisation of objects that sound. Along the way, the architecture trained on this task sets new best results on two sound classification benchmarks. The researchers also think that these techniques can be used in reinforcement learning and may prove useful in research beyond audio-visual tasks.

The researchers, Relja Arandjelović and Andrew Zisserman, say that the work is motivated mainly by the audio and video that surround us in daily life, and that combining data from both domains can provide useful hints because the events in them are synchronised in some way. This kind of concurrent training takes video (multiple frames) and a sound as input. The researchers also say that larger datasets can help advance this research and build systems with richer sensory intelligence.