Google's AI subsidiary DeepMind has unveiled DreamerV3, a scalable reinforcement learning (RL) algorithm based on world models that, according to its authors, outperforms previous approaches across a range of domains with fixed hyperparameters. These domains cover continuous and discrete actions, visual and low-dimensional inputs, 2D and 3D worlds, and varying data budgets, reward frequencies, and reward scales.

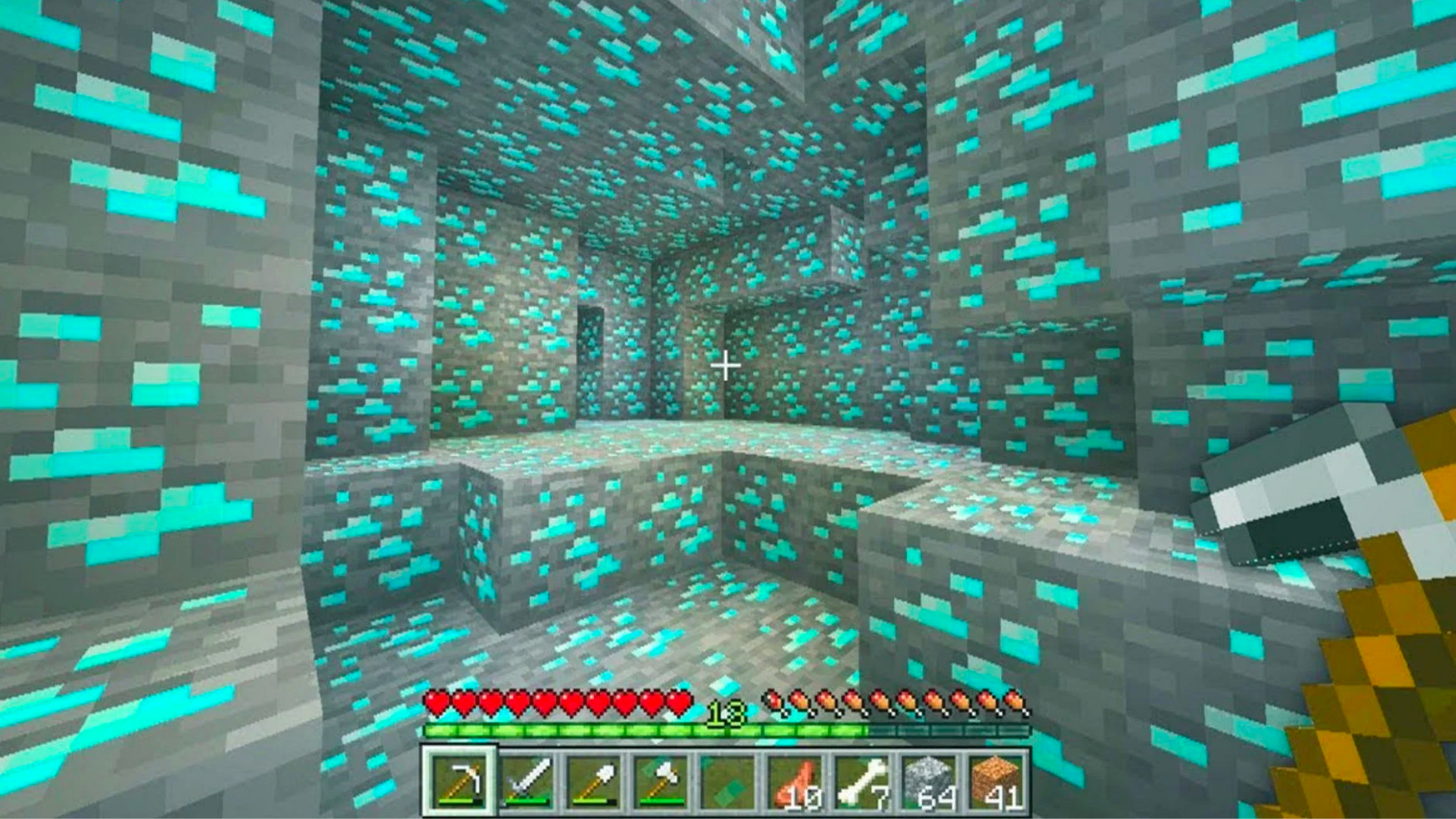

DreamerV3 is the first RL algorithm to solve the long-standing Minecraft diamond challenge without human data or domain-specific heuristics.

Read the full paper here.

Features of DreamerV3

According to DeepMind, DreamerV3 exhibits favourable scaling properties: larger models translate directly into better data efficiency and higher final performance. As a general algorithm, it makes reinforcement learning broadly applicable and can scale to hard decision-making problems.

The DreamerV3 algorithm consists of three neural networks: the world model, the critic, and the actor. They are trained concurrently on replayed experience without sharing gradients. To work across domains, these components must cope with widely varying signal magnitudes and robustly balance the terms in their objectives. The researchers found that the world model can learn without tuning when KL balancing (introduced in DreamerV2) is combined with free bits, and that a fixed policy entropy regularizer can be used if large returns are scaled down without amplifying small returns.
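The two tricks mentioned above can be illustrated with a minimal sketch in plain Python. It is not DeepMind's implementation: the function names are hypothetical, and the specific choices (a free-bits threshold of 1 nat and a return scale taken from the 5th to 95th percentile range) are assumptions made for illustration. The point is only that the KL term is clipped from below, and that returns are divided by a scale that is never smaller than 1, so small returns are left untouched.

```python
import math


def free_bits(kl, free_nats=1.0):
    """Clip a KL value from below (assumed threshold: 1 nat).

    In an autodiff framework, a KL already below the threshold would
    then contribute no gradient to the world model loss.
    """
    return max(kl, free_nats)


def percentile(values, q):
    """Percentile with linear interpolation, q in [0, 100]."""
    if not values:
        raise ValueError("values must not be empty")
    xs = sorted(values)
    pos = (len(xs) - 1) * q / 100.0
    lo, hi = math.floor(pos), math.ceil(pos)
    return xs[lo] + (xs[hi] - xs[lo]) * (pos - lo)


def scale_returns(returns, low=5.0, high=95.0):
    """Scale down large returns without amplifying small ones.

    The divisor is the spread between two percentiles (an assumption),
    floored at 1 so that small returns keep their original magnitude.
    """
    spread = percentile(returns, high) - percentile(returns, low)
    scale = max(1.0, spread)
    return [r / scale for r in returns]


if __name__ == "__main__":
    print(free_bits(0.3), free_bits(2.5))
    print(scale_returns([0.0, 0.1, 0.2]))       # small returns: unchanged
    print(scale_returns([0.0, 500.0, 1000.0]))  # large returns: shrunk
```

Because the divisor is floored at 1, a sparse-reward task with tiny returns is not blown up into noise, while a task with returns in the thousands is brought down to roughly unit scale. That is what lets a single entropy regularizer weight be reused across tasks.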

DeepMind addressed varying signal magnitudes and instabilities in each of the algorithm's components. DreamerV3 succeeds on seven benchmarks and sets a new state of the art for continuous control from states and images, on BSuite, and on Crafter.

DreamerV3 outperforms IMPALA on DMLab tasks while using 130 times fewer interactions, and it is the first algorithm to obtain diamonds in Minecraft end-to-end from sparse rewards. It trains successfully in 3D environments that require spatial and temporal reasoning, and both its final performance and its data efficiency increase monotonically with model size.

Limitations of DreamerV3

DreamerV3 learns to collect diamonds in Minecraft only occasionally within the first 100 million environment steps, rather than in every episode. Human experts, by contrast, can usually collect diamonds in every scenario, even though some procedurally generated worlds are harder than others.

Additionally, the researchers increased the speed at which blocks break so that Minecraft could be learned with a stochastic policy, a problem that earlier research addressed through inductive biases. Larger-scale implementations will be needed to show how far DreamerV3's scaling properties extend. Finally, a separate agent was trained for each task in this work.

World models offer the potential for substantial transfer between tasks. A promising avenue for future research is therefore to train larger models that solve many tasks across overlapping domains.

DreamerV2 Vs DreamerV3

In 2021, DeepMind, Google Brain and the University of Toronto released DreamerV2, a new reinforcement learning agent. This agent learns its behaviour purely from the predictions of a powerful world model in a compact latent space. The researchers claim that DreamerV2 is the first world-model-based agent to reach human-level performance on the Atari benchmark.

DreamerV3 uses a network architecture similar to that of DreamerV2 but adds layer normalization and uses SiLU as the activation function. It computes returns with the fast critic network instead of the slow critic. DreamerV3's hyperparameters were tuned to work well on both Atari 200M and the visual control suite at the same time. The replay buffer in DreamerV2 only replays time steps from completed episodes, whereas DreamerV3 samples uniformly from all inserted subsequences of size batch length, which shortens the feedback loop.
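The replay change can be sketched as follows. This is a simplified illustration rather than the actual DreamerV3 buffer: the class name `UniformSequenceReplay`, the flat list storage, and the `is_first` flag on each step are all assumptions. What it shows is that every inserted step becomes eligible for sampling immediately, without waiting for the episode to end.

```python
import random


class UniformSequenceReplay:
    """Hypothetical buffer that samples fixed-length subsequences uniformly."""

    def __init__(self, length, seed=0):
        self.length = length          # subsequence size ("batch length")
        self.steps = []               # flat stream of steps in insertion order
        self.rng = random.Random(seed)

    def add(self, step):
        # Steps are usable as soon as they are inserted,
        # even if their episode is still running.
        self.steps.append(step)

    def sample(self):
        if len(self.steps) < self.length:
            raise ValueError("not enough steps inserted yet")
        # Every valid start index is equally likely.
        start = self.rng.randrange(len(self.steps) - self.length + 1)
        return self.steps[start:start + self.length]


if __name__ == "__main__":
    buffer = UniformSequenceReplay(length=4)
    for t in range(10):
        # 'is_first' marks episode starts so a model could reset its state
        # when a sampled subsequence crosses an episode boundary (assumed).
        buffer.add({"t": t, "is_first": t == 0})
    print([step["t"] for step in buffer.sample()])
```

In this sketch a subsequence may span two episodes, which is why each step carries an `is_first` marker. A buffer that waits for completed episodes would instead delay the newest experience until the episode terminates.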