Image synthesis is generally performed by deep generative models such as GANs, VAEs, and autoregressive models. Generative adversarial networks (GANs) have received much research attention in the last few years because of the quality of the output they produce. Diffusion models are another area of research that has gained ground. Both have found wide use in image, video, and voice generation. Naturally, this has led to an ongoing debate over which produces better results: diffusion models or GANs.

Has anyone tried diffusion-based models, yet? Heard that they produce better results than GAN (e.g,. https://t.co/aSNmE8tyUX is quite convincing) and heard they are easier to train. True? People also say they are pretty slow to sample from. Anyone any experience with these?

— Sebastian Raschka (@rasbt) January 30, 2022

A GAN is an algorithmic architecture that sets two neural networks against each other to generate new, synthesised instances of data that can pass for real data. Diffusion models have become increasingly popular because they offer training stability as well as high-quality results in image and audio generation.

How does a diffusion model work?

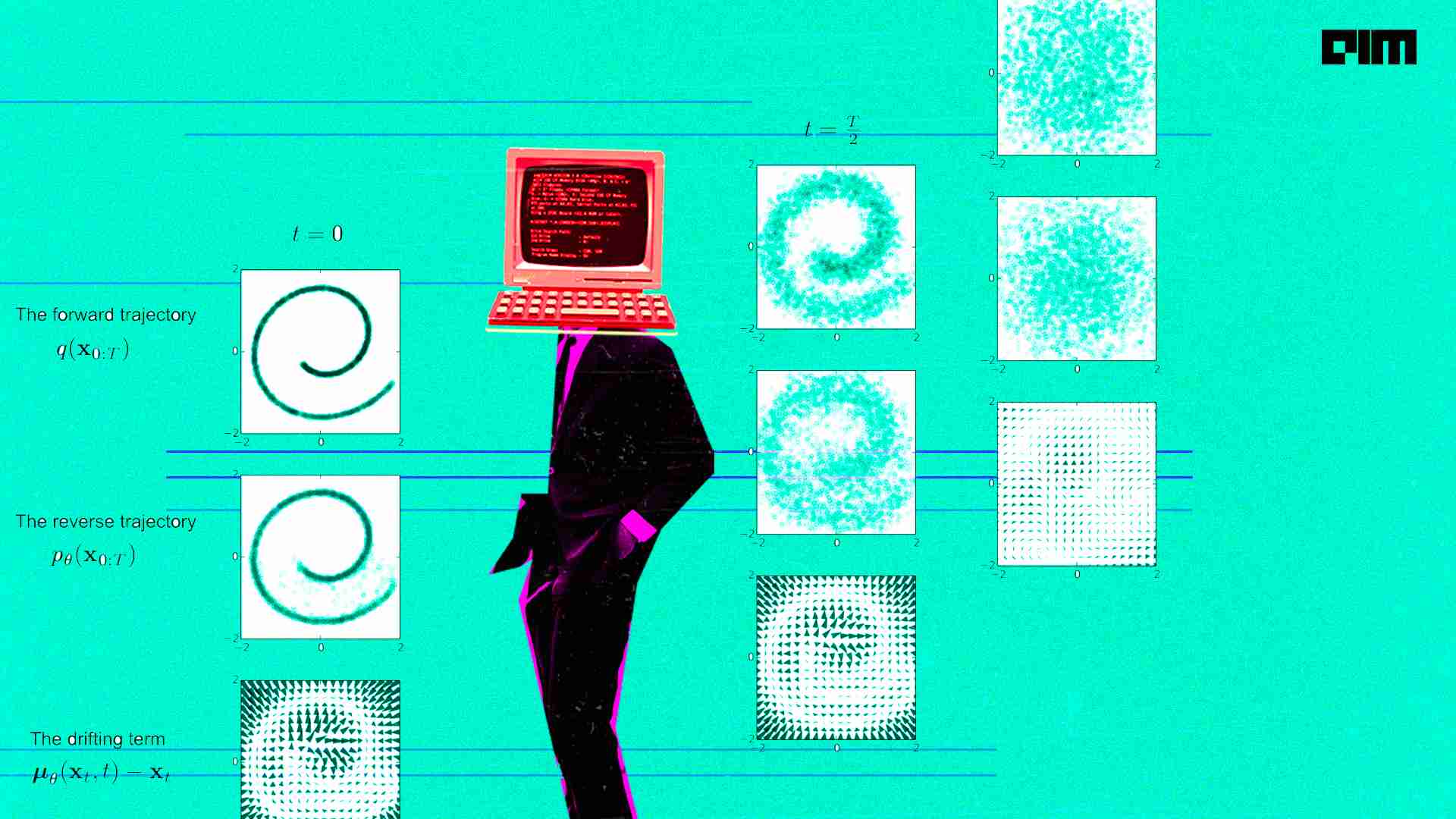

Google explains that diffusion models work by corrupting the training data, progressively adding Gaussian noise. This removes detail from the data until it becomes pure noise. A neural network is then trained to reverse the corruption process. “Running this reversed corruption process synthesises data from pure noise by gradually denoising it until a clean sample is produced,” adds Google.
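The forward (corruption) half of this process can be sketched in a few lines of NumPy. This is a minimal illustration, not Google's implementation: it assumes the closed-form noising step and linear noise schedule popularised by denoising diffusion probabilistic models, and the names `linear_beta_schedule` and `forward_diffuse` are hypothetical. The learned reverse process, which needs a trained network, is left out.

```python
import numpy as np

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    # Per-step noise variances; the start/end values are assumed defaults.
    return np.linspace(beta_start, beta_end, num_steps)

def forward_diffuse(x0, t, alpha_bar, rng):
    """Sample x_t given clean data x0 in one shot:
    x_t = sqrt(alpha_bar[t]) * x0 + sqrt(1 - alpha_bar[t]) * noise."""
    noise = rng.standard_normal(x0.shape)
    x_t = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * noise
    return x_t, noise

rng = np.random.default_rng(0)
betas = linear_beta_schedule(1000)
# Cumulative product of (1 - beta): how much of the original signal survives.
alpha_bar = np.cumprod(1.0 - betas)

x0 = rng.uniform(-1.0, 1.0, size=(8, 8))  # stand-in for a tiny image
for t in (0, 499, 999):
    x_t, _ = forward_diffuse(x0, t, alpha_bar, rng)
    print(f"step {t}: remaining signal scale = {np.sqrt(alpha_bar[t]):.4f}")
```

As `t` grows, the share of the original signal shrinks towards zero and the sample approaches pure Gaussian noise, which is the starting point for generation.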

GAN architecture

GANs have two parts:

- Generator: It learns to generate plausible data.

- Discriminator: The discriminator decides whether or not each instance of data that it reviews belongs to the actual training dataset. It also penalises the generator for producing implausible results.

Both the generator and the discriminator are neural networks. The generator output is connected directly to the discriminator input. Through backpropagation, the discriminator’s classification provides a signal that the generator uses to update its weights.

Image: Google

When generator training goes well, the discriminator gets worse at telling real data from fake data, so its classification accuracy drops.
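The interplay between the two networks can be made concrete with the loss each one minimises. The sketch below is an illustration under assumptions: it uses the standard minimax formulation, takes raw discriminator scores (logits) as given rather than training real networks, and all function names are hypothetical. It also shows the discriminator's accuracy falling to chance when real and generated samples receive the same scores.

```python
import numpy as np

def softplus(x):
    # Numerically stable log(1 + exp(x)).
    return np.logaddexp(0.0, x)

def discriminator_loss(real_logits, fake_logits):
    # Binary cross-entropy: real samples are labelled 1, generated samples 0.
    # -log(sigmoid(a)) = softplus(-a) and -log(1 - sigmoid(a)) = softplus(a).
    return float(np.mean(softplus(-real_logits)) + np.mean(softplus(fake_logits)))

def generator_loss(fake_logits):
    # Minimax generator objective: minimise log(1 - D(G(z))) = -softplus(a).
    return float(np.mean(-softplus(fake_logits)))

def discriminator_accuracy(real_logits, fake_logits):
    # A positive logit means the discriminator says "real".
    correct_real = np.sum(real_logits > 0)
    correct_fake = np.sum(fake_logits <= 0)
    return float((correct_real + correct_fake) / (real_logits.size + fake_logits.size))

# A discriminator that separates the two sets confidently.
confident_real = np.array([4.0, 3.0, 5.0])
confident_fake = np.array([-4.0, -3.0, -5.0])

# A fooled discriminator: generated samples score just like real ones.
fooled_real = np.array([0.1, -0.1, 0.2, -0.2])
fooled_fake = np.array([0.1, -0.1, 0.2, -0.2])

for name, real, fake in [("confident", confident_real, confident_fake),
                         ("fooled", fooled_real, fooled_fake)]:
    print(name,
          "D loss:", round(discriminator_loss(real, fake), 3),
          "G loss:", round(generator_loss(fake), 3),
          "D accuracy:", discriminator_accuracy(real, fake))
```

In a real GAN, the gradients of these losses flow back through the discriminator into the generator, which is the signal described above.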

Some common issues with GANs

Although GANs underpin a large share of image synthesis models, they come with some disadvantages that researchers are actively working on. Some of these, as pointed out by Google, are:

- Vanishing gradients: If the discriminator is too good, the generator training can fail due to the issue of vanishing gradients.

- Mode collapse: If a generator produces an especially plausible output, it can learn to produce only that output. If this happens, the discriminator’s best strategy is to learn to always reject that output. Google adds, “But if the next generation of discriminator gets stuck in a local minimum and doesn’t find the best strategy, then it’s too easy for the next generator iteration to find the most plausible output for the current discriminator.”

- Failure to converge: GANs frequently fail to converge.
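The vanishing-gradient problem in the first bullet can be seen directly in the generator's loss. The following sketch assumes the original minimax generator loss, log(1 - D(G(z))), written in terms of the discriminator's logit for a generated sample. The non-saturating alternative shown next to it is the modification proposed in the original GAN paper, included here only for comparison. Function names are hypothetical.

```python
import numpy as np

def sigmoid(a):
    return 1.0 / (1.0 + np.exp(-a))

def minimax_grad(fake_logit):
    # d/da log(1 - sigmoid(a)) = -sigmoid(a)
    return -sigmoid(fake_logit)

def non_saturating_grad(fake_logit):
    # d/da [-log(sigmoid(a))] = sigmoid(a) - 1
    return sigmoid(fake_logit) - 1.0

# More negative logits mean the discriminator rejects fakes more confidently.
for logit in (0.0, -5.0, -10.0):
    print(f"logit {logit:6.1f}: "
          f"minimax grad = {minimax_grad(logit):.6f}, "
          f"non-saturating grad = {non_saturating_grad(logit):.6f}")
```

With a discriminator that confidently rejects generated samples (a very negative logit), the minimax gradient shrinks towards zero, while the non-saturating variant keeps a gradient of magnitude close to one.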

Diffusion models to the rescue

OpenAI

A paper titled ‘Diffusion Models Beat GANs on Image Synthesis’ by OpenAI researchers has shown that diffusion models can achieve image sample quality superior to that of the current state-of-the-art generative models, though they come with some limitations.

The paper said that the team could achieve this on unconditional image synthesis by finding a better architecture through a series of ablations. For conditional image synthesis, the team improved sample quality with classifier guidance.
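Classifier guidance can be sketched as a shift of each denoising step's mean in the direction that makes a classifier more confident about the target class. The snippet below is an illustration, not the paper's code: it assumes a diagonal covariance, evaluates the gradient at the step mean for simplicity, and replaces the trained noisy-image classifier with a hypothetical toy classifier whose class scores depend on distance to fixed class centres. The names `toy_classifier_grad` and `guided_mean` are made up for this example.

```python
import numpy as np

def toy_classifier_grad(x, centers, target):
    """Gradient of log p(target | x) for a toy softmax classifier with
    logits_k = -0.5 * ||x - centers[k]||^2 (a stand-in assumption)."""
    logits = -0.5 * np.sum((x - centers) ** 2, axis=1)
    probs = np.exp(logits - logits.max())
    probs /= probs.sum()
    # d/dx log softmax_target = centers[target] - sum_k probs[k] * centers[k]
    return centers[target] - probs @ centers

def guided_mean(mean, variance, grad, scale):
    # Shift the unguided step mean by scale * variance * gradient (elementwise).
    return mean + scale * variance * grad

centers = np.array([[2.0, 0.0], [-2.0, 0.0]])  # two hypothetical classes
mean = np.zeros(2)             # unguided mean proposed by the diffusion model
variance = np.full(2, 0.1)     # diagonal step variance
grad = toy_classifier_grad(mean, centers, target=0)

for scale in (0.0, 1.0, 5.0):
    print(f"scale {scale}: guided mean = {guided_mean(mean, variance, grad, scale)}")
```

A scale of zero leaves the step unchanged, and a larger scale pulls samples harder towards the target class, at some cost in diversity.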

The team also hypothesised that the gap between diffusion models and GANs stems from at least two factors:

“The model architectures used by recent GAN literature have been heavily explored. GANs are able to trade off diversity for fidelity, producing high-quality samples but not covering the whole distribution,” the paper added.

Google AI

Last year, Google AI introduced two connected approaches, Super-Resolution via Repeated Refinements (SR3) and Cascaded Diffusion Models (CDM), to improve the image synthesis quality of diffusion models. The team said that by scaling up diffusion models and using carefully selected data augmentation techniques, they could outperform existing approaches. SR3 attained strong image super-resolution results that surpassed GANs in human evaluations, while CDM generated high-fidelity ImageNet samples that surpassed BigGAN-deep and VQ-VAE2 on both FID score and Classification Accuracy Score by a large margin.

DiffWave

DiffWave is a diffusion probabilistic model for conditional and unconditional waveform generation. The paper said that DiffWave produces high-fidelity audio across different waveform generation tasks, including neural vocoding conditioned on mel spectrograms, class-conditional generation, and unconditional generation. Results showed that it significantly outperforms autoregressive and GAN-based waveform models on the unconditional generation task in terms of audio quality and sample diversity, according to various automatic and human evaluations.