Current reinforcement learning (RL) benchmarks fall short in many ways when it comes to capturing real-world data. Testing RL algorithms in low-complexity simulation environments tends to produce algorithms that are overly specific to those environments.

Families of benchmark RL domains should contain some of the complexity of the natural world while still supporting fast and extensive data acquisition. The domains should also permit fair train/test separation and easy replication and comparison of results.

Results showing that RL algorithms adapt poorly to a problem suggest that either the algorithm itself is inefficient or the simulators are not diverse enough to induce interesting learned behaviours. Very few researchers concentrate on the latter.

What we need is a new class of reinforcement learning simulators that incorporate signals or data from the real world as part of the state space.

There are two main advantages of using a real-world signal:

- It gives the task more meaningful characteristics.

- It allows a fair train/test separation.
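The idea of placing a real-world signal in the state space, and of keeping train and test data apart, can be sketched roughly as follows. This is a minimal illustration, not an existing library: `SignalEnv` and `split_signals` are hypothetical names, and the short lists of numbers stand in for recorded real-world signals. The agent's task here (guessing whether the next value rises) is also an assumption chosen only to keep the example small.

```python
import random


class SignalEnv:
    """Toy environment whose state is a sliding window over an external signal.

    Hypothetical illustration: `signal` stands in for recorded real-world data.
    """

    def __init__(self, signal, window=3):
        if len(signal) <= window:
            raise ValueError("signal must be longer than the window")
        self.signal = list(signal)
        self.window = window
        self.t = 0

    def reset(self):
        self.t = 0
        return self._state()

    def _state(self):
        return tuple(self.signal[self.t:self.t + self.window])

    def step(self, action):
        """action 1 predicts the next value rises, action 0 predicts it does not."""
        last = self.signal[self.t + self.window - 1]
        nxt = self.signal[self.t + self.window]
        rose = 1 if nxt > last else 0
        reward = 1.0 if action == rose else 0.0
        self.t += 1
        done = self.t + self.window >= len(self.signal)
        return self._state(), reward, done


def split_signals(signals, test_fraction=0.25, seed=0):
    """Shuffle whole signals and split them so train and test share no data."""
    rng = random.Random(seed)
    shuffled = list(signals)
    rng.shuffle(shuffled)
    n_test = max(1, int(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]
```

Because whole signals are assigned to either the training or the test set, an agent evaluated on the test signals has never seen those values during training.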

Traditional evaluation with real-world data might require a shared robot infrastructure, an animal model, or an actual plant ecosystem. The proposed system, by contrast, requires only a computer and an Internet connection. It also offers domains that are easy to install, fast acquisition of large quantities of data, and fair evaluations and comparisons.

Why do we need a better Simulation for RL?

The Arcade Learning Environment (ALE) is one of the most widely used simulators for RL benchmarking.

By definition, ALE is a simple object-oriented framework that allows researchers and hobbyists to develop AI agents for Atari 2600 games. It is built on top of the Atari 2600 emulator Stella.

The original source code of the Atari games tested in ALE is very small, in fact less than 100 KB. Even MuJoCo, a physics engine that simulates basic physical dynamics of the real world, has a code volume of only 1 MB.

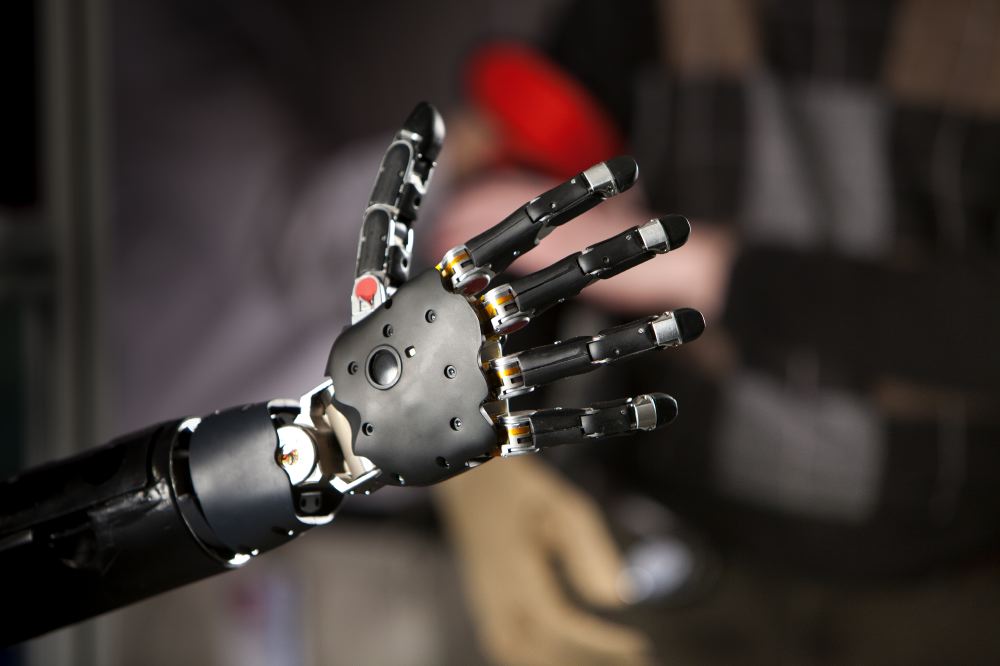

Let’s now compare this with a robot that has to operate in the real world. All of its inputs depend on the robot's sensors and their resolution.

For example, a standard Bumblebee stereo vision camera generates over 10 MB per second.

That by itself is a huge volume, and the robot also operates in a world containing zettabytes of human-made information. Clearly, that is too much for the present generation of simulators.
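To put the figures quoted above side by side, here is a rough back-of-the-envelope calculation. It assumes decimal units (1 MB = 1000 KB) and treats the quoted numbers as round values, so the results are only indicative.

```python
# Figures quoted in the text; decimal units (1 MB = 1000 KB) are an assumption.
ATARI_SOURCE_KB = 100          # upper bound quoted for an Atari game's source
MUJOCO_CODE_KB = 1_000         # about 1 MB
CAMERA_KB_PER_SECOND = 10_000  # about 10 MB per second (a lower bound)


def seconds_to_exceed(size_kb, rate_kb_per_s=CAMERA_KB_PER_SECOND):
    """Time the camera needs to produce `size_kb` of data."""
    return size_kb / rate_kb_per_s


atari_seconds = seconds_to_exceed(ATARI_SOURCE_KB)
mujoco_seconds = seconds_to_exceed(MUJOCO_CODE_KB)
camera_gb_per_hour = CAMERA_KB_PER_SECOND * 3600 / 1_000_000

print(f"Camera output exceeds an Atari game's source in {atari_seconds:.2f} s")
print(f"Camera output exceeds MuJoCo's code volume in {mujoco_seconds:.1f} s")
print(f"Camera output per hour: about {camera_gb_per_hour:.0f} GB")
```

Under these assumptions, a single camera produces more data in a fraction of a second than the entire code behind the simulated environments.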

Thus we require benchmark RL domains that

- contain some of the complexity of the natural world

- support fast and plentiful data acquisition

- allow fair train/test separation

- enable easy replication and comparison

Different Methods In RL

In reinforcement learning, an agent interacts with an environment that is modelled as a Markov Decision Process (MDP). RL methods fall into three broad groups:

- Value-based methods aim to learn the value function of each state or state-action pair under the optimal policy.

- Policy-based methods directly learn the policy as a parameterized function rather than learning a value function explicitly.

- Actor-critic methods are hybrids of value-based and policy-based methods that learn both the policy (actor) and the value function (critic).
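As a concrete instance of the value-based family, here is a minimal sketch of tabular Q-learning on a tiny MDP. The four-state chain, its reward, and the hyperparameters are all hypothetical choices made for illustration; they do not come from any benchmark discussed here.

```python
import random

# Hypothetical 4-state chain: states 0..3, reaching state 3 ends the episode.
N_STATES = 4
ACTIONS = (0, 1)  # 0 = move left, 1 = move right


def step(state, action):
    """Deterministic transition; reward 1.0 only on reaching the last state."""
    next_state = max(0, state - 1) if action == 0 else state + 1
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def q_learning(episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Learn a state-action value table with epsilon-greedy exploration."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                action = rng.choice(ACTIONS)
            else:
                action = max(ACTIONS, key=lambda a: q[state][a])
            next_state, reward, done = step(state, action)
            # Bootstrapped target: reward plus discounted best next value.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][action] += alpha * (target - q[state][action])
            state = next_state
    return q


def greedy_policy(q):
    """Best action in each non-terminal state according to the table."""
    return [max(ACTIONS, key=lambda a: q[s][a]) for s in range(N_STATES - 1)]
```

The policy is never represented directly: it is read off the learned values by `greedy_policy`. A policy-based method would instead adjust the parameters of the policy itself, and an actor-critic method would maintain both.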

Popular RL Algorithms

Advantage Actor-Critic (A2C): A2C is an actor-critic method with several parallel actors that replaces the value estimate with the advantage of executing an action in a state.
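The advantage mentioned above can be estimated in several ways. A minimal sketch of one common choice, the discounted return minus the critic's value estimate, is shown below. The function names and the `bootstrap_value` parameter are hypothetical, and the critic's values are passed in as plain numbers rather than produced by a network.

```python
def discounted_returns(rewards, gamma=0.99, bootstrap_value=0.0):
    """Discounted return from each time step to the end of the rollout.

    `bootstrap_value` is the critic's estimate for the state after the last
    step (0.0 if the episode has ended).
    """
    returns = []
    running = bootstrap_value
    for reward in reversed(rewards):
        running = reward + gamma * running
        returns.append(running)
    returns.reverse()
    return returns


def advantages(rewards, values, gamma=0.99, bootstrap_value=0.0):
    """Advantage estimate per step: discounted return minus critic value."""
    returns = discounted_returns(rewards, gamma, bootstrap_value)
    return [g - v for g, v in zip(returns, values)]
```

A positive advantage means the action turned out better than the critic expected, so the actor's update makes that action more likely; a negative advantage does the opposite.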

Actor-Critic using Kronecker-Factored Trust-Region (ACKTR): ACKTR combines trust-region optimization and Kronecker-factored approximation with actor-critic. The Kronecker-factored approximation reduces the cost of computing the inverse of the Fisher information matrix.
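The reason a Kronecker factorization makes inversion cheaper is the identity (A ⊗ B)⁻¹ = A⁻¹ ⊗ B⁻¹: a large matrix that factors this way can be inverted by inverting its two small factors. The sketch below checks the identity on 2×2 matrices in plain Python. It illustrates only this identity, under the assumption that the large matrix factors exactly; it is not an implementation of ACKTR, and the helper names are hypothetical.

```python
def kron(a, b):
    """Kronecker product of two matrices given as lists of rows."""
    return [[x * y for x in row_a for y in row_b] for row_a in a for row_b in b]


def inv2(m):
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]


def matmul(a, b):
    """Plain matrix product of two lists-of-rows matrices."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


A = [[2.0, 0.0], [0.0, 4.0]]
B = [[1.0, 2.0], [0.0, 1.0]]

# Invert the 4x4 matrix kron(A, B) using only two 2x2 inverses.
big_inverse = kron(inv2(A), inv2(B))
product = matmul(big_inverse, kron(A, B))  # should be the 4x4 identity
```

For factors of size n×n and m×m, this replaces one inversion of an nm×nm matrix with one n×n and one m×m inversion.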

Proximal Policy Optimization (PPO): PPO is a family of policy gradient methods that approximate trust-region optimization and run multiple epochs of stochastic gradient ascent for each policy update. PPO constrains each update to stay close to the previous policy, either by clipping the objective or by adding a penalty.
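One widely used variant of PPO keeps the update close to the previous policy by clipping the probability ratio between the new and old policies. A minimal per-sample sketch of that clipped surrogate objective follows. The function name is hypothetical, log-probabilities are passed in as plain floats, and `clip_eps=0.2` is just a commonly chosen illustrative value.

```python
import math


def ppo_clip_objective(new_logp, old_logp, advantage, clip_eps=0.2):
    """Clipped surrogate objective for one (state, action) sample.

    new_logp / old_logp: log-probability of the action under the new and
    previous policies. The objective is to be maximised.
    """
    ratio = math.exp(new_logp - old_logp)
    clipped_ratio = max(1.0 - clip_eps, min(ratio, 1.0 + clip_eps))
    # Taking the minimum removes any incentive to move the ratio
    # outside [1 - clip_eps, 1 + clip_eps] in the direction that helps.
    return min(ratio * advantage, clipped_ratio * advantage)
```

With a positive advantage, the objective stops growing once the ratio exceeds `1 + clip_eps`; with a negative advantage, the unclipped (more pessimistic) term is kept, so a harmful change is still fully penalised.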

Deep Q-Network (DQN): DQN modifies Q-learning to use a deep neural network, with parameters θ, to model the state-action value function.
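The Q-learning part of DQN can be sketched without any neural network code: given the network's Q-value outputs for a batch of next states, the regression targets are computed as below. This is a simplified sketch under stated assumptions. The function names are hypothetical, the Q-values are passed in as plain lists, and details of practical DQN implementations such as experience replay and a separate target network are left out.

```python
def td_targets(rewards, next_q_values, dones, gamma=0.99):
    """Q-learning targets: r + gamma * max_a Q(s', a), or just r at episode end.

    next_q_values holds, for each transition, the list of Q-values the
    network predicts for every action in the next state.
    """
    targets = []
    for reward, q_next, done in zip(rewards, next_q_values, dones):
        bootstrap = 0.0 if done else gamma * max(q_next)
        targets.append(reward + bootstrap)
    return targets


def mean_squared_td_error(q_taken, targets):
    """Loss the network would minimise: mean squared gap to the targets.

    q_taken holds the predicted Q-value of the action actually taken.
    """
    return sum((t - q) ** 2 for q, t in zip(q_taken, targets)) / len(targets)
```

In a full implementation, the gradient of this loss with respect to the network parameters drives the update, in place of the table update used by tabular Q-learning.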

Conclusion

In a generation that depends so much on artificial intelligence to solve real-world problems, it is very important to train and test algorithms with real-world data. This might seem impractical for reinforcement learning, whose applications are mainly rooted in areas like robotics, but it is just as important here as for any other machine learning algorithm that uses real-world data. Testing is a process that cannot be compromised in any domain. The simulation environments that test algorithms are no different, and they have to meet the same standard.