|

Listen to this story

|

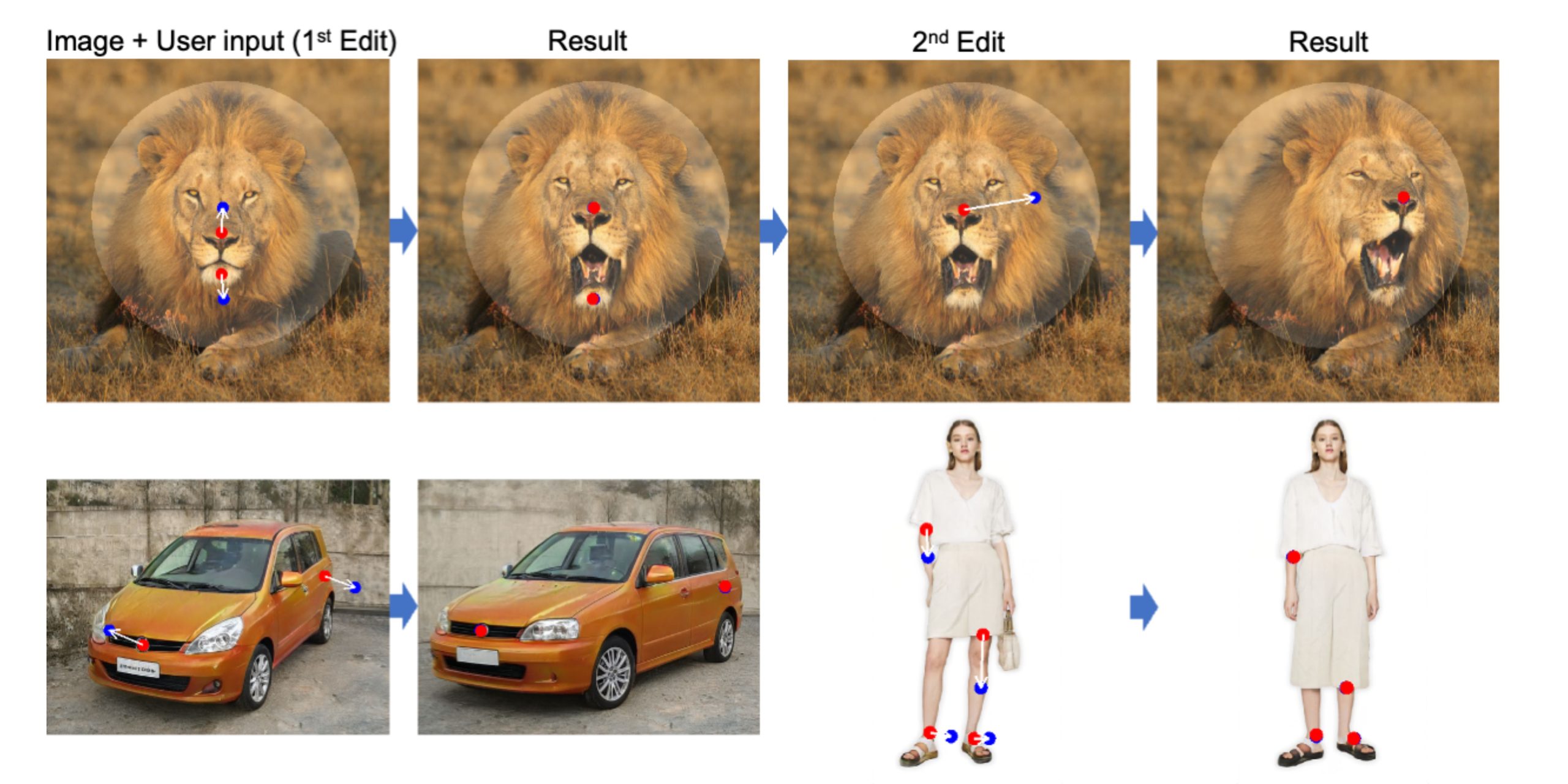

A group of researchers from Google, alongside the Max Planck Institute of Informatics and MIT CSAIL, recently released DragGAN, an interactive approach for intuitive point-based image editing. This new method leverages a pre-trained GAN to synthesise images that not only precisely follow user input, but also stay on the manifold of realistic images.

In comparison to many previous approaches, the researchers have presented a general framework by not relying on domain-specific modelling or auxiliary networks. In order to achieve this, they used an optimisation of latent codes that incrementally moves multiple handle points towards their target locations, alongside a point tracking procedure to faithfully trace the trajectory of the handle points.

Both components use the discriminative quality of intermediate feature maps of the GAN to yield pixel-precise image deformations and interactive performance. The researchers claimed that their approach outperforms the SOTA in GAN-based manipulation and opens new directions for powerful image editing using generative priors. In the coming months, they look to extend point-based editing to 3D generative models.

GAN vs Diffusion Models

This new technique shows that GAN models are more impactful than pretty pictures generated from diffusion models – namely used in tools like DALLE.2, Stable Diffusion, and Midjourney.

While there are obvious reasons why diffusion models are gaining popularity for image synthesis, general adversarial networks (GANs) saw the same popularity, sparked interest and were revived in 2017, three years after they were proposed by Ian Goodfellow.

GAN uses two neural networks—generator and discriminator—set against each other to generate new and synthesised instances of data, whereas diffusion models are likelihood-based models that offer more stability along with greater quality on image generation tasks. Read: GANs in The Age of Diffusion Models